This article walks through examples of gradient descent and backpropagation in PyTorch. Let's take a look.

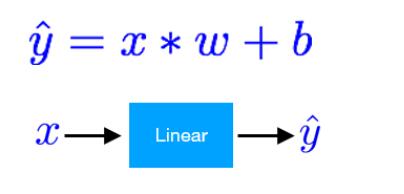

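Linear models

Introduction to linear models

Linear models are a very common class of machine learning models that fit the training data with a linear formula. The training set consists of pairs (x, y), where x is the feature and y is the target, as shown in the figure below: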

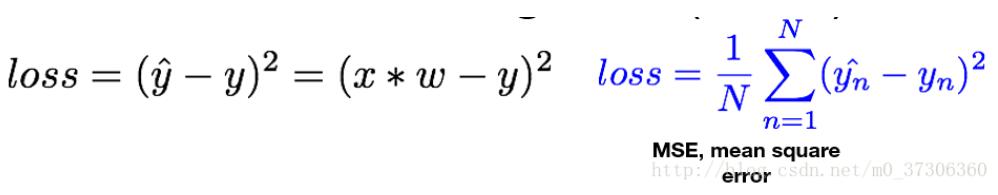

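The true values and the predicted values are used to build a loss function, and training aims to minimize this function by updating w. When the loss reaches its minimum (ideally; in practice, training may get stuck in a local optimum), the model is optimal. A common loss function for linear models is the mean squared error (MSE), which the example below uses.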
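For the single-weight model used in the example that follows, the prediction, the per-sample loss, and the mean squared error can be written out as below. This is a sketch inferred from the example code; the symbol N for the number of training samples is an assumption introduced here.

```latex
\hat{y} = x \cdot w, \qquad
\text{loss}(w) = (\hat{y} - y)^2 = (x w - y)^2, \qquad
\text{MSE}(w) = \frac{1}{N} \sum_{n=1}^{N} (x_n w - y_n)^2
```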

線性模型例子

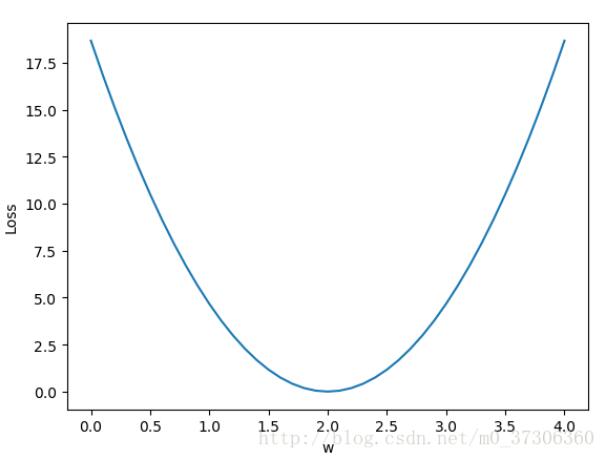

下面通過一個例子可以觀察不同權重(w)對模型損失函數的影響。

    # author: yuquanle
    # date: 2018.2.5
    # Study of Linear Model
    import numpy as np
    import matplotlib.pyplot as plt

    x_data = [1.0, 2.0, 3.0]
    y_data = [2.0, 4.0, 6.0]

    def forward(x):
        # Linear model without bias; w is the global weight set by the loop below
        return x * w

    def loss(x, y):
        # Squared error for a single sample
        y_pred = forward(x)
        return (y_pred - y) * (y_pred - y)

    w_list = []
    mse_list = []

    # Sweep w from 0.0 to 4.0 in steps of 0.1 and record the MSE for each value
    for w in np.arange(0.0, 4.1, 0.1):
        print("w=", w)
        l_sum = 0
        for x_val, y_val in zip(x_data, y_data):
            # squared error of this sample
            l = loss(x_val, y_val)
            l_sum += l
        print("MSE=", l_sum/3)
        w_list.append(w)
        mse_list.append(l_sum/3)

    plt.plot(w_list, mse_list)
    plt.ylabel("Loss")
    plt.xlabel("w")
    plt.show()

Output (first few values of w):

    w= 0.0
    MSE= 18.6666666667
    w= 0.1
    MSE= 16.8466666667
    w= 0.2
    MSE= 15.12
    w= 0.3
    MSE= 13.4866666667
    w= 0.4
    MSE= 11.9466666667
    w= 0.5
    MSE= 10.5
    w= 0.6
    MSE= 9.14666666667

The script also plots how the loss changes as w is adjusted.
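The same sweep can also be written in vectorized form to locate the minimum programmatically. The following is a minimal sketch, assuming NumPy broadcasting in place of the explicit loops; the names `w_grid`, `preds`, `mse`, and `best_w` are illustrative and do not come from the original script.

```python
import numpy as np

x_data = np.array([1.0, 2.0, 3.0])
y_data = np.array([2.0, 4.0, 6.0])

# Candidate weights, same grid as the sweep above
w_grid = np.arange(0.0, 4.1, 0.1)

# Broadcasting: rows are candidate weights, columns are training samples
preds = w_grid[:, None] * x_data[None, :]
mse = ((preds - y_data[None, :]) ** 2).mean(axis=1)

# Pick the candidate weight with the smallest mean squared error
best_w = w_grid[np.argmin(mse)]
print("best w:", best_w, "MSE:", mse.min())
```

Because the data satisfy y = 2x exactly, the candidate closest to 2 should come out as the minimizer, with an MSE of essentially zero.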

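The loss is smallest at w = 2. In practice, however, once we have the dataset and have defined the loss function, we do not sweep w from 0 to n, compute the loss for each value, and then pick the w with the smallest loss as the model parameter. The more common approach is to initialize w randomly, take the gradient of the loss function with respect to w, and use that gradient to update w. This is the idea behind classic gradient descent.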

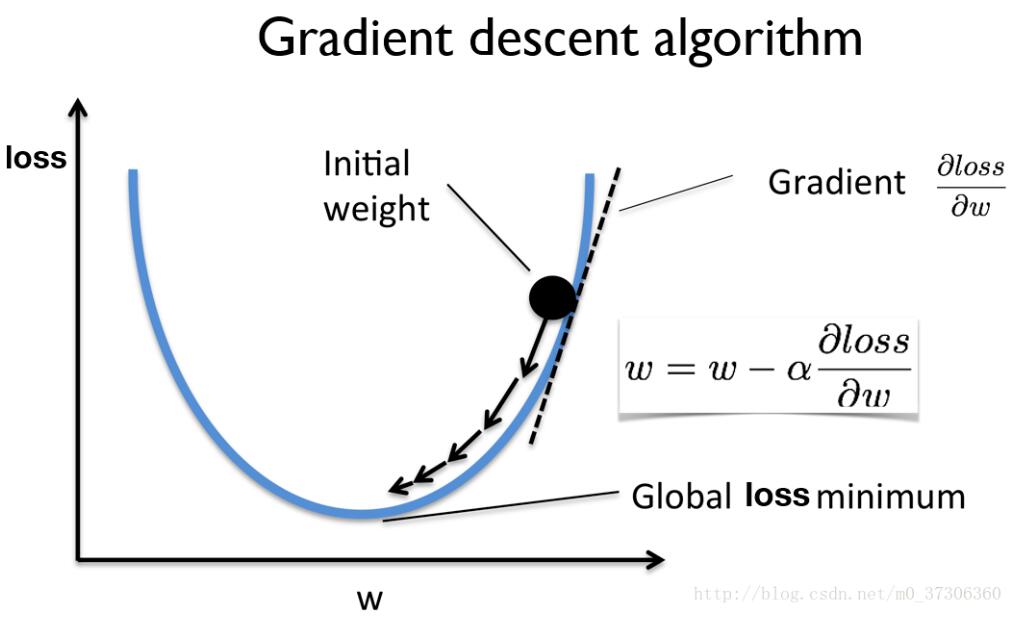

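Gradient descent

The gradient is a vector: the directional derivative of a function at a given point is largest along the gradient's direction. In other words, at that point the function changes fastest along the gradient direction, and the maximum rate of change equals the gradient's magnitude.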
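This property can be checked numerically. The sketch below is illustrative only: the test function f(x, y) = x² + 3y², the point (1, 1), and the 360 sampled directions are all assumptions chosen for the demonstration, not part of the original article. It estimates the directional derivative along many unit directions and compares the steepest one with the analytic gradient.

```python
import numpy as np

def f(p):
    # Hypothetical test function f(x, y) = x^2 + 3*y^2
    return p[0] ** 2 + 3.0 * p[1] ** 2

def grad_f(p):
    # Analytic gradient of f
    return np.array([2.0 * p[0], 6.0 * p[1]])

point = np.array([1.0, 1.0])
g = grad_f(point)
g_unit = g / np.linalg.norm(g)

def directional_derivative(direction, eps=1e-6):
    # Central finite difference along a unit direction
    return (f(point + eps * direction) - f(point - eps * direction)) / (2 * eps)

# Sample unit directions around the circle and find the steepest one
angles = np.linspace(0.0, 2.0 * np.pi, 360, endpoint=False)
directions = np.stack([np.cos(angles), np.sin(angles)], axis=1)
rates = np.array([directional_derivative(d) for d in directions])
steepest = directions[np.argmax(rates)]

print("gradient direction:", g_unit)
print("steepest sampled direction:", steepest)
print("max rate:", rates.max(), "gradient norm:", np.linalg.norm(g))
```

The steepest sampled direction should agree with the normalized gradient up to the one-degree sampling resolution, and the largest rate should be close to the gradient's norm.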

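Gradient descent is an iterative method that can be used to solve least-squares problems, both linear and nonlinear. When solving for the parameters of a machine learning model, which is an unconstrained optimization problem, gradient descent is one of the most commonly used methods; another common one is the least-squares method. To minimize the loss function, gradient descent iterates step by step until it reaches the minimized loss and the corresponding parameter values. Each step updates the parameter w by subtracting its gradient, usually multiplied by a learning rate.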
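For the single-weight model y = x·w with squared-error loss, the gradient and the update step can be worked out by hand. In this sketch, α denotes the learning rate; the symbol is introduced here for illustration, while the example code uses the constant 0.01.

```latex
\frac{\partial}{\partial w} (x w - y)^2 = 2x\,(x w - y), \qquad
w \leftarrow w - \alpha \cdot 2x\,(x w - y)
```

As a worked step, with w = 1.0, α = 0.01 and the first sample (x, y) = (1, 2), the gradient is 2·1·(1·1 − 2) = −2, so w becomes 1.0 − 0.01·(−2) = 1.02. With the second sample (2, 4), the gradient is 2·2·(2·1.02 − 4) = −7.84.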

    # author: yuquanle
    # date: 2018.2.5
    # Study of SGD
    x_data = [1.0, 2.0, 3.0]
    y_data = [2.0, 4.0, 6.0]

    # any random value
    w = 1.0

    # forward pass
    def forward(x):
        return x * w

    def loss(x, y):
        y_pred = forward(x)
        return (y_pred - y) * (y_pred - y)

    # compute gradient (derivative of the loss with respect to w)
    def gradient(x, y):
        return 2 * x * (x * w - y)

    # Before training
    print("predict (before training)", 4, forward(4))

    # Training loop: 10 epochs, matching the output shown below
    for epoch in range(10):
        for x, y in zip(x_data, y_data):
            grad = gradient(x, y)
            # update w after every sample, with a learning rate of 0.01
            w = w - 0.01 * grad
            print("\t grad: ", x, y, grad)
            l = loss(x, y)
        print("progress:", epoch, l)

    # After training
    print("predict (after training)", 4, forward(4))

Output:

    predict (before training) 4 4.0
        grad: 1.0 2.0 -2.0
        grad: 2.0 4.0 -7.84
        grad: 3.0 6.0 -16.2288
    progress: 0 4.919240100095999
        grad: 1.0 2.0 -1.478624
        grad: 2.0 4.0 -5.796206079999999
        grad: 3.0 6.0 -11.998146585599997
    progress: 1 2.688769240265834
        grad: 1.0 2.0 -1.093164466688
        grad: 2.0 4.0 -4.285204709416961
        grad: 3.0 6.0 -8.87037374849311
    progress: 2 1.4696334962911515
        grad: 1.0 2.0 -0.8081896081960389
        grad: 2.0 4.0 -3.1681032641284723
        grad: 3.0 6.0 -6.557973756745939
    progress: 3 0.8032755585999681
        grad: 1.0 2.0 -0.59750427561463
        grad: 2.0 4.0 -2.3422167604093502
        grad: 3.0 6.0 -4.848388694047353
    progress: 4 0.43905614881022015
        grad: 1.0 2.0 -0.44174208101320334
        grad: 2.0 4.0 -1.7316289575717576
        grad: 3.0 6.0 -3.584471942173538
    progress: 5 0.2399802903801062
        grad: 1.0 2.0 -0.3265852213980338
        grad: 2.0 4.0 -1.2802140678802925
        grad: 3.0 6.0 -2.650043120512205
    progress: 6 0.1311689630744999
        grad: 1.0 2.0 -0.241448373202223
        grad: 2.0 4.0 -0.946477622952715
        grad: 3.0 6.0 -1.9592086795121197
    progress: 7 0.07169462478267678
        grad: 1.0 2.0 -0.17850567968888198
        grad: 2.0 4.0 -0.6997422643804168
        grad: 3.0 6.0 -1.4484664872674653
    progress: 8 0.03918700813247573
        grad: 1.0 2.0 -0.13197139106214673
        grad: 2.0 4.0 -0.5173278529636143
        grad: 3.0 6.0 -1.0708686556346834
    progress: 9 0.021418922423117836
    predict (after training) 4 7.804863933862125

Backpropagation

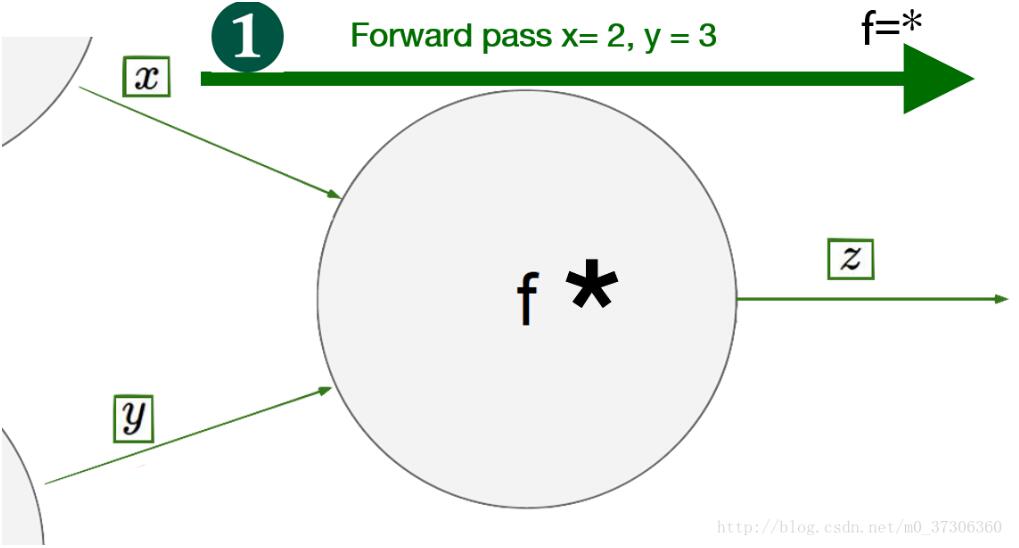

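Once the model is defined, however, the PyTorch framework does not require us to differentiate by hand; backpropagation passes the gradients backward for us. There are usually two passes, forward and backward: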

    # author: yuquanle
    # date: 2018.2.6
    # Study of Backpropagation
    import torch
    from torch.autograd import Variable

    x_data = [1.0, 2.0, 3.0]
    y_data = [2.0, 4.0, 6.0]

    # Any random value
    # Variable is the legacy (pre-0.4) autograd wrapper; the output below comes from that API
    w = Variable(torch.Tensor([1.0]), requires_grad=True)

    # forward pass
    def forward(x):
        return x * w

    # Before training
    print("predict (before training)", 4, forward(4))

    def loss(x, y):
        y_pred = forward(x)
        return (y_pred - y) * (y_pred - y)

    # Training: forward, backward and update weight
    # Training loop
    for epoch in range(10):
        for x, y in zip(x_data, y_data):
            l = loss(x, y)
            # backward pass: autograd computes d(loss)/dw and stores it in w.grad
            l.backward()
            print("\t grad:", x, y, w.grad.data[0])
            w.data = w.data - 0.01 * w.grad.data
            # Manually zero the gradients after running the backward pass and update w
            w.grad.data.zero_()
        print("progress:", epoch, l.data[0])

    # After training
    print("predict (after training)", 4, forward(4))

Output:

    predict (before training) 4 Variable containing:
    4
    [torch.FloatTensor of size 1]
        grad: 1.0 2.0 -2.0
        grad: 2.0 4.0 -7.840000152587891
        grad: 3.0 6.0 -16.228801727294922
    progress: 0 7.315943717956543
        grad: 1.0 2.0 -1.478623867034912
        grad: 2.0 4.0 -5.796205520629883
        grad: 3.0 6.0 -11.998146057128906
    progress: 1 3.9987640380859375
        grad: 1.0 2.0 -1.0931644439697266
        grad: 2.0 4.0 -4.285204887390137
        grad: 3.0 6.0 -8.870372772216797
    progress: 2 2.1856532096862793
        grad: 1.0 2.0 -0.8081896305084229
        grad: 2.0 4.0 -3.1681032180786133
        grad: 3.0 6.0 -6.557973861694336
    progress: 3 1.1946394443511963
        grad: 1.0 2.0 -0.5975041389465332
        grad: 2.0 4.0 -2.3422164916992188
        grad: 3.0 6.0 -4.848389625549316
    progress: 4 0.6529689431190491
        grad: 1.0 2.0 -0.4417421817779541
        grad: 2.0 4.0 -1.7316293716430664
        grad: 3.0 6.0 -3.58447265625
    progress: 5 0.35690122842788696
        grad: 1.0 2.0 -0.3265852928161621
        grad: 2.0 4.0 -1.2802143096923828
        grad: 3.0 6.0 -2.650045394897461
    progress: 6 0.195076122879982
        grad: 1.0 2.0 -0.24144840240478516
        grad: 2.0 4.0 -0.9464778900146484
        grad: 3.0 6.0 -1.9592113494873047
    progress: 7 0.10662525147199631
        grad: 1.0 2.0 -0.17850565910339355
        grad: 2.0 4.0 -0.699742317199707
        grad: 3.0 6.0 -1.4484672546386719
    progress: 8 0.0582793727517128
        grad: 1.0 2.0 -0.1319713592529297
        grad: 2.0 4.0 -0.5173273086547852
        grad: 3.0 6.0 -1.070866584777832
    progress: 9 0.03185431286692619
    predict (after training) 4 Variable containing:
    7.8049
    [torch.FloatTensor of size 1]
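The example above uses the `Variable` wrapper from early PyTorch releases. For readers on a current release, the same training loop might look like the sketch below. It is not the original author's code: it assumes PyTorch 0.4 or later, where tensors carry `requires_grad` directly, and it swaps the `.data` manipulation for `torch.no_grad()` and `.item()`. The name `prediction` is introduced here for illustration.

```python
import torch

x_data = [1.0, 2.0, 3.0]
y_data = [2.0, 4.0, 6.0]

# Same starting weight as the example above
w = torch.tensor([1.0], requires_grad=True)

def forward(x):
    return x * w

def loss(x, y):
    y_pred = forward(x)
    return (y_pred - y) ** 2

for epoch in range(10):
    for x, y in zip(x_data, y_data):
        l = loss(x, y)
        l.backward()              # autograd fills w.grad with d(loss)/dw
        with torch.no_grad():     # the update itself must not be tracked
            w -= 0.01 * w.grad
        w.grad.zero_()            # gradients accumulate, so reset them
    print("progress:", epoch, l.item())

prediction = forward(4).item()
print("predict (after training)", 4, prediction)
```

With the same data, learning rate, and number of epochs, the final prediction should land close to the 7.8049 reported above.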
