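This post is expected to be fairly long, so I will skip the preamble. If you are not familiar with Ganglia, look it up on Google first. Here is the environment: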

hadoop1.updb.com 192.168.0.101

hadoop2.updb.com 192.168.0.102

hadoop3.updb.com 192.168.0.103

hadoop4.updb.com 192.168.0.104

hadoop5.updb.com 192.168.0.105

Operating system: CentOS 6.5 x86_64, using the stock network yum repositories plus the EPEL repository.

Before installing Ganglia, make sure Hadoop and HBase are already installed and working. My installation plan:

hadoop1.updb.com NameNode|HMaster|gmetad|gmond|ganglia-web|nagios

hadoop2.updb.com DataNode|Regionserver|gmond|nrpe

hadoop3.updb.com DataNode|Regionserver|gmond|nrpe

hadoop4.updb.com DataNode|Regionserver|gmond|nrpe

hadoop5.updb.com DataNode|Regionserver|gmond|nrpe

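hadoop1 is the control node for both Ganglia and Nagios. It runs gmetad (the Ganglia server), gmond (the Ganglia client, since the node also monitors itself), the ganglia-web application, and the Nagios server. hadoop2, hadoop3, hadoop4, and hadoop5 are the monitored nodes and run gmond plus NRPE, the Nagios client. NRPE is optional; I install it because I want to monitor MySQL and some other services on hadoop2 through hadoop5.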

1. Install gmetad, gmond, and ganglia-web on hadoop1

First install the packages Ganglia depends on:

[root@hadoop1 ~]# cat ganglia.rpm
apr-devel apr-util check-devel cairo-devel pango-devel libxml2-devel glib2-devel dbus-devel freetype-devel fontconfig-devel gcc-c++ expat-devel python-devel libXrender-devel zlib libart_lgpl libpng dejavu-lgc-sans-mono-fonts dejavu-sans-mono-fonts perl-ExtUtils-CBuilder perl-ExtUtils-MakeMaker
[root@hadoop1 ~]# yum install -y `cat ganglia.rpm`

Besides the dependencies above, two more packages have to be built from source: confuse-2.7.tar.gz and rrdtool-1.4.8.tar.gz.

## Unpack the sources
[root@hadoop1 pub]# tar xf rrdtool-1.4.8.tar.gz -C /opt/soft/
[root@hadoop1 pub]# tar xf confuse-2.7.tar.gz -C /opt/soft/
## Install rrdtool
[root@hadoop1 rrdtool-1.4.8]# ./configure --prefix=/usr/local/rrdtool
[root@hadoop1 rrdtool-1.4.8]# make && make install
[root@hadoop1 rrdtool-1.4.8]# mkdir /usr/local/rrdtool/lib64
[root@hadoop1 rrdtool-1.4.8]# cp /usr/local/rrdtool/lib/* /usr/local/rrdtool/lib64/ -rf
[root@hadoop1 rrdtool-1.4.8]# cp /usr/local/rrdtool/lib/librrd.so /usr/lib/
[root@hadoop1 rrdtool-1.4.8]# cp /usr/local/rrdtool/lib/librrd.so /usr/lib64/
## Install confuse
[root@hadoop1 rrdtool-1.4.8]# cd ../confuse-2.7/
[root@hadoop1 confuse-2.7]# ./configure CFLAGS=-fPIC --disable-nls --prefix=/usr/local/confuse
[root@hadoop1 confuse-2.7]# make && make install
[root@hadoop1 confuse-2.7]# mkdir /usr/local/confuse/lib64
[root@hadoop1 confuse-2.7]# cp /usr/local/confuse/lib/* /usr/local/confuse/lib64/ -rf

OK, with the preparation done, install gmetad and gmond from the Ganglia source:

## Unpack the source
[root@hadoop1 pub]# tar xf ganglia-3.6.0.tar.gz -C /opt/soft/
[root@hadoop1 pub]# cd /opt/soft/ganglia-3.6.0/
## Install gmetad
[root@hadoop1 ganglia-3.6.0]# ./configure --prefix=/usr/local/ganglia --with-librrd=/usr/local/rrdtool --with-libconfuse=/usr/local/confuse --with-gmetad --with-libpcre=no --enable-gexec --enable-status --sysconfdir=/etc/ganglia
[root@hadoop1 ganglia-3.6.0]# make && make install
[root@hadoop1 ganglia-3.6.0]# cp gmetad/gmetad.init /etc/init.d/gmetad
[root@hadoop1 ganglia-3.6.0]# cp /usr/local/ganglia/sbin/gmetad /usr/sbin/
[root@hadoop1 ganglia-3.6.0]# chkconfig --add gmetad
## Install gmond
[root@hadoop1 ganglia-3.6.0]# cp gmond/gmond.init /etc/init.d/gmond
[root@hadoop1 ganglia-3.6.0]# cp /usr/local/ganglia/sbin/gmond /usr/sbin/
[root@hadoop1 ganglia-3.6.0]# gmond --default_config>/etc/ganglia/gmond.conf
[root@hadoop1 ganglia-3.6.0]# chkconfig --add gmond

gmetad and gmond are now installed on hadoop1. Next comes ganglia-web, which needs php and httpd first:

yum install php httpd -y

Edit the httpd configuration file /etc/httpd/conf/httpd.conf and change only the listening port, to 8080:

Listen 8080

Install ganglia-web:

[root@hadoop1 pub]# tar xf ganglia-web-3.6.2.tar.gz -C /opt/soft/
[root@hadoop1 pub]# cd /opt/soft/
[root@hadoop1 soft]# mv ganglia-web-3.6.2/ /var/www/html/ganglia
[root@hadoop1 soft]# chmod 777 /var/www/html/ganglia -R
[root@hadoop1 soft]# cd /var/www/html/ganglia
[root@hadoop1 ganglia]# useradd www-data
[root@hadoop1 ganglia]# make install
[root@hadoop1 ganglia]# chmod 777 /var/lib/ganglia-web/dwoo/cache/
[root@hadoop1 ganglia]# chmod 777 /var/lib/ganglia-web/dwoo/compiled/

ganglia-web is now installed. Edit conf_default.php to set the ganglia-web directory and the RRD data directory by changing the following two lines:

# Where gmetad stores the rrd archives.
$conf['gmetad_root'] = "/var/www/html/ganglia"; ## changed to the web application's install directory
$conf['rrds'] = "/var/lib/ganglia/rrds"; ## path where the rrd data is stored

Create the RRD data directory and set its ownership:

[root@hadoop1 ganglia]# mkdir /var/lib/ganglia/rrds -p
[root@hadoop1 ganglia]# chown nobody:nobody /var/lib/ganglia/rrds/ -R

That completes all of the Ganglia installation work on hadoop1. The next step is to install the gmond client on hadoop2, hadoop3, hadoop4, and hadoop5.

2. Install gmond on hadoop2, hadoop3, hadoop4, and hadoop5

Install the dependencies first, following the first two steps used on hadoop1 (the yum packages, then rrdtool and confuse).

With that done, install gmond. The procedure is identical on all four nodes; hadoop2 is shown here.

## Unpack the source
[root@hadoop2 pub]# tar xf ganglia-3.6.0.tar.gz -C /opt/soft/
[root@hadoop2 pub]# cd /opt/soft/ganglia-3.6.0/
## Install gmond; note that, compared with the gmetad build, --with-gmetad is omitted here
[root@hadoop2 ganglia-3.6.0]# ./configure --prefix=/usr/local/ganglia --with-librrd=/usr/local/rrdtool --with-libconfuse=/usr/local/confuse --with-libpcre=no --enable-gexec --enable-status --sysconfdir=/etc/ganglia
[root@hadoop2 ganglia-3.6.0]# make && make install
[root@hadoop2 ganglia-3.6.0]# cp gmond/gmond.init /etc/init.d/gmond
[root@hadoop2 ganglia-3.6.0]# cp /usr/local/ganglia/sbin/gmond /usr/sbin/
[root@hadoop2 ganglia-3.6.0]# gmond --default_config>/etc/ganglia/gmond.conf
[root@hadoop2 ganglia-3.6.0]# chkconfig --add gmond

gmond is now installed on hadoop2; repeat the same steps on hadoop3, hadoop4, and hadoop5.

3. Configure Ganglia. There is a server side and a client side: the server configuration file is gmetad.conf and the client configuration file is gmond.conf.

Start with gmetad.conf on hadoop1:

[root@hadoop1 ~]# vi /etc/ganglia/gmetad.conf
## Name of the data source and the gmond address (host:port) that gmetad polls; gmetad stores what it collects in the rrd data directory
data_source "hadoop cluster" 192.168.0.101:8649

The gmetad.conf configuration is quite simple. Note that gmetad.conf exists only on hadoop1, so it is configured only there. Next, configure gmond.conf on hadoop1:

[root@hadoop1 ~]# head -n 80 /etc/ganglia/gmond.conf

/* This configuration is as close to 2.5.x default behavior as possible

The values closely match ./gmond/metric.h definitions in 2.5.x */

globals {

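daemonize = yes ## run as a daemon

setuid = yes

user = nobody ## user that gmond runs as

debug_level = 0 ## set to 1 to print debug messages at startup

max_udp_msg_len = 1472

mute = no ## mute: when yes, this node no longer sends the data it collects onto the network

deaf = no ## deaf: when yes, this node no longer receives packets sent by other nodes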

allow_extra_data = yes

host_dmax = 86400 /*secs. Expires (removes from web interface) hosts in 1 day */

host_tmax = 20 /*secs */

cleanup_threshold = 300 /*secs */

gexec = no

# By default gmond will use reverse DNS resolution when displaying your hostname

# Uncommeting following value will override that value.

# override_hostname = "mywebserver.domain.com"

# If you are not using multicast this value should be set to something other than 0.

# Otherwise if you restart aggregator gmond you will get empty graphs. 60 seconds is reasonable

send_metadata_interval = 0 /*secs; this setup is unicast, so per the comment above a non-zero value such as 60 is advisable */

}

/*

* The cluster attributes specified will be used as part of the <CLUSTER>

* tag that will wrap all hosts collected by this instance.

*/

cluster {

name = "hadoop cluster" ## name of the cluster

owner = "nobody" ## owner of the cluster

latlong = "unspecified"

url = "unspecified"

}

/* The host section describes attributes of the host, like the location */

host {

location = "unspecified"

}

/* Feel free to specify as many udp_send_channels as you like. Gmond

used to only support having a single channel */

udp_send_channel {

#bind_hostname = yes # Highly recommended, soon to be default.

# This option tells gmond to use a source address

# that resolves to the machine's hostname. Without

# this, the metrics may appear to come from any

# interface and the DNS names associated with

# those IPs will be used to create the RRDs.

# mcast_join = 239.2.11.71 ## comment this line out in unicast mode

host = 192.168.0.101 ## unicast mode: the host that receives the data

port = 8649 ## port the receiving host listens on

ttl = 1

}

/* You can specify as many udp_recv_channels as you like as well. */

udp_recv_channel {

#mcast_join = 239.2.11.71 ## comment this line out in unicast mode

port = 8649

#bind = 239.2.11.71 ## comment this line out in unicast mode

retry_bind = true

# Size of the UDP buffer. If you are handling lots of metrics you really

# should bump it up to e.g. 10MB or even higher.

# buffer = 10485760

}

/* You can specify as many tcp_accept_channels as you like to share

an xml description of the state of the cluster */

tcp_accept_channel {

port = 8649

# If you want to gzip XML output

gzip_output = no

}

/* Channel to receive sFlow datagrams */

#udp_recv_channel {

# port = 6343

#}

/* Optional sFlow settings */

With that, gmetad.conf and gmond.conf on hadoop1 are done. Now simply scp gmond.conf from hadoop1 to the same path on hadoop2, hadoop3, hadoop4, and hadoop5, overwriting the original gmond.conf.
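The copy step can be scripted. The following is a minimal sketch, assuming root SSH access from hadoop1 to the other nodes; the helper names `build_scp_commands` and `push` are hypothetical and not part of Ganglia. By default it only prints the commands (dry run), so nothing is copied until you opt in.

```python
# Hypothetical helper: build (and optionally run) the scp commands that copy
# gmond.conf from hadoop1 to the other nodes. Host names come from the article.
import subprocess

NODES = ["hadoop2.updb.com", "hadoop3.updb.com",
         "hadoop4.updb.com", "hadoop5.updb.com"]
CONF = "/etc/ganglia/gmond.conf"


def build_scp_commands(nodes=NODES, path=CONF, user="root"):
    """Return one scp argv list per node, copying `path` to the same remote path."""
    return [["scp", path, "%s@%s:%s" % (user, node, path)] for node in nodes]


def push(commands, dry_run=True):
    """Print each command; execute it only when dry_run is False."""
    for argv in commands:
        print(" ".join(argv))
        if not dry_run:
            subprocess.check_call(argv)


if __name__ == "__main__":
    push(build_scp_commands())  # dry run: prints the four commands only
```

Calling `push(build_scp_commands(), dry_run=False)` would actually run scp; the same pattern applies to the other files this article copies between nodes.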

4. Start the services

Start the gmond service on hadoop1, hadoop2, hadoop3, hadoop4, and hadoop5:

/etc/init.d/gmond start

Start the gmetad and httpd services on hadoop1:

/etc/init.d/gmetad start
/etc/init.d/httpd start
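Once the daemons are running, one way to confirm that every gmond is reporting is to read the XML dump that gmond serves on its tcp_accept_channel (port 8649 in the configuration above) and list the hosts in it. This is a rough sketch, not a Ganglia tool: the function names are made up, the sample XML is a hand-written fragment that only mimics the HOST/METRIC elements the check_ganglia.py plugin later relies on, and it assumes Python 3.

```python
import socket
import xml.etree.ElementTree as ET


def read_gmond_xml(server, port=8649, timeout=5.0):
    """Read the full XML dump that gmond writes on its tcp_accept_channel."""
    chunks = []
    with socket.create_connection((server, port), timeout=timeout) as sock:
        while True:
            data = sock.recv(65536)
            if not data:  # gmond closes the connection after the dump
                break
            chunks.append(data)
    return b"".join(chunks)


def hosts_reporting(xml_bytes):
    """Map each HOST NAME in the dump to the number of METRIC elements it carries."""
    root = ET.fromstring(xml_bytes)
    return {h.get("NAME"): len(h.findall("METRIC")) for h in root.iter("HOST")}


if __name__ == "__main__":
    # Hand-written sample, not real gmond output
    sample = b"""<GANGLIA_XML SOURCE="gmond">
      <CLUSTER NAME="hadoop cluster">
        <HOST NAME="hadoop1.updb.com"><METRIC NAME="mem_free" VAL="72336"/></HOST>
        <HOST NAME="hadoop2.updb.com"/>
      </CLUSTER>
    </GANGLIA_XML>"""
    print(hosts_reporting(sample))
    # Against the live cluster: hosts_reporting(read_gmond_xml("192.168.0.101"))
```

A host that is missing from the result, or that shows zero metrics, is a hint that its gmond is not sending to 192.168.0.101.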

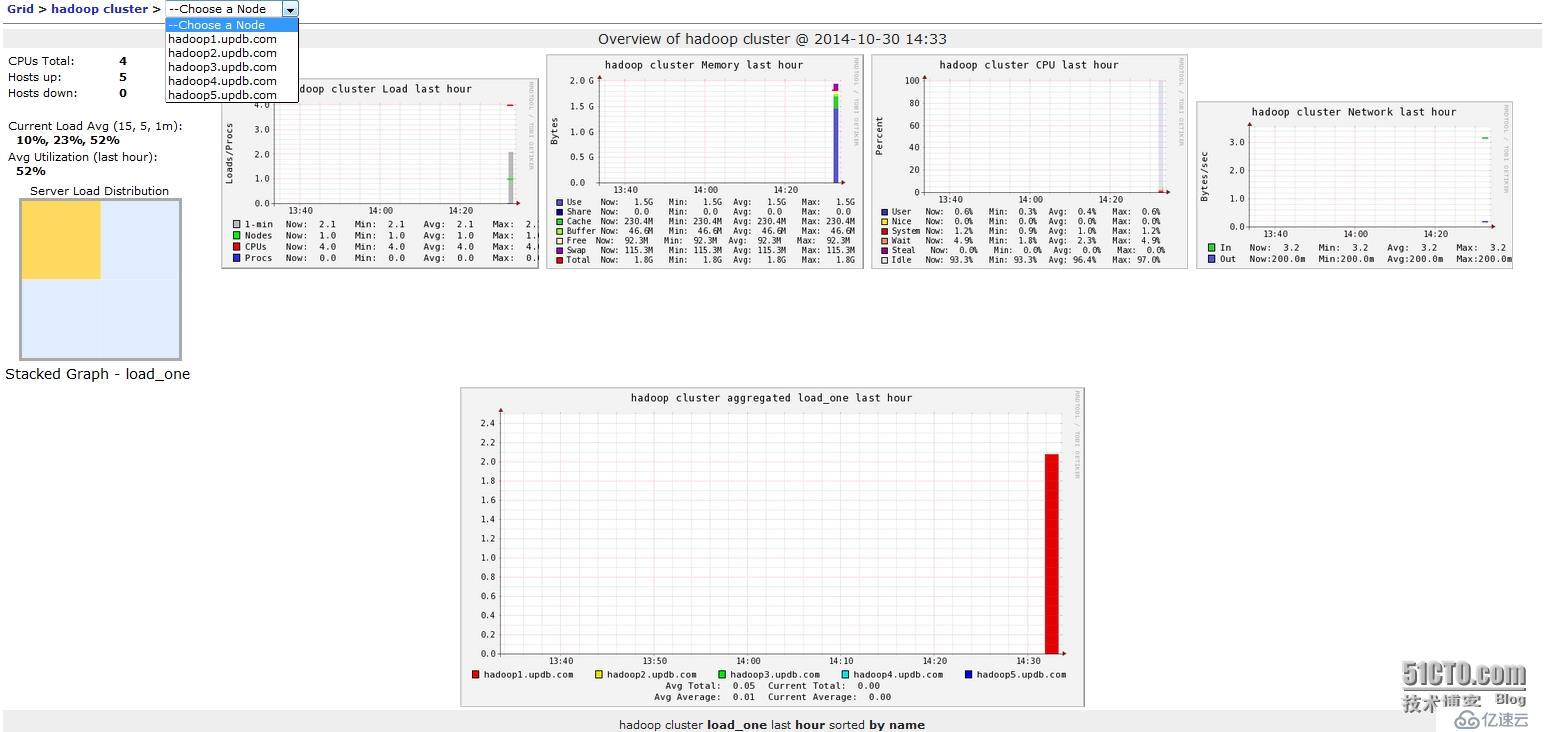

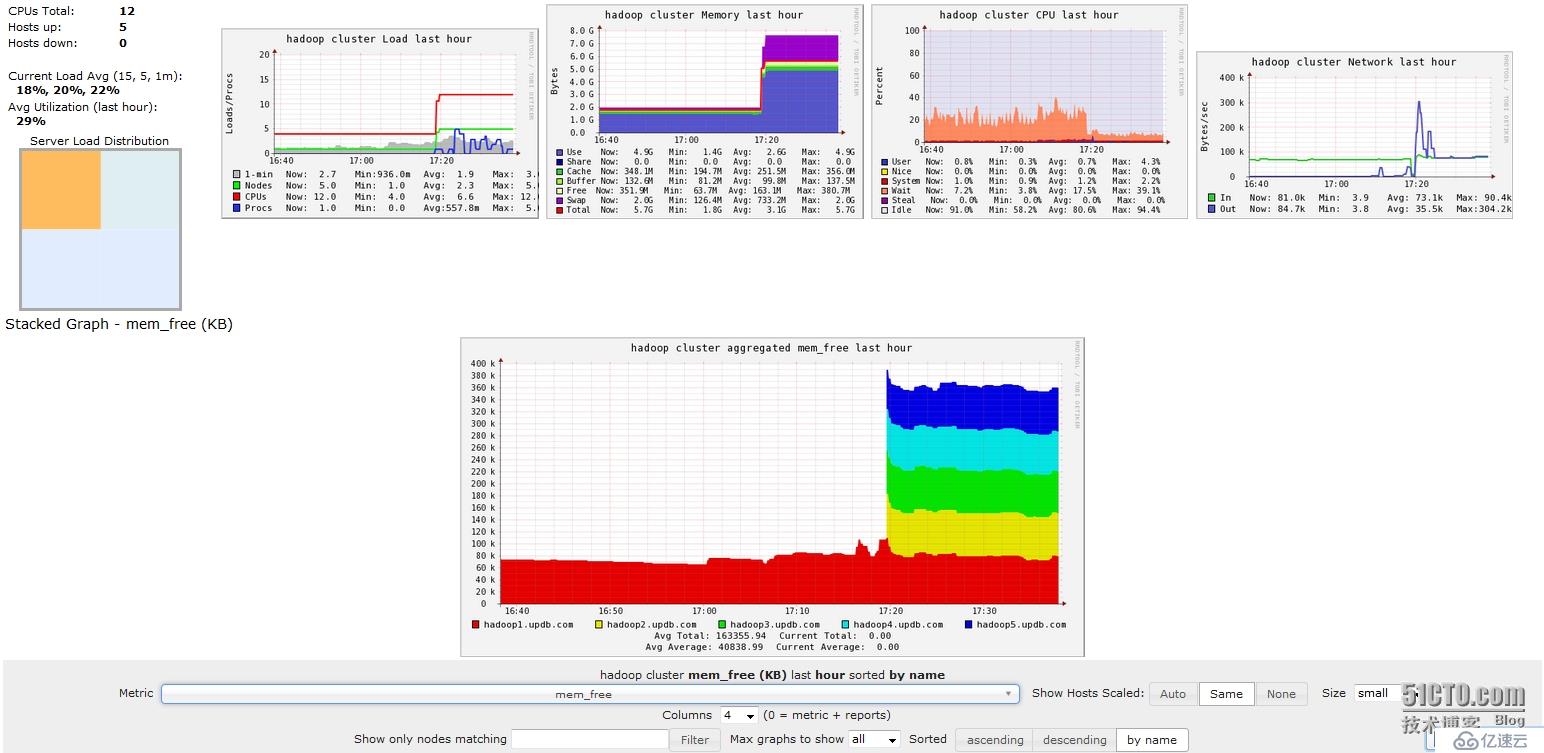

5、在瀏覽器中訪問192.168.0.101:8080/ganglia,就會出現下面的頁面

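At this point, however, Ganglia only monitors basic host-level performance; it does not yet see Hadoop or HBase. The next step is to edit the Hadoop and HBase metrics configuration files. The files on hadoop1 are used as the example here, and the other nodes should get copies of them from hadoop1. The first file to edit is hadoop-metrics2.properties in the Hadoop configuration directory: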

[root@hadoop1 ~]# cd /opt/hadoop-2.4.1/etc/hadoop/
[root@hadoop1 hadoop]# cat hadoop-metrics2.properties
#
# Licensed to the Apache Software Foundation (ASF) under one or more
# contributor license agreements. See the NOTICE file distributed with
# this work for additional information regarding copyright ownership.
# The ASF licenses this file to You under the Apache License, Version 2.0
# (the "License"); you may not use this file except in compliance with
# the License. You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
#
# syntax: [prefix].[source|sink].[instance].[options]
# See javadoc of package-info.java for org.apache.hadoop.metrics2 for details
#*.sink.file.class=org.apache.hadoop.metrics2.sink.FileSink
# default sampling period, in seconds
#*.period=10
# The namenode-metrics.out will contain metrics from all context
#namenode.sink.file.filename=namenode-metrics.out
# Specifying a special sampling period for namenode:
#namenode.sink.*.period=8
#datanode.sink.file.filename=datanode-metrics.out
# the following example split metrics of different
# context to different sinks (in this case files)
#jobtracker.sink.file_jvm.context=jvm
#jobtracker.sink.file_jvm.filename=jobtracker-jvm-metrics.out
#jobtracker.sink.file_mapred.context=mapred
#jobtracker.sink.file_mapred.filename=jobtracker-mapred-metrics.out
#tasktracker.sink.file.filename=tasktracker-metrics.out
#maptask.sink.file.filename=maptask-metrics.out
#reducetask.sink.file.filename=reducetask-metrics.out
*.sink.ganglia.class=org.apache.hadoop.metrics2.sink.ganglia.GangliaSink31
*.sink.ganglia.period=10
*.sink.ganglia.supportsparse=true
*.sink.ganglia.slope=jvm.metrics.gcCount=zero,jvm.metrics.memHeapUsedM=both
*.sink.ganglia.dmax=jvm.metrics.threadsBlocked=70,jvm.metrics.memHeapUsedM=40
namenode.sink.ganglia.servers=192.168.0.101:8649
datanode.sink.ganglia.servers=192.168.0.101:8649
resourcemanager.sink.ganglia.servers=192.168.0.101:8649
secondarynamenode.sink.ganglia.servers=192.168.0.101:8649
nodemanager.sink.ganglia.servers=192.168.0.101:8649
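The only lines that are not commented out follow the `[prefix].sink.ganglia.[option]` pattern described in the file's own syntax comment: one sink class, a period, and one `servers` entry per Hadoop daemon. As an illustration only, the sketch below regenerates those lines for a given gmond address; the function name `ganglia_sink_lines` is hypothetical, and the daemon list is simply the one used in this article.

```python
# Daemon prefixes exactly as they appear in the article's properties file
DAEMONS = ["namenode", "datanode", "resourcemanager",
           "secondarynamenode", "nodemanager"]


def ganglia_sink_lines(server="192.168.0.101:8649", daemons=DAEMONS, period=10):
    """Build the Ganglia sink lines of hadoop-metrics2.properties."""
    lines = [
        "*.sink.ganglia.class="
        "org.apache.hadoop.metrics2.sink.ganglia.GangliaSink31",
        "*.sink.ganglia.period=%d" % period,
    ]
    # One "<daemon>.sink.ganglia.servers" entry per daemon, all pointing at gmond
    lines += ["%s.sink.ganglia.servers=%s" % (d, server) for d in daemons]
    return lines


if __name__ == "__main__":
    print("\n".join(ganglia_sink_lines()))
```

If the receiving gmond ever moves, only the `server` argument changes and every daemon entry stays consistent.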

Next, edit hadoop-metrics2-hbase.properties in the HBase configuration directory:

[root@hadoop1 hadoop]# cd /opt/hbase-0.98.4-hadoop2/conf/
[root@hadoop1 conf]# cat hadoop-metrics2-hbase.properties
# syntax: [prefix].[source|sink].[instance].[options]
# See javadoc of package-info.java for org.apache.hadoop.metrics2 for details
#*.sink.file*.class=org.apache.hadoop.metrics2.sink.FileSink
# default sampling period
#*.period=10
# Below are some examples of sinks that could be used
# to monitor different hbase daemons.
# hbase.sink.file-all.class=org.apache.hadoop.metrics2.sink.FileSink
# hbase.sink.file-all.filename=all.metrics
# hbase.sink.file0.class=org.apache.hadoop.metrics2.sink.FileSink
# hbase.sink.file0.context=hmaster
# hbase.sink.file0.filename=master.metrics
# hbase.sink.file1.class=org.apache.hadoop.metrics2.sink.FileSink
# hbase.sink.file1.context=thrift-one
# hbase.sink.file1.filename=thrift-one.metrics
# hbase.sink.file2.class=org.apache.hadoop.metrics2.sink.FileSink
# hbase.sink.file2.context=thrift-two
# hbase.sink.file2.filename=thrift-one.metrics
# hbase.sink.file3.class=org.apache.hadoop.metrics2.sink.FileSink
# hbase.sink.file3.context=rest
# hbase.sink.file3.filename=rest.metrics
*.sink.ganglia.class=org.apache.hadoop.metrics2.sink.ganglia.GangliaSink31
*.sink.ganglia.period=10
hbase.sink.ganglia.period=10
hbase.sink.ganglia.servers=192.168.0.101:8649

Copy these two files from hadoop1 with scp to the same paths on hadoop2 through hadoop5, overwriting the originals, then restart Hadoop and HBase. Ganglia can now monitor Hadoop and HBase.

At this point Ganglia is fully installed and configured, and it is successfully monitoring Hadoop and HBase.

Next, install Nagios: the Nagios server on hadoop1 and the NRPE client on hadoop2, hadoop3, hadoop4, and hadoop5.

First install Nagios and the related plugins on hadoop1:

yum install nagios nagios-plugins nagios-plugins-all nagios-plugins-nrpe -y

Set the login credentials for the Nagios web interface:

[root@hadoop1 ~]# cat /etc/httpd/conf.d/nagios.conf
# SAMPLE CONFIG SNIPPETS FOR APACHE WEB SERVER
# Last Modified: 11-26-2005
#
# This file contains examples of entries that need
# to be incorporated into your Apache web server
# configuration file. Customize the paths, etc. as
# needed to fit your system.
ScriptAlias /nagios/cgi-bin/ "/usr/lib64/nagios/cgi-bin/"
<Directory "/usr/lib64/nagios/cgi-bin/">
# SSLRequireSSL
Options ExecCGI
AllowOverride None
Order allow,deny
Allow from all
# Order deny,allow
# Deny from all
# Allow from 127.0.0.1
## AuthName is the realm shown in the login prompt, set to nagiosadmin here; the login user itself must be nagiosadmin
AuthName "nagiosadmin"
AuthType Basic
## Path to the password file
AuthUserFile /etc/nagios/htpasswd.users
Require valid-user
</Directory>
Alias /nagios "/usr/share/nagios/html"
<Directory "/usr/share/nagios/html">
# SSLRequireSSL
Options None
AllowOverride None
Order allow,deny
Allow from all
# Order deny,allow
# Deny from all
# Allow from 127.0.0.1
## AuthName is the realm shown in the login prompt, set to nagiosadmin here; the login user itself must be nagiosadmin
AuthName "nagiosadmin"
AuthType Basic
## Path to the password file
AuthUserFile /etc/nagios/htpasswd.users
Require valid-user
</Directory>

Save and exit, then generate the password file:

[root@hadoop1 ~]# htpasswd -c /etc/nagios/htpasswd.users nagiosadmin
New password:
Re-type new password:
Adding password for user nagiosadmin
[root@hadoop1 ~]# cat /etc/nagios/htpasswd.users
nagiosadmin:qWrXYKDlycqHM

The password file is in place. Next, install the NRPE client and the related plugins on hadoop2, hadoop3, hadoop4, and hadoop5:

yum install nagios-plugins nagios-plugins-nrpe nrpe nagios-plugins-all -y

On every node the plugins are located under /usr/lib64/nagios/plugins/:

[root@hadoop2 ~]# ls /usr/lib64/nagios/plugins/
check_breeze check_game check_mrtgtraf check_overcr check_swap check_by_ssh check_hpjd check_mysql check_pgsql check_tcp check_clamd check_http check_mysql_query check_ping check_time check_cluster check_icmp check_nagios check_pop check_udp check_dhcp check_ide_smart check_nntp check_procs check_ups check_dig check_imap check_nntps check_real check_users check_disk check_ircd check_nrpe check_rpc check_wave check_disk_smb check_jabber check_nt check_sensors negate check_dns check_ldap check_ntp check_simap urlize check_dummy check_ldaps check_ntp_peer check_smtp utils.pm check_file_age check_load check_ntp.pl check_snmp utils.sh check_flexlm check_log check_ntp_time check_spop check_fping check_mailq check_nwstat check_ssh check_ftp check_mrtg check_oracle check_ssmtp

To integrate Nagios with Ganglia, copy the Ganglia plugin shipped in the Ganglia source package into the Nagios plugin directory on hadoop1:

[root@hadoop1 ~]# cd /opt/soft/ganglia-3.6.0/
[root@hadoop1 ganglia-3.6.0]# ls contrib/check_ganglia.py
contrib/check_ganglia.py
[root@hadoop1 ganglia-3.6.0]# cp contrib/check_ganglia.py /usr/lib64/nagios/plugins/

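By default the check_ganglia.py plugin only handles the case where a value above the critical threshold is bad. We also need the case where a value below the critical threshold is bad, which is what the final block of code (the last else branch) adds: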

[root@hadoop1 plugins]# cat check_ganglia.py

#!/usr/bin/env python
# Nagios plugin: read one metric of one host from gmond's XML dump and
# compare it against the warning and critical thresholds.

import sys
import getopt
import socket
import xml.parsers.expat


class GParser:
    def __init__(self, host, metric):
        self.inhost = 0
        self.inmetric = 0
        self.value = None
        self.host = host
        self.metric = metric

    def parse(self, file):
        p = xml.parsers.expat.ParserCreate()
        # Use this instance's handlers (the original referenced the global "parser")
        p.StartElementHandler = self.start_element
        p.EndElementHandler = self.end_element
        p.ParseFile(file)
        if self.value is None:
            raise Exception('Host/value not found')
        return float(self.value)

    def start_element(self, name, attrs):
        # Remember when we are inside the requested HOST, then pick its METRIC
        if name == "HOST":
            if attrs["NAME"] == self.host:
                self.inhost = 1
        elif self.inhost == 1 and name == "METRIC" and attrs["NAME"] == self.metric:
            self.value = attrs["VAL"]

    def end_element(self, name):
        if name == "HOST" and self.inhost == 1:
            self.inhost = 0


def usage():
    print("Usage: check_ganglia "
          "-h|--host= -m|--metric= -w|--warning= "
          "-c|--critical= [-s|--server=] [-p|--port=]")
    sys.exit(3)


if __name__ == "__main__":
    ##############################################################
    ganglia_host = '192.168.0.101'
    ganglia_port = 8649
    host = None
    metric = None
    warning = None
    critical = None

    try:
        options, args = getopt.getopt(
            sys.argv[1:],
            "h:m:w:c:s:p:",
            ["host=", "metric=", "warning=", "critical=", "server=", "port="],
        )
    except getopt.GetoptError as err:
        print("check_gmond: %s" % err)
        usage()
        sys.exit(3)

    for o, a in options:
        if o in ("-h", "--host"):
            host = a
        elif o in ("-m", "--metric"):
            metric = a
        elif o in ("-w", "--warning"):
            warning = float(a)
        elif o in ("-c", "--critical"):
            critical = float(a)
        elif o in ("-p", "--port"):
            ganglia_port = int(a)
        elif o in ("-s", "--server"):
            ganglia_host = a

    if critical is None or warning is None or metric is None or host is None:
        usage()
        sys.exit(3)

    try:
        s = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
        s.connect((ganglia_host, ganglia_port))
        parser = GParser(host, metric)
        # Binary mode, so expat's ParseFile also works on Python 3
        value = parser.parse(s.makefile("rb"))
        s.close()
    except Exception as err:
        print("CHECKGANGLIA UNKNOWN: Error while getting value \"%s\"" % (err))
        sys.exit(3)

    if critical > warning:
        # Higher values are worse (for example, load)
        if value >= critical:
            print("CHECKGANGLIA CRITICAL: %s is %.2f" % (metric, value))
            sys.exit(2)
        elif value >= warning:
            print("CHECKGANGLIA WARNING: %s is %.2f" % (metric, value))
            sys.exit(1)
        else:
            print("CHECKGANGLIA OK: %s is %.2f" % (metric, value))
            sys.exit(0)
    else:
        # Added branch: lower values are worse (for example, free memory)
        if critical >= value:
            print("CHECKGANGLIA CRITICAL: %s is %.2f" % (metric, value))
            sys.exit(2)
        elif warning >= value:
            print("CHECKGANGLIA WARNING: %s is %.2f" % (metric, value))
            sys.exit(1)
        else:
            print("CHECKGANGLIA OK: %s is %.2f" % (metric, value))
            sys.exit(0)

Configure the NRPE client on hadoop2, hadoop3, hadoop4, and hadoop5. hadoop2 is used as the example here; for the other nodes, scp the file from hadoop2 and overwrite the file at the same path.

[root@hadoop2 ~]# cat /etc/nagios/nrpe.cfg
log_facility=daemon
pid_file=/var/run/nrpe/nrpe.pid
## Port the NRPE daemon listens on
server_port=5666
nrpe_user=nrpe
nrpe_group=nrpe
## Address of the Nagios server that is allowed to connect
allowed_hosts=192.168.0.101
dont_blame_nrpe=0
allow_bash_command_substitution=0
debug=0
command_timeout=60
connection_timeout=300
## System load
command[check_load]=/usr/lib64/nagios/plugins/check_load -w 15,10,5 -c 30,25,20
## Number of users currently logged in
command[check_users]=/usr/lib64/nagios/plugins/check_users -w 5 -c 10
## Free space on the root partition
command[check_sda2]=/usr/lib64/nagios/plugins/check_disk -w 20% -c 10% -p /dev/sda2
## MySQL status
command[check_mysql]=/usr/lib64/nagios/plugins/check_mysql -H hadoop2.updb.com -P 3306 -d kora -u kora -p upbjsxt
## Whether the host is alive
command[check_ping]=/usr/lib64/nagios/plugins/check_ping -H hadoop2.updb.com -w 100.0,20% -c 500.0,60%
## Total number of processes on the system
command[check_total_procs]=/usr/lib64/nagios/plugins/check_procs -w 150 -c 200
include_dir=/etc/nrpe.d/

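scp this file to the same path on hadoop3, hadoop4, and hadoop5, overwriting the original. Remember to change the host name inside the file to the name of each target host.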
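The host-name substitution is easy to forget when copying by hand. Below is a small sketch of doing it programmatically; `render_for_host` and `render_all` are hypothetical helpers, not part of NRPE, and the sample text is just two lines in the style of the hadoop2 nrpe.cfg.

```python
# Hypothetical helper: derive each node's nrpe.cfg from the hadoop2 copy
# by swapping the host name used in the command definitions.

TEMPLATE_HOST = "hadoop2.updb.com"
OTHER_HOSTS = ["hadoop3.updb.com", "hadoop4.updb.com", "hadoop5.updb.com"]


def render_for_host(template_text, target_host, template_host=TEMPLATE_HOST):
    """Return the nrpe.cfg text with template_host replaced by target_host."""
    return template_text.replace(template_host, target_host)


def render_all(template_text, hosts=OTHER_HOSTS):
    """Map each target host to its own rendered nrpe.cfg text."""
    return {host: render_for_host(template_text, host) for host in hosts}


if __name__ == "__main__":
    sample = (
        "allowed_hosts=192.168.0.101\n"
        "command[check_ping]=/usr/lib64/nagios/plugins/check_ping "
        "-H hadoop2.updb.com -w 100.0,20% -c 500.0,60%\n"
    )
    for host, text in render_all(sample).items():
        print("# " + host)
        print(text)
```

Only the host name changes; lines such as `allowed_hosts` stay identical on every node because they point at the Nagios server.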

On hadoop1, configure each host and its checks. The configuration files are:

[root@hadoop1 plugins]# cd /etc/nagios/objects/
## Each node has one host file and one service file; for example, hadoop2 has hadoop2.cfg and service2.cfg
[root@hadoop1 objects]# ls
commands.cfg hadoop3.cfg localhost.cfg service3.cfg templates.cfg
contacts.cfg hadoop4.cfg printer.cfg service4.cfg timeperiods.cfg
hadoop1.cfg hadoop5.cfg service1.cfg service5.cfg windows.cfg
hadoop2.cfg hosts.cfg service2.cfg switch.cfg

First declare the check_ganglia and check_nrpe commands in commands.cfg by appending the following to the end of the file:

# 'check_ganglia' command definition

define command{

command_name check_ganglia

command_line $USER1$/check_ganglia.py -h $HOSTADDRESS$ -m $ARG1$ -w $ARG2$ -c $ARG3$

}

# 'check_nrpe' command definition

define command{

command_name check_nrpe

command_line $USER1$/check_nrpe -H $HOSTADDRESS$ -c $ARG1$

}

Then edit the template configuration file templates.cfg and append the following to the end:

define service {

use generic-service

name ganglia-service1 ; used in service1.cfg

hostgroup_name hadoop1 ; used in hadoop1.cfg

service_groups ganglia-metrics1 ; used in service1.cfg

register 0

}

define service {

use generic-service

name ganglia-service2 ; used in service2.cfg

hostgroup_name hadoop2 ; used in hadoop2.cfg

service_groups ganglia-metrics2 ; used in service2.cfg

register 0

}

define service {

use generic-service

name ganglia-service3 ; used in service3.cfg

hostgroup_name hadoop3 ; used in hadoop3.cfg

service_groups ganglia-metrics3 ; used in service3.cfg

register 0

}

define service {

use generic-service

name ganglia-service4 ; used in service4.cfg

hostgroup_name hadoop4 ; used in hadoop4.cfg

service_groups ganglia-metrics4 ; used in service4.cfg

register 0

}

define service {

use generic-service

name ganglia-service5 ; used in service5.cfg

hostgroup_name hadoop5 ; used in hadoop5.cfg

service_groups ganglia-metrics5 ; used in service5.cfg

register 0

}

The configuration for hadoop1 follows. Because hadoop1 is the server, it does not need NRPE to monitor itself:

## hadoop1.cfg holds the regular local checks for this machine, while service1.cfg holds the Ganglia-based checks

[root@hadoop1 objects]# cat hadoop1.cfg

define host{

use linux-server

host_name hadoop1.updb.com

alias hadoop1.updb.com

address hadoop1.updb.com

}

define hostgroup {

hostgroup_name hadoop1

alias hadoop1

members hadoop1.updb.com

}

define service{

use local-service

host_name hadoop1.updb.com

service_description PING

check_command check_ping!100,20%!500,60%

}

define service{

use local-service

host_name hadoop1.updb.com

service_description Root Partition

check_command check_local_disk!20%!10%!/

# contact_groups admins

}

define service{

use local-service

host_name hadoop1.updb.com

service_description Current Users

check_command check_local_users!20!50

}

define service{

use local-service

host_name hadoop1.updb.com

service_description Total Processes

check_command check_local_procs!250!400!RSZDT

}

define service{

use local-service

host_name hadoop1.updb.com

service_description System Load

check_command check_local_load!5.0,4.0,3.0!10.0,6.0,4.0

}

## service1.cfg

[root@hadoop1 objects]# cat service1.cfg

define servicegroup {

servicegroup_name ganglia-metrics1

alias Ganglia Metrics1

}

## check_ganglia here is the check_ganglia command declared in commands.cfg

define service{

use ganglia-service1

service_description HMaster Load

check_command check_ganglia!master.Server.averageLoad!5!10

}

define service{

use ganglia-service1

service_description Free Memory

check_command check_ganglia!mem_free!200!50

}

define service{

use ganglia-service1

service_description NameNode Sync

check_command check_ganglia!dfs.namenode.SyncsAvgTime!10!50

}

The configuration for hadoop2 follows. Note that every check that uses the check_nrpe plugin must be declared in nrpe.cfg on hadoop2:

## These checks are run by the NRPE daemon on the remote client node; the Nagios server on hadoop1 requests each result through check_nrpe

[root@hadoop1 objects]# cat hadoop2.cfg

define host{

use linux-server

host_name hadoop2.updb.com

alias hadoop2.updb.com

address hadoop2.updb.com

}

define hostgroup {

hostgroup_name hadoop2

alias hadoop2

members hadoop2.updb.com

}

## check_nrpe here is the check_nrpe command declared in commands.cfg

define service{

use local-service

host_name hadoop2.updb.com

service_description MySQL Status

check_command check_nrpe!check_mysql

}

define service{

use local-service

host_name hadoop2.updb.com

service_description PING

check_command check_nrpe!check_ping

}

define service{

use local-service

host_name hadoop2.updb.com

service_description Root Partition

check_command check_nrpe!check_sda2

}

define service{

use local-service

host_name hadoop2.updb.com

service_description Current Users

check_command check_nrpe!check_users

}

define service{

use local-service

host_name hadoop2.updb.com

service_description Total Processes

check_command check_nrpe!check_total_procs

}

define service{

use local-service

host_name hadoop2.updb.com

service_description System Load

check_command check_nrpe!check_load

}
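Because every `check_nrpe!<name>` used in a host file has to exist as `command[<name>]` in that node's nrpe.cfg, a quick cross-check can catch mismatches before Nagios reports them at run time. This is a sketch under that assumption; the function names are hypothetical and the two regular expressions only cover the line shapes shown in this article.

```python
import re

# command[check_load]=...  (as written in nrpe.cfg)
COMMAND_RE = re.compile(r"^command\[([^\]]+)\]=", re.MULTILINE)
# check_command check_nrpe!check_load  (as written in hadoopN.cfg)
CHECK_RE = re.compile(r"check_command\s+check_nrpe!(\S+)")


def declared_commands(nrpe_cfg_text):
    """Names declared as command[...] in nrpe.cfg."""
    return set(COMMAND_RE.findall(nrpe_cfg_text))


def referenced_commands(nagios_cfg_text):
    """Names referenced through check_nrpe!... in a Nagios object file."""
    return set(CHECK_RE.findall(nagios_cfg_text))


def missing_commands(nrpe_cfg_text, nagios_cfg_text):
    """Referenced names that nrpe.cfg does not declare, sorted."""
    return sorted(referenced_commands(nagios_cfg_text)
                  - declared_commands(nrpe_cfg_text))


if __name__ == "__main__":
    nrpe = ("command[check_load]=/usr/lib64/nagios/plugins/check_load -w 15,10,5 -c 30,25,20\n"
            "command[check_users]=/usr/lib64/nagios/plugins/check_users -w 5 -c 10\n")
    host_cfg = ("check_command check_nrpe!check_load\n"
                "check_command check_nrpe!check_mysql\n")
    print(missing_commands(nrpe, host_cfg))
```

An empty list means every NRPE check referenced on the server side has a matching definition on the client.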

## These are the Ganglia-based checks, which use the check_ganglia plugin

[root@hadoop1 objects]# cat service2.cfg

define servicegroup {

servicegroup_name ganglia-metrics2

alias Ganglia Metrics2

}

define service{

use ganglia-service2

service_description Free Memory

check_command check_ganglia!mem_free!200!50

}

define service{

use ganglia-service2

service_description RegionServer_Get

check_command check_ganglia!regionserver.Server.Get_min!5!15

}

define service{

use ganglia-service2

service_description DataNode_Heartbeat

check_command check_ganglia!dfs.datanode.HeartbeatsAvgTime!15!40

}

The configuration for hadoop3, hadoop4, and hadoop5 is the same as for hadoop2, apart from the host names.
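To see how a `check_command` such as `check_ganglia!mem_free!200!50` turns into an actual plugin invocation, it helps to expand the macros by hand: the parts after each `!` become `$ARG1$`, `$ARG2$`, and so on in the matching `command_line`. The sketch below imitates that substitution for illustration only. It is not Nagios code, `expand` is a made-up name, and the value of `$USER1$` is assumed to be the plugin directory used in this article.

```python
def expand(check_command, command_line, host_address,
           user1="/usr/lib64/nagios/plugins"):
    """Imitate Nagios macro expansion for one check_command.

    Returns (command_name, expanded_command_line).
    """
    name, *args = check_command.split("!")
    macros = {"$USER1$": user1, "$HOSTADDRESS$": host_address}
    for i, arg in enumerate(args, start=1):
        macros["$ARG%d$" % i] = arg  # $ARG1$, $ARG2$, ...
    result = command_line
    for macro, value in macros.items():
        result = result.replace(macro, value)
    return name, result


if __name__ == "__main__":
    # command_line as declared for check_ganglia in commands.cfg
    line = "$USER1$/check_ganglia.py -h $HOSTADDRESS$ -m $ARG1$ -w $ARG2$ -c $ARG3$"
    print(expand("check_ganglia!mem_free!200!50", line, "hadoop2.updb.com"))
```

The expanded line is the command you can run by hand from the plugin directory to reproduce what Nagios executes.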

Finally, include these files in the main Nagios configuration file:

## Only the following content is changed; leave everything else as it is
[root@hadoop1 objects]# vi ../nagios.cfg
# You can specify individual object config files as shown below:
#cfg_file=/etc/nagios/objects/localhost.cfg
cfg_file=/etc/nagios/objects/commands.cfg
cfg_file=/etc/nagios/objects/contacts.cfg
cfg_file=/etc/nagios/objects/timeperiods.cfg
cfg_file=/etc/nagios/objects/templates.cfg
## Include the host files
cfg_file=/etc/nagios/objects/hadoop1.cfg
cfg_file=/etc/nagios/objects/hadoop2.cfg
cfg_file=/etc/nagios/objects/hadoop3.cfg
cfg_file=/etc/nagios/objects/hadoop4.cfg
cfg_file=/etc/nagios/objects/hadoop5.cfg
## Include the service files
cfg_file=/etc/nagios/objects/service1.cfg
cfg_file=/etc/nagios/objects/service2.cfg
cfg_file=/etc/nagios/objects/service3.cfg
cfg_file=/etc/nagios/objects/service4.cfg
cfg_file=/etc/nagios/objects/service5.cfg

Next, verify that the configuration is correct:

[root@hadoop1 objects]# nagios -v ../nagios.cfg
Nagios Core 3.5.1
Copyright (c) 2009-2011 Nagios Core Development Team and Community Contributors
Copyright (c) 1999-2009 Ethan Galstad
Last Modified: 08-30-2013
License: GPL
Website: http://www.nagios.org
Reading configuration data...
Read main config file okay...
Processing object config file '/etc/nagios/objects/commands.cfg'...
Processing object config file '/etc/nagios/objects/contacts.cfg'...
Processing object config file '/etc/nagios/objects/timeperiods.cfg'...
Processing object config file '/etc/nagios/objects/templates.cfg'...
Processing object config file '/etc/nagios/objects/hadoop1.cfg'...
Processing object config file '/etc/nagios/objects/hadoop2.cfg'...
Processing object config file '/etc/nagios/objects/hadoop3.cfg'...
Processing object config file '/etc/nagios/objects/hadoop4.cfg'...
Processing object config file '/etc/nagios/objects/hadoop5.cfg'...
Processing object config file '/etc/nagios/objects/service1.cfg'...
Processing object config file '/etc/nagios/objects/service2.cfg'...
Processing object config file '/etc/nagios/objects/service3.cfg'...
Processing object config file '/etc/nagios/objects/service4.cfg'...
Processing object config file '/etc/nagios/objects/service5.cfg'...
Processing object config directory '/etc/nagios/conf.d'...
Read object config files okay...
Running pre-flight check on configuration data...
Checking services...
Checked 44 services.
Checking hosts...
Checked 5 hosts.
Checking host groups...
Checked 5 host groups.
Checking service groups...
Checked 5 service groups.
Checking contacts...
Checked 1 contacts.
Checking contact groups...
Checked 1 contact groups.
Checking service escalations...
Checked 0 service escalations.
Checking service dependencies...
Checked 0 service dependencies.
Checking host escalations...
Checked 0 host escalations.
Checking host dependencies...
Checked 0 host dependencies.
Checking commands...
Checked 26 commands.
Checking time periods...
Checked 5 time periods.
Checking for circular paths between hosts...
Checking for circular host and service dependencies...
Checking global event handlers...
Checking obsessive compulsive processor commands...
Checking misc settings...
Total Warnings: 0
Total Errors: 0
Things look okay - No serious problems were detected during the pre-flight check

OK, no errors. Now start the nagios service on hadoop1:

[root@hadoop1 objects]# /etc/init.d/nagios start
Starting nagios: done.

Start the nrpe service on hadoop2 through hadoop5:

[root@hadoop2 ~]# /etc/init.d/nrpe start
Starting nrpe: [ OK ]

On hadoop1, test that Nagios can communicate with NRPE:

[root@hadoop1 objects]# cd /usr/lib64/nagios/plugins/
[root@hadoop1 plugins]# ./check_nrpe -H hadoop1.updb.com
[root@hadoop1 plugins]# ./check_nrpe -H hadoop2.updb.com
NRPE v2.15
[root@hadoop1 plugins]# ./check_nrpe -H hadoop3.updb.com
NRPE v2.15
[root@hadoop1 plugins]# ./check_nrpe -H hadoop4.updb.com
NRPE v2.15
[root@hadoop1 plugins]# ./check_nrpe -H hadoop5.updb.com
NRPE v2.15

Communication works. Now verify that the check_ganglia.py plugin works:

[root@hadoop1 ~]# cd /usr/lib64/nagios/plugins/
[root@hadoop1 plugins]# ./check_ganglia.py -h hadoop2.updb.com -m mem_free -w 200 -c 50
CHECKGANGLIA OK: mem_free is 72336.00
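The OK result follows from the plugin's threshold logic. Because the critical threshold (50) is lower than the warning threshold (200), the plugin treats low values as bad, and 72336 is far above both. The function below is a standalone restatement of that comparison so it can be tried without a running gmond; the name `evaluate` and the constants are illustrative and do not appear in the plugin itself.

```python
# Nagios exit codes used by the plugin
OK, WARNING, CRITICAL = 0, 1, 2


def evaluate(value, warning, critical):
    """Return the Nagios status for value, mirroring check_ganglia.py.

    critical > warning  : higher values are worse (e.g. load)
    critical <= warning : lower values are worse (e.g. free memory)
    """
    if critical > warning:
        if value >= critical:
            return CRITICAL
        if value >= warning:
            return WARNING
        return OK
    if value <= critical:
        return CRITICAL
    if value <= warning:
        return WARNING
    return OK


if __name__ == "__main__":
    print(evaluate(72336.0, 200, 50))  # mem_free example from the article
    print(evaluate(7.0, 5, 10))        # a load-style check between both thresholds
```

The direction is inferred purely from the order of the two thresholds, so swapping `-w` and `-c` by mistake silently inverts the meaning of a check.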

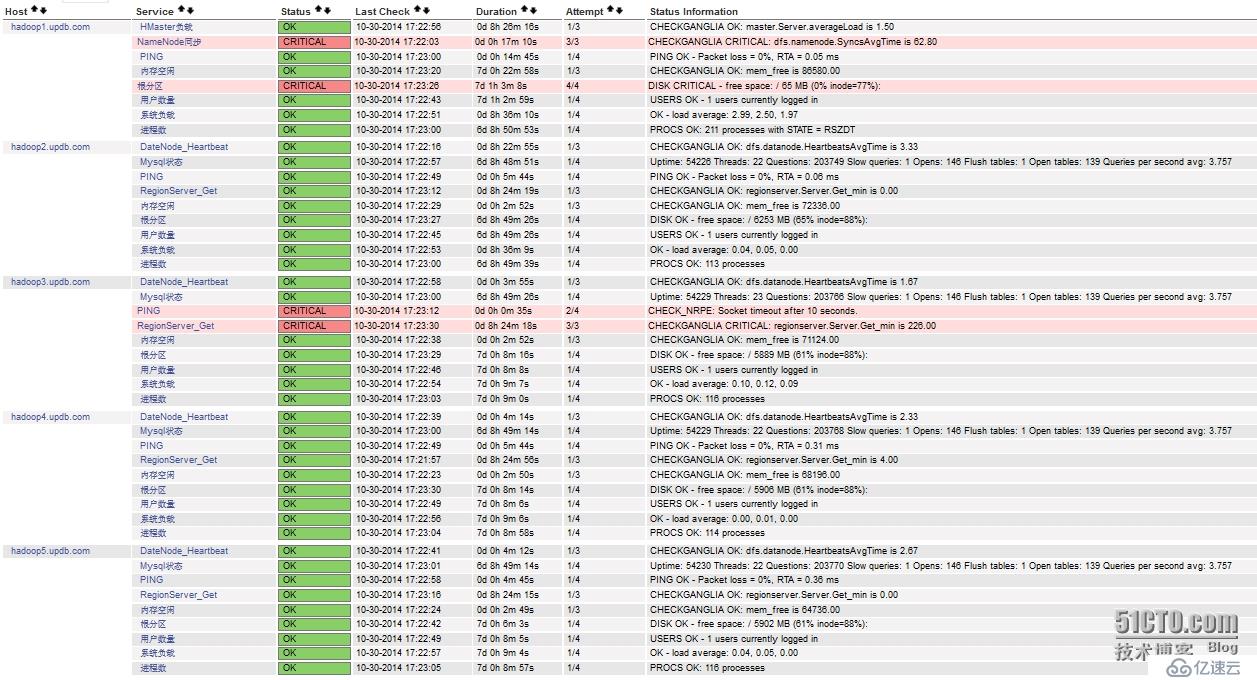

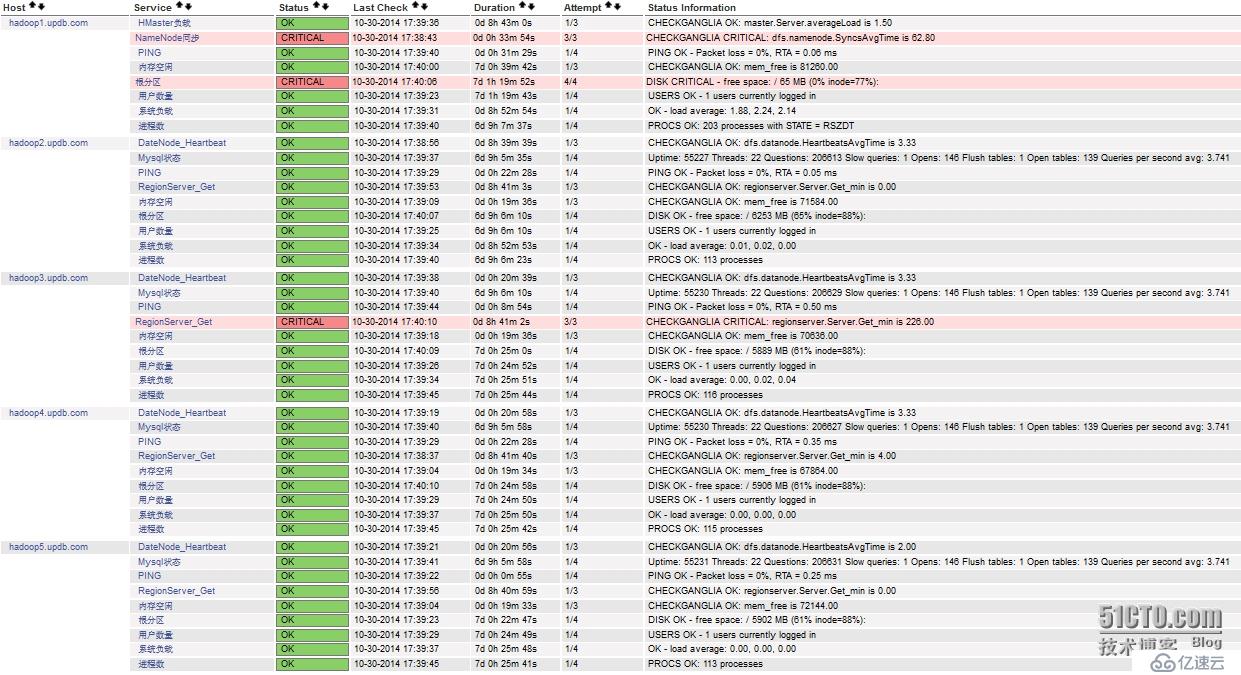

The plugin works. Now we can open the Nagios web page and see whether monitoring is working.

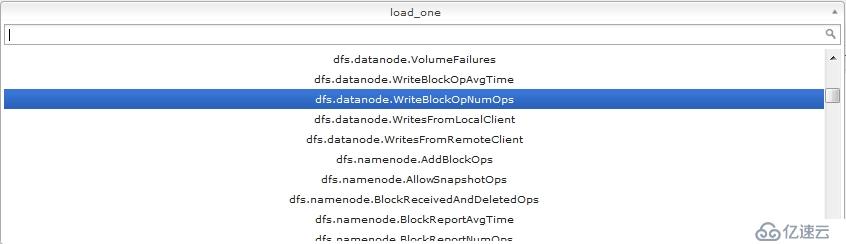

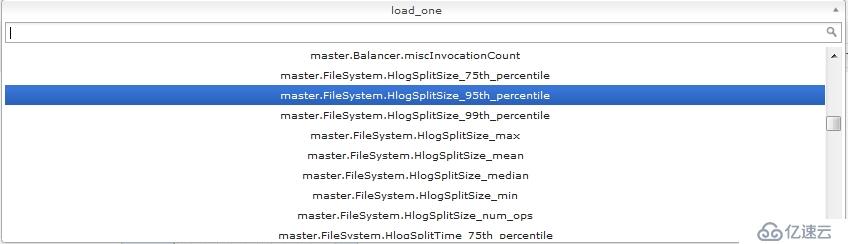

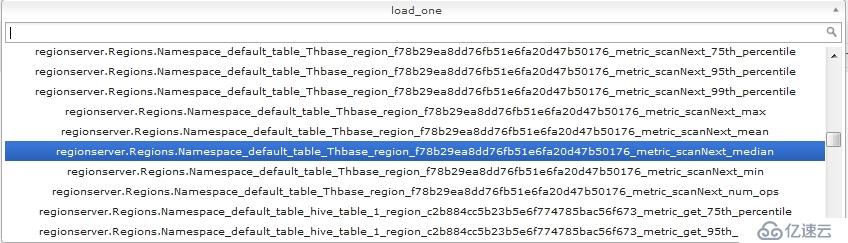

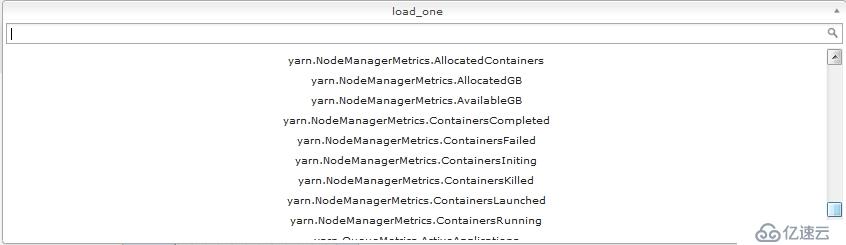

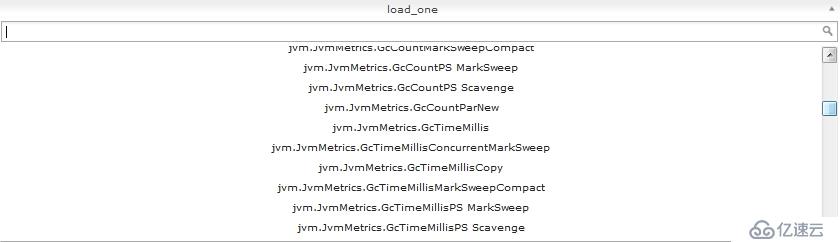

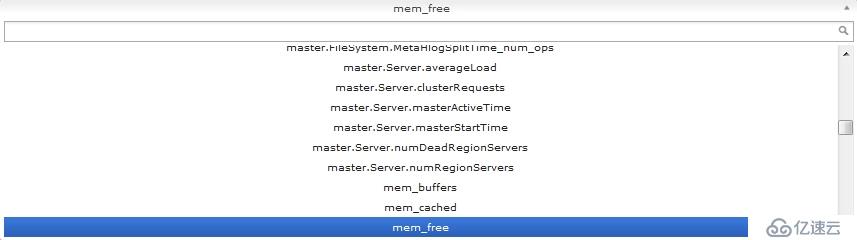

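As you can see, monitoring works. To monitor more, or more detailed, Hadoop and HBase metrics, look them up in the Ganglia web interface; the metric name configured in Nagios just has to match the name shown there.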

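You will find that Ganglia exposes a very large number of metrics. You can monitor any cluster metric you like, provided you understand what each one means and what threshold suits it, and that is clearly the real challenge. The metrics in this experiment were picked arbitrarily and carry no special meaning, because I am still a beginner learning them myself. So Nagios is now monitoring what we wanted. How do we get alerts by SMS on a phone?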

See below. First configure contacts.cfg and add the recipient of the alert emails:

[root@hadoop1 plugins]# cd /etc/nagios/objects/

[root@hadoop1 objects]# cat contacts.cfg

define contact{

contact_name nagiosadmin

use generic-contact

alias Nagios Admin

## Notification time periods

service_notification_period 24x7

host_notification_period 24x7

## States and events that trigger a notification

service_notification_options w,u,c,r,f,s

host_notification_options d,u,r,f,s

## Notify by email

service_notification_commands notify-service-by-email

host_notification_commands notify-host-by-email

## The contact's 139.com mailbox

email 1820280----@139.com

}

## Define the contact group the contact belongs to

define contactgroup{

contactgroup_name admins

alias Nagios Administrators

members nagiosadmin

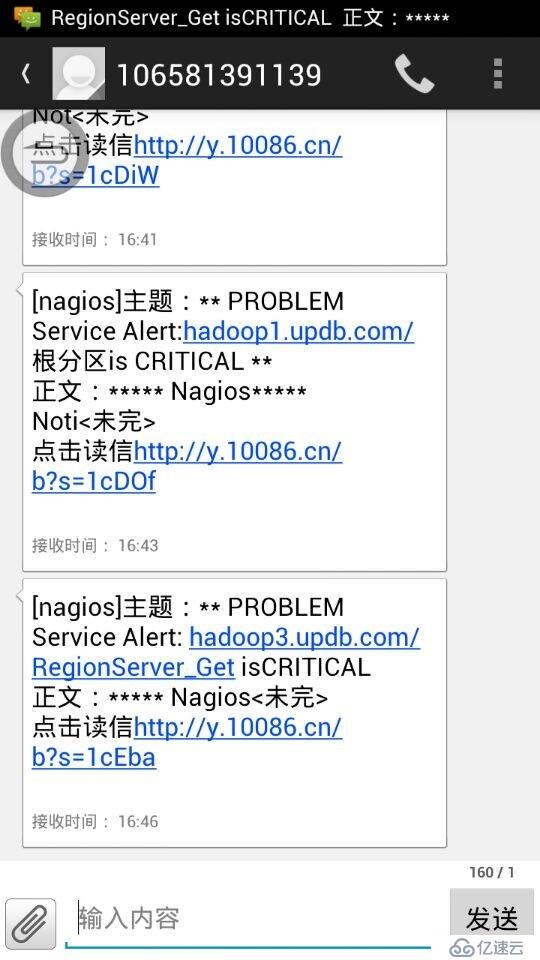

}重啟nagios服務,要保證你的139郵箱開啟了長短信轉發格式,確保郵件到達郵箱后會轉發到你的手機,這里上一張手機接受到郵件后的截圖:
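The single letters in `service_notification_options` and `host_notification_options` in contacts.cfg are easy to misread. As a reading aid, the sketch below spells them out; the dictionaries reflect the standard Nagios meaning of each letter, while the function name `describe` is made up for this example.

```python
# Meaning of each letter in a contact's notification options
SERVICE_OPTIONS = {
    "w": "warning", "u": "unknown", "c": "critical",
    "r": "recovery", "f": "flapping", "s": "scheduled downtime",
}
HOST_OPTIONS = {
    "d": "down", "u": "unreachable",
    "r": "recovery", "f": "flapping", "s": "scheduled downtime",
}


def describe(options, table):
    """Translate a comma-separated option string into readable names."""
    return [table[o.strip()] for o in options.split(",")]


if __name__ == "__main__":
    print(describe("w,u,c,r,f,s", SERVICE_OPTIONS))
    print(describe("d,u,r,f,s", HOST_OPTIONS))
```

Dropping a letter from either option string stops the corresponding notifications, which is one way to reduce SMS noise once the setup is stable.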

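That's it, all done. Having read this far, you may feel like Sun Wukong whose spirit has just returned to his body, momentarily detached from the world around you. Study Ganglia's metrics well and you will no longer need to worry about your operations work: office hours become tea and news, waiting for a text message to tell you where something has gone wrong.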
