您好,登錄后才能下訂單哦!

您好,登錄后才能下訂單哦!

這篇文章主要介紹了Keras函數式API怎么使用的相關知識,內容詳細易懂,操作簡單快捷,具有一定借鑒價值,相信大家閱讀完這篇Keras函數式API怎么使用文章都會有所收獲,下面我們一起來看看吧。

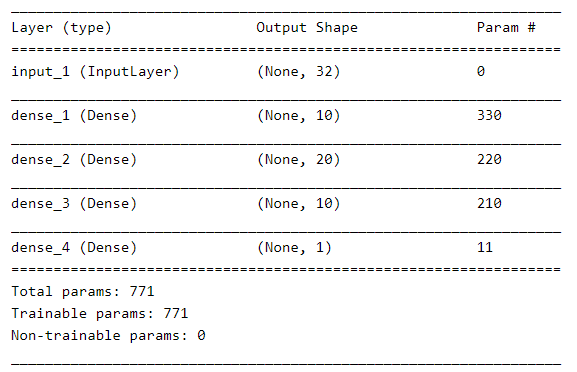

定義了用于二分類的多層感知器模型。

模型輸入32維特征,經過三個全連接層,每層使用relu線性激活函數,并且在輸出層中使用sigmoid激活函數,最后用于二分類。

##------ Multilayer Perceptron ------## from keras.models import Model from keras.layers import Input, Dense from keras import backend as K K.clear_session() # MLP model x = Input(shape=(32,)) hidden1 = Dense(10, activation='relu')(x) hidden2 = Dense(20, activation='relu')(hidden1) hidden3 = Dense(10, activation='relu')(hidden2) output = Dense(1, activation='sigmoid')(hidden3) model = Model(inputs=x, outputs=output) # summarize layers model.summary()

模型的結構和參數如下:

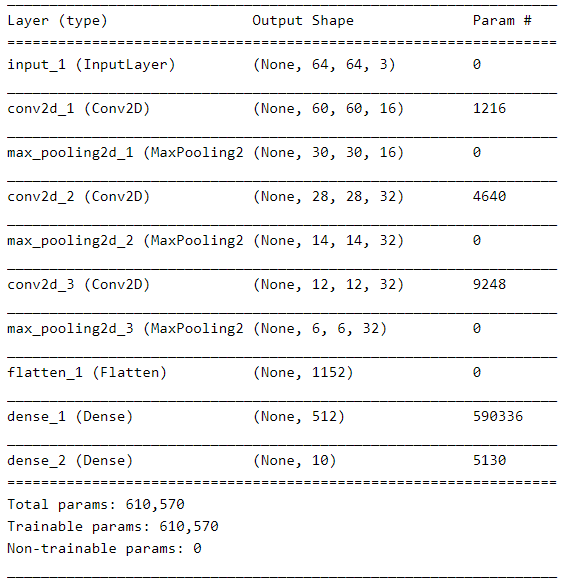

定義用于圖像分類的卷積神經網絡。

該模型接收3通道的64×64圖像作為輸入,然后經過兩個卷積和池化層的序列作為特征提取器,接著過一個全連接層,最后輸出層過softmax激活函數進行10個類別的分類。

##------ Convolutional Neural Network ------## from keras.models import Model from keras.layers import Input from keras.layers import Dense, Flatten from keras.layers import Conv2D, MaxPooling2D from keras import backend as K K.clear_session() # CNN model x = Input(shape=(64,64,3)) conv1 = Conv2D(16, (5,5), activation='relu')(x) pool1 = MaxPooling2D((2,2))(conv1) conv2 = Conv2D(32, (3,3), activation='relu')(pool1) pool2 = MaxPooling2D((2,2))(conv2) conv3 = Conv2D(32, (3,3), activation='relu')(pool2) pool3 = MaxPooling2D((2,2))(conv3) flat = Flatten()(pool3) hidden1 = Dense(512, activation='relu')(flat) output = Dense(10, activation='softmax')(hidden1) model = Model(inputs=x, outputs=output) # summarize layers model.summary()

模型的結構和參數如下:

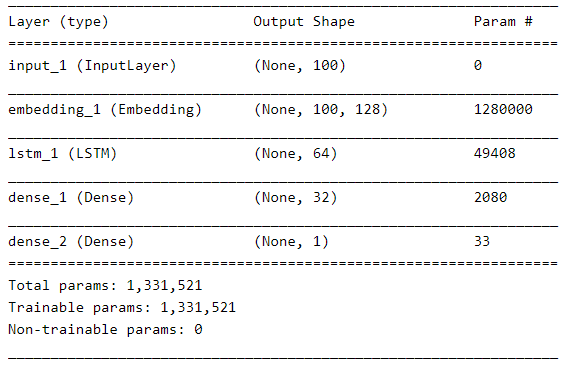

定義一個用于文本序列分類的LSTM網絡。

該模型需要100個時間步長作為輸入,然后經過一個Embedding層,每個時間步變成128維特征表示,然后經過一個LSTM層,LSTM輸出過一個全連接層,最后輸出用sigmoid激活函數用于進行二分類預測。

##------ Recurrent Neural Network ------## from keras.models import Model from keras.layers import Input from keras.layers import Dense, LSTM, Embedding from keras import backend as K K.clear_session() VOCAB_SIZE = 10000 EMBED_DIM = 128 x = Input(shape=(100,), dtype='int32') embedding = Embedding(VOCAB_SIZE, EMBED_DIM, mask_zero=True)(x) hidden1 = LSTM(64)(embedding) hidden2 = Dense(32, activation='relu')(hidden1) output = Dense(1, activation='sigmoid')(hidden2) model = Model(inputs=x, outputs=output) # summarize layers model.summary()

模型的結構和參數如下:

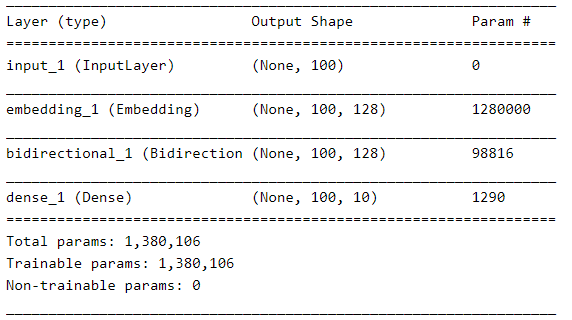

定義一個雙向循環神經網絡,可以用來完成序列標注等任務,相比上面的LSTM網絡,多了一個反向的LSTM,其它設置一樣。

##------ Bidirectional recurrent neural network ------## from keras.models import Model from keras.layers import Input, Embedding from keras.layers import Dense, LSTM, Bidirectional from keras import backend as K K.clear_session() VOCAB_SIZE = 10000 EMBED_DIM = 128 HIDDEN_SIZE = 64 # input layer x = Input(shape=(100,), dtype='int32') # embedding layer embedding = Embedding(VOCAB_SIZE, EMBED_DIM, mask_zero=True)(x) # BiLSTM layer hidden = Bidirectional(LSTM(HIDDEN_SIZE, return_sequences=True))(embedding) # prediction layer output = Dense(10, activation='softmax')(hidden) model = Model(inputs=x, outputs=output) model.summary()

模型的結構和參數如下:

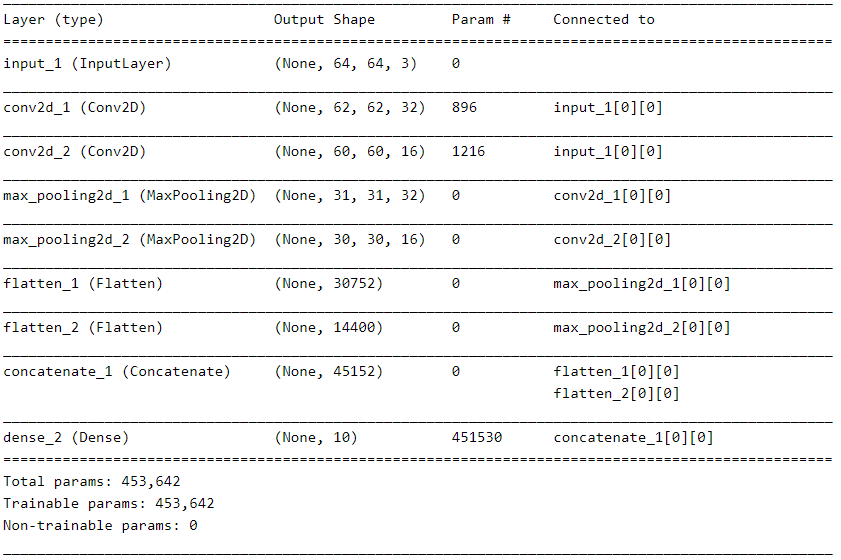

定義了具有不同大小內核的多個卷積層來解釋圖像輸入。

該模型采用尺寸為64×64像素的3通道圖像。

有兩個共享此輸入的CNN特征提取子模型; 第一個內核大小為5x5,第二個內核大小為3x3。

把提取的特征展平為向量然后拼接成一個長向量,然后過一個全連接層,最后輸出層完成10分類。

##------ Shared Input Layer Model ------## from keras.models import Model from keras.layers import Input from keras.layers import Dense, Flatten from keras.layers import Conv2D, MaxPooling2D, Concatenate from keras import backend as K K.clear_session() # input layer x = Input(shape=(64,64,3)) # first feature extractor conv1 = Conv2D(32, (3,3), activation='relu')(x) pool1 = MaxPooling2D((2,2))(conv1) flat1 = Flatten()(pool1) # second feature extractor conv2 = Conv2D(16, (5,5), activation='relu')(x) pool2 = MaxPooling2D((2,2))(conv2) flat2 = Flatten()(pool2) # merge feature merge = Concatenate()([flat1, flat2]) # interpretation layer hidden1 = Dense(128, activation='relu')(merge) # prediction layer output = Dense(10, activation='softmax')(merge) model = Model(inputs=x, outputs=output) model.summary()

模型的結構和參數如下:

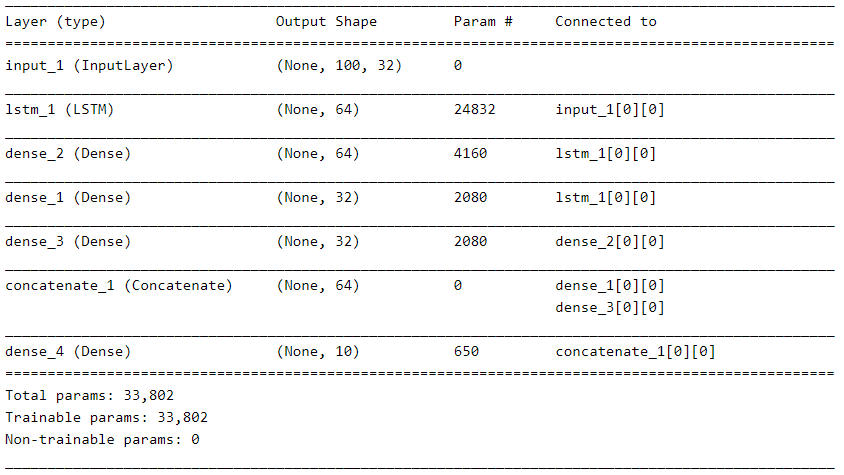

定義一個共享特征抽取層的模型,這里共享的是LSTM層的輸出,具體共享參見代碼

##------ Shared Feature Extraction Layer ------## from keras.models import Model from keras.layers import Input, Embedding from keras.layers import Dense, LSTM, Concatenate from keras import backend as K K.clear_session() # input layer x = Input(shape=(100,32)) # feature extraction extract1 = LSTM(64)(x) # first interpretation model interp1 = Dense(32, activation='relu')(extract1) # second interpretation model interp11 = Dense(64, activation='relu')(extract1) interp12 = Dense(32, activation='relu')(interp11) # merge interpretation merge = Concatenate()([interp1, interp12]) # output layer output = Dense(10, activation='softmax')(merge) model = Model(inputs=x, outputs=output) model.summary()

模型的結構和參數如下:

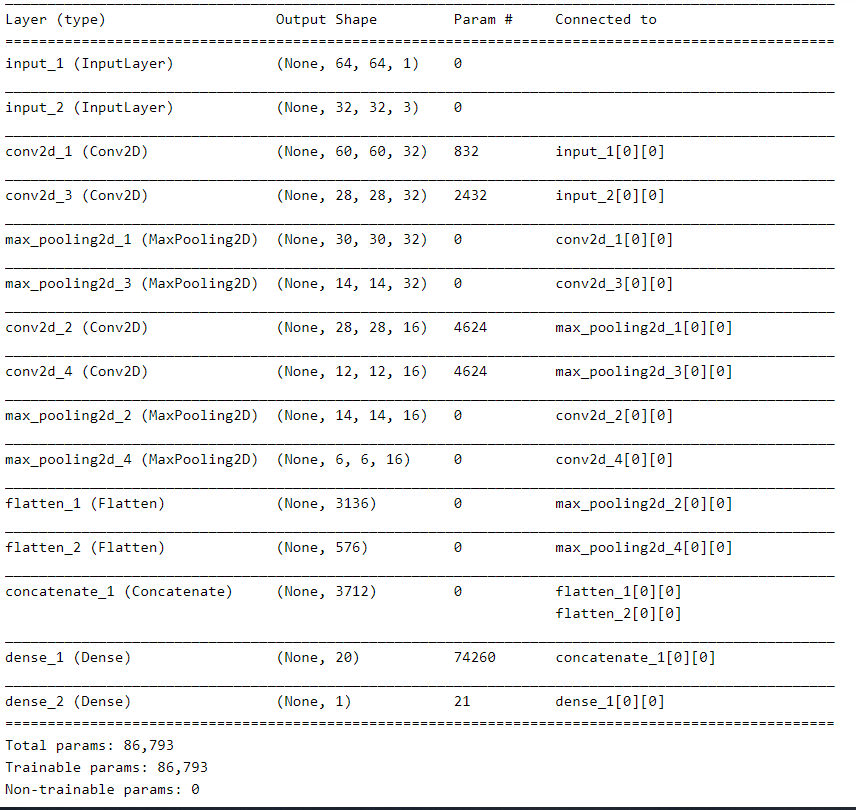

定義有兩個輸入的模型,這里測試的是輸入兩張圖片,一個輸入是單通道的64x64,另一個是3通道的32x32,兩個經過卷積層、池化層后,展平拼接,最后進行二分類。

##------ Multiple Input Model ------## from keras.models import Model from keras.layers import Input from keras.layers import Dense, Flatten from keras.layers import Conv2D, MaxPooling2D, Concatenate from keras import backend as K K.clear_session() # first input model input1 = Input(shape=(64,64,1)) conv11 = Conv2D(32, (5,5), activation='relu')(input1) pool11 = MaxPooling2D(pool_size=(2,2))(conv11) conv12 = Conv2D(16, (3,3), activation='relu')(pool11) pool12 = MaxPooling2D(pool_size=(2,2))(conv12) flat1 = Flatten()(pool12) # second input model input2 = Input(shape=(32,32,3)) conv21 = Conv2D(32, (5,5), activation='relu')(input2) pool21 = MaxPooling2D(pool_size=(2,2))(conv21) conv22 = Conv2D(16, (3,3), activation='relu')(pool21) pool22 = MaxPooling2D(pool_size=(2,2))(conv22) flat2 = Flatten()(pool22) # merge input models merge = Concatenate()([flat1, flat2]) # interpretation model hidden1 = Dense(20, activation='relu')(merge) output = Dense(1, activation='sigmoid')(hidden1) model = Model(inputs=[input1, input2], outputs=output) model.summary()

模型的結構和參數如下:

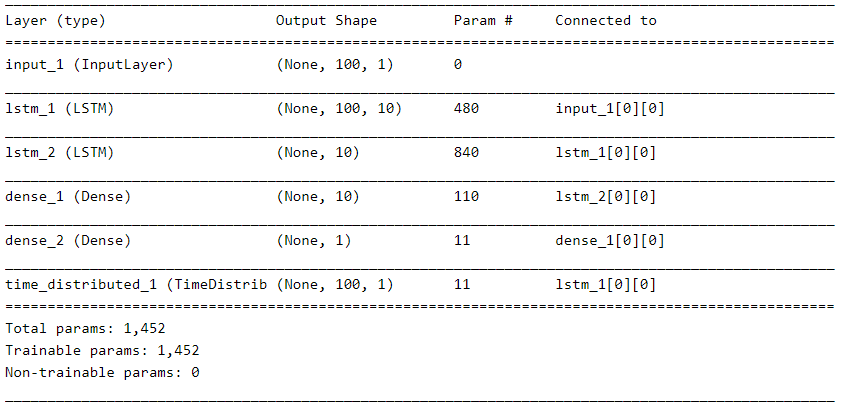

定義有多個輸出的模型,以文本序列輸入LSTM網絡為例,一個輸出是對文本的分類,另外一個輸出是對文本進行序列標注。

##------ Multiple Output Model ------ ## from keras.models import Model from keras.layers import Input from keras.layers import Dense, Flatten, TimeDistributed, LSTM from keras.layers import Conv2D, MaxPooling2D, Concatenate from keras import backend as K K.clear_session() x = Input(shape=(100,1)) extract = LSTM(10, return_sequences=True)(x) class11 = LSTM(10)(extract) class12 = Dense(10, activation='relu')(class11) output1 = Dense(1, activation='sigmoid')(class12) output2 = TimeDistributed(Dense(1, activation='linear'))(extract) model = Model(inputs=x, outputs=[output1, output2]) model.summary()

模型的結構和參數如下:

關于“Keras函數式API怎么使用”這篇文章的內容就介紹到這里,感謝各位的閱讀!相信大家對“Keras函數式API怎么使用”知識都有一定的了解,大家如果還想學習更多知識,歡迎關注億速云行業資訊頻道。

免責聲明:本站發布的內容(圖片、視頻和文字)以原創、轉載和分享為主,文章觀點不代表本網站立場,如果涉及侵權請聯系站長郵箱:is@yisu.com進行舉報,并提供相關證據,一經查實,將立刻刪除涉嫌侵權內容。