
Environment:

Xshell: 5

Xftp: 4

Virtual Box: 5.16

Linux: CentOS-7-x86_64-Minimal-1511

Vim: yum -y install vim-enhanced

JDK: 8

Hadoop: 2.7.3.tar.gz

After installing Linux in Virtual Box, set the network adapter to start automatically at boot:

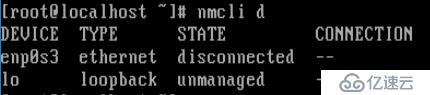

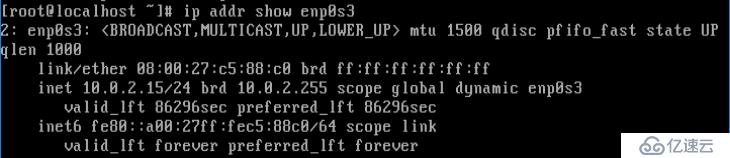

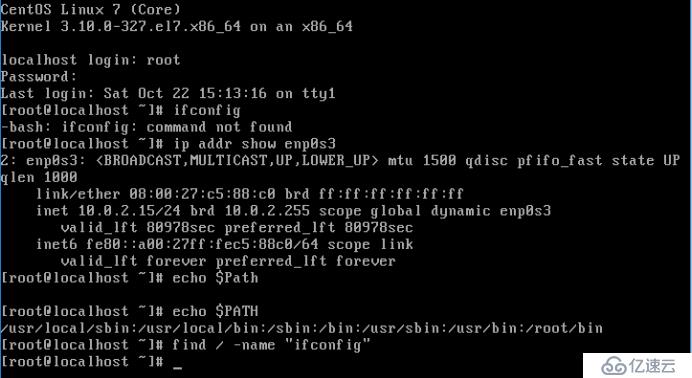

Check the machine's network devices:

nmcli d

The output shows one network adapter: enp0s3

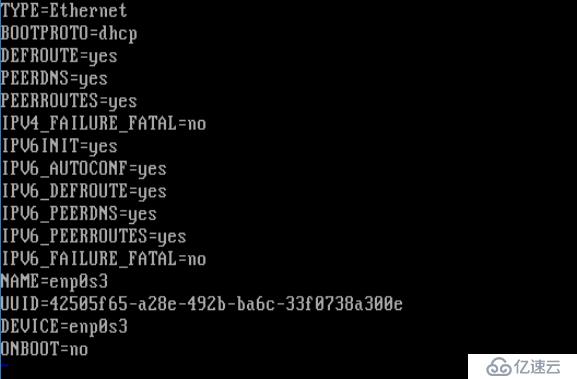

Open the adapter's configuration file with vi:

vi /etc/sysconfig/network-scripts/ifcfg-enp0s3

Change the last line from ONBOOT=no to ONBOOT=yes.
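You can also make the ONBOOT change without an editor. This is a minimal sketch. It assumes GNU sed, as shipped with CentOS 7, and a file that already contains an ONBOOT line. enable_onboot is a hypothetical helper name.

```shell
# Hypothetical helper: set ONBOOT=yes in an ifcfg file so the interface is
# brought up at boot. Assumes GNU sed, whose -i option edits the file in place.
enable_onboot() {
    # Rewrite any existing ONBOOT=... line (e.g. ONBOOT=no) as ONBOOT=yes
    sed -i 's/^ONBOOT=.*/ONBOOT=yes/' "$1"
}
```

For example, run enable_onboot /etc/sysconfig/network-scripts/ifcfg-enp0s3. Then run systemctl restart network, or reboot, to apply the change. If the file has no ONBOOT line, the function leaves it unchanged.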

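Common fields in an ifcfg file:

| Setting | Description |
|---|---|
| DEVICE=eth0 | Device name this file configures; in ifcfg-eth0, for example, it is eth0 |
| BOOTPROTO=static | How the adapter gets its IP address: static, dhcp, or bootp (a statically assigned address, an address from DHCP, or an address from BOOTP) |
| BROADCAST=192.168.1.255 | Broadcast address of the subnet |
| HWADDR=00:07:E9:05:E8:B4 | Physical (MAC) address of the adapter |
| IPADDR=192.168.1.2 | IP address of the adapter when BOOTPROTO is static |
| IPV6INIT=no | Enable (yes) or disable (no) IPv6 |
| IPV6_AUTOCONF=no | Enable (yes) or disable (no) IPv6 autoconfiguration |
| NETMASK=255.255.255.0 | Network mask of the adapter |
| NETWORK=192.168.1.0 | Network address of the adapter |
| ONBOOT=yes | Whether to bring up this interface at boot; yes activates it when the system starts |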
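NETWORK and BROADCAST are not independent settings. Both follow from IPADDR and NETMASK:

- NETWORK is IPADDR AND NETMASK.
- BROADCAST is NETWORK with every host bit set to 1.

This is a minimal sketch using POSIX shell arithmetic. The function names are hypothetical. It handles only dotted-quad IPv4 addresses without leading zeros.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    old_ifs=$IFS; IFS=.
    set -- $1            # split "a.b.c.d" into $1..$4
    IFS=$old_ifs
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Convert a 32-bit integer back to dotted-quad form.
int_to_ip() {
    n=$1
    echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# NETWORK = IPADDR AND NETMASK
network_of() {
    int_to_ip $(( $(ip_to_int "$1") & $(ip_to_int "$2") ))
}

# BROADCAST = NETWORK OR (inverted NETMASK), masked back to 32 bits
broadcast_of() {
    ip=$(ip_to_int "$1"); mask=$(ip_to_int "$2")
    int_to_ip $(( (ip & mask) | (~mask & 0xFFFFFFFF) ))
}
```

For example, broadcast_of 192.168.1.2 255.255.255.0 prints 192.168.1.255. network_of with the same arguments prints 192.168.1.0.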

Install Hadoop

    [root@centosmaster opt]# tar zxf hadoop-2.7.3.tar.gz
    [root@centosmaster opt]# cd hadoop-2.7.3
    [root@centosmaster hadoop-2.7.3]# cd /opt/hadoop-2.7.3/etc/hadoop
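Later steps run hdfs from the home directory (for example, hdfs namenode -format). That works only if Hadoop's bin directory is on PATH, and the article does not show how this was set up. Below is a minimal sketch of one common way to do it. It assumes the /opt/hadoop-2.7.3 location used here, and the lines are meant to be appended to ~/.bashrc.

```shell
# Assumed install location: the tarball extracted into /opt above.
export HADOOP_HOME=/opt/hadoop-2.7.3
# Put the hdfs/yarn commands (bin) and the start/stop scripts (sbin) on PATH.
export PATH="$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH"
```

After source ~/.bashrc, which hdfs should print /opt/hadoop-2.7.3/bin/hdfs.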

core-site.xml

    <configuration>
        <property>
            <name>fs.defaultFS</name>
            <value>hdfs://centosmaster:9000</value>
        </property>
        <property>
            <name>hadoop.tmp.dir</name>
            <value>file:/opt/hadoop-2.7.3/current/tmp</value>
        </property>
        <property>
            <!-- minutes -->
            <name>fs.trash.interval</name>
            <value>8</value>
        </property>
    </configuration>
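fs.defaultFS is a URI of the form hdfs://host:port, and clients use its host and port to reach the NameNode. On a working installation, hdfs getconf -confKey fs.defaultFS prints the effective value. The sketch below splits such a value with POSIX parameter expansion. The function names are hypothetical, and the sketch assumes the URI includes a port.

```shell
# Hypothetical helpers: extract host and port from an hdfs://host:port URI.
fs_uri_host() {
    rest=${1#*://}        # drop the scheme, e.g. "hdfs://"
    rest=${rest%%/*}      # drop any trailing path
    echo "${rest%:*}"     # drop ":port", keeping the host
}

fs_uri_port() {
    rest=${1#*://}
    rest=${rest%%/*}
    echo "${rest##*:}"    # keep only the port
}
```

For example, fs_uri_host hdfs://centosmaster:9000 prints centosmaster and fs_uri_port prints 9000.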

hdfs-site.xml

    <configuration>
        <property>
            <name>dfs.namenode.name.dir</name>
            <value>/opt/hadoop-2.7.3/current/dfs/name</value>
        </property>
        <property>
            <name>dfs.datanode.data.dir</name>
            <value>/opt/hadoop-2.7.3/current/data</value>
        </property>
        <!-- replication factor -->
        <property>
            <name>dfs.replication</name>
            <value>1</value>
        </property>
        <property>
            <name>dfs.webhdfs.enabled</name>
            <value>true</value>
        </property>
        <property>
            <name>dfs.permissions.superusergroup</name>
            <value>staff</value>
        </property>
        <property>
            <name>dfs.permissions.enabled</name>
            <value>false</value>
        </property>
    </configuration>

yarn-site.xml

    <configuration>
        <!-- Site specific YARN configuration properties -->
        <property>
            <name>yarn.resourcemanager.hostname</name>
            <value>centosmaster</value>
        </property>
        <property>
            <name>yarn.nodemanager.aux-services</name>
            <value>mapreduce_shuffle</value>
        </property>
        <property>
            <name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>
            <value>org.apache.hadoop.mapred.ShuffleHandler</value>
        </property>
        <property>
            <name>yarn.resourcemanager.address</name>
            <value>centosmaster:18040</value>
        </property>
        <property>
            <name>yarn.resourcemanager.scheduler.address</name>
            <value>centosmaster:18030</value>
        </property>
        <property>
            <name>yarn.resourcemanager.resource-tracker.address</name>
            <value>centosmaster:18025</value>
        </property>
        <property>
            <name>yarn.resourcemanager.admin.address</name>
            <value>centosmaster:18141</value>
        </property>
        <property>
            <name>yarn.resourcemanager.webapp.address</name>
            <value>centosmaster:18088</value>
        </property>
        <property>
            <name>yarn.log-aggregation-enable</name>
            <value>true</value>
        </property>
        <property>
            <name>yarn.log-aggregation.retain-seconds</name>
            <value>86400</value>
        </property>
        <property>
            <name>yarn.log-aggregation.retain-check-interval-seconds</name>
            <value>86400</value>
        </property>
        <property>
            <name>yarn.nodemanager.remote-app-log-dir</name>
            <value>/tmp/logs</value>
        </property>
        <property>
            <name>yarn.nodemanager.remote-app-log-dir-suffix</name>
            <value>logs</value>
        </property>
    </configuration>
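Hadoop does not warn about property names it does not recognize. A misspelled name, such as yarn.nodemanager.aux.services instead of yarn.nodemanager.aux-services, therefore passes silently at startup. A quick sanity check is to confirm that the expected names actually appear in the file. This is a minimal sketch: missing_props is a hypothetical helper, and it assumes no whitespace inside each name element.

```shell
# Hypothetical helper: print every expected property name that does not
# appear as <name>...</name> in the given Hadoop XML file.
# Returns 0 if all names are present, 1 otherwise.
missing_props() {
    file=$1; shift
    status=0
    for prop in "$@"; do
        # -F: match the name literally (dots are not regex wildcards)
        if ! grep -qF "<name>$prop</name>" "$file"; then
            echo "$prop"
            status=1
        fi
    done
    return $status
}
```

For example: missing_props etc/hadoop/yarn-site.xml yarn.nodemanager.aux-services yarn.resourcemanager.admin.address.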

mapred-site.xml (Hadoop 2.7.3 ships only mapred-site.xml.template; copy it to mapred-site.xml first)

    <configuration>
        <property>
            <name>mapreduce.framework.name</name>
            <value>yarn</value>
        </property>
        <property>
            <name>mapreduce.jobtracker.http.address</name>
            <value>centosmaster:50030</value>
        </property>
        <property>
            <name>mapreduce.jobhistory.address</name>
            <value>centosmaster:10020</value>
        </property>
        <property>
            <name>mapreduce.jobhistory.webapp.address</name>
            <value>centosmaster:19888</value>
        </property>
        <property>
            <name>mapreduce.jobhistory.done-dir</name>
            <value>/jobhistory/done</value>
        </property>
        <property>
            <name>mapreduce.jobhistory.intermediate-done-dir</name>
            <value>/jobhistory/done_intermediate</value>
        </property>
        <property>
            <name>mapreduce.job.ubertask.enable</name>
            <value>true</value>
        </property>
    </configuration>
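mapreduce.framework.name decides where MapReduce jobs run. If it is missing or misspelled, the default value (local) applies and jobs do not run on YARN. The sketch below reads back the value a file actually sets. It is a rough awk sketch, not a full XML parser: get_prop is a hypothetical name, and it assumes each value element follows its name element, whether on separate lines or on one line.

```shell
# Hypothetical helper: print the <value> that follows <name>NAME</name>
# in a Hadoop XML config file. Exits 1 if the name or value is not found.
get_prop() {
    awk -v want="<name>$2</name>" '
        { doc = doc $0 "\n" }                  # slurp the whole file
        END {
            i = index(doc, want)
            if (i == 0) exit 1                 # property name not present
            rest = substr(doc, i + length(want))
            v = index(rest, "<value>")
            if (v == 0) exit 1
            rest = substr(rest, v + length("<value>"))
            e = index(rest, "</value>")
            if (e == 0) exit 1
            print substr(rest, 1, e - 1)       # text between the value tags
        }' "$1"
}
```

For example, get_prop etc/hadoop/mapred-site.xml mapreduce.framework.name should print yarn.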

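Add this host (by hostname) to the slaves file (etc/hadoop/slaves) so that it also runs the slave daemons, DataNode and NodeManager: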

centosmaster

Tell Hadoop which JDK to use by setting JAVA_HOME in hadoop-env.sh:

    vim hadoop-env.sh

    # The java implementation to use.
    export JAVA_HOME=/usr/java/jdk1.8.0_111/
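Hadoop's scripts run $JAVA_HOME/bin/java, so that file must exist and be executable. Compare Problem 1 below, where it was not executable. This is a minimal sketch; check_java_home is a hypothetical helper name.

```shell
# Hypothetical helper: verify that a JDK directory contains an executable java.
check_java_home() {
    java_bin="${1%/}/bin/java"   # strip one trailing slash to avoid "//bin/java"
    if [ ! -e "$java_bin" ]; then
        echo "not found: $java_bin" >&2
        return 1
    fi
    if [ ! -x "$java_bin" ]; then
        echo "not executable: $java_bin" >&2
        return 1
    fi
    return 0
}
```

For example: check_java_home /usr/java/jdk1.8.0_111/.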

Format the HDFS file system:

    [root@centosmaster ~]# hdfs namenode -format
    ************************************************************/
    16/10/23 08:58:31 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]
    16/10/23 08:58:31 INFO namenode.NameNode: createNameNode [-format]
    16/10/23 08:58:31 WARN common.Util: Path /opt/hadoop-2.7.3/current/dfs/name should be specified as a URI in configuration files. Please update hdfs configuration.
    16/10/23 08:58:31 WARN common.Util: Path /opt/hadoop-2.7.3/current/dfs/name should be specified as a URI in configuration files. Please update hdfs configuration.
    Formatting using clusterid: CID-1294bdbb-d45c-49f3-b5c5-3d26934e084f
    16/10/23 08:58:32 INFO namenode.FSNamesystem: No KeyProvider found.
    16/10/23 08:58:32 INFO namenode.FSNamesystem: fsLock is fair:true
    16/10/23 08:58:32 INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit=1000
    16/10/23 08:58:32 INFO blockmanagement.DatanodeManager: dfs.namenode.datanode.registration.ip-hostname-check=true
    16/10/23 08:58:32 INFO blockmanagement.BlockManager: dfs.namenode.startup.delay.block.deletion.sec is set to 000:00:00:00.000
    16/10/23 08:58:32 INFO blockmanagement.BlockManager: The block deletion will start around 2016 Oct 23 08:58:32
    16/10/23 08:58:32 INFO util.GSet: Computing capacity for map BlocksMap
    16/10/23 08:58:32 INFO util.GSet: VM type = 64-bit
    16/10/23 08:58:32 INFO util.GSet: 2.0% max memory 966.7 MB = 19.3 MB
    16/10/23 08:58:32 INFO util.GSet: capacity = 2^21 = 2097152 entries
    16/10/23 08:58:32 INFO blockmanagement.BlockManager: dfs.block.access.token.enable=false
    16/10/23 08:58:32 INFO blockmanagement.BlockManager: defaultReplication = 1
    16/10/23 08:58:32 INFO blockmanagement.BlockManager: maxReplication = 512
    16/10/23 08:58:32 INFO blockmanagement.BlockManager: minReplication = 1
    16/10/23 08:58:32 INFO blockmanagement.BlockManager: maxReplicationStreams = 2
    16/10/23 08:58:32 INFO blockmanagement.BlockManager: replicationRecheckInterval = 3000
    16/10/23 08:58:32 INFO blockmanagement.BlockManager: encryptDataTransfer = false
    16/10/23 08:58:32 INFO blockmanagement.BlockManager: maxNumBlocksToLog = 1000
    16/10/23 08:58:32 INFO namenode.FSNamesystem: fsOwner = root (auth:SIMPLE)
    16/10/23 08:58:32 INFO namenode.FSNamesystem: supergroup = staff
    16/10/23 08:58:32 INFO namenode.FSNamesystem: isPermissionEnabled = false
    16/10/23 08:58:32 INFO namenode.FSNamesystem: HA Enabled: false
    16/10/23 08:58:32 INFO namenode.FSNamesystem: Append Enabled: true
    16/10/23 08:58:32 INFO util.GSet: Computing capacity for map INodeMap
    16/10/23 08:58:32 INFO util.GSet: VM type = 64-bit
    16/10/23 08:58:32 INFO util.GSet: 1.0% max memory 966.7 MB = 9.7 MB
    16/10/23 08:58:32 INFO util.GSet: capacity = 2^20 = 1048576 entries
    16/10/23 08:58:32 INFO namenode.FSDirectory: ACLs enabled? false
    16/10/23 08:58:32 INFO namenode.FSDirectory: XAttrs enabled? true
    16/10/23 08:58:32 INFO namenode.FSDirectory: Maximum size of an xattr: 16384
    16/10/23 08:58:32 INFO namenode.NameNode: Caching file names occuring more than 10 times
    16/10/23 08:58:32 INFO util.GSet: Computing capacity for map cachedBlocks
    16/10/23 08:58:32 INFO util.GSet: VM type = 64-bit
    16/10/23 08:58:32 INFO util.GSet: 0.25% max memory 966.7 MB = 2.4 MB
    16/10/23 08:58:32 INFO util.GSet: capacity = 2^18 = 262144 entries
    16/10/23 08:58:32 INFO namenode.FSNamesystem: dfs.namenode.safemode.threshold-pct = 0.9990000128746033
    16/10/23 08:58:32 INFO namenode.FSNamesystem: dfs.namenode.safemode.min.datanodes = 0
    16/10/23 08:58:32 INFO namenode.FSNamesystem: dfs.namenode.safemode.extension = 30000
    16/10/23 08:58:32 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.window.num.buckets = 10
    16/10/23 08:58:32 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.num.users = 10
    16/10/23 08:58:32 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.windows.minutes = 1,5,25
    16/10/23 08:58:32 INFO namenode.FSNamesystem: Retry cache on namenode is enabled
    16/10/23 08:58:32 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis
    16/10/23 08:58:32 INFO util.GSet: Computing capacity for map NameNodeRetryCache
    16/10/23 08:58:32 INFO util.GSet: VM type = 64-bit
    16/10/23 08:58:32 INFO util.GSet: 0.029999999329447746% max memory 966.7 MB = 297.0 KB
    16/10/23 08:58:32 INFO util.GSet: capacity = 2^15 = 32768 entries
    16/10/23 08:58:32 INFO namenode.FSImage: Allocated new BlockPoolId: BP-1532573559-192.168.0.105-1477184312651
    16/10/23 08:58:32 INFO common.Storage: Storage directory /opt/hadoop-2.7.3/current/dfs/name has been successfully formatted.
    16/10/23 08:58:32 INFO namenode.FSImageFormatProtobuf: Saving image file /opt/hadoop-2.7.3/current/dfs/name/current/fsimage.ckpt_0000000000000000000 using no compression
    16/10/23 08:58:32 INFO namenode.FSImageFormatProtobuf: Image file /opt/hadoop-2.7.3/current/dfs/name/current/fsimage.ckpt_0000000000000000000 of size 346 bytes saved in 0 seconds.
    16/10/23 08:58:32 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0
    16/10/23 08:58:32 INFO util.ExitUtil: Exiting with status 0
    16/10/23 08:58:32 INFO namenode.NameNode: SHUTDOWN_MSG:
    /************************************************************
    SHUTDOWN_MSG: Shutting down NameNode at CentOS_105/192.168.0.105
    ************************************************************/

The log shows that the format succeeded:

INFO common.Storage: Storage directory /opt/hadoop-2.7.3/current/dfs/name has been successfully formatted.
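When formatting from a script, you can automate the same check by searching the captured output for this message and for the zero exit status. This is a minimal sketch. format_succeeded is hypothetical, and it relies on the exact Hadoop 2.7.3 log wording, which may differ in other versions.

```shell
# Hypothetical helper: succeed only if a captured "hdfs namenode -format"
# log reports a formatted storage directory and a zero exit status.
format_succeeded() {
    grep -q 'has been successfully formatted' "$1" &&
        grep -q 'Exiting with status 0' "$1"
}
```

For example: hdfs namenode -format > format.log 2>&1; format_succeeded format.log && echo formatted.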

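The log also warns that the HDFS path should be specified as a URI. To fix this, change dfs.namenode.name.dir in hdfs-site.xml from a plain path to a file:// URI: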

    <property>
        <name>dfs.namenode.name.dir</name>
        <value>file:///opt/hadoop-2.7.3/current/dfs/name</value>
    </property>
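For an absolute local path, conversion to a URI just means prefixing file://. This tiny sketch does that. to_file_uri is hypothetical, and it performs no percent-encoding, so it assumes paths without spaces or special characters.

```shell
# Hypothetical helper: turn an absolute local path into a file:// URI.
to_file_uri() {
    case $1 in
        file:*) echo "$1" ;;            # already a URI, leave unchanged
        /*)     echo "file://$1" ;;     # /opt/... becomes file:///opt/...
        *)      echo "not an absolute path: $1" >&2; return 1 ;;
    esac
}
```

The same conversion can be applied to dfs.datanode.data.dir, which is also given as a plain path in hdfs-site.xml.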

Reformat the file system:

hdfs namenode -format

Check the hostname:

hostnamectl

Change the hostname:

[root@centosmaster ~]# hostnamectl set-hostname "centosmaster"

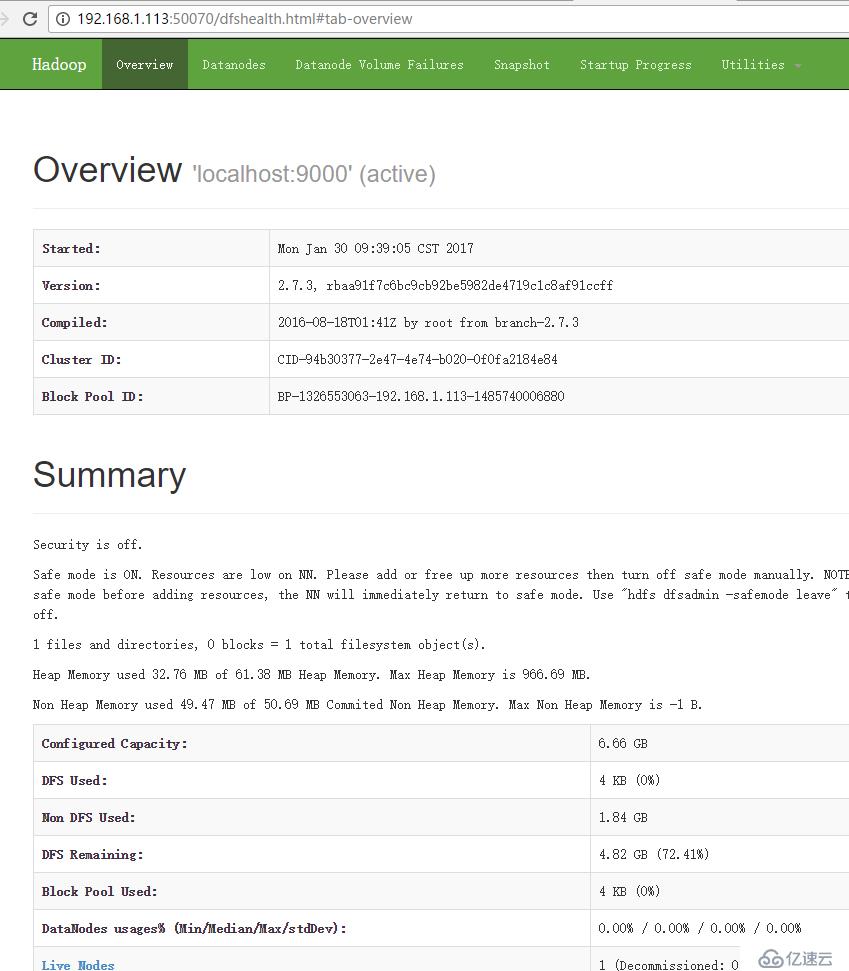

Start Hadoop:

    [root@centosmaster hadoop-2.7.3]# sbin/start-all.sh
    This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh
    Starting namenodes on [localhost]
    localhost: starting namenode, logging to /opt/hadoop-2.7.3/logs/hadoop-root-namenode-centosmaster.out
    centosmaster: starting datanode, logging to /opt/hadoop-2.7.3/logs/hadoop-root-datanode-centosmaster.out
    Starting secondary namenodes [Centosmaster]
    Centosmaster: starting secondarynamenode, logging to /opt/hadoop-2.7.3/logs/hadoop-root-secondarynamenode-centosmaster.out
    starting yarn daemons
    starting resourcemanager, logging to /opt/hadoop-2.7.3/logs/yarn-root-resourcemanager-centosmaster.out
    centosmaster: starting nodemanager, logging to /opt/hadoop-2.7.3/logs/yarn-root-nodemanager-centosmaster.out

Use jps to list the daemons that are running:

    [root@centosmaster hadoop]# jps
    2546 NodeManager
    3090 SecondaryNameNode
    3348 Jps
    2201 DataNode
    2109 NameNode
    2447 ResourceManager
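On this single-node setup, start-all.sh should leave five daemons running:

- NameNode
- DataNode
- SecondaryNameNode
- ResourceManager
- NodeManager

The sketch below checks jps output for each of them. missing_daemons is a hypothetical helper. It reads the output on standard input, so you can test it without a running cluster.

```shell
# Hypothetical helper: read `jps` output on stdin and print each expected
# daemon that is not listed. Returns 0 if none are missing.
missing_daemons() {
    out=$(cat)
    status=0
    for d in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
        # jps prints "<pid> <MainClass>"; compare the class name exactly
        if ! printf '%s\n' "$out" | awk -v d="$d" '$2 == d { found = 1 } END { exit !found }'; then
            echo "$d"
            status=1
        fi
    done
    return $status
}
```

For example, jps | missing_daemons prints nothing and succeeds when all five are up.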

Stop Hadoop:

sbin/stop-all.sh

Verification:

Problem 1: permissions

    [root@CentOS_105 jdk1.8.0_111]# java -version
    bash: /usr/java/jdk1.8.0_111//bin/java: Permission denied

Fix: chmod 777 /usr/java/jdk1.8.0_111/bin/java (chmod 755 is enough to make it executable without also making it world-writable)

Problem 2: configuration

    [root@centos_1 hadoop-2.7.3]# sbin/start-all.sh
    This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh
    Incorrect configuration: namenode address dfs.namenode.servicerpc-address or dfs.namenode.rpc-address is not configured.
    Starting namenodes on []

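Fix: add the following property to etc/hadoop/core-site.xml. fs.default.name is the deprecated older name of fs.defaultFS, so setting fs.defaultFS has the same effect.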

    <property>
        <name>fs.default.name</name>
        <value>hdfs://127.0.0.1:9000</value>
    </property>

Problem 3: hostname

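Cause: Hadoop parses addresses such as the fs.defaultFS value as URIs. A hostname containing special characters, such as the underscore in CentOS_105, is not accepted as a valid URI host, so Hadoop cannot determine the NameNode address.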

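Fix: the hostname in use is invalid. Rename the host to a name without illegal characters such as '_' or '/', for example centosmaster instead of CentOS_105.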
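Under the usual hostname rules (RFC 952 as relaxed by RFC 1123), each label:

- may contain only ASCII letters, digits and hyphens;
- must not begin or end with a hyphen;
- is at most 63 characters long.

The sketch below checks a single-label name such as centosmaster against those rules. is_valid_hostname is hypothetical. Dotted, fully qualified names are not handled.

```shell
# Hypothetical helper: succeed if $1 is a valid single-label hostname.
# Letters, digits and '-' only; no leading/trailing '-'; 1 to 63 characters.
is_valid_hostname() {
    printf '%s\n' "$1" | grep -Eq '^[A-Za-z0-9]([A-Za-z0-9-]{0,61}[A-Za-z0-9])?$'
}
```

For example, is_valid_hostname CentOS_105 fails and is_valid_hostname centosmaster succeeds, so you can run the check before hostnamectl set-hostname.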

See also

Network adapter configuration: http://www.krizna.com/centos/setup-network-centos-7/

JDK installation guide: http://www.cnblogs.com/wangfajun/p/5257899.html
