您好,登錄后才能下訂單哦!

您好,登錄后才能下訂單哦!

這篇文章將為大家詳細講解有關在scrapy中使用selenium實現一個爬取網頁的功能,文章內容質量較高,因此小編分享給大家做個參考,希望大家閱讀完這篇文章后對相關知識有一定的了解。

1.背景

2. 環境

3.原理分析

3.1. 分析request請求的流程

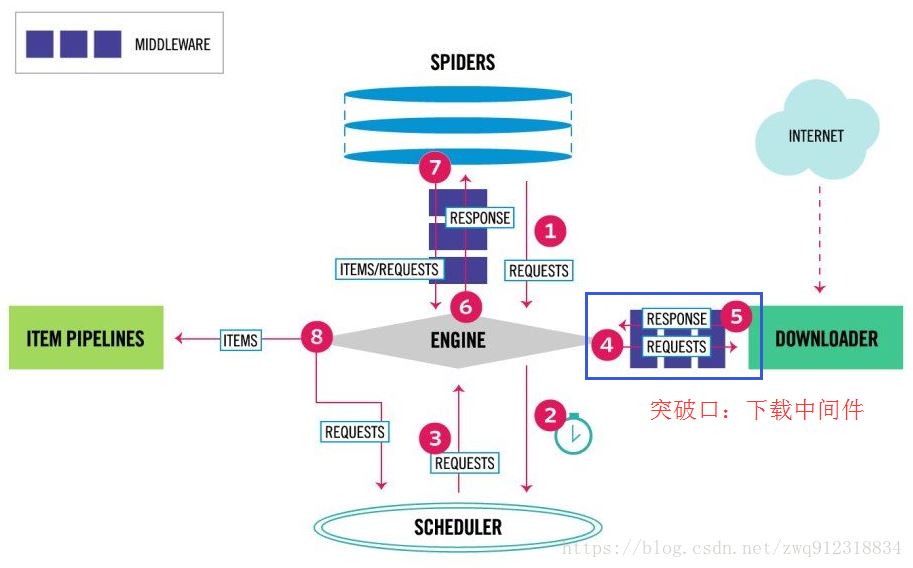

首先看一下scrapy最新的架構圖:

部分流程:

第一:爬蟲引擎生成requests請求,送往scheduler調度模塊,進入等待隊列,等待調度。

第二:scheduler模塊開始調度這些requests,出隊,發往爬蟲引擎。

第三:爬蟲引擎將這些requests送到下載中間件(多個,例如加header,代理,自定義等等)進行處理。

第四:處理完之后,送往Downloader模塊進行下載。從這個處理過程來看,突破口就在下載中間件部分,用selenium直接處理掉request請求。

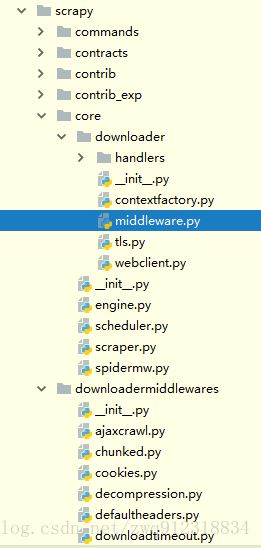

3.2. requests和response中間處理件源碼分析

相關代碼位置:

源碼解析:

# 文件:E:\Miniconda\Lib\site-packages\scrapy\core\downloader\middleware.py

"""

Downloader Middleware manager

See documentation in docs/topics/downloader-middleware.rst

"""

import six

from twisted.internet import defer

from scrapy.http import Request, Response

from scrapy.middleware import MiddlewareManager

from scrapy.utils.defer import mustbe_deferred

from scrapy.utils.conf import build_component_list

class DownloaderMiddlewareManager(MiddlewareManager):

component_name = 'downloader middleware'

@classmethod

def _get_mwlist_from_settings(cls, settings):

# 從settings.py或這custom_setting中拿到自定義的Middleware中間件

'''

'DOWNLOADER_MIDDLEWARES': {

'mySpider.middlewares.ProxiesMiddleware': 400,

# SeleniumMiddleware

'mySpider.middlewares.SeleniumMiddleware': 543,

'scrapy.downloadermiddlewares.useragent.UserAgentMiddleware': None,

},

'''

return build_component_list(

settings.getwithbase('DOWNLOADER_MIDDLEWARES'))

# 將所有自定義Middleware中間件的處理函數添加到對應的methods列表中

def _add_middleware(self, mw):

if hasattr(mw, 'process_request'):

self.methods['process_request'].append(mw.process_request)

if hasattr(mw, 'process_response'):

self.methods['process_response'].insert(0, mw.process_response)

if hasattr(mw, 'process_exception'):

self.methods['process_exception'].insert(0, mw.process_exception)

# 整個下載流程

def download(self, download_func, request, spider):

@defer.inlineCallbacks

def process_request(request):

# 處理request請求,依次經過各個自定義Middleware中間件的process_request方法,前面有加入到list中

for method in self.methods['process_request']:

response = yield method(request=request, spider=spider)

assert response is None or isinstance(response, (Response, Request)), \

'Middleware %s.process_request must return None, Response or Request, got %s' % \

(six.get_method_self(method).__class__.__name__, response.__class__.__name__)

# 這是關鍵地方

# 如果在某個Middleware中間件的process_request中處理完之后,生成了一個response對象

# 那么會直接將這個response return 出去,跳出循環,不再處理其他的process_request

# 之前我們的header,proxy中間件,都只是加個user-agent,加個proxy,并不做任何return值

# 還需要注意一點:就是這個return的必須是Response對象

# 后面我們構造的HtmlResponse正是Response的子類對象

if response:

defer.returnValue(response)

# 如果在上面的所有process_request中,都沒有返回任何Response對象的話

# 最后,會將這個加工過的Request送往download_func,進行下載,返回的就是一個Response對象

# 然后依次經過各個Middleware中間件的process_response方法進行加工,如下

defer.returnValue((yield download_func(request=request,spider=spider)))

@defer.inlineCallbacks

def process_response(response):

assert response is not None, 'Received None in process_response'

if isinstance(response, Request):

defer.returnValue(response)

for method in self.methods['process_response']:

response = yield method(request=request, response=response,

spider=spider)

assert isinstance(response, (Response, Request)), \

'Middleware %s.process_response must return Response or Request, got %s' % \

(six.get_method_self(method).__class__.__name__, type(response))

if isinstance(response, Request):

defer.returnValue(response)

defer.returnValue(response)

@defer.inlineCallbacks

def process_exception(_failure):

exception = _failure.value

for method in self.methods['process_exception']:

response = yield method(request=request, exception=exception,

spider=spider)

assert response is None or isinstance(response, (Response, Request)), \

'Middleware %s.process_exception must return None, Response or Request, got %s' % \

(six.get_method_self(method).__class__.__name__, type(response))

if response:

defer.returnValue(response)

defer.returnValue(_failure)

deferred = mustbe_deferred(process_request, request)

deferred.addErrback(process_exception)

deferred.addCallback(process_response)

return deferred4. 代碼

在settings.py中,配置好selenium參數:

# 文件settings.py中 # ----------- selenium參數配置 ------------- SELENIUM_TIMEOUT = 25 # selenium瀏覽器的超時時間,單位秒 LOAD_IMAGE = True # 是否下載圖片 WINDOW_HEIGHT = 900 # 瀏覽器窗口大小 WINDOW_WIDTH = 900

在spider中,生成request時,標記哪些請求需要走selenium下載:

# 文件mySpider.py中

class mySpider(CrawlSpider):

name = "mySpiderAmazon"

allowed_domains = ['amazon.com']

custom_settings = {

'LOG_LEVEL':'INFO',

'DOWNLOAD_DELAY': 0,

'COOKIES_ENABLED': False, # enabled by default

'DOWNLOADER_MIDDLEWARES': {

# 代理中間件

'mySpider.middlewares.ProxiesMiddleware': 400,

# SeleniumMiddleware 中間件

'mySpider.middlewares.SeleniumMiddleware': 543,

# 將scrapy默認的user-agent中間件關閉

'scrapy.downloadermiddlewares.useragent.UserAgentMiddleware': None,

},

#.....................華麗的分割線.......................

# 生成request時,將是否使用selenium下載的標記,放入到meta中

yield Request(

url = "https://www.amazon.com/",

meta = {'usedSelenium': True, 'dont_redirect': True},

callback = self.parseIndexPage,

errback = self.error

)在下載中間件middlewares.py中,使用selenium抓取頁面(核心部分)

# -*- coding: utf-8 -*-

from selenium import webdriver

from selenium.common.exceptions import TimeoutException

from selenium.webdriver.common.by import By

from selenium.webdriver.support.ui import WebDriverWait

from selenium.webdriver.support import expected_conditions as EC

from selenium.webdriver.common.keys import Keys

from scrapy.http import HtmlResponse

from logging import getLogger

import time

class SeleniumMiddleware():

# 經常需要在pipeline或者中間件中獲取settings的屬性,可以通過scrapy.crawler.Crawler.settings屬性

@classmethod

def from_crawler(cls, crawler):

# 從settings.py中,提取selenium設置參數,初始化類

return cls(timeout=crawler.settings.get('SELENIUM_TIMEOUT'),

isLoadImage=crawler.settings.get('LOAD_IMAGE'),

windowHeight=crawler.settings.get('WINDOW_HEIGHT'),

windowWidth=crawler.settings.get('WINDOW_WIDTH')

)

def __init__(self, timeout=30, isLoadImage=True, windowHeight=None, windowWidth=None):

self.logger = getLogger(__name__)

self.timeout = timeout

self.isLoadImage = isLoadImage

# 定義一個屬于這個類的browser,防止每次請求頁面時,都會打開一個新的chrome瀏覽器

# 這樣,這個類處理的Request都可以只用這一個browser

self.browser = webdriver.Chrome()

if windowHeight and windowWidth:

self.browser.set_window_size(900, 900)

self.browser.set_page_load_timeout(self.timeout) # 頁面加載超時時間

self.wait = WebDriverWait(self.browser, 25) # 指定元素加載超時時間

def process_request(self, request, spider):

'''

用chrome抓取頁面

:param request: Request請求對象

:param spider: Spider對象

:return: HtmlResponse響應

'''

# self.logger.debug('chrome is getting page')

print(f"chrome is getting page")

# 依靠meta中的標記,來決定是否需要使用selenium來爬取

usedSelenium = request.meta.get('usedSelenium', False)

if usedSelenium:

try:

self.browser.get(request.url)

# 搜索框是否出現

input = self.wait.until(

EC.presence_of_element_located((By.XPATH, "//div[@class='nav-search-field ']/input"))

)

time.sleep(2)

input.clear()

input.send_keys("iphone 7s")

# 敲enter鍵, 進行搜索

input.send_keys(Keys.RETURN)

# 查看搜索結果是否出現

searchRes = self.wait.until(

EC.presence_of_element_located((By.XPATH, "//div[@id='resultsCol']"))

)

except Exception as e:

# self.logger.debug(f'chrome getting page error, Exception = {e}')

print(f"chrome getting page error, Exception = {e}")

return HtmlResponse(url=request.url, status=500, request=request)

else:

time.sleep(3)

return HtmlResponse(url=request.url,

body=self.browser.page_source,

request=request,

# 最好根據網頁的具體編碼而定

encoding='utf-8',

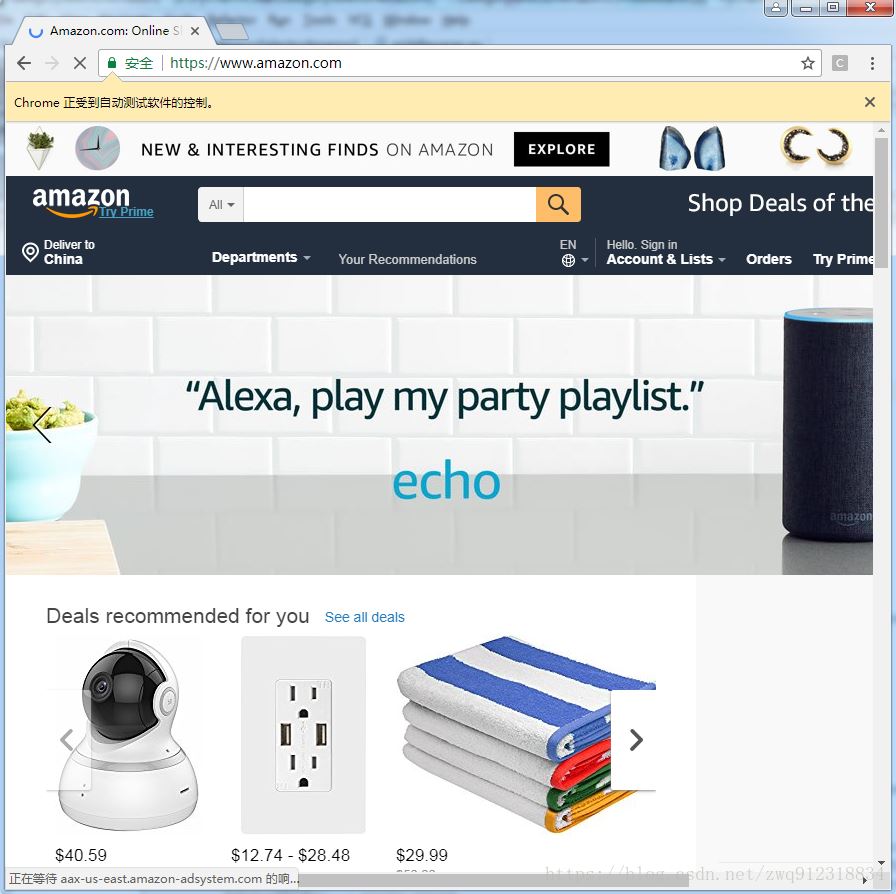

status=200)5. 執行結果

6. 存在的問題

6.1. Spider關閉了,chrome沒有退出。

2018-04-04 09:26:18 [scrapy.statscollectors] INFO: Dumping Scrapy stats:

{'downloader/response_bytes': 2092766,

'downloader/response_count': 2,

'downloader/response_status_count/200': 2,

'finish_reason': 'finished',

'finish_time': datetime.datetime(2018, 4, 4, 1, 26, 16, 763602),

'log_count/INFO': 7,

'request_depth_max': 1,

'response_received_count': 2,

'scheduler/dequeued': 2,

'scheduler/dequeued/memory': 2,

'scheduler/enqueued': 2,

'scheduler/enqueued/memory': 2,

'start_time': datetime.datetime(2018, 4, 4, 1, 25, 48, 301602)}

2018-04-04 09:26:18 [scrapy.core.engine] INFO: Spider closed (finished)

上面,我們是把browser對象放到了Middleware中間件中,只能做process_request和process_response, 沒有說在中間件中介紹如何調用scrapy的close方法。

解決方案:利用信號量的方式,當收到spider_closed信號時,調用browser.quit()

6.2. 當一個項目同時啟動多個spider,會共用到Middleware中的selenium,不利于并發。

因為用scrapy + selenium的方式,只有部分,甚至是一小部分頁面會用到chrome,既然把chrome放到Middleware中有這么多限制,那為什么不能把chrome放到spider里面呢。這樣的好處在于:每個spider都有自己的chrome,這樣當啟動多個spider時,就會有多個chrome,不是所有的spider共用一個chrome,這對我們的并發是有好處的。

解決方案:將chrome的初始化放到spider中,每個spider獨占自己的chrome

7. 改進版代碼

在settings.py中,配置好selenium參數:

# 文件settings.py中 # ----------- selenium參數配置 ------------- SELENIUM_TIMEOUT = 25 # selenium瀏覽器的超時時間,單位秒 LOAD_IMAGE = True # 是否下載圖片 WINDOW_HEIGHT = 900 # 瀏覽器窗口大小 WINDOW_WIDTH = 900

在spider中,生成request時,標記哪些請求需要走selenium下載:

# 文件mySpider.py中

# selenium相關庫

from selenium import webdriver

from selenium.webdriver.support.ui import WebDriverWait

# scrapy 信號相關庫

from scrapy.utils.project import get_project_settings

# 下面這種方式,即將廢棄,所以不用

# from scrapy.xlib.pydispatch import dispatcher

from scrapy import signals

# scrapy最新采用的方案

from pydispatch import dispatcher

class mySpider(CrawlSpider):

name = "mySpiderAmazon"

allowed_domains = ['amazon.com']

custom_settings = {

'LOG_LEVEL':'INFO',

'DOWNLOAD_DELAY': 0,

'COOKIES_ENABLED': False, # enabled by default

'DOWNLOADER_MIDDLEWARES': {

# 代理中間件

'mySpider.middlewares.ProxiesMiddleware': 400,

# SeleniumMiddleware 中間件

'mySpider.middlewares.SeleniumMiddleware': 543,

# 將scrapy默認的user-agent中間件關閉

'scrapy.downloadermiddlewares.useragent.UserAgentMiddleware': None,

},

# 將chrome初始化放到spider中,成為spider中的元素

def __init__(self, timeout=30, isLoadImage=True, windowHeight=None, windowWidth=None):

# 從settings.py中獲取設置參數

self.mySetting = get_project_settings()

self.timeout = self.mySetting['SELENIUM_TIMEOUT']

self.isLoadImage = self.mySetting['LOAD_IMAGE']

self.windowHeight = self.mySetting['WINDOW_HEIGHT']

self.windowWidth = self.mySetting['windowWidth']

# 初始化chrome對象

self.browser = webdriver.Chrome()

if self.windowHeight and self.windowWidth:

self.browser.set_window_size(900, 900)

self.browser.set_page_load_timeout(self.timeout) # 頁面加載超時時間

self.wait = WebDriverWait(self.browser, 25) # 指定元素加載超時時間

super(mySpider, self).__init__()

# 設置信號量,當收到spider_closed信號時,調用mySpiderCloseHandle方法,關閉chrome

dispatcher.connect(receiver = self.mySpiderCloseHandle,

signal = signals.spider_closed

)

# 信號量處理函數:關閉chrome瀏覽器

def mySpiderCloseHandle(self, spider):

print(f"mySpiderCloseHandle: enter ")

self.browser.quit()

#.....................華麗的分割線.......................

# 生成request時,將是否使用selenium下載的標記,放入到meta中

yield Request(

url = "https://www.amazon.com/",

meta = {'usedSelenium': True, 'dont_redirect': True},

callback = self.parseIndexPage,

errback = self.error

)在下載中間件middlewares.py中,使用selenium抓取頁面

# -*- coding: utf-8 -*-

from selenium import webdriver

from selenium.common.exceptions import TimeoutException

from selenium.webdriver.common.by import By

from selenium.webdriver.support.ui import WebDriverWait

from selenium.webdriver.support import expected_conditions as EC

from selenium.webdriver.common.keys import Keys

from scrapy.http import HtmlResponse

from logging import getLogger

import time

class SeleniumMiddleware():

# Middleware中會傳遞進來一個spider,這就是我們的spider對象,從中可以獲取__init__時的chrome相關元素

def process_request(self, request, spider):

'''

用chrome抓取頁面

:param request: Request請求對象

:param spider: Spider對象

:return: HtmlResponse響應

'''

print(f"chrome is getting page")

# 依靠meta中的標記,來決定是否需要使用selenium來爬取

usedSelenium = request.meta.get('usedSelenium', False)

if usedSelenium:

try:

spider.browser.get(request.url)

# 搜索框是否出現

input = spider.wait.until(

EC.presence_of_element_located((By.XPATH, "//div[@class='nav-search-field ']/input"))

)

time.sleep(2)

input.clear()

input.send_keys("iphone 7s")

# 敲enter鍵, 進行搜索

input.send_keys(Keys.RETURN)

# 查看搜索結果是否出現

searchRes = spider.wait.until(

EC.presence_of_element_located((By.XPATH, "//div[@id='resultsCol']"))

)

except Exception as e:

print(f"chrome getting page error, Exception = {e}")

return HtmlResponse(url=request.url, status=500, request=request)

else:

time.sleep(3)

# 頁面爬取成功,構造一個成功的Response對象(HtmlResponse是它的子類)

return HtmlResponse(url=request.url,

body=spider.browser.page_source,

request=request,

# 最好根據網頁的具體編碼而定

encoding='utf-8',

status=200)運行結果(spider結束,執行mySpiderCloseHandle關閉chrome瀏覽器):

['categorySelectorAmazon1.pipelines.MongoPipeline']

2018-04-04 11:56:21 [scrapy.core.engine] INFO: Spider opened

2018-04-04 11:56:21 [scrapy.extensions.logstats] INFO: Crawled 0 pages (at 0 pages/min), scraped 0 items (at 0 items/min)

chrome is getting page

parseProductDetail url = https://www.amazon.com/, status = 200, meta = {'usedSelenium': True, 'dont_redirect': True, 'download_timeout': 25.0, 'proxy': 'http://H37XPSB6V57VU96D:CAB31DAEB9313CE5@proxy.abuyun.com:9020', 'depth': 0}

chrome is getting page

2018-04-04 11:56:54 [scrapy.core.engine] INFO: Closing spider (finished)

mySpiderCloseHandle: enter

2018-04-04 11:56:59 [scrapy.statscollectors] INFO: Dumping Scrapy stats:

{'downloader/response_bytes': 1938619,

'downloader/response_count': 2,

'downloader/response_status_count/200': 2,

'finish_reason': 'finished',

'finish_time': datetime.datetime(2018, 4, 4, 3, 56, 54, 301602),

'log_count/INFO': 7,

'request_depth_max': 1,

'response_received_count': 2,

'scheduler/dequeued': 2,

'scheduler/dequeued/memory': 2,

'scheduler/enqueued': 2,

'scheduler/enqueued/memory': 2,

'start_time': datetime.datetime(2018, 4, 4, 3, 56, 21, 642602)}

2018-04-04 11:56:59 [scrapy.core.engine] INFO: Spider closed (finished)

關于在scrapy中使用selenium實現一個爬取網頁的功能就分享到這里了,希望以上內容可以對大家有一定的幫助,可以學到更多知識。如果覺得文章不錯,可以把它分享出去讓更多的人看到。

免責聲明:本站發布的內容(圖片、視頻和文字)以原創、轉載和分享為主,文章觀點不代表本網站立場,如果涉及侵權請聯系站長郵箱:is@yisu.com進行舉報,并提供相關證據,一經查實,將立刻刪除涉嫌侵權內容。