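How do you create a Scala project with Maven in IntelliJ IDEA? Many people without prior experience get stuck here, so this article walks through the setup step by step, from installing the Scala plugin to running a Spark WordCount program.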

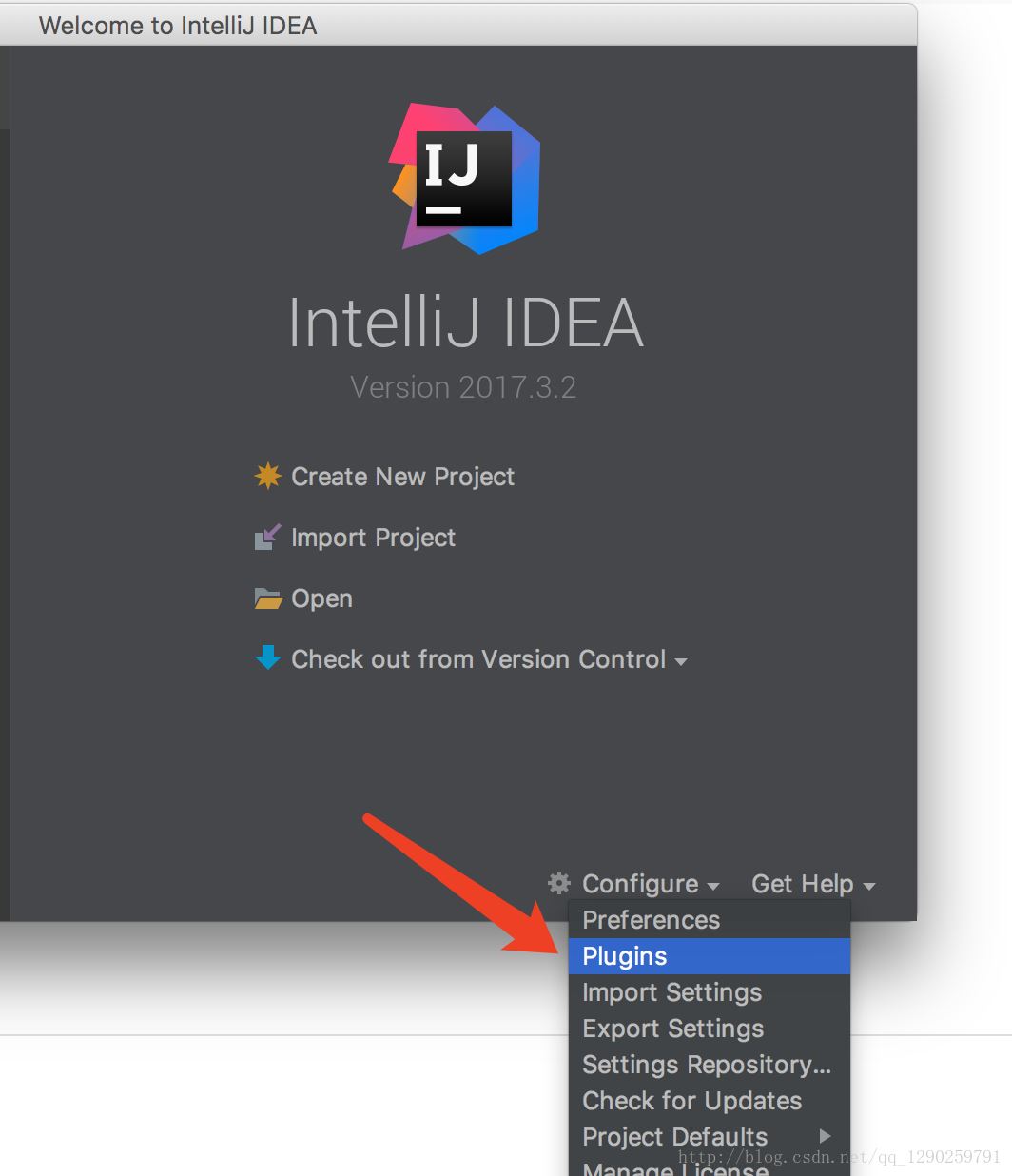

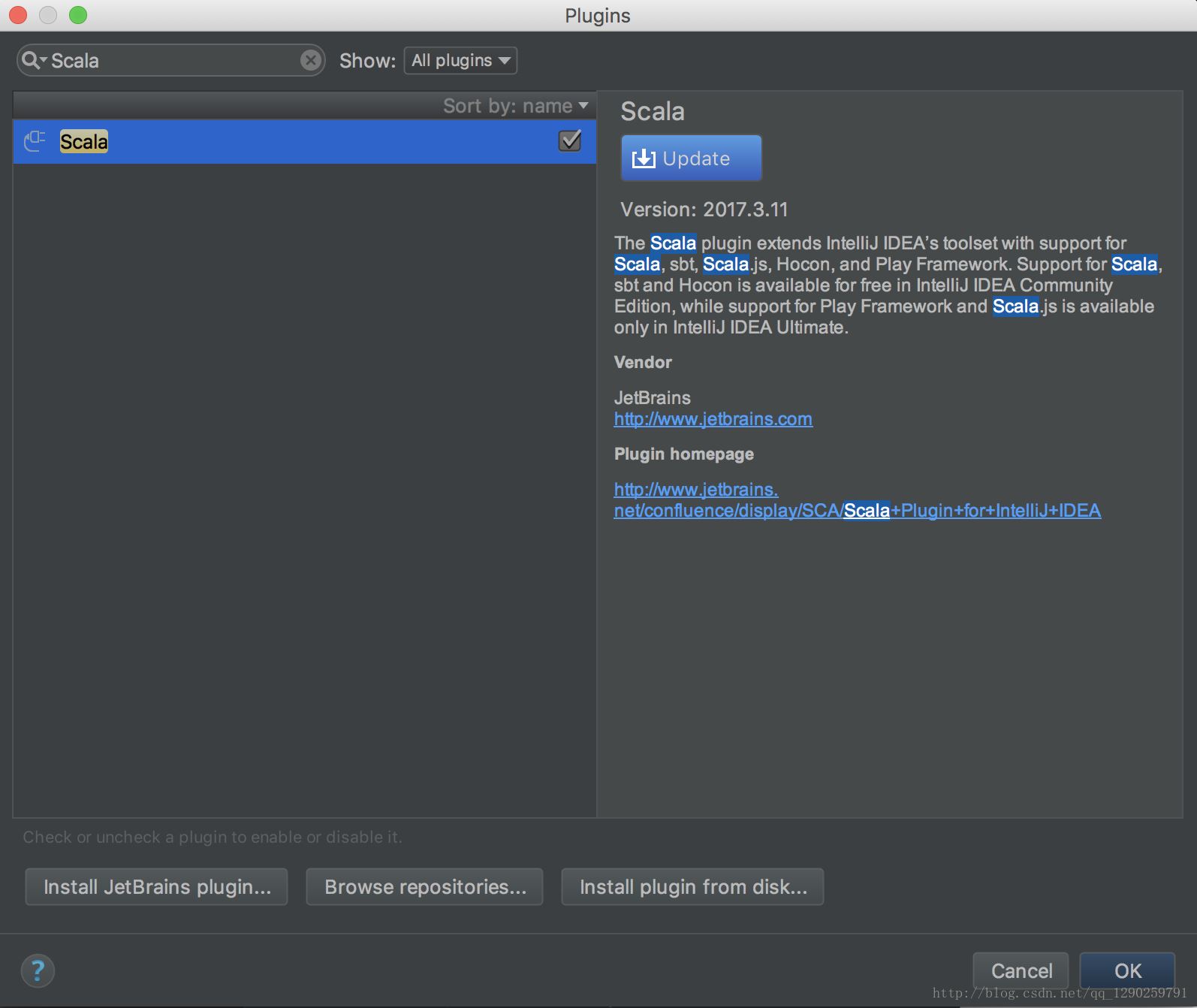

Step 1: Install the Scala plugin in IntelliJ IDEA

The Scala plugin after installation:

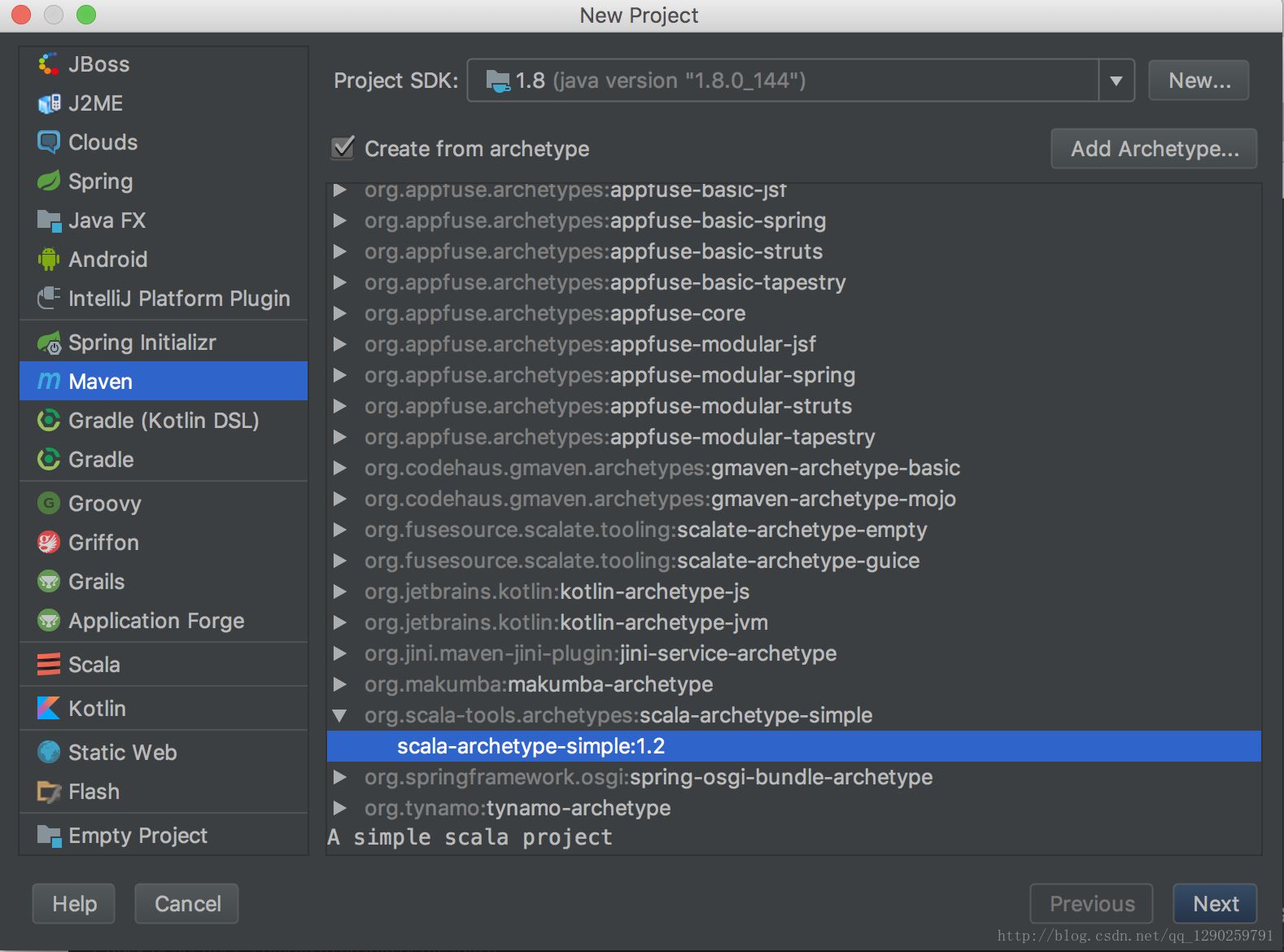

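Step 2: Create the Scala project with Maven

Create a Maven project in the usual way (if you have not done this before, see the separate article on Maven configuration).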

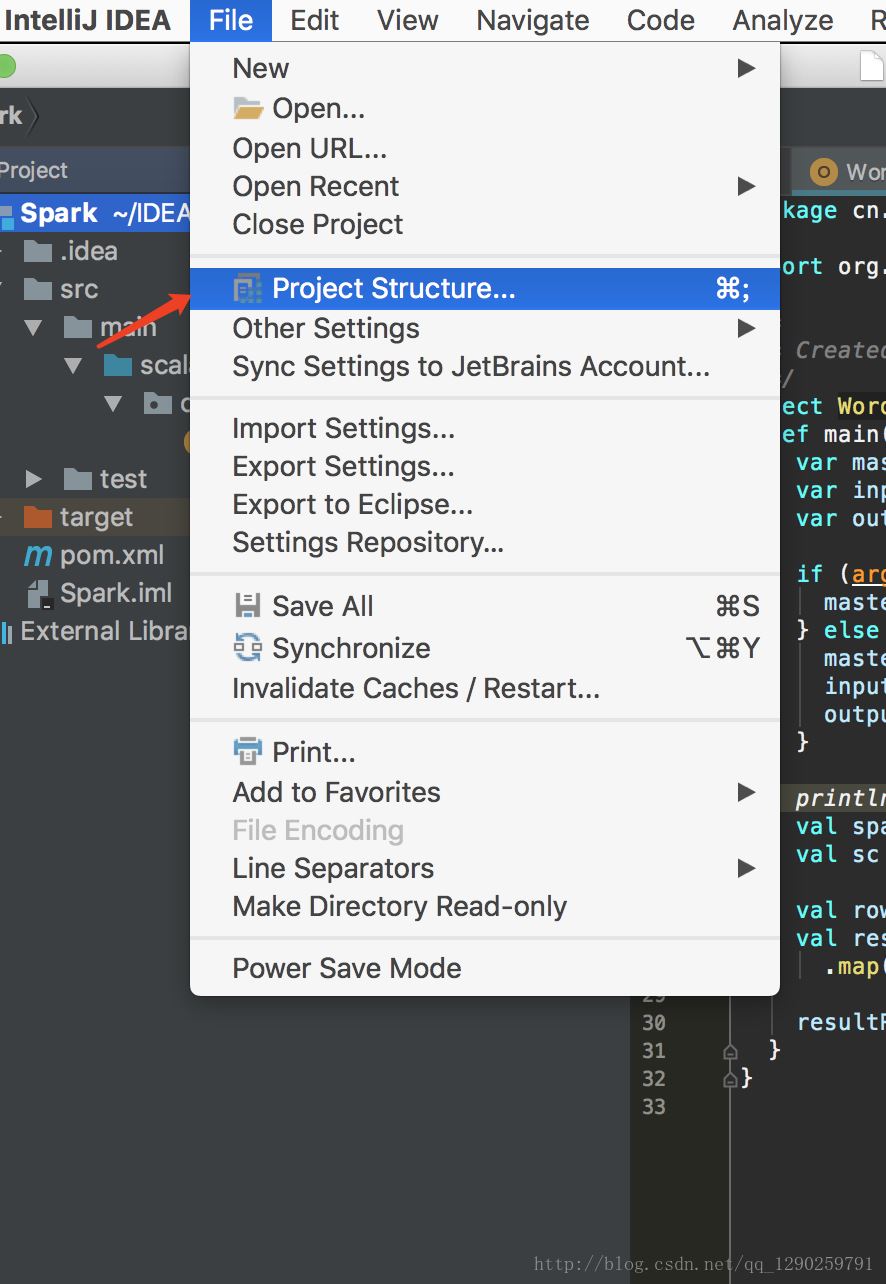

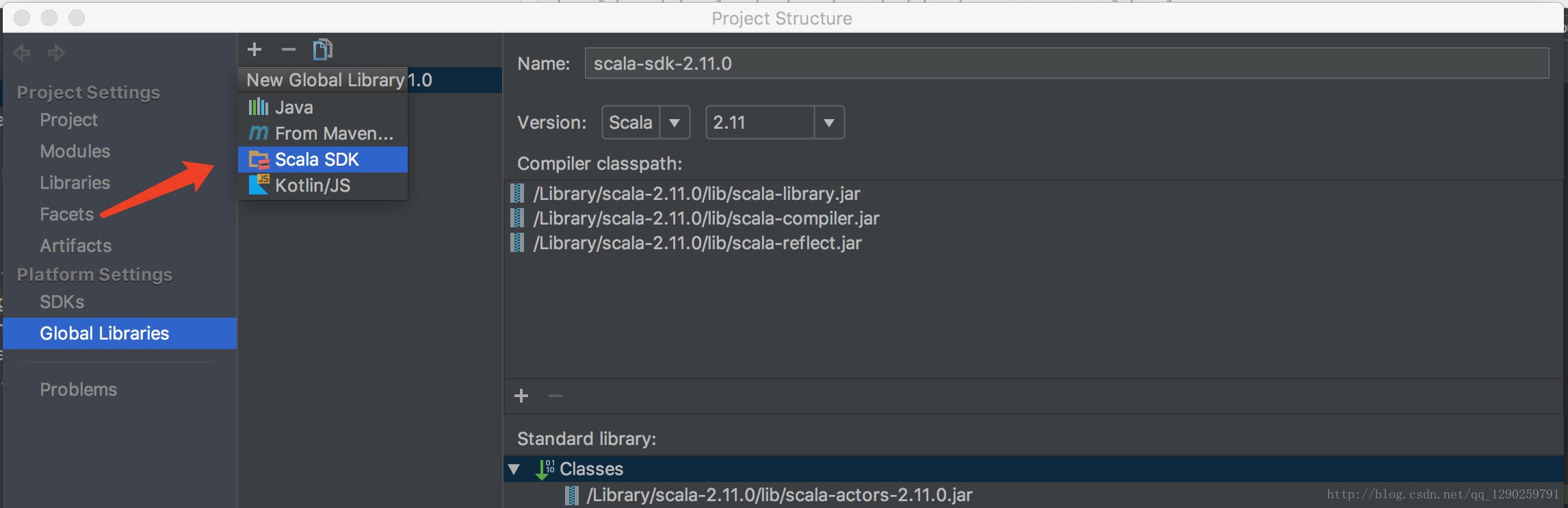

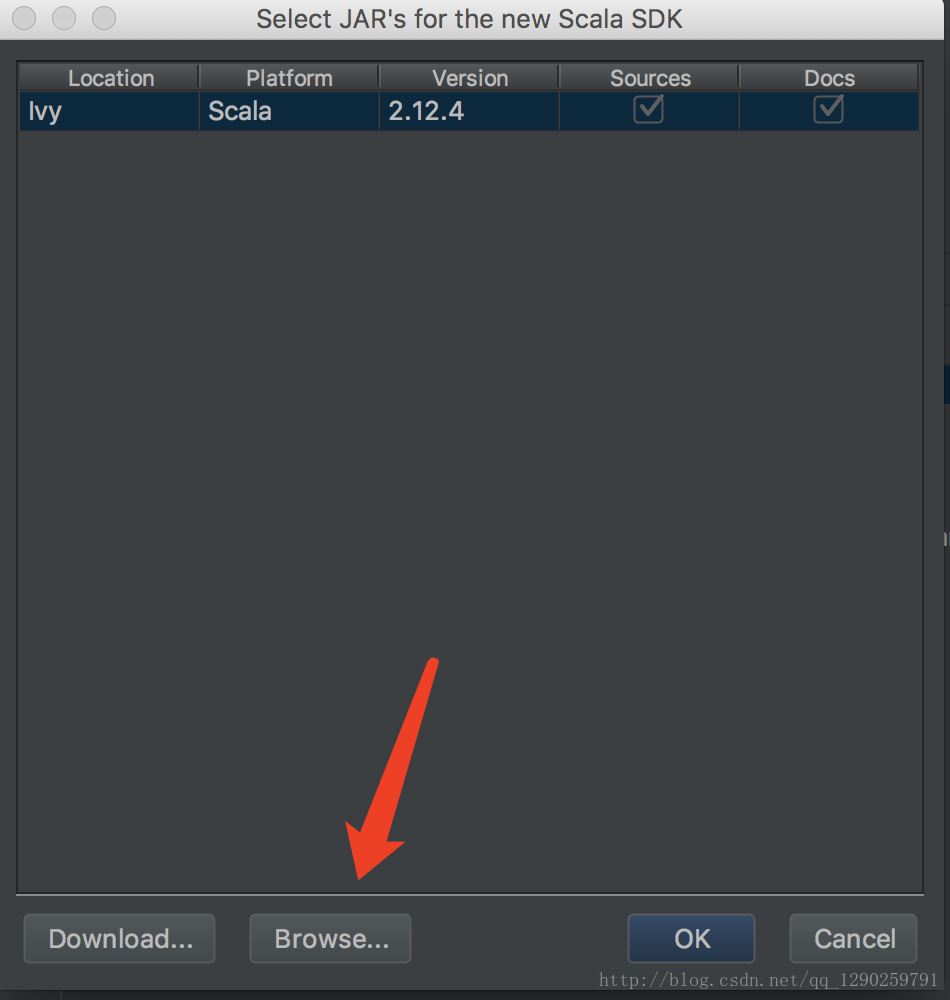

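Step 3: Download and configure Scala

The Spark download page at http://spark.apache.org/downloads.html states: "Note: Starting version 2.0, Spark is built with Scala 2.11 by default." For Spark 2.2 you should therefore download Scala 2.11.

Go to the Scala website at http://www.scala-lang.org/, click the download button, and under the "Other Releases" heading find and download 2.11.0.

Download the build that matches your operating system.

Next, configure the Scala environment variables (the same way as for the JDK).

Run scala -version to check that the configuration works. On success it prints: Scala code runner version 2.11.0 -- Copyright 2002-2013, LAMP/EPFL

Then select the path where you installed Scala.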

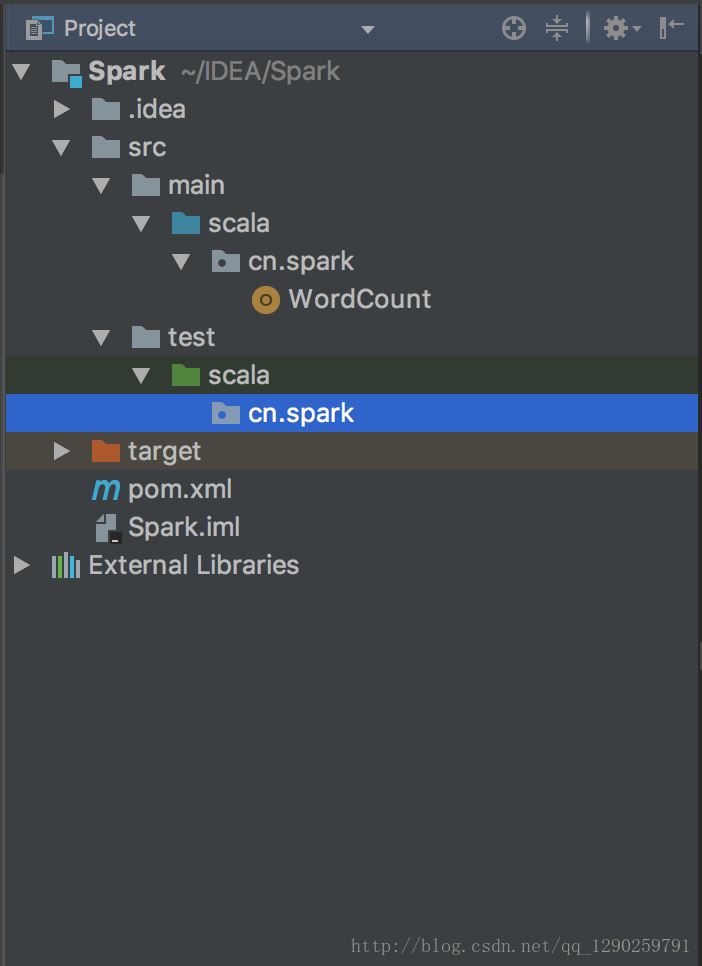
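As a quick check that the project really runs against the expected Scala version, a small program can print the version of the Scala library on its classpath. This is a minimal sketch rather than part of the original setup: the object name ScalaVersionCheck and the helper binaryVersion are made up for illustration, and the expected value 2.11 is taken from the Spark note quoted above.

```scala
import scala.util.Properties

object ScalaVersionCheck {
  // Binary version that the spark-core_2.11 artifact is built for (assumption from the Spark note).
  val expectedBinaryVersion = "2.11"

  /** Reduce a full version such as "2.11.0" to its binary version "2.11". */
  def binaryVersion(full: String): String =
    full.split('.').take(2).mkString(".")

  def main(args: Array[String]): Unit = {
    // Version of the scala-library jar that this program is running against.
    val full = Properties.versionNumberString
    println(s"Scala library version: $full")
    if (binaryVersion(full) != expectedBinaryVersion)
      println(s"Warning: expected a Scala $expectedBinaryVersion.x library")
  }
}
```

If the program prints the warning, the Scala version in use probably does not match the _2.11 suffix of the Spark artifact added in the next step.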

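Step 4: Write the Scala program

Delete the other code that is already in the project; otherwise errors are reported while editing.

Configure the pom.xml file

Add the Spark dependency to it: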

    <properties>
        <scala.version>2.11.0</scala.version>
        <spark.version>2.2.1</spark.version>
    </properties>

    <dependency>
        <groupId>org.apache.spark</groupId>
        <!-- The _2.11 suffix is the Scala binary version and must match scala.version -->
        <artifactId>spark-core_2.11</artifactId>
        <version>${spark.version}</version>
    </dependency>

The complete pom.xml:

    <project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/maven-v4_0_0.xsd">
        <modelVersion>4.0.0</modelVersion>
        <groupId>cn.spark</groupId>
        <artifactId>Spark</artifactId>
        <version>1.0-SNAPSHOT</version>
        <inceptionYear>2008</inceptionYear>

        <properties>
            <scala.version>2.11.0</scala.version>
            <spark.version>2.2.1</spark.version>
        </properties>

        <!-- Note: scala-tools.org is no longer online; the plugin is also published on Maven Central -->
        <pluginRepositories>
            <pluginRepository>
                <id>scala-tools.org</id>
                <name>Scala-Tools Maven2 Repository</name>
                <url>http://scala-tools.org/repo-releases</url>
            </pluginRepository>
        </pluginRepositories>

        <dependencies>
            <dependency>
                <groupId>org.scala-lang</groupId>
                <artifactId>scala-library</artifactId>
                <version>${scala.version}</version>
            </dependency>
            <dependency>
                <groupId>org.apache.spark</groupId>
                <artifactId>spark-core_2.11</artifactId>
                <version>${spark.version}</version>
            </dependency>
            <dependency>
                <groupId>junit</groupId>
                <artifactId>junit</artifactId>
                <version>4.4</version>
                <scope>test</scope>
            </dependency>
            <dependency>
                <groupId>org.specs</groupId>
                <artifactId>specs</artifactId>
                <version>1.2.5</version>
                <scope>test</scope>
            </dependency>
        </dependencies>

        <build>
            <sourceDirectory>src/main/scala</sourceDirectory>
            <testSourceDirectory>src/test/scala</testSourceDirectory>
            <plugins>
                <!-- Note: maven-scala-plugin is no longer maintained; its successor is net.alchim31.maven:scala-maven-plugin -->
                <plugin>
                    <groupId>org.scala-tools</groupId>
                    <artifactId>maven-scala-plugin</artifactId>
                    <executions>
                        <execution>
                            <goals>
                                <goal>compile</goal>
                                <goal>testCompile</goal>
                            </goals>
                        </execution>
                    </executions>
                    <configuration>
                        <scalaVersion>${scala.version}</scalaVersion>
                        <args>
                            <!-- Spark 2.2 runs on Java 8 or later, so a newer target may be more appropriate -->
                            <arg>-target:jvm-1.5</arg>
                        </args>
                    </configuration>
                </plugin>
                <plugin>
                    <groupId>org.apache.maven.plugins</groupId>
                    <artifactId>maven-eclipse-plugin</artifactId>
                    <configuration>
                        <downloadSources>true</downloadSources>
                        <buildcommands>
                            <buildcommand>ch.epfl.lamp.sdt.core.scalabuilder</buildcommand>
                        </buildcommands>
                        <additionalProjectnatures>
                            <projectnature>ch.epfl.lamp.sdt.core.scalanature</projectnature>
                        </additionalProjectnatures>
                        <classpathContainers>
                            <classpathContainer>org.eclipse.jdt.launching.JRE_CONTAINER</classpathContainer>
                            <classpathContainer>ch.epfl.lamp.sdt.launching.SCALA_CONTAINER</classpathContainer>
                        </classpathContainers>
                    </configuration>
                </plugin>
            </plugins>
        </build>

        <reporting>
            <plugins>
                <plugin>
                    <groupId>org.scala-tools</groupId>
                    <artifactId>maven-scala-plugin</artifactId>
                    <configuration>
                        <scalaVersion>${scala.version}</scalaVersion>
                    </configuration>
                </plugin>
            </plugins>
        </reporting>
    </project>

Write the WordCount file:

    package cn.spark

    import org.apache.spark.{SparkConf, SparkContext}

    /**
     * Created by hubo on 2018/1/13
     */
    object WordCount {
      def main(args: Array[String]): Unit = {
        // Defaults; override with 1 argument (master URL)
        // or 3 arguments (master URL, input path, output path).
        var masterUrl = "local"
        var inputPath = "/Users/huwenbo/Desktop/a.txt"
        var outputPath = "/Users/huwenbo/Desktop/out"

        if (args.length == 1) {
          masterUrl = args(0)
        } else if (args.length == 3) {
          masterUrl = args(0)
          inputPath = args(1)
          outputPath = args(2)
        }

        println(s"masterUrl: $masterUrl, inputPath: $inputPath, outputPath: $outputPath")

        val sparkConf = new SparkConf().setMaster(masterUrl).setAppName("WordCount")
        val sc = new SparkContext(sparkConf)

        // Split each line on whitespace, pair every word with 1, then sum the counts per word.
        val rowRdd = sc.textFile(inputPath)
        val resultRdd = rowRdd.flatMap(line => line.split("\\s+"))
          .map(word => (word, 1)).reduceByKey(_ + _)

        // saveAsTextFile fails if the output directory already exists.
        resultRdd.saveAsTextFile(outputPath)

        // Release the Spark resources once the job is done.
        sc.stop()
      }
    }
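To see what the flatMap / map / reduceByKey pipeline computes without starting Spark, the same steps can be sketched on an ordinary Scala collection. The names LocalWordCount and countWords are hypothetical, groupBy followed by a sum stands in for Spark's reduceByKey, and the filter for empty tokens is an addition that the WordCount job above does not have.

```scala
object LocalWordCount {
  /** Count words with the same steps as the Spark job, but on a plain Scala collection. */
  def countWords(lines: Seq[String]): Map[String, Int] =
    lines
      .flatMap(line => line.split("\\s+"))   // same tokenization as the Spark job
      .filter(_.nonEmpty)                    // addition: drop empty tokens from blank lines or leading whitespace
      .map(word => (word, 1))                // pair every word with a count of 1
      .groupBy { case (word, _) => word }    // local stand-in for the grouping done by reduceByKey
      .map { case (word, pairs) => (word, pairs.map(_._2).sum) }

  def main(args: Array[String]): Unit = {
    val sample = Seq("hello spark", "hello scala")
    // Sort by word so the printed order is stable.
    countWords(sample).toSeq.sortBy(_._1).foreach {
      case (word, count) => println(s"$word\t$count")
    }
  }
}
```

Running the sample should report hello twice and spark and scala once each. The Spark version writes pairs of the same kind to the part files under the output path.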
