您好,登錄后才能下訂單哦!

您好,登錄后才能下訂單哦!

這篇文章給大家分享的是有關PyTorch中自適應池化Adaptive Pooling的示例分析的內容。小編覺得挺實用的,因此分享給大家做個參考,一起跟隨小編過來看看吧。

簡介

自適應池化Adaptive Pooling是PyTorch含有的一種池化層,在PyTorch的中有六種形式:

自適應最大池化Adaptive Max Pooling:

torch.nn.AdaptiveMaxPool1d(output_size)

torch.nn.AdaptiveMaxPool2d(output_size)

torch.nn.AdaptiveMaxPool3d(output_size)

自適應平均池化Adaptive Average Pooling:

torch.nn.AdaptiveAvgPool1d(output_size)

torch.nn.AdaptiveAvgPool2d(output_size)

torch.nn.AdaptiveAvgPool3d(output_size)

具體可見官方文檔。

官方給出的例子: >>> # target output size of 5x7 >>> m = nn.AdaptiveMaxPool2d((5,7)) >>> input = torch.randn(1, 64, 8, 9) >>> output = m(input) >>> output.size() torch.Size([1, 64, 5, 7]) >>> # target output size of 7x7 (square) >>> m = nn.AdaptiveMaxPool2d(7) >>> input = torch.randn(1, 64, 10, 9) >>> output = m(input) >>> output.size() torch.Size([1, 64, 7, 7]) >>> # target output size of 10x7 >>> m = nn.AdaptiveMaxPool2d((None, 7)) >>> input = torch.randn(1, 64, 10, 9) >>> output = m(input) >>> output.size() torch.Size([1, 64, 10, 7])

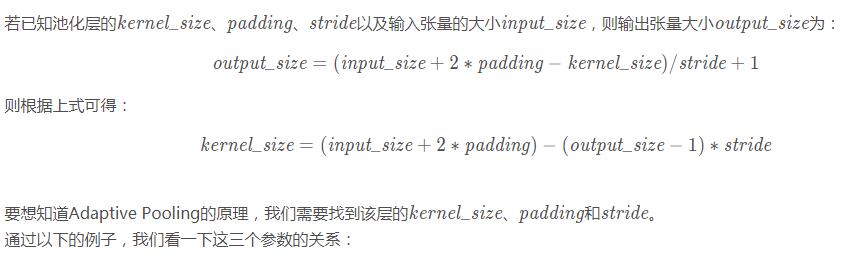

Adaptive Pooling特殊性在于,輸出張量的大小都是給定的output_size output\_sizeoutput_size。例如輸入張量大小為(1, 64, 8, 9),設定輸出大小為(5,7),通過Adaptive Pooling層,可以得到大小為(1, 64, 5, 7)的張量。

原理

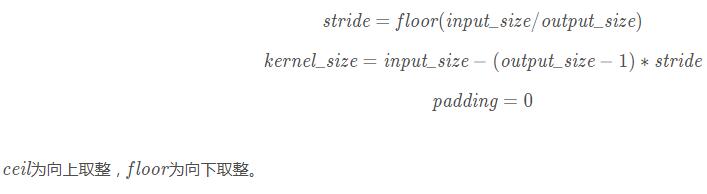

>>> inputsize = 9 >>> outputsize = 4 >>> input = torch.randn(1, 1, inputsize) >>> input tensor([[[ 1.5695, -0.4357, 1.5179, 0.9639, -0.4226, 0.5312, -0.5689, 0.4945, 0.1421]]]) >>> m1 = nn.AdaptiveMaxPool1d(outputsize) >>> m2 = nn.MaxPool1d(kernel_size=math.ceil(inputsize / outputsize), stride=math.floor(inputsize / outputsize), padding=0) >>> output1 = m1(input) >>> output2 = m2(input) >>> output1 tensor([[[1.5695, 1.5179, 0.5312, 0.4945]]]) torch.Size([1, 1, 4]) >>> output2 tensor([[[1.5695, 1.5179, 0.5312, 0.4945]]]) torch.Size([1, 1, 4])

通過實驗發現:

下面是Adaptive Average Pooling的c++源碼部分。

template <typename scalar_t>

static void adaptive_avg_pool2d_out_frame(

scalar_t *input_p,

scalar_t *output_p,

int64_t sizeD,

int64_t isizeH,

int64_t isizeW,

int64_t osizeH,

int64_t osizeW,

int64_t istrideD,

int64_t istrideH,

int64_t istrideW)

{

int64_t d;

#pragma omp parallel for private(d)

for (d = 0; d < sizeD; d++)

{

/* loop over output */

int64_t oh, ow;

for(oh = 0; oh < osizeH; oh++)

{

int istartH = start_index(oh, osizeH, isizeH);

int iendH = end_index(oh, osizeH, isizeH);

int kH = iendH - istartH;

for(ow = 0; ow < osizeW; ow++)

{

int istartW = start_index(ow, osizeW, isizeW);

int iendW = end_index(ow, osizeW, isizeW);

int kW = iendW - istartW;

/* local pointers */

scalar_t *ip = input_p + d*istrideD + istartH*istrideH + istartW*istrideW;

scalar_t *op = output_p + d*osizeH*osizeW + oh*osizeW + ow;

/* compute local average: */

scalar_t sum = 0;

int ih, iw;

for(ih = 0; ih < kH; ih++)

{

for(iw = 0; iw < kW; iw++)

{

scalar_t val = *(ip + ih*istrideH + iw*istrideW);

sum += val;

}

}

/* set output to local average */

*op = sum / kW / kH;

}

}

}

}感謝各位的閱讀!關于“PyTorch中自適應池化Adaptive Pooling的示例分析”這篇文章就分享到這里了,希望以上內容可以對大家有一定的幫助,讓大家可以學到更多知識,如果覺得文章不錯,可以把它分享出去讓更多的人看到吧!

免責聲明:本站發布的內容(圖片、視頻和文字)以原創、轉載和分享為主,文章觀點不代表本網站立場,如果涉及侵權請聯系站長郵箱:is@yisu.com進行舉報,并提供相關證據,一經查實,將立刻刪除涉嫌侵權內容。