This article walks step by step through integrating Kafka 2.2.0 with Spring Boot 2.x.

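Kafka has evolved quickly in recent years, and enterprises use it more and more widely. Integrating Kafka into Spring Boot is fairly straightforward, but pay close attention to the versions you use and to Kafka's basic configuration, because that is where most of the pitfalls lie.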

Spring Boot version: 2.1.4

Kafka version: 2.2.0

JDK: 1.8

Maven dependencies (`pom.xml`):

    <?xml version="1.0" encoding="UTF-8"?>
    <project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
        <modelVersion>4.0.0</modelVersion>
        <parent>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-parent</artifactId>
            <version>2.1.4.RELEASE</version>
            <relativePath/> <!-- lookup parent from repository -->
        </parent>
        <groupId>com.example</groupId>
        <artifactId>demo</artifactId>
        <version>0.0.1-SNAPSHOT</version>
        <name>kafkademo</name>
        <description>Demo project for Spring Boot</description>
        <properties>
            <java.version>1.8</java.version>
        </properties>
        <dependencies>
            <dependency>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-starter-web</artifactId>
            </dependency>
            <dependency>
                <groupId>mysql</groupId>
                <artifactId>mysql-connector-java</artifactId>
                <scope>runtime</scope>
            </dependency>
            <dependency>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-starter-test</artifactId>
                <scope>test</scope>
            </dependency>
            <dependency>
                <groupId>org.springframework.kafka</groupId>
                <artifactId>spring-kafka</artifactId>
                <version>2.2.0.RELEASE</version>
            </dependency>
            <dependency>
                <groupId>com.google.code.gson</groupId>
                <artifactId>gson</artifactId>
                <version>2.7</version>
            </dependency>
        </dependencies>
        <build>
            <plugins>
                <plugin>
                    <groupId>org.springframework.boot</groupId>
                    <artifactId>spring-boot-maven-plugin</artifactId>
                </plugin>
            </plugins>
        </build>
    </project>

Kafka settings in `application.properties`:

    # Broker address (replace with your own)
    spring.kafka.bootstrap-servers=2.1.1.1:9092
    spring.kafka.consumer.group-id=test-consumer-group
    spring.kafka.consumer.key-deserializer=org.apache.kafka.common.serialization.StringDeserializer
    spring.kafka.consumer.value-deserializer=org.apache.kafka.common.serialization.StringDeserializer
    spring.kafka.producer.key-serializer=org.apache.kafka.common.serialization.StringSerializer
    spring.kafka.producer.value-serializer=org.apache.kafka.common.serialization.StringSerializer
    # Uncomment for verbose logging
    #logging.level.root=debug
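Spring Boot's Kafka auto-configuration turns these `spring.kafka.*` entries into native Kafka client settings. As a rough illustration of that mapping, the sketch below builds the equivalent consumer and producer settings with plain `java.util.Properties`. It spells the keys as string literals instead of using the `ConsumerConfig`/`ProducerConfig` constants so that it compiles without the Kafka client jar; the class name `KafkaClientProps` is made up for this example, and the broker address `2.1.1.1:9092` is copied from the file above.

```java
import java.util.Properties;

// Illustrative sketch (hypothetical class): the raw Kafka client keys that the
// spring.kafka.* entries in application.properties correspond to.
final class KafkaClientProps {

    // Broker address copied from application.properties; replace with your own.
    static final String BOOTSTRAP = "2.1.1.1:9092";

    static Properties consumerProps() {
        Properties p = new Properties();
        p.setProperty("bootstrap.servers", BOOTSTRAP);      // spring.kafka.bootstrap-servers
        p.setProperty("group.id", "test-consumer-group");    // spring.kafka.consumer.group-id
        p.setProperty("key.deserializer",                    // spring.kafka.consumer.key-deserializer
                "org.apache.kafka.common.serialization.StringDeserializer");
        p.setProperty("value.deserializer",                  // spring.kafka.consumer.value-deserializer
                "org.apache.kafka.common.serialization.StringDeserializer");
        return p;
    }

    static Properties producerProps() {
        Properties p = new Properties();
        p.setProperty("bootstrap.servers", BOOTSTRAP);      // spring.kafka.bootstrap-servers
        p.setProperty("key.serializer",                      // spring.kafka.producer.key-serializer
                "org.apache.kafka.common.serialization.StringSerializer");
        p.setProperty("value.serializer",                    // spring.kafka.producer.value-serializer
                "org.apache.kafka.common.serialization.StringSerializer");
        return p;
    }

    public static void main(String[] args) {
        consumerProps().list(System.out);
        producerProps().list(System.out);
    }
}
```

Without Spring, the same `Properties` objects could be passed to `new KafkaConsumer<>(props)` or `new KafkaProducer<>(props)` from the Kafka client library.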

Message entity:

    package com.example.demo.model;

    import java.util.Date;

    // Payload that is serialized to JSON with Gson before being sent to Kafka.
    public class Messages {

        private Long id;
        private String msg;
        private Date sendTime;

        public Long getId() {
            return id;
        }

        public void setId(Long id) {
            this.id = id;
        }

        public String getMsg() {
            return msg;
        }

        public void setMsg(String msg) {
            this.msg = msg;
        }

        public Date getSendTime() {
            return sendTime;
        }

        public void setSendTime(Date sendTime) {
            this.sendTime = sendTime;
        }
    }

Producer, which serializes a `Messages` object to JSON and sends it to the `newtopic` topic:

    package com.example.demo.service;

    import com.example.demo.model.Messages;
    import com.google.gson.Gson;
    import com.google.gson.GsonBuilder;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.kafka.core.KafkaTemplate;
    import org.springframework.kafka.support.SendResult;
    import org.springframework.stereotype.Service;
    import org.springframework.util.concurrent.ListenableFuture;

    import java.util.Date;

    @Service
    public class KafkaSender {

        @Autowired
        private KafkaTemplate<String, String> kafkaTemplate;

        private final Gson gson = new GsonBuilder().create();

        public void send() {
            Messages message = new Messages();
            message.setId(System.currentTimeMillis());
            message.setMsg("123");
            message.setSendTime(new Date());
            // send() is asynchronous; the returned future is not inspected here.
            ListenableFuture<SendResult<String, String>> future =
                    kafkaTemplate.send("newtopic", gson.toJson(message));
        }
    }

Consumer, which listens on the same topic and prints every record whose value is not null:

    package com.example.demo.service;

    import org.apache.kafka.clients.consumer.ConsumerRecord;
    import org.springframework.kafka.annotation.KafkaListener;
    import org.springframework.stereotype.Service;

    import java.util.Optional;

    @Service
    public class KafkaReceiver {

        // The consumer group comes from spring.kafka.consumer.group-id.
        @KafkaListener(topics = {"newtopic"})
        public void listen(ConsumerRecord<?, ?> record) {
            // Skip records with a null value.
            Optional.ofNullable(record.value()).ifPresent(message -> {
                System.out.println("record = " + record);
                System.out.println("message = " + message);
            });
        }
    }

In the startup (`main`) method, simulate a producer that sends 1,000 messages to Kafka, one every 300 ms:

    package com.example.demo;

    import com.example.demo.service.KafkaSender;
    import org.springframework.boot.SpringApplication;
    import org.springframework.boot.autoconfigure.SpringBootApplication;
    import org.springframework.context.ConfigurableApplicationContext;

    @SpringBootApplication
    public class KafkademoApplication {

        public static void main(String[] args) {
            ConfigurableApplicationContext context = SpringApplication.run(KafkademoApplication.class, args);
            KafkaSender sender = context.getBean(KafkaSender.class);
            // Send 1,000 messages, pausing 300 ms between sends.
            for (int i = 0; i < 1000; i++) {
                sender.send();
                try {
                    Thread.sleep(300);
                } catch (InterruptedException e) {
                    // Restore the interrupt flag and stop sending.
                    Thread.currentThread().interrupt();
                    break;
                }
            }
        }
    }
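The `send()` method above discards the `ListenableFuture` returned by `kafkaTemplate.send(...)`, so a failed send (for example, the connection timeout described below) surfaces only in the client logs. To keep this example runnable without Spring or a broker, the sketch below uses the JDK's `CompletableFuture.whenComplete` as a stand-in for a send callback; `fakeSend` is a hypothetical placeholder for the real send call and is not part of any library.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.atomic.AtomicInteger;

// Stand-in sketch: CompletableFuture plays the role of the future returned by
// KafkaTemplate.send(...). fakeSend is hypothetical, not a Kafka or Spring API.
final class SendCallbackSketch {

    static final AtomicInteger ok = new AtomicInteger();
    static final AtomicInteger failed = new AtomicInteger();

    // Hypothetical placeholder for kafkaTemplate.send(topic, payload):
    // completes normally if the broker is reachable, exceptionally otherwise.
    static CompletableFuture<String> fakeSend(String payload, boolean brokerReachable) {
        CompletableFuture<String> f = new CompletableFuture<>();
        if (brokerReachable) {
            f.complete("sent " + payload.length() + " chars");
        } else {
            f.completeExceptionally(new RuntimeException("simulated timeout"));
        }
        return f;
    }

    // Attach success and failure handling instead of ignoring the future.
    static void sendAndReport(String payload, boolean brokerReachable) {
        fakeSend(payload, brokerReachable).whenComplete((result, ex) -> {
            if (ex == null) {
                ok.incrementAndGet();
                System.out.println("ok: " + result);
            } else {
                failed.incrementAndGet();
                System.err.println("send failed: " + ex.getMessage());
            }
        });
    }

    public static void main(String[] args) {
        sendAndReport("{\"msg\":\"123\"}", true);
        sendAndReport("{\"msg\":\"123\"}", false);
        System.out.println("ok=" + ok.get() + ", failed=" + failed.get());
    }
}
```

With the real template in spring-kafka 2.2, the same idea is roughly `kafkaTemplate.send("newtopic", json).addCallback(result -> ..., ex -> ...)`, using `ListenableFuture.addCallback(SuccessCallback, FailureCallback)`.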

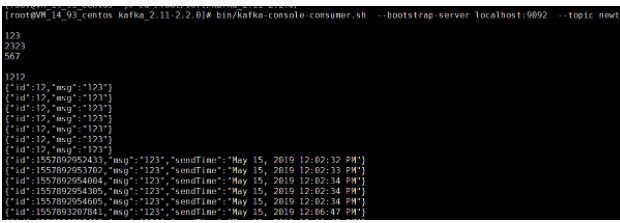

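Consuming the messages directly from the command line

Problem: the producer times out when connecting to Kafka, with a stack trace such as: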

    at org.apache.kafka.common.network.NetworkReceive.readFrom(NetworkReceive.java:119)
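When the producer times out, a quick first check is whether the broker port is reachable at all from the machine running the application. The sketch below is a hypothetical diagnostic helper (not part of Kafka or Spring) that attempts a plain TCP connection with `java.net.Socket` and an explicit connect timeout; the address `2.1.1.1:9092` is taken from the configuration above, and the 3-second timeout is an arbitrary choice.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Hypothetical diagnostic helper: can this host open a TCP connection to the broker?
final class BrokerReachability {

    static boolean canConnect(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Connection refused, host unknown or unreachable, or connect timed out.
            return false;
        }
    }

    public static void main(String[] args) {
        // Broker address from application.properties; replace with your own.
        System.out.println("broker reachable: " + canConnect("2.1.1.1", 9092, 3000));
    }
}
```

A successful connection here does not rule out a broker-side configuration problem: the client uses the bootstrap address only for its first request and then connects to the host and port the broker advertises, so a broker that advertises an unreachable address can still cause timeouts.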

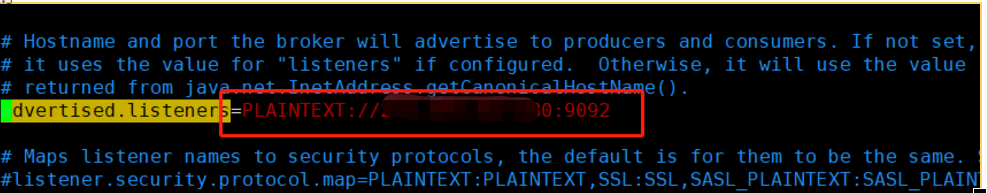

Solution:

Edit `server.properties` on the Kafka broker, replacing the default configuration with an explicit IP + port form, then restart Kafka.
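The exact lines that were changed are not reproduced above. Assuming they are the broker's `listeners` and `advertised.listeners` settings (a common cause of this symptom, because clients connect to whatever address the broker advertises), the stock `config/server.properties` ships them commented out as `#listeners=PLAINTEXT://:9092` and `#advertised.listeners=PLAINTEXT://your.host.name:9092`, and the IP + port form would be something like `PLAINTEXT://2.1.1.1:9092`. The hypothetical helper below, which is not a Kafka tool, splits such a value into protocol, host, and port and flags a missing host.

```java
// Hypothetical helper (not part of Kafka): inspect a listener value of the
// form PROTOCOL://host:port, e.g. "PLAINTEXT://2.1.1.1:9092".
final class ListenerCheck {

    // Returns {protocol, host, port}; host is empty for values like "PLAINTEXT://:9092".
    static String[] parse(String listener) {
        int sep = listener.indexOf("://");
        if (sep < 0) {
            throw new IllegalArgumentException("missing '://': " + listener);
        }
        String protocol = listener.substring(0, sep);
        String hostPort = listener.substring(sep + 3);
        int colon = hostPort.lastIndexOf(':');
        if (colon < 0) {
            throw new IllegalArgumentException("missing port: " + listener);
        }
        String host = hostPort.substring(0, colon);
        String port = hostPort.substring(colon + 1);
        Integer.parseInt(port); // throws NumberFormatException if the port is not numeric
        return new String[] {protocol, host, port};
    }

    // True when the value names an explicit host, i.e. the "IP + port" form.
    static boolean hasExplicitHost(String listener) {
        return !parse(listener)[1].isEmpty();
    }

    public static void main(String[] args) {
        System.out.println(hasExplicitHost("PLAINTEXT://:9092"));        // false
        System.out.println(hasExplicitHost("PLAINTEXT://2.1.1.1:9092")); // true
    }
}
```

The check only confirms that a host is present; a placeholder such as `your.host.name` passes it, so the value must still be an address the clients can actually reach.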
