After starting spark-shell, querying a Hive table fails with an error.

$SPARK_HOME/bin/spark-shell

spark.sql("select * from student.student ").show()

Caused by: java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient

at org.apache.hadoop.hive.metastore.MetaStoreUtils.newInstance(MetaStoreUtils.java:1523)

at org.apache.hadoop.hive.metastore.RetryingMetaSto

Caused by: org.datanucleus.store.rdbms.connectionpool.DatastoreDriverNotFoundException:

The specified datastore driver ("com.mysql.jdbc.Driver") was not found in the

CLASSPATH. Please check your CLASSPATH specification,

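and the name of the driver.

Spark failed to connect to the MySQL database that stores the Hive Metastore, because Spark does not have the mysql-connector JAR (the MySQL JDBC driver) on its classpath.

Some people would say: just cp the JAR into $SPARK_HOME/jars. Sorry, but that is absolutely not acceptable in production. Not every Spark application needs the MySQL driver, so the JAR should be specified with --jars when the job is submitted, with multiple JARs separated by commas. Note: my MySQL version is 5.1.73.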
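Since --jars expects one comma-separated list, a small helper can build that list from individual paths. The following is a minimal sketch, not part of the original setup: the function name join_jars and the second JAR path are hypothetical, and the command is only printed so the sketch runs without Spark installed.

```shell
# Hypothetical helper: join all arguments with commas, the separator --jars expects.
# The body runs in a subshell so the IFS change does not leak to the caller.
join_jars() (
  IFS=,
  echo "$*"
)

# The second path is a made-up placeholder, for illustration only.
JARS=$(join_jars "$HOME/softwares/mysql-connector-java-5.1.47.jar" "$HOME/softwares/another-dependency.jar")

# Print the command rather than running it.
echo "spark-shell --jars $JARS"
```

Paths containing commas would not work with this approach, since the comma is the list separator.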

[hadoop@hadoop003 spark]$ spark-shell --jars ~/softwares/mysql-connector-java-5.1.47.jar

19/05/21 08:02:55 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

Spark context Web UI available at http://hadoop003:4040

Spark context available as 'sc' (master = local[*], app id = local-1558440185051).

Spark session available as 'spark'.

Welcome to

      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /___/ .__/\_,_/_/ /_/\_\   version 2.4.2
      /_/

Using Scala version 2.11.12 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_131)

Type in expressions to have them evaluated.

Type :help for more information.

scala> spark.sql("select * from student.student ").show()

19/05/21 08:04:42 WARN DataNucleus.General: Plugin (Bundle) "org.datanucleus" is already registered. Ensure you dont have multiple JAR versions of the same plugin in the classpath. The URL "file:/home/hadoop/app/spark-2.4.2-bin-hadoop-2.6.0-cdh6.7.0/jars/datanucleus-core-3.2.10.jar" is already registered, and you are trying to register an identical plugin located at URL "file:/home/hadoop/app/spark/jars/datanucleus-core-3.2.10.jar."

19/05/21 08:04:42 WARN DataNucleus.General: Plugin (Bundle) "org.datanucleus.api.jdo" is already registered. Ensure you dont have multiple JAR versions of the same plugin in the classpath. The URL "file:/home/hadoop/app/spark/jars/datanucleus-api-jdo-3.2.6.jar" is already registered, and you are trying to register an identical plugin located at URL "file:/home/hadoop/app/spark-2.4.2-bin-hadoop-2.6.0-cdh6.7.0/jars/datanucleus-api-jdo-3.2.6.jar."

19/05/21 08:04:42 WARN DataNucleus.General: Plugin (Bundle) "org.datanucleus.store.rdbms" is already registered. Ensure you dont have multiple JAR versions of the same plugin in the classpath. The URL "file:/home/hadoop/app/spark-2.4.2-bin-hadoop-2.6.0-cdh6.7.0/jars/datanucleus-rdbms-3.2.9.jar" is already registered, and you are trying to register an identical plugin located at URL "file:/home/hadoop/app/spark/jars/datanucleus-rdbms-3.2.9.jar."

19/05/21 08:04:45 ERROR metastore.ObjectStore: Version information found in metastore differs 1.1.0 from expected schema version 1.2.0. Schema verififcation is disabled hive.metastore.schema.verification so setting version.

19/05/21 08:04:46 WARN metastore.ObjectStore: Failed to get database global_temp, returning NoSuchObjectException

+------+--------+--------------+--------------------+

|stu_id|stu_name| stu_phone_num| stu_email|

+------+--------+--------------+--------------------+

| 1| Burke|1-300-746-8446|ullamcorper.velit...|

| 2| Kamal|1-668-571-5046|pede.Suspendisse@...|

| 3| Olga|1-956-311-1686|Aenean.eget.metus...|

| 4| Belle|1-246-894-6340|vitae.aliquet.nec...|

| 5| Trevor|1-300-527-4967|dapibus.id@acturp...|

| 6| Laurel|1-691-379-9921|adipiscing@consec...|

| 7| Sara|1-608-140-1995|Donec.nibh@enimEt...|

| 8| Kaseem|1-881-586-2689|cursus.et.magna@e...|

| 9| Lev|1-916-367-5608|Vivamus.nisi@ipsu...|

| 10| Maya|1-271-683-2698|accumsan.convalli...|

| 11| Emi|1-467-270-1337| est@nunc.com|

| 12| Caleb|1-683-212-0896|Suspendisse@Quisq...|

| 13|Florence|1-603-575-2444|sit.amet.dapibus@...|

| 14| Anika|1-856-828-7883|euismod@ligulaeli...|

| 15| Tarik|1-398-171-2268|turpis@felisorci.com|

| 16| Amena|1-878-250-3129|lorem.luctus.ut@s...|

| 17| Blossom|1-154-406-9596|Nunc.commodo.auct...|

| 18| Guy|1-869-521-3230|senectus.et.netus...|

| 19| Malachi|1-608-637-2772|Proin.mi.Aliquam@...|

| 20| Edward|1-711-710-6552|lectus@aliquetlib...|

+------+--------+--------------+--------------------+

only showing top 20 rows

Problem solved.

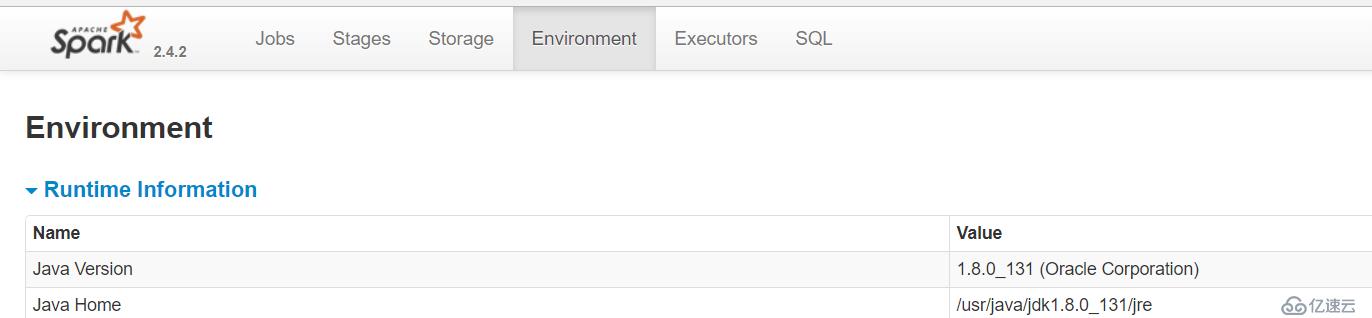

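At this point we can also check in the web UI whether the MySQL driver JAR was actually added to this job.

It was indeed added successfully.

Starting spark-sql also fails with an error.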

spark-sql

19/05/21 08:54:14 ERROR Datastore.Schema: Failed initialising database.

Unable to open a test connection to the given database.

JDBC url = jdbc:mysql://192.168.1.201:3306/hiveDB?createDatabaseIfNotExist=true, username = root. Terminating connection pool

(set lazyInit to true if you expect to start your database after your app).

Original Exception: ------

java.sql.SQLException: No suitable driver found for jdbc:mysql://192.168.1.201:3306/hiveDB?createDatabaseIfNotExist=true

Caused by: java.sql.SQLException: No suitable driver found for

jdbc:mysql://192.168.1.201:3306/hiveDB?createDatabaseIfNotExist=true

The driver side has no MySQL driver, so it cannot connect to the Hive metadata.

Checking my startup command, I found that I had already used --jars to specify the path of the MySQL driver JAR.

And according to spark-sql --help, the JARs specified this way are added to both the driver and executor classpaths:

--jars JARS Comma-separated list of jars to include on the driver and executor classpaths.

Yet the driver classpath still did not contain the MySQL driver. Why? I don't know yet. So I tried additionally passing the driver classpath at startup:

spark-sql --jars softwares/mysql-connector-java-5.1.47.jar --driver-class-path softwares/mysql-connector-java-5.1.47.jar

19/05/21 09:19:30 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

19/05/21 09:19:31 INFO metastore.HiveMetaStore: 0: Opening raw store with implemenation class:org.apache.hadoop.hive.metastore.ObjectStore

......

19/05/21 09:19:36 INFO spark.SparkEnv: Registering OutputCommitCoordinator

19/05/21 09:19:37 INFO util.log: Logging initialized @8235ms

19/05/21 09:19:37 INFO server.Server: jetty-9.3.z-SNAPSHOT, build timestamp: unknown, git hash: unknown

19/05/21 09:19:37 INFO server.Server: Started @8471ms

19/05/21 09:19:37 INFO server.AbstractConnector: Started ServerConnector@55f0e536{HTTP/1.1,[http/1.1]}{0.0.0.0:4040}

19/05/21 09:19:37 INFO util.Utils: Successfully started service 'SparkUI' on port 4040.

......

19/05/21 09:19:38 INFO internal.SharedState: Warehouse path is 'file:/home/hadoop/spark-warehouse'.

19/05/21 09:19:38 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@16e1219f{/SQL,null,AVAILABLE,@Spark}

19/05/21 09:19:38 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@13f40d71{/SQL/json,null,AVAILABLE,@Spark}

19/05/21 09:19:38 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@34f7b44f{/SQL/execution,null,AVAILABLE,@Spark}

19/05/21 09:19:38 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@5403907{/SQL/execution/json,null,AVAILABLE,@Spark}

19/05/21 09:19:38 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@7911cc15{/static/sql,null,AVAILABLE,@Spark}

19/05/21 09:19:38 INFO hive.HiveUtils: Initializing HiveMetastoreConnection version 1.2.1 using Spark classes.

19/05/21 09:19:38 INFO client.HiveClientImpl: Warehouse location for Hive client (version 1.2.2) is file:/home/hadoop/spark-warehouse

19/05/21 09:19:38 INFO hive.metastore: Mestastore configuration hive.metastore.warehouse.dir changed from /user/hive/warehouse to file:/home/hadoop/spark-warehouse

19/05/21 09:19:38 INFO metastore.HiveMetaStore: 0: Shutting down the object store...

19/05/21 09:19:38 INFO HiveMetaStore.audit: ugi=hadoop ip=unknown-ip-addr cmd=Shutting down the object store...

19/05/21 09:19:38 INFO metastore.HiveMetaStore: 0: Metastore shutdown complete.

19/05/21 09:19:38 INFO HiveMetaStore.audit: ugi=hadoop ip=unknown-ip-addr cmd=Metastore shutdown complete.

19/05/21 09:19:38 INFO metastore.HiveMetaStore: 0: get_database: default

19/05/21 09:19:38 INFO HiveMetaStore.audit: ugi=hadoop ip=unknown-ip-addr cmd=get_database: default

19/05/21 09:19:38 INFO metastore.HiveMetaStore: 0: Opening raw store with implemenation class:org.apache.hadoop.hive.metastore.ObjectStore

19/05/21 09:19:38 INFO metastore.ObjectStore: ObjectStore, initialize called

19/05/21 09:19:38 INFO DataNucleus.Query: Reading in results for query "org.datanucleus.store.rdbms.query.SQLQuery@0" since the connection used is closing

19/05/21 09:19:38 INFO metastore.MetaStoreDirectSql: Using direct SQL, underlying DB is MYSQL

19/05/21 09:19:38 INFO metastore.ObjectStore: Initialized ObjectStore

19/05/21 09:19:39 INFO state.StateStoreCoordinatorRef: Registered StateStoreCoordinator endpoint

Spark master: local[*], Application Id: local-1558444777553

19/05/21 09:19:40 INFO thriftserver.SparkSQLCLIDriver: Spark master: local[*], Application Id: local-1558444777553

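spark-sql (default)>

It started successfully. It seems the official description is not always accurate, so you still have to test things yourself. Chalk it up as a minor pitfall.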
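Because the workaround passes the same JAR to two flags, it is easy for the two values to drift apart. The following is a minimal sketch of a helper that derives both flags from one list of paths. The function name driver_jar_flags is hypothetical, and the colon separator for --driver-class-path assumes Linux or macOS, where Java classpath entries are colon-separated.

```shell
# Hypothetical helper: print both flags for the same list of JAR paths.
# --jars takes a comma-separated list; --driver-class-path is an ordinary
# Java classpath, assumed here to be colon-separated (Linux/macOS).
driver_jar_flags() (
  IFS=,
  jars="$*"
  IFS=:
  classpath="$*"
  echo "--jars $jars --driver-class-path $classpath"
)

# Print the full command instead of running it, so the sketch works without Spark.
echo "spark-sql $(driver_jar_flags softwares/mysql-connector-java-5.1.47.jar)"
```

With a single JAR, as in this post, both flags end up with the same value, which matches the command that started successfully above.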

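When converting an RDD to a DataFrame in IDEA, an error occurred even though the code itself was fine (the same code ran successfully in spark-shell).

The code is as follows: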

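    import org.apache.spark.sql.SparkSession

    object SparkSessionDemo {

      /**
       * Create a SparkSession for testing.
       *
       * @param args command-line arguments (unused)
       */
      def main(args: Array[String]): Unit = {
        val spark = SparkSession
          .builder()
          .master("local[*]")
          .appName("SparkSessionDemo")
          .getOrCreate()

        // Needed for the implicit .toDF() conversion on RDDs of case classes.
        import spark.implicits._

        // Each line of the cleaned log is split on tabs.
        val logs = spark.sparkContext.textFile("file:///D:/cleaned.log").map(_.split("\t"))
        // Map the fields onto the case class; pv is fixed at 1 for every record.
        val logsDF = logs.map(x => CleanedLog(x(0), x(1), x(2), x(3), x(4), x(5), 1L, x(6), x(7))).toDF()
        logsDF.show()
      }

      case class CleanedLog(cdn: String, region: String, level: String, date: String, ip: String,
                            domain: String, pv: Long, url: String, traffic: String)
    }

There were two errors in total, but they share the same cause, so to save space I have put them together.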

1.

Exception in thread "main" java.lang.NoClassDefFoundError: org/codehaus/janino/InternalCompilerException

...

Caused by: java.lang.ClassNotFoundException: org.codehaus.janino.InternalCompilerException

at java.net.URLClassLoader.findClass(URLClassLoader.java:381)

at java.lang.ClassLoader.loadClass(ClassLoader.java:424)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:331)

at java.lang.ClassLoader.loadClass(ClassLoader.java:357)

... 33 more

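2.

Exception in thread "main" java.lang.NoSuchMethodError: org.codehaus.commons.compiler.Location.<init>(Ljava/lang/String;II)V

The cause is a JAR conflict.

The janino and commons-compiler versions required by spark-sql 2.4.2 differ from the ones required by hive 1.1.0. Because the Hive version is old and its dependency was added to pom.xml before spark-sql, the JARs pulled in by Hive are used by default when the conflict occurs.

The fix is to use the Maven Helper plugin to add exclusion tags for the lower versions of the conflicting janino and commons-compiler JARs. For the detailed steps, see my other blog post on using the IDEA plugin Maven Helper to resolve JAR conflicts in Maven projects.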
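Before adding exclusions, it can help to see which versions of the two conflicting artifacts are actually present. The following is a minimal sketch under a few assumptions: the function name list_compiler_jars is hypothetical, and the path assumes the default local Maven repository under ~/.m2/repository. Both artifacts are published under the groupId org.codehaus.janino, so one directory covers them.

```shell
# Hypothetical helper: list janino and commons-compiler JARs under a directory,
# for example the local Maven repository or $SPARK_HOME/jars.
list_compiler_jars() {
  find "$1" -type f \( -name 'janino-*.jar' -o -name 'commons-compiler-*.jar' \) | sort
}

# Assumption: the local repository is in its default location.
if [ -d "$HOME/.m2/repository/org/codehaus/janino" ]; then
  list_compiler_jars "$HOME/.m2/repository/org/codehaus/janino"
fi

# In the project directory, Maven can also show which dependency pulls in each version:
#   mvn dependency:tree -Dincludes=org.codehaus.janino
```

If more than one version shows up for the same artifact, the dependency tree output indicates which parent dependency the exclusion belongs under.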

After a successful run, the output looks like this:

+-----+------+-----+----------+---------------+--------------------+---+--------------------+-------+

| cdn|region|level| date| ip| domain| pv| url|traffic|

+-----+------+-----+----------+---------------+--------------------+---+--------------------+-------+

|baidu| CN| E|2018050102| 121.77.52.1| github.com| 1|http://github.com...| 8732|

|baidu| CN| E|2018050102| 182.90.237.16| yooku.com| 1|http://yooku.com/...| 39912|

|baidu| CN| E|2018050101| 171.14.31.240|movieshow2000.edu...| 1|http://movieshow2...| 37164|

|baidu| CN| E|2018050103| 222.20.40.164|movieshow2000.edu...| 1|http://movieshow2...| 24963|

|baidu| CN| E|2018050102| 171.14.203.164| github.com| 1|http://github.com...| 24881|

|baidu| CN| E|2018050103| 61.237.150.187|movieshow2000.edu...| 1|http://movieshow2...| 90965|

|baidu| CN| E|2018050103| 123.233.221.56|movieshow2000.edu...| 1|http://movieshow2...| 85190|

|baidu| CN| E|2018050103| 171.9.197.248| github.com| 1|http://github.com...| 36031|

|baidu| CN| E|2018050101| 222.88.229.163| yooku.com| 1|http://yooku.com/...| 35809|

|baidu| CN| E|2018050101| 139.208.68.178| github.com| 1|http://github.com...| 9787|

|baidu| CN| E|2018050103| 171.12.206.176|movieshow2000.edu...| 1|http://movieshow2...| 83860|

|baidu| CN| E|2018050101| 121.77.141.58| github.com| 1|http://github.com...| 89197|

|baidu| CN| E|2018050102| 171.14.63.223| rw.uestc.edu.cn| 1|http://rw.uestc.e...| 74642|

|baidu| CN| E|2018050101| 36.57.94.77| yooku.com| 1|http://yooku.com/...| 25020|

|baidu| CN| E|2018050101| 171.15.34.129|movieshow2000.edu...| 1|http://movieshow2...| 51978|

|baidu| CN| E|2018050101| 121.76.172.122| yooku.com| 1|http://yooku.com/...| 48488|

|baidu| CN| E|2018050102| 36.61.89.99| yooku.com| 1|http://yooku.com/...| 86480|

|baidu| CN| E|2018050102| 182.89.232.143|movieshow2000.edu...| 1|http://movieshow2...| 24312|

|baidu| CN| E|2018050102|139.205.123.192|movieshow2000.edu...| 1|http://movieshow2...| 51601|

|baidu| CN| E|2018050102| 123.233.171.52| yooku.com| 1|http://yooku.com/...| 14132|

+-----+------+-----+----------+---------------+--------------------+---+--------------------+-------+

only showing top 20 rows
