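I had long wanted to try out an ASM extended RAC, but never had the resources to test it, so I waited.

Persistence pays off: I finally got the resources to try it.

There are two storage arrays, EMC and HDS, located in different machine rooms.

The original test system used a file system, so it first had to be converted to ASM before the ASM extended RAC could be created.

I ran into a series of problems during this conversion. Solving them was frustrating at times, but once the extended RAC was up and running there was an odd sense of accomplishment.

Do any of you feel the same after cracking a big problem? :)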

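1. System environment

Host OS version: AIX 7.1 ("7100-02-03-1334")

Oracle version: Oracle 11.2.0.3 PSU10

RAC: yes

Number of nodes: 4

Storage: HDS 100G, EMC 50G

ASM or file system: cluster file system built with the Symantec VERITAS volume management tools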

RAM : 128

SWAP: 13G

8GB

IBM JDK 1.6.0.00 (64 BIT)

/oracle 50GB

/oraclelog 30GB

/ocrvote 2G

/archivelog 400G

/oradata 850

100.15.64.180 testdb1

100.15.64.181 testdb2

100.15.64.182 testdb3

100.15.64.183 testdb4

100.15.64.184 testdb1-vip

100.15.64.185 testdb2-vip

100.15.64.186 testdb3-vip

100.15.64.187 testdb4-vip

100.15.64.188 testdb-scan

7.154.64.1 testdb1-priv

7.154.64.2 testdb2-priv

7.154.64.3 testdb3-priv

7.154.64.4 testdb4-priv

2. Replacing the file system with ASM

chown grid:asmadmin /dev/vx/rdmp/remc0_04a1

chown grid:asmadmin /dev/vx/rdmp/rhitachi_v0_11cd

chmod 660 /dev/vx/rdmp/remc0_04a1

chmod 660 /dev/vx/rdmp/rhitachi_v0_11cd

(Note: the test database uses Symantec's storage multipathing software, so the disk attributes do not need to be changed.)

su - grid

export DISPLAY=100.15.70.169:0.0

asmca

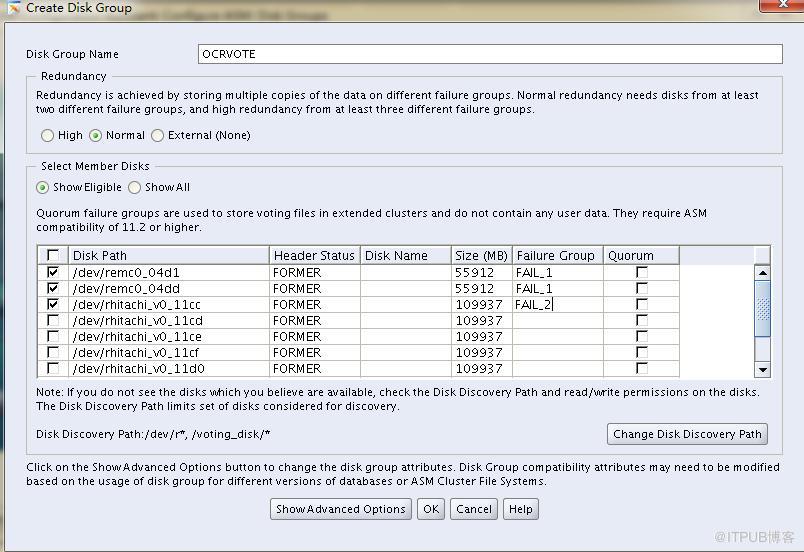

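(Note: when creating the OCRVOTE disk group, choose NORMAL redundancy and create 2 failure groups with at least 3 disks; 3 disks are recommended. If the ASM failure groups contain more than 3 disks, the voting files still use only 3 of them after being moved to this disk group: crsctl query css votedisk shows the voting files on just 3 disks. The usable space of the disk group is determined by the failure group with the smallest total size.)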
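The sizing rule in the note can be sketched in a few lines of Python. This is only an illustration of the rule of thumb for a NORMAL redundancy disk group with exactly two failure groups; the function name and the failure group names (`fail_hds`, `fail_emc`) are assumptions made for the example, and the sizes are the array sizes listed in the environment section.

```python
def usable_capacity_gb(failgroups):
    """Estimate usable space for a two-failure-group NORMAL redundancy disk group.

    failgroups maps a failure group name to the sizes (in GB) of its disks.
    Each extent is mirrored in the other failure group, so the failure group
    with the smaller total size sets the limit.
    """
    if len(failgroups) != 2:
        raise ValueError("this sketch only covers exactly two failure groups")
    totals = {name: sum(sizes) for name, sizes in failgroups.items()}
    return min(totals.values())


# Hypothetical layout: one failure group per storage array.
layout = {"fail_hds": [100], "fail_emc": [50]}
print(usable_capacity_gb(layout))
```

With one array twice the size of the other, half of the larger array's space cannot be mirrored, which is worth knowing before the disks are carved out.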

su - grid

export DISPLAY=100.15.70.169:0.0

asmca

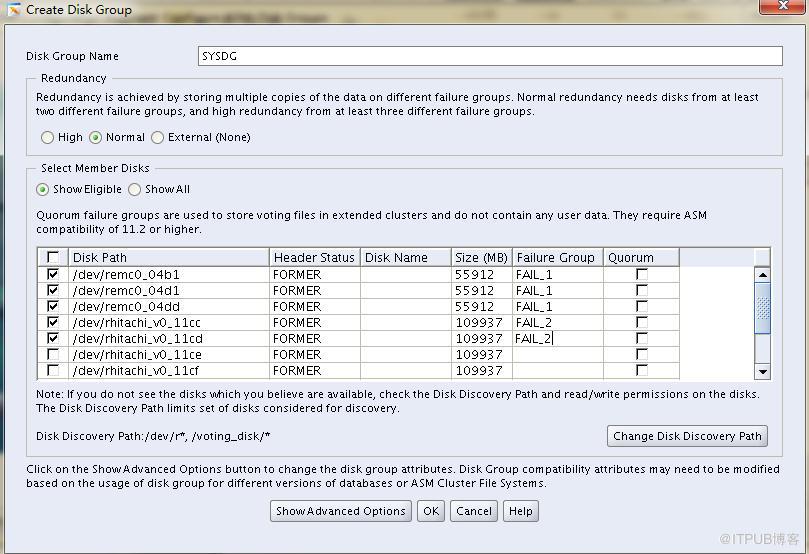

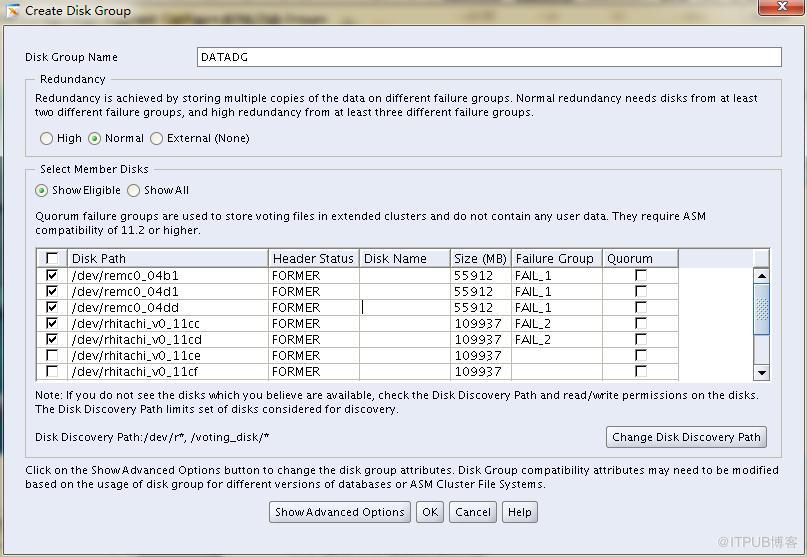

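Note: disks from the same storage array (the same side) go into the same failure group.

For ASM in Oracle 11g and later, the disk group's compatible.rdbms attribute needs to be raised to 11.2.0.0; its default is 10.2.0.0. If it is left unchanged and two failure groups are used, then after one failure group fails and is repaired, bringing that failure group back online reports the following error: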

ORA-15283: ASM operation requires compatible.rdbms of 11.1.0.0.0 or higher

Command to change it:

alter diskgroup SYSDG set attribute 'compatible.rdbms'='11.2.0.0';

select name,COMPATIBILITY,DATABASE_COMPATIBILITY from v$asm_diskgroup;

COMPATIBILITY: the ASM version (compatible.asm)

DATABASE_COMPATIBILITY: the compatible database version (compatible.rdbms)
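As a small aid for reading the query result, the comparison behind ORA-15283 can be sketched in Python. The helper names are assumptions made for this example; the only facts taken from the text are the 11.1.0.0.0 minimum quoted in the error message and the two version values mentioned above.

```python
def parse_version(text):
    """Turn a dotted version such as '11.2.0.0' into a tuple of integers."""
    return tuple(int(part) for part in text.strip().split("."))


def meets_minimum(current, minimum):
    """Return True if version `current` is at least version `minimum`."""
    cur, req = parse_version(current), parse_version(minimum)
    width = max(len(cur), len(req))
    # Pad the shorter version with zeros so 11.2.0.0 compares with 11.1.0.0.0.
    cur += (0,) * (width - len(cur))
    req += (0,) * (width - len(req))
    return cur >= req


# Minimum taken from the ORA-15283 message text.
REQUIRED = "11.1.0.0.0"
for value in ("10.2.0.0", "11.2.0.0"):
    print(value, meets_minimum(value, REQUIRED))
```

Running the check against the DATABASE_COMPATIBILITY column for every disk group before a failure test avoids discovering the problem only when the failure group has to be brought back online.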

No database had been created for this test, so no data files had to be migrated. If a migration is needed, do it with RMAN.

1) Check the OCR and voting disks

root@testdb1:/#/oracle/app/11.2.0/grid/bin/ocrcheck

Status of Oracle Cluster Registry is as follows :

Version : 3

Total space (kbytes) : 262120

Used space (kbytes) : 3296

Available space (kbytes) : 258824

ID : 1187520997

Device/File Name : /ocrvote/ocr1

Device/File integrity check succeeded

Device/File not configured

Device/File not configured

Device/File not configured

Device/File not configured

Cluster registry integrity check succeeded

Logical corruption check succeeded

root@testdb1:/#/oracle/app/11.2.0/grid/bin/crsctl query css votedisk

## STATE File Universal Id File Name Disk group

-- ----- ----------------- --------- ---------

1. ONLINE a948649dc0e14f65bf171ba2ca496962 (/ocrvote/votedisk1) []

2. ONLINE a5f290d560684f47bf82eb3d34db5fc7 (/ocrvote/votedisk2) []

3. ONLINE 49617fb984fc4fcdbf5b7566a9e1778f (/ocrvote/votedisk3) []

Located 3 voting disk(s).

2) Check the resource status

$ crsctl stat res -t

--------------------------------------------------------------------------------

NAME TARGET STATE SERVER STATE_DETAILS

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.DATADG.dg

ONLINE ONLINE testdb1

ONLINE ONLINE testdb2

ONLINE ONLINE testdb3

ONLINE ONLINE testdb4

ora.LISTENER.lsnr

ONLINE ONLINE testdb1

ONLINE ONLINE testdb2

ONLINE ONLINE testdb3

ONLINE ONLINE testdb4

ora.OCRVOTE.dg

ONLINE ONLINE testdb1

ONLINE ONLINE testdb2

ONLINE ONLINE testdb3

ONLINE ONLINE testdb4

ora.SYSDG.dg

ONLINE ONLINE testdb1

ONLINE ONLINE testdb2

ONLINE ONLINE testdb3

ONLINE ONLINE testdb4

ora.asm

ONLINE ONLINE testdb1 Started

ONLINE ONLINE testdb2 Started

ONLINE ONLINE testdb3 Started

ONLINE ONLINE testdb4 Started

ora.gsd

OFFLINE OFFLINE testdb1

OFFLINE OFFLINE testdb2

OFFLINE OFFLINE testdb3

OFFLINE OFFLINE testdb4

ora.net1.network

ONLINE ONLINE testdb1

ONLINE ONLINE testdb2

ONLINE ONLINE testdb3

ONLINE ONLINE testdb4

ora.ons

ONLINE ONLINE testdb1

ONLINE ONLINE testdb2

ONLINE ONLINE testdb3

ONLINE ONLINE testdb4

ora.registry.acfs

ONLINE ONLINE testdb1

ONLINE ONLINE testdb2

ONLINE ONLINE testdb3

ONLINE ONLINE testdb4

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE testdb1

ora.cvu

1 ONLINE ONLINE testdb1

ora.oc4j

1 ONLINE ONLINE testdb1

ora.scan1.vip

1 ONLINE ONLINE testdb1

ora.testdb1.vip

1 ONLINE ONLINE testdb1

ora.testdb2.vip

1 ONLINE ONLINE testdb2

ora.testdb3.vip

1 ONLINE ONLINE testdb3

ora.testdb4.vip

1 ONLINE ONLINE testdb4

3) Back up the OCR

root@testdb1:/#/oracle/app/11.2.0/grid/bin/ocrconfig -manualbackup

root@testdb1:/#/oracle/app/11.2.0/grid/bin/ocrconfig -showbackup

4) Add the OCR to the disk group and delete the original OCR on the file system

root@testdb1:/#/oracle/app/11.2.0/grid/bin/ocrconfig -add +OCRVOTE

root@testdb1:/#/oracle/app/11.2.0/grid/bin/ocrcheck

Status of Oracle Cluster Registry is as follows :

Version : 3

Total space (kbytes) : 262120

Used space (kbytes) : 3336

Available space (kbytes) : 258784

ID : 1187520997

Device/File Name : /ocrvote/ocr1

Device/File integrity check succeeded

Device/File Name : +OCRVOTE

Device/File integrity check succeeded

Device/File not configured

Device/File not configured

Device/File not configured

Cluster registry integrity check succeeded

Logical corruption check succeeded

root@testdb1:/#/oracle/app/11.2.0/grid/bin/ocrconfig -delete /ocrvote/ocr1

root@testdb1:/#/oracle/app/11.2.0/grid/bin/ocrcheck

Status of Oracle Cluster Registry is as follows :

Version : 3

Total space (kbytes) : 262120

Used space (kbytes) : 3336

Available space (kbytes) : 258784

ID : 1187520997

Device/File Name : +OCRVOTE

Device/File integrity check succeeded

Device/File not configured

Device/File not configured

Device/File not configured

Device/File not configured

Cluster registry integrity check succeeded

Logical corruption check succeeded

5) Move the voting disks from the file system to the ASM disk group

root@testdb1:/#/oracle/app/11.2.0/grid/bin/crsctl query css votedisk

## STATE File Universal Id File Name Disk group

-- ----- ----------------- --------- ---------

1. ONLINE a948649dc0e14f65bf171ba2ca496962 (/ocrvote/votedisk1) []

2. ONLINE a5f290d560684f47bf82eb3d34db5fc7 (/ocrvote/votedisk2) []

3. ONLINE 49617fb984fc4fcdbf5b7566a9e1778f (/ocrvote/votedisk3) []

Located 3 voting disk(s).

root@testdb1:/#/oracle/app/11.2.0/grid/bin/crsctl replace votedisk +OCRVOTE

CRS-4256: Updating the profile

Successful addition of voting disk 3a5e5e8622024f17bf0c1a4594e303f5.

Successful addition of voting disk 92ff4555f7064f70bf3c022bd687dbc5.

Successful addition of voting disk 19a1fed74b7f4fb6bf780d43b5427dc9.

Successful deletion of voting disk a948649dc0e14f65bf171ba2ca496962.

Successful deletion of voting disk a5f290d560684f47bf82eb3d34db5fc7.

Successful deletion of voting disk 49617fb984fc4fcdbf5b7566a9e1778f.

Successfully replaced voting disk group with +OCRVOTE.

CRS-4256: Updating the profile

CRS-4266: Voting file(s) successfully replaced

root@testdb1:/#/oracle/app/11.2.0/grid/bin/crsctl query css votedisk

## STATE File Universal Id File Name Disk group

-- ----- ----------------- --------- ---------

1. ONLINE 3a5e5e8622024f17bf0c1a4594e303f5 (/dev/vx/rdmp/emc0_04a1) [OCRVOTE]

2. ONLINE 92ff4555f7064f70bf3c022bd687dbc5 (/dev/vx/rdmp/hitachi_vsp0_11cc) [OCRVOTE]

3. ONLINE 19a1fed74b7f4fb6bf780d43b5427dc9 (/dev/vx/rdmp/emc0_04c1) [OCRVOTE]

Located 3 voting disk(s).
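The before/after comparison above can also be checked programmatically. The following Python sketch parses the text printed by `crsctl query css votedisk` and confirms that every voting file is ONLINE in the expected disk group. The regular expression is an assumption based only on the output shown in this article, and the function names are made up for the example.

```python
import re

# Matches rows such as:
#  1. ONLINE 3a5e5e8622024f17bf0c1a4594e303f5 (/dev/vx/rdmp/emc0_04a1) [OCRVOTE]
VOTE_LINE = re.compile(
    r"^\s*(\d+)\.\s+(\w+)\s+([0-9a-f]+)\s+\((.+?)\)\s+\[(\w*)\]\s*$"
)


def parse_votedisks(output):
    """Return one dict per voting file found in the command output."""
    disks = []
    for line in output.splitlines():
        match = VOTE_LINE.match(line)
        if match:
            index, state, file_id, path, group = match.groups()
            disks.append({
                "index": int(index),
                "state": state,
                "id": file_id,
                "path": path,
                "diskgroup": group,  # empty string for file-system voting files
            })
    return disks


def migrated_to(disks, diskgroup):
    """True if there is at least one voting file and all are ONLINE in diskgroup."""
    return bool(disks) and all(
        d["state"] == "ONLINE" and d["diskgroup"] == diskgroup for d in disks
    )


AFTER = """\
##  STATE    File Universal Id                File Name Disk group
 1. ONLINE   3a5e5e8622024f17bf0c1a4594e303f5 (/dev/vx/rdmp/emc0_04a1) [OCRVOTE]
 2. ONLINE   92ff4555f7064f70bf3c022bd687dbc5 (/dev/vx/rdmp/hitachi_vsp0_11cc) [OCRVOTE]
 3. ONLINE   19a1fed74b7f4fb6bf780d43b5427dc9 (/dev/vx/rdmp/emc0_04c1) [OCRVOTE]
Located 3 voting disk(s).
"""

print(migrated_to(parse_votedisks(AFTER), "OCRVOTE"))
```

Fed the pre-migration output, where the disk group column is `[]`, the same check returns False.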

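3. Adding an NFS file to the OCRVOTE disk group as the third quorum disk

An ASM extended RAC needs a Linux PC server placed somewhere other than the two storage sites, with a file system created on it. That file system is mounted over NFS on the extended RAC servers, and the disk file on the NFS mount is created with the dd command.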
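Why a third location is needed can be shown with a short sketch. Oracle Clusterware expects a node to reach more than half of the configured voting files in order to stay in the cluster. The function below simply applies that majority rule to a layout of voting files per location; the function name and the location labels are assumptions made for illustration.

```python
def survives_loss(layout, lost_location):
    """Return True if a strict majority of voting files remains reachable.

    layout maps a location name to the number of voting files stored there.
    """
    total = sum(layout.values())
    remaining = total - layout.get(lost_location, 0)
    return 2 * remaining > total


# One voting file per storage array plus one on the NFS server.
three_sites = {"EMC": 1, "HDS": 1, "NFS": 1}
# Only the two storage arrays, no third location.
two_sites = {"EMC": 2, "HDS": 1}

for name, layout in (("three_sites", three_sites), ("two_sites", two_sites)):
    for location in layout:
        print(name, "lose", location, "->", survives_loss(layout, location))
```

With only the two arrays, whichever array holds two of the three voting files becomes a single point of failure; placing the third file on a separate NFS server removes that asymmetry.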

OS version: Linux el5 x86_64

groupadd -g 1000 oinstall

groupadd -g 1100 asmadmin

useradd -u 1100 -g oinstall -G oinstall,asmadmin -d /home/grid -c "GRID Software Owner" grid

Note: the user and group IDs on the NFS server should match those on the production database hosts.

cd /oradata

mkdir votedisk

chown 1100:1100 votedisk

vi /etc/exports

Add the following line:

/oradata/votedisk *(rw,sync,all_squash,anonuid=1100,anongid=1100)

service nfs stop

service nfs start

[root@ywtcdb ~]# exportfs -v

/oradata 100.15.64.*(rw,wdelay,no_root_squash,no_subtree_check,anonuid=65534,anongid=65534)

/oradata/votedisk

<world>(rw,wdelay,root_squash,all_squash,no_subtree_check,anonuid=1100,anongid=1100)

(Note: the /oradata/votedisk entry is the newly added part.)

su - root

mkdir /voting_disk

chown grid:asmadmin /voting_disk

vi /etc/filesystems

Add the following content:

/voting_disk:

dev = "/oradata/votedisk"

vfs = nfs

nodename = ywtcdb

mount = true

options = rw,bg,hard,intr,rsize=32768,wsize=32768,timeo=600,vers=3,proto=tcp,noac,sec=sys

account = false

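(Note: follow the format of the existing entries in /etc/filesystems exactly, including punctuation and spacing. It is best to configure NFS with the smit nfs command and then, once that is done, edit the options attribute of the corresponding mount point in /etc/filesystems. The options attribute must be rw,bg,hard,intr,rsize=32768,wsize=32768,timeo=600,vers=3,proto=tcp,noac,sec=sys)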
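Because a single missing option is easy to overlook, the stanza can be checked with a small script. This Python sketch reads the text of one /etc/filesystems stanza and lists any required mount option that is absent. It checks presence only, not order, and the function names are assumptions made for this example.

```python
# Required options exactly as listed in the note above.
REQUIRED_OPTIONS = (
    "rw,bg,hard,intr,rsize=32768,wsize=32768,"
    "timeo=600,vers=3,proto=tcp,noac,sec=sys"
).split(",")


def stanza_options(stanza_text):
    """Return the values of the 'options' attribute as a list (empty if absent)."""
    for line in stanza_text.splitlines():
        # Split at the first '=' only, so rsize=32768 stays inside the value.
        key, sep, value = line.partition("=")
        if sep and key.strip() == "options":
            return [opt.strip() for opt in value.split(",") if opt.strip()]
    return []


def missing_options(stanza_text):
    """Return the required options that the stanza does not contain."""
    present = set(stanza_options(stanza_text))
    return [opt for opt in REQUIRED_OPTIONS if opt not in present]


STANZA = """\
/voting_disk:
        dev = "/oradata/votedisk"
        vfs = nfs
        nodename = ywtcdb
        mount = true
        options = rw,bg,hard,intr,rsize=32768,wsize=32768,timeo=600,vers=3,proto=tcp,noac,sec=sys
        account = false
"""

print(missing_options(STANZA))
```

An empty list means every required option is present; anything else names the options to add before the voting file is created on the mount.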

Use the smit nfs command to have the NFS file system mounted automatically at boot:

#smit nfs

[TOP] [Entry Fields]

* Pathname of mount point [/voting_disk]

* Pathname of remote directory [/oradata/votedisk]

* Host where remote directory resides [ywtcdb]

Mount type name []

* Security method [sys]

* Mount now, add entry to /etc/filesystems or both? both

* /etc/filesystems entry will mount the directory yes

/usr/sbin/nfso -p -o nfs_use_reserved_ports=1

or: nfso -p -o nfs_use_reserved_ports=1

su - root

mount -v nfs -o rw,bg,hard,intr,rsize=32768,wsize=32768,timeo=600,vers=3,proto=tcp,noac,sec=sys 100.15.57.125:/oradata/votedisk /voting_disk

Note: in the command, 100.15.57.125 is the IP of the NFS server, /oradata/votedisk is the directory on the NFS server, and /voting_disk is the directory on the production host.

dd if=/dev/zero of=/voting_disk/vote_disk_nfs bs=1M count=1000

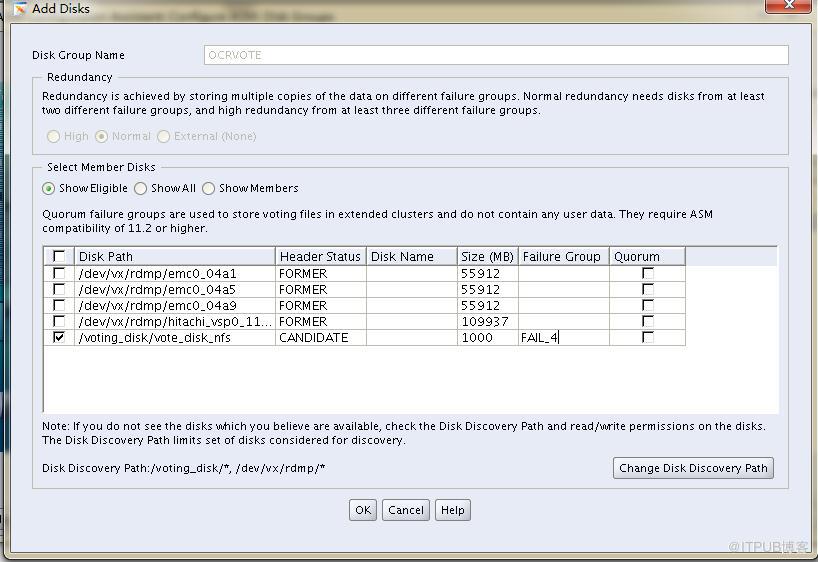

su - grid

export DISPLAY=100.15.70.169:0.0

asmca

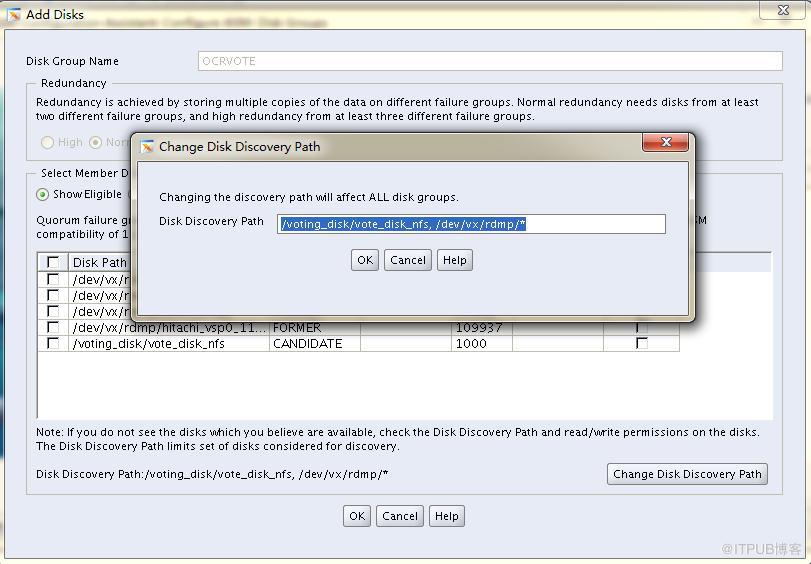

In asmca, first change the Disk Discovery Path.

Before:

/dev/vx/rdmp/*

After:

/voting_disk/vote_disk_nfs, /dev/vx/rdmp/*

Add the disk /voting_disk/vote_disk_nfs to a new failure group in the OCRVOTE disk group. Once it has been added, OCRVOTE has 3 failure groups.
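The effect of the discovery path change can be approximated with shell-style pattern matching. The Python sketch below uses `fnmatch` from the standard library as a stand-in; ASM's own matching rules may differ in detail, so treat it as an illustration of why the NFS file is invisible until its path is added. The function name is an assumption.

```python
from fnmatch import fnmatchcase


def discovered(path, discovery_string):
    """Return True if path matches any comma-separated pattern in discovery_string."""
    patterns = [p.strip() for p in discovery_string.split(",") if p.strip()]
    return any(fnmatchcase(path, pattern) for pattern in patterns)


BEFORE = "/dev/vx/rdmp/*"
AFTER = "/voting_disk/vote_disk_nfs, /dev/vx/rdmp/*"

for candidate in ("/dev/vx/rdmp/remc0_04a1", "/voting_disk/vote_disk_nfs"):
    print(candidate, discovered(candidate, BEFORE), discovered(candidate, AFTER))
```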

$ crsctl query css votedisk

## STATE File Universal Id File Name Disk group

-- ----- ----------------- --------- ---------

1. ONLINE 89210622f0864ff0bf9517205691e679 (/voting_disk/vote_disk_nfs) [OCRVOTE]

2. ONLINE 55c4ee685a824ff3bf6ce510bf09468e (/dev/vx/rdmp/remc0_04a1) [OCRVOTE]

3. ONLINE 159234e88fe64f55bf0d4571362c3b07 (/dev/vx/rdmp/rhitachi_v0_11cd) [OCRVOTE]

Located 3 voting disk(s).

4. ASM extended RAC high-availability tests

The CSS logs are as follows:

Node 1:

2014-05-20 14:46:44.886:

[cssd(4129042)]CRS-1649:An I/O error occured for voting file: /dev/remc0_04a5; details at (:CSSNM00060:) in /oracle/app/11.2.0/grid/log/testdb1/cssd/ocssd.log.

2014-05-20 14:46:44.886:

[cssd(4129042)]CRS-1649:An I/O error occured for voting file: /dev/remc0_04a5; details at (:CSSNM00059:) in /oracle/app/11.2.0/grid/log/testdb1/cssd/ocssd.log.

2014-05-20 14:46:46.051:

[cssd(4129042)]CRS-1626:A Configuration change request completed successfully

2014-05-20 14:46:46.071:

[cssd(4129042)]CRS-1601:CSSD Reconfiguration complete. Active nodes are testdb1 testdb2 testdb3 testdb4 .

Node 2:

2014-05-20 14:46:46.053:

[cssd(4195026)]CRS-1604:CSSD voting file is offline: /dev/remc0_04a5; details at (:CSSNM00069:) in /oracle/app/11.2.0/grid/log/testdb2/cssd/ocssd.log.

2014-05-20 14:46:46.053:

[cssd(4195026)]CRS-1626:A Configuration change request completed successfully

2014-05-20 14:46:46.071:

[cssd(4195026)]CRS-1601:CSSD Reconfiguration complete. Active nodes are testdb1 testdb2 testdb3 testdb4 .

Node 3:

2014-05-20 14:46:46.053:

[cssd(3604942)]CRS-1604:CSSD voting file is offline: /dev/remc0_04a5; details at (:CSSNM00069:) in /oracle/app/11.2.0/grid/log/testdb3/cssd/ocssd.log.

2014-05-20 14:46:46.053:

[cssd(3604942)]CRS-1626:A Configuration change request completed successfully

2014-05-20 14:46:46.074:

[cssd(3604942)]CRS-1601:CSSD Reconfiguration complete. Active nodes are testdb1 testdb2 testdb3 testdb4 .

Node 4:

2014-05-20 14:46:46.053:

[cssd(3015132)]CRS-1604:CSSD voting file is offline: /dev/remc0_04a5; details at (:CSSNM00069:) in /oracle/app/11.2.0/grid/log/testdb4/cssd/ocssd.log.

2014-05-20 14:46:46.053:

[cssd(3015132)]CRS-1626:A Configuration change request completed successfully

2014-05-20 14:46:46.073:

[cssd(3015132)]CRS-1601:CSSD Reconfiguration complete. Active nodes are testdb1 testdb2 testdb3 testdb4 .

CRS status is normal:

testdb3:/oracle/app/11.2.0/grid/log/testdb3/cssd(testdb3)$/oracle/app/11.2.0/grid/bin/crsctl stat res -t

--------------------------------------------------------------------------------

NAME TARGET STATE SERVER STATE_DETAILS

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.DATADG.dg

ONLINE ONLINE testdb1

ONLINE ONLINE testdb2

ONLINE ONLINE testdb3

ONLINE ONLINE testdb4

ora.LISTENER.lsnr

ONLINE ONLINE testdb1

ONLINE ONLINE testdb2

ONLINE ONLINE testdb3

ONLINE ONLINE testdb4

ora.OCRVOTE.dg

ONLINE ONLINE testdb1

ONLINE ONLINE testdb2

ONLINE ONLINE testdb3

ONLINE ONLINE testdb4

ora.SYSDG.dg

ONLINE ONLINE testdb1

ONLINE ONLINE testdb2

ONLINE ONLINE testdb3

ONLINE ONLINE testdb4

ora.asm

ONLINE ONLINE testdb1 Started

ONLINE ONLINE testdb2 Started

ONLINE ONLINE testdb3 Started

ONLINE ONLINE testdb4 Started

ora.gsd

OFFLINE OFFLINE testdb1

OFFLINE OFFLINE testdb2

OFFLINE OFFLINE testdb3

OFFLINE OFFLINE testdb4

ora.net1.network

ONLINE ONLINE testdb1

ONLINE ONLINE testdb2

ONLINE ONLINE testdb3

ONLINE ONLINE testdb4

ora.ons

ONLINE ONLINE testdb1

ONLINE ONLINE testdb2

ONLINE ONLINE testdb3

ONLINE ONLINE testdb4

ora.registry.acfs

ONLINE ONLINE testdb1

ONLINE ONLINE testdb2

ONLINE ONLINE testdb3

ONLINE ONLINE testdb4

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE testdb4

ora.cvu

1 ONLINE ONLINE testdb3

ora.oc4j

1 ONLINE ONLINE testdb3

ora.scan1.vip

1 ONLINE ONLINE testdb4

ora.testdb.db

1 ONLINE ONLINE testdb1 Open

2 ONLINE ONLINE testdb2 Open

3 ONLINE ONLINE testdb3 Open

4 ONLINE ONLINE testdb4 Open

ora.testdb1.vip

1 ONLINE ONLINE testdb1

ora.testdb2.vip

1 ONLINE ONLINE testdb2

ora.testdb3.vip

1 ONLINE ONLINE testdb3

ora.testdb4.vip

1 ONLINE ONLINE testdb4

The voting disks now look like this:

$ /oracle/app/11.2.0/grid/bin/crsctl query css votedisk

## STATE File Universal Id File Name Disk group

-- ----- ----------------- --------- ---------

1. ONLINE 8a31ddf5013d4fb1bfdbb01d6fc6eb7b (/dev/rhitachi_v0_11cc) [OCRVOTE]

2. ONLINE 1ef9486d54b24f8cbf07814d2848a009 (/voting_disk/vote_disk_nfs) [OCRVOTE]

Located 2 voting disk(s).

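After the storage fiber is plugged back in, bring the disks online manually; the two storage arrays then resynchronize automatically.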

alter diskgroup SYSDG online disks in failgroup fail_1;

alter diskgroup DATADG online disks in failgroup fail_1;

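All EMC disks went OFFLINE automatically in the ASM disk groups on every node, the HDS storage stayed in service, and the instances on all nodes remained normal. In a second test we unplugged the HDS fiber instead, and the behavior was the same as when the EMC fiber was unplugged. The conclusion: when the storage on one side goes down, the ASM extended RAC keeps running on the surviving side and the instances on all nodes stay up. Once the fiber is plugged back in and the disks are brought online manually, the two storage arrays resynchronize automatically.

Note: the disk group that holds the voting disks brings its disks online automatically once they are attached again.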
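When more than a couple of disk groups are involved, the recovery statements can be generated instead of typed by hand. The following Python sketch only builds the SQL text for the ALTER DISKGROUP statements shown earlier; it does not connect to ASM. The function name and the identifier check are assumptions made for this example.

```python
import re

# Conservative check so only plain identifiers end up in the SQL text.
IDENTIFIER = re.compile(r"^[A-Za-z][A-Za-z0-9_$#]*$")


def online_statements(diskgroups, failgroup):
    """Build one 'online disks in failgroup' statement per disk group."""
    for name in list(diskgroups) + [failgroup]:
        if not IDENTIFIER.match(name):
            raise ValueError(f"unexpected identifier: {name!r}")
    return [
        f"alter diskgroup {dg} online disks in failgroup {failgroup};"
        for dg in diskgroups
    ]


# OCRVOTE is left out because, per the note above, it comes back online by itself.
for statement in online_statements(["SYSDG", "DATADG"], "fail_1"):
    print(statement)
```

The generated lines can be reviewed and then run in SQL*Plus on the ASM instance as usual.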

After rebooting the hosts of nodes 1 and 2, the CRS resource status is as follows:

$ crsctl stat res -t

--------------------------------------------------------------------------------

NAME TARGET STATE SERVER STATE_DETAILS

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.ARCHDG.dg

ONLINE ONLINE testdb3

ONLINE ONLINE testdb4

ora.DATADG.dg

ONLINE ONLINE testdb3

ONLINE ONLINE testdb4

ora.LISTENER.lsnr

ONLINE ONLINE testdb3

ONLINE ONLINE testdb4

ora.OCRVOTE.dg

ONLINE ONLINE testdb3

ONLINE ONLINE testdb4

ora.SYSDG.dg

ONLINE ONLINE testdb3

ONLINE ONLINE testdb4

ora.asm

ONLINE ONLINE testdb3 Started

ONLINE ONLINE testdb4 Started

ora.gsd

OFFLINE OFFLINE testdb3

OFFLINE OFFLINE testdb4

ora.net1.network

ONLINE ONLINE testdb3

ONLINE ONLINE testdb4

ora.ons

ONLINE ONLINE testdb3

ONLINE ONLINE testdb4

ora.registry.acfs

ONLINE ONLINE testdb3

ONLINE ONLINE testdb4

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE testdb3

ora.cvu

1 ONLINE ONLINE testdb3

ora.oc4j

1 ONLINE ONLINE testdb3

ora.scan1.vip

1 ONLINE ONLINE testdb3

ora.testdb.db

1 ONLINE OFFLINE

2 ONLINE OFFLINE

3 ONLINE ONLINE testdb3 Open

4 ONLINE ONLINE testdb4 Open

ora.testdb1.vip

1 ONLINE INTERMEDIATE testdb4 FAILED OVER

ora.testdb2.vip

1 ONLINE INTERMEDIATE testdb3 FAILED OVER

ora.testdb3.vip

1 ONLINE ONLINE testdb3

ora.testdb4.vip

1 ONLINE ONLINE testdb4

Once the hosts of nodes 1 and 2 are back up, the CRS status is as follows:

$crsctl stat res -t

--------------------------------------------------------------------------------

NAME TARGET STATE SERVER STATE_DETAILS

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.DATADG.dg

ONLINE ONLINE testdb1

ONLINE ONLINE testdb2

ONLINE ONLINE testdb3

ONLINE ONLINE testdb4

ora.LISTENER.lsnr

ONLINE ONLINE testdb1

ONLINE ONLINE testdb2

ONLINE ONLINE testdb3

ONLINE ONLINE testdb4

ora.OCRVOTE.dg

ONLINE ONLINE testdb1

ONLINE ONLINE testdb2

ONLINE ONLINE testdb3

ONLINE ONLINE testdb4

ora.SYSDG.dg

ONLINE ONLINE testdb1

ONLINE ONLINE testdb2

ONLINE ONLINE testdb3

ONLINE ONLINE testdb4

ora.asm

ONLINE ONLINE testdb1 Started

ONLINE ONLINE testdb2 Started

ONLINE ONLINE testdb3 Started

ONLINE ONLINE testdb4 Started

ora.gsd

OFFLINE OFFLINE testdb1

OFFLINE OFFLINE testdb2

OFFLINE OFFLINE testdb3

OFFLINE OFFLINE testdb4

ora.net1.network

ONLINE ONLINE testdb1

ONLINE ONLINE testdb2

ONLINE ONLINE testdb3

ONLINE ONLINE testdb4

ora.ons

ONLINE ONLINE testdb1

ONLINE ONLINE testdb2

ONLINE ONLINE testdb3

ONLINE ONLINE testdb4

ora.registry.acfs

ONLINE ONLINE testdb1

ONLINE ONLINE testdb2

ONLINE ONLINE testdb3

ONLINE ONLINE testdb4

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE testdb3

ora.cvu

1 ONLINE ONLINE testdb3

ora.oc4j

1 ONLINE ONLINE testdb4

ora.scan1.vip

1 ONLINE ONLINE testdb3

ora.testdb.db

1 ONLINE ONLINE testdb1 Open

2 ONLINE ONLINE testdb2 Open

3 ONLINE ONLINE testdb3 Open

4 ONLINE ONLINE testdb4 Open

ora.testdb1.vip

1 ONLINE ONLINE testdb1

ora.testdb2.vip

1 ONLINE ONLINE testdb2

ora.testdb3.vip

1 ONLINE ONLINE testdb3

ora.testdb4.vip

1 ONLINE ONLINE testdb4

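When one or more nodes go down, their VIPs float to the surviving nodes and all clients reconnect to an available node. After the test hosts finish rebooting, CRS starts automatically and the VIPs move back to their home nodes.

Because the hosts are virtualized, the network cable cannot be unplugged. The test instead uses ifconfig en1 down to take down the NIC that carries node 1's public IP.

1) On node 1, the public IP, VIP and SCAN IP are all on NIC en1.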

root@testdb1:/#netstat -in

Name Mtu Network Address Ipkts Ierrs Opkts Oerrs Coll

en1 1500 link#2 0.14.5e.79.5c.ca 5153732 0 4066346 2 0

en1 1500 100.15.64 100.15.64.180 5153732 0 4066346 2 0

en1 1500 100.15.64 100.15.64.184 5153732 0 4066346 2 0

en1 1500 100.15.64 100.15.64.188 5153732 0 4066346 2 0

en2 1500 link#3 0.14.5e.79.5b.e6 40305463 0 44224443 2 0

en2 1500 7.154.64 7.154.64.1 40305463 0 44224443 2 0

en2 1500 169.254 169.254.78.30 40305463 0 44224443 2 0

lo0 16896 link#1 2316784 0 2316787 0 0

lo0 16896 127 127.0.0.1 2316784 0 2316787 0 0

lo0 16896 ::1%1 2316784 0 2316787 0 0

2) Run ifconfig en1 down to perform the test

root@testdb1:/oracle/app/11.2.0/grid/bin#ifconfig en1 down

3) The CRS resource status shows that the VIP and SCAN IP have both moved to healthy nodes

testdb3:/home/oracle(testdb3)$crsctl stat res -t

--------------------------------------------------------------------------------

NAME TARGET STATE SERVER STATE_DETAILS

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.DATADG.dg

ONLINE ONLINE testdb1

ONLINE ONLINE testdb2

ONLINE ONLINE testdb3

ONLINE ONLINE testdb4

ora.LISTENER.lsnr

ONLINE OFFLINE testdb1

ONLINE ONLINE testdb2

ONLINE ONLINE testdb3

ONLINE ONLINE testdb4

ora.OCRVOTE.dg

ONLINE ONLINE testdb1

ONLINE ONLINE testdb2

ONLINE ONLINE testdb3

ONLINE ONLINE testdb4

ora.SYSDG.dg

ONLINE ONLINE testdb1

ONLINE ONLINE testdb2

ONLINE ONLINE testdb3

ONLINE ONLINE testdb4

ora.asm

ONLINE ONLINE testdb1 Started

ONLINE ONLINE testdb2 Started

ONLINE ONLINE testdb3 Started

ONLINE ONLINE testdb4 Started

ora.gsd

OFFLINE OFFLINE testdb1

OFFLINE OFFLINE testdb2

OFFLINE OFFLINE testdb3

OFFLINE OFFLINE testdb4

ora.net1.network

ONLINE OFFLINE testdb1

ONLINE ONLINE testdb2

ONLINE ONLINE testdb3

ONLINE ONLINE testdb4

ora.ons

ONLINE OFFLINE testdb1

ONLINE ONLINE testdb2

ONLINE ONLINE testdb3

ONLINE ONLINE testdb4

ora.registry.acfs

ONLINE ONLINE testdb1

ONLINE ONLINE testdb2

ONLINE ONLINE testdb3

ONLINE ONLINE testdb4

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE testdb2

ora.cvu

1 ONLINE ONLINE testdb2

ora.oc4j

1 ONLINE ONLINE testdb4

ora.scan1.vip

1 ONLINE ONLINE testdb2

ora.testdb.db

1 ONLINE ONLINE testdb1 Open

2 ONLINE ONLINE testdb2 Open

3 ONLINE ONLINE testdb3 Open

4 ONLINE ONLINE testdb4 Open

ora.testdb1.vip

1 ONLINE INTERMEDIATE testdb4 FAILED OVER

ora.testdb2.vip

1 ONLINE ONLINE testdb2

ora.testdb3.vip

1 ONLINE ONLINE testdb3

ora.testdb4.vip

1 ONLINE ONLINE testdb4

4) Bring node 1's en1 NIC back up

root@testdb1:/#ifconfig en1 up

5) The CRS resource status shows that the VIP has moved back

testdb3:/home/oracle(testdb3)$crsctl stat res -t

--------------------------------------------------------------------------------

NAME TARGET STATE SERVER STATE_DETAILS

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.DATADG.dg

ONLINE ONLINE testdb1

ONLINE ONLINE testdb2

ONLINE ONLINE testdb3

ONLINE ONLINE testdb4

ora.LISTENER.lsnr

ONLINE ONLINE testdb1

ONLINE ONLINE testdb2

ONLINE ONLINE testdb3

ONLINE ONLINE testdb4

ora.OCRVOTE.dg

ONLINE ONLINE testdb1

ONLINE ONLINE testdb2

ONLINE ONLINE testdb3

ONLINE ONLINE testdb4

ora.SYSDG.dg

ONLINE ONLINE testdb1

ONLINE ONLINE testdb2

ONLINE ONLINE testdb3

ONLINE ONLINE testdb4

ora.asm

ONLINE ONLINE testdb1 Started

ONLINE ONLINE testdb2 Started

ONLINE ONLINE testdb3 Started

ONLINE ONLINE testdb4 Started

ora.gsd

OFFLINE OFFLINE testdb1

OFFLINE OFFLINE testdb2

OFFLINE OFFLINE testdb3

OFFLINE OFFLINE testdb4

ora.net1.network

ONLINE ONLINE testdb1

ONLINE ONLINE testdb2

ONLINE ONLINE testdb3

ONLINE ONLINE testdb4

ora.ons

ONLINE ONLINE testdb1

ONLINE ONLINE testdb2

ONLINE ONLINE testdb3

ONLINE ONLINE testdb4

ora.registry.acfs

ONLINE ONLINE testdb1

ONLINE ONLINE testdb2

ONLINE ONLINE testdb3

ONLINE ONLINE testdb4

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE testdb2

ora.cvu

1 ONLINE ONLINE testdb2

ora.oc4j

1 ONLINE ONLINE testdb4

ora.scan1.vip

1 ONLINE ONLINE testdb2

ora.testdb.db

1 ONLINE ONLINE testdb1 Open

2 ONLINE ONLINE testdb2 Open

3 ONLINE ONLINE testdb3 Open

4 ONLINE ONLINE testdb4 Open

ora.testdb1.vip

1 ONLINE ONLINE testdb1

ora.testdb2.vip

1 ONLINE ONLINE testdb2

ora.testdb3.vip

1 ONLINE ONLINE testdb3

ora.testdb4.vip

1 ONLINE ONLINE testdb4

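On the test node (node 1) the listener stopped. The SCAN listener, which had been running on that node, moved to another available node, and so did the node's VIP. Once the NIC was brought back up (public network restored), the VIP moved back and the node's listener came online automatically, but the SCAN listener and the SCAN VIP did not move back. We then took down the public NIC on each of the other nodes in turn and found that the SCAN listener moved to the node with the lowest instance_number, while the VIP moved to an arbitrary node.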
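Spotting a relocated VIP in a long `crsctl stat res -t` listing is easier with a small filter. This Python sketch scans the text of that output and reports node VIPs that are currently running on a server other than their home node. It assumes resource names of the form `ora.<node>.vip` as seen in this article, and the function name is made up for the example; SCAN VIPs are ignored because they are not tied to a node.

```python
import re

VIP_NAME = re.compile(r"^ora\.(?P<home>[\w-]+)\.vip$")


def failed_over_vips(output, nodes):
    """Map each node whose VIP runs elsewhere to the server currently hosting it."""
    moved = {}
    home = None
    for raw in output.splitlines():
        line = raw.strip()
        if not line:
            continue
        if line.startswith("ora."):
            match = VIP_NAME.match(line)
            candidate = match.group("home") if match else None
            # Only node VIPs count; ora.scan1.vip and other resources are skipped.
            home = candidate if candidate in nodes else None
            continue
        if home is None:
            continue
        fields = line.split()
        # Instance rows look like: 1 ONLINE INTERMEDIATE testdb4 FAILED OVER
        if len(fields) >= 4 and fields[0].isdigit() and fields[3] != home:
            moved[home] = fields[3]
    return moved


SAMPLE = """\
ora.scan1.vip
      1        ONLINE  ONLINE       testdb2
ora.testdb1.vip
      1        ONLINE  INTERMEDIATE testdb4                  FAILED OVER
ora.testdb2.vip
      1        ONLINE  ONLINE       testdb2
"""

print(failed_over_vips(SAMPLE, {"testdb1", "testdb2", "testdb3", "testdb4"}))
```

Running it before and after `ifconfig en1 up` gives a quick confirmation that the VIP has returned to its home node.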

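Test performed by killing the listener process.

Existing connections were not affected. New connections could not reach the instance on that node, and the application connected to another node through TAF or automatic reconnection.

The listener process restarted automatically.

Test performed by killing the pmon process.

After pmon was killed, the database instance crashed and then restarted automatically. Once the restart completed, sessions reconnected automatically.

Test performed by killing the cssd process.

After cssd was killed, the node rebooted and its VIP floated to another healthy node. Once the host was back up, CRS started automatically and the cluster reconfigured.

Test performed by killing the crsd process.

After crsd.bin was killed, the process was restarted automatically within one minute. How it works: a crsd crash is detected by orarootagent, and crsd is restarted automatically.

Test performed by killing the evmd process.

After evmd.bin was killed, the process was restarted automatically within one minute. How it works: an evmd crash is detected by ohasd, and evmd, orarootagent and crsd are restarted.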
