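Automated deployment of a binary Kubernetes cluster with Ansible

Ansible is an IT automation tool. It can configure systems, deploy software, and orchestrate more advanced IT tasks such as continuous deployment and rolling updates, which makes it well suited to managing enterprise IT infrastructure. Here I use Ansible to automate the deployment of a highly available Kubernetes v1.16 cluster (offline edition). Network access is still required, though, because the flannel, coredns, ingress, and dashboard add-ons are deployed as well and their images have to be pulled.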

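Automated deployment of a Kubernetes 1.16.0 cluster with Ansible

Introduction

Use Ansible to deploy a Kubernetes cluster automatically (single-master or multi-master), offline edition.

Software architecture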

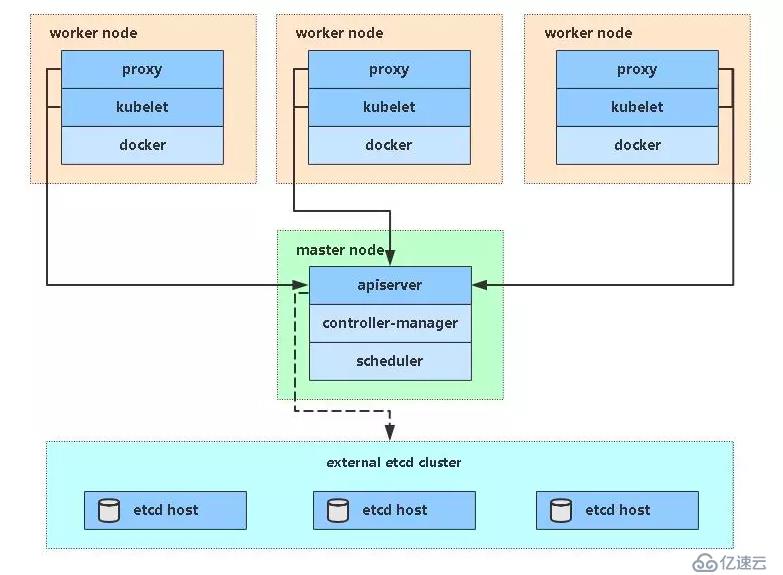

Architecture description

Single-master architecture

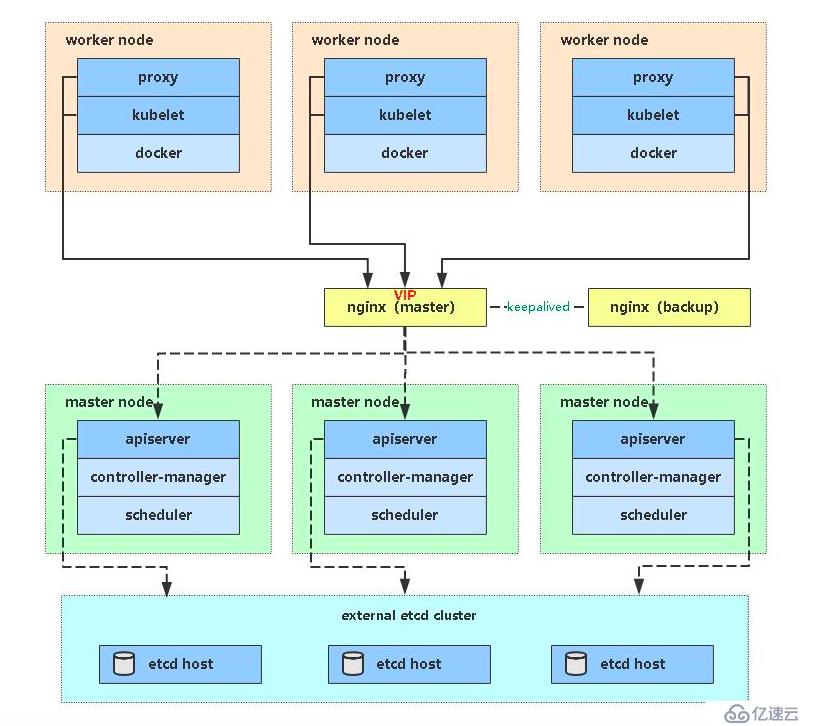

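Multi-master architecture

1. Installation

First set up a host running Ansible as the control node; the installation steps are omitted here.

Extract both downloaded files on the Ansible server. My working directory is /opt/, so both extracted directories go under /opt.

Edit the hosts file to choose a single-master or multi-master deployment, and edit the variables in group_vars/all.yml, changing the IPs that need to be changed.

2. Usage notes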

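Single master: 4 CPU cores, 8 GB RAM (1 master, 2 nodes, 1 Ansible host)

Multi-master: 4 CPU cores, 8 GB RAM (2 masters, 2 nodes, 1 Ansible host, 2 nginx hosts)

For a multi-master deployment, keepalived must also run on the nginx hosts. On cloud hosts, an SLB can be used instead.

1. System initialization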

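3. How the roles organize the deployment of each Kubernetes component

Authoring recommendations:

Download the required files

Make sure the system time is consistent across all nodes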
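One rough way to check that the node clocks agree is to read the epoch time from each host and look at the largest gap, since TLS certificates can be rejected when clocks drift apart. The sketch below assumes root SSH access to the nodes, and the host names in the usage comment are placeholders. Because the hosts are queried one after another, a gap of a second or two may only reflect SSH latency.

```shell
# Print one epoch timestamp per host given as an argument.
# Assumes root SSH access; hosts are queried sequentially.
node_epochs() {
    for host in "$@"; do
        ssh "root@$host" date +%s
    done
}

# Print the largest difference, in seconds, between the given timestamps.
max_skew() {
    printf '%s\n' "$@" | sort -n |
        awk 'NR == 1 { min = $1 } { max = $1 } END { print max - min }'
}

# Usage (host names are placeholders):
#   max_skew $(node_epochs k8s-master1 k8s-node1 k8s-node2)
```

If the reported gap is large, synchronizing the nodes against a common time source before deploying is the usual remedy.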

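4. Download the Ansible deployment files:

git clone git@gitee.com:zhaocheng172/ansible-k8s.git

Before pulling the code, please send me your public key; otherwise the clone will fail.

Download the software package and extract it: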

https://pan.baidu.com/s/1Wf9sFR4zkpx_D0BJbZK7ZQ

tar zxf binary_pkg.tar.gz
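Before extracting, it can be useful to see which top-level directories the archive will create, so that they end up where the playbooks expect them. The helper below only lists entries and changes nothing. Using /opt as the extraction target follows the working directory mentioned earlier and is otherwise an assumption.

```shell
# Print the distinct top-level entries of a gzip-compressed tar archive.
archive_top_level() {
    tar -tzf "$1" | cut -d/ -f1 | sort -u
}

# Usage:
#   archive_top_level binary_pkg.tar.gz
#   tar zxf binary_pkg.tar.gz -C /opt
```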

Modify the Ansible files

Edit the hosts file and change the IPs and names to match your plan.
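For orientation, an INI-style Ansible inventory for the multi-master layout might look like the sketch below. Everything in it is hypothetical: the group names, the node_name variable, and the 192.0.2.x addresses are placeholders rather than values taken from the repository, so the hosts file that ships with the playbooks remains the reference. The function writes to a path you choose, which leaves the real hosts file untouched.

```shell
# Write a HYPOTHETICAL multi-master inventory to the given path.
# Group names, the node_name variable, and all addresses are placeholders;
# compare them with the hosts file shipped in the repository.
write_sample_inventory() {
    cat > "$1" <<'EOF'
[master]
192.0.2.11 node_name=k8s-master1
192.0.2.12 node_name=k8s-master2

[node]
192.0.2.21 node_name=k8s-node1
192.0.2.22 node_name=k8s-node2

[lb]
192.0.2.31
192.0.2.32
EOF
}

# Usage:
#   write_sample_inventory /tmp/hosts.sample
#   ansible-inventory -i /tmp/hosts.sample --graph   # show the parsed groups
```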

vi hosts

Edit group_vars/all.yml and set nic to your network interface name and the IPs trusted by the certificates.
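If you are unsure which interface name to use for nic, one option is to look at the interface that carries the default IPv4 route on a node. This is a sketch under the assumption that the cluster traffic uses that same interface, which may not hold on hosts with several network cards. The function names are illustrative.

```shell
# Print the word that follows "dev" on the first input line containing it.
parse_default_nic() {
    awk '{ for (i = 1; i < NF; i++) if ($i == "dev") { print $(i + 1); exit } }'
}

# Print the interface that carries the default IPv4 route on this host.
default_nic() {
    ip -4 route show default | parse_default_nic
}

# Usage (run on a cluster node):
#   default_nic
```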

vim group_vars/all.yml

nic: eth0 (use the name of your own network interface)

k8s: the trusted IPs

5. One-command deployment

Single-master:

ansible-playbook -i hosts single-master-deploy.yml -uroot -k

Multi-master:

ansible-playbook -i hosts multi-master-deploy.yml -uroot -k
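Before running either playbook, it can help to confirm that Ansible reaches every host in the inventory and that the playbook parses. The sketch below uses the ping module and the --syntax-check option with the same inventory and login options as the commands above. The function name is illustrative, and it assumes every host in the inventory accepts the same root password.

```shell
# Check connectivity to all inventory hosts, then check the playbook syntax.
# $1 = inventory file, $2 = playbook file
preflight() {
    ansible -i "$1" all -m ping -uroot -k &&
        ansible-playbook -i "$1" "$2" --syntax-check
}

# Usage:
#   preflight hosts single-master-deploy.yml
```

The syntax check does not contact the nodes, so it only runs once the ping step has succeeded.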

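6. Deployment control

If a stage of the installation fails, you can re-run and test just that stage.

For example, to run only the tasks tagged master:

ansible-playbook -i hosts single-master-deploy.yml -uroot -k --tags master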
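To find out which tags a playbook defines, ansible-playbook offers --list-tags, which prints them without running any task. The sketch below wraps that and the tagged re-run in two small helpers. The function names are illustrative, and which tags exist depends on how the playbooks are written; master is the only one shown in this article.

```shell
# List the tags defined in a playbook without executing it.
# $1 = inventory file, $2 = playbook file
list_tags() {
    ansible-playbook -i "$1" "$2" --list-tags
}

# Re-run only the tasks that carry the given tag.
# $1 = inventory file, $2 = playbook file, $3 = tag name
rerun_stage() {
    ansible-playbook -i "$1" "$2" -uroot -k --tags "$3"
}

# Usage:
#   list_tags hosts single-master-deploy.yml
#   rerun_stage hosts single-master-deploy.yml master
```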

Result after deploying a single master

[root@k8s-master1 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master1 Ready <none> 2d3h v1.16.0

k8s-node1 Ready <none> 2d3h v1.16.0

k8s-node2 Ready <none> 2d3h v1.16.0

[root@k8s-master1 ~]# kubectl get cs

NAME AGE

controller-manager <unknown>

scheduler <unknown>

etcd-2 <unknown>

etcd-0 <unknown>

etcd-1 <unknown>

[root@k8s-master1 ~]# kubectl get pod,svc -A

NAMESPACE NAME READY STATUS RESTARTS AGE

ingress-nginx pod/nginx-ingress-controller-8zp8r 1/1 Running 0 2d3h

ingress-nginx pod/nginx-ingress-controller-bfgj6 1/1 Running 0 2d3h

ingress-nginx pod/nginx-ingress-controller-n5k22 1/1 Running 0 2d3h

kube-system pod/coredns-59fb8d54d6-n6m5w 1/1 Running 0 2d3h

kube-system pod/kube-flannel-ds-amd64-jwvw6 1/1 Running 0 2d3h

kube-system pod/kube-flannel-ds-amd64-m92sg 1/1 Running 0 2d3h

kube-system pod/kube-flannel-ds-amd64-xwf2h 1/1 Running 0 2d3h

kubernetes-dashboard pod/dashboard-metrics-scraper-566cddb686-smw6p 1/1 Running 0 2d3h

kubernetes-dashboard pod/kubernetes-dashboard-c4bc5bd44-zgd82 1/1 Running 0 2d3h

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default service/kubernetes ClusterIP 10.0.0.1 <none> 443/TCP 2d3h

ingress-nginx service/ingress-nginx ClusterIP 10.0.0.22 <none> 80/TCP,443/TCP 2d3h

kube-system service/kube-dns ClusterIP 10.0.0.2 <none> 53/UDP,53/TCP 2d3h

kubernetes-dashboard service/dashboard-metrics-scraper ClusterIP 10.0.0.176 <none> 8000/TCP 2d3h

kubernetes-dashboard service/kubernetes-dashboard NodePort 10.0.0.72 <none> 443:30001/TCP 2d3h

Result after deploying multiple masters

[root@k8s-master1 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master1 Ready <none> 6m18s v1.16.0

k8s-master2 Ready <none> 6m17s v1.16.0

k8s-node1 Ready <none> 6m10s v1.16.0

k8s-node2 Ready <none> 6m16s v1.16.0

[root@k8s-master1 ~]# kubectl get cs

NAME AGE

controller-manager <unknown>

scheduler <unknown>

etcd-2 <unknown>

etcd-1 <unknown>

etcd-0 <unknown>

[root@k8s-master1 ~]# kubectl get pod,svc -A

NAMESPACE NAME READY STATUS RESTARTS AGE

ingress-nginx pod/nginx-ingress-controller-4nf6j 1/1 Running 0 45s

ingress-nginx pod/nginx-ingress-controller-5fknt 1/1 Running 0 45s

ingress-nginx pod/nginx-ingress-controller-lwbkz 1/1 Running 0 45s

ingress-nginx pod/nginx-ingress-controller-v8k8n 1/1 Running 0 45s

kube-system pod/coredns-59fb8d54d6-959xj 1/1 Running 0 6m44s

kube-system pod/kube-flannel-ds-amd64-2hnzq 1/1 Running 0 6m31s

kube-system pod/kube-flannel-ds-amd64-64hqc 1/1 Running 0 6m25s

kube-system pod/kube-flannel-ds-amd64-p9d8w 1/1 Running 0 6m32s

kube-system pod/kube-flannel-ds-amd64-pchp5 1/1 Running 0 6m33s

kubernetes-dashboard pod/dashboard-metrics-scraper-566cddb686-kf4qq 1/1 Running 0 32s

kubernetes-dashboard pod/kubernetes-dashboard-c4bc5bd44-dqfb8 1/1 Running 0 32s

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default service/kubernetes ClusterIP 10.0.0.1 <none> 443/TCP 19m

ingress-nginx service/ingress-nginx ClusterIP 10.0.0.53 <none> 80/TCP,443/TCP 45s

kube-system service/kube-dns ClusterIP 10.0.0.2 <none> 53/UDP,53/TCP 6m47s

kubernetes-dashboard service/dashboard-metrics-scraper ClusterIP 10.0.0.147 <none> 8000/TCP 32s

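kubernetes-dashboard service/kubernetes-dashboard NodePort 10.0.0.176 <none> 443:30001/TCP 32s

Adding a Node

To simulate a situation that calls for another node, I request more resources than the cluster can allocate, so some pods stay in the Pending state.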

[root@k8s-master1 ~]# kubectl run web --image=nginx --replicas=6 --requests="cpu=1,memory=256Mi"

[root@k8s-master1 ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

web-944cddf48-6qhcl 1/1 Running 0 15m

web-944cddf48-7ldsv 1/1 Running 0 15m

web-944cddf48-7nv9p 0/1 Pending 0 2s

web-944cddf48-b299n 1/1 Running 0 15m

web-944cddf48-nsxgg 0/1 Pending 0 15m

web-944cddf48-pl4zt 1/1 Running 0 15m

web-944cddf48-t8fqt 1/1 Running 0 15m

At this point the resource pool is exhausted and the remaining pods cannot be scheduled onto the current nodes, so we need to add another node.
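To confirm that a pod is Pending because of resources rather than something else, the scheduler events on the pod are the place to look. The sketch below lists the Pending pods in the current namespace with a field selector and prints the Events section of each one. The function names are illustrative, and the exact wording of the events varies between Kubernetes versions.

```shell
# Print the names (pod/<name>) of Pending pods in the current namespace.
pending_pods() {
    kubectl get pods --field-selector=status.phase=Pending -o name
}

# Print the Events section of every Pending pod.
pending_events() {
    for pod in $(pending_pods); do
        echo "== $pod"
        kubectl describe "$pod" | sed -n '/^Events:/,$p'
    done
}

# Usage:
#   pending_events
```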

Run the playbook, specifying the new node

[root@ansible ansible-install-k8s-master]# ansible-playbook -i hosts add-node.yml -uroot -k

Check that the new node's request to join has been received and approved

[root@k8s-master1 ~]# kubectl get csr

NAME AGE REQUESTOR CONDITION

node-csr-0i7BzFaf8NyG_cdx_hqDmWg8nd4FHQOqIxKa45x3BJU 45m kubelet-bootstrap Approved,Issued

Check the node status

[root@k8s-master1 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master1 Ready <none> 7d v1.16.0

k8s-node1 Ready <none> 7d v1.16.0

k8s-node2 Ready <none> 7d v1.16.0

k8s-node3 Ready <none> 2m52s v1.16.0

Check that the pending pods have been scheduled onto the new node automatically

[root@k8s-master1 ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

web-944cddf48-6qhcl 1/1 Running 0 80m

web-944cddf48-7ldsv 1/1 Running 0 80m

web-944cddf48-7nv9p 1/1 Running 0 65m

web-944cddf48-b299n 1/1 Running 0 80m

web-944cddf48-nsxgg 1/1 Running 0 80m

web-944cddf48-pl4zt 1/1 Running 0 80m

web-944cddf48-t8fqt 1/1 Running 0 80m
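The listing above shows that every pod is Running but not where each one runs. To see which pods landed on the new node, the pod list can be filtered by node name; the -o wide output also adds a NODE column. This is a sketch with an illustrative function name, and k8s-node3 is the node added in this walkthrough.

```shell
# List the pods in the current namespace that run on the given node.
# $1 = node name as shown by "kubectl get node"
pods_on_node() {
    kubectl get pods -o wide --field-selector spec.nodeName="$1"
}

# Usage:
#   pods_on_node k8s-node3
```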