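This article walks through the steps for upgrading a Kubernetes cluster, both offline and online. After reading it, you should have a working understanding of how Kubernetes upgrades are carried out.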

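Kubernetes iterates quickly: a new version is released roughly every three months, and many new features land in each release. To keep a cluster in line with the features of the community version, it has to be upgraded. The community has standardized cluster upgrades on the kubeadm tool, and the steps are straightforward. First, let's look at which components need to be upgraded when upgrading a Kubernetes cluster: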

Worker nodes: upgrade the container runtime (such as Docker), the kubelet, and kube-proxy on each worker node.

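Upgrades generally fall into two categories: patch upgrades and minor-version upgrades. A patch upgrade stays within one minor series, for example 1.14.1 to 1.14.2, and patch releases can be skipped, for example going directly from 1.14.1 to 1.14.3. A minor-version upgrade moves to the next series, for example 1.14.x to 1.15.x. This article upgrades 1.14.1 to 1.15.1 offline. Before upgrading, the following conditions need to be met: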

1. Check the current version. The version deployed on the system is 1.14.1.

[root@node-1 ~]# kubectl version

Client Version: version.Info{Major:"1", Minor:"14", GitVersion:"v1.14.1", GitCommit:"b7394102d6ef778017f2ca4046abbaa23b88c290", GitTreeState:"clean", BuildDate:"2019-04-08T17:11:31Z", GoVersion:"go1.12.1", Compiler:"gc", Platform:"linux/amd64"}

Server Version: version.Info{Major:"1", Minor:"14", GitVersion:"v1.14.1", GitCommit:"b7394102d6ef778017f2ca4046abbaa23b88c290", GitTreeState:"clean", BuildDate:"2019-04-08T17:02:58Z", GoVersion:"go1.12.1", Compiler:"gc", Platform:"linux/amd64"}

[root@node-1 ~]# kubeadm version

kubeadm version: &version.Info{Major:"1", Minor:"14", GitVersion:"v1.14.1", GitCommit:"b7394102d6ef778017f2ca4046abbaa23b88c290", GitTreeState:"clean", BuildDate:"2019-04-08T17:08:49Z", GoVersion:"go1.12.1", Compiler:"gc", Platform:"linux/amd64"}

2. Check the node versions. The kubelet and kube-proxy on each node are running 1.14.1.

[root@node-1 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

node-1 Ready master 25h v1.14.1

node-2 Ready <none> 25h v1.14.1

node-3 Ready <none> 25h v1.14.1

3. Check the status of the other components and make sure the components and applications are currently healthy.

[root@node-1 ~]# kubectl get componentstatuses

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health":"true"}

[root@node-1 ~]#

[root@node-1 ~]# kubectl get deployments --all-namespaces

NAMESPACE NAME READY UP-TO-DATE AVAILABLE AGE

default demo 3/3 3 3 37m

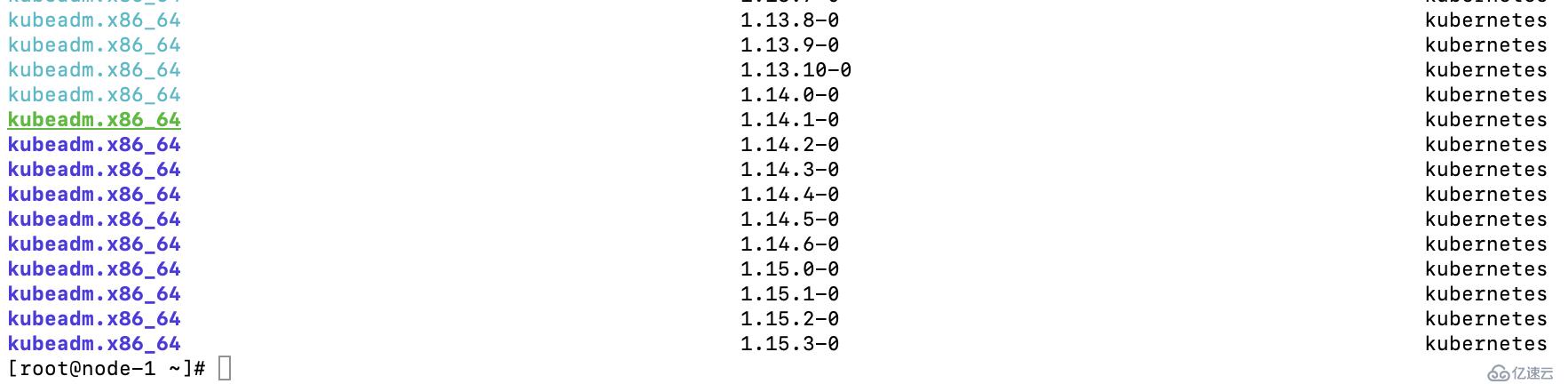

kube-system coredns 2/2 2 2 25h4、查看kubernetes最新版本(配置kubernetes的yum源,需要合理上網才可以訪問),使用yum list --showduplicates kubeadm --disableexcludes=kubernetes查看當前能升級版本,綠色為當前版本,藍色為可以升級的版本,如下圖:
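When picking a target from the versions listed above, it can help to check which kind of upgrade it would be. The following is a minimal sketch and not part of the original procedure: the function name classify_upgrade is hypothetical, and the rule that a minor-version upgrade may cross only one minor version at a time comes from kubeadm's documented upgrade constraints rather than from this article.

```shell
# classify_upgrade CURRENT TARGET
# Prints "patch", "minor", or "unsupported" for versions such as 1.14.1 or v1.15.1.
# Hypothetical helper; assumes plain MAJOR.MINOR.PATCH versions without suffixes.
classify_upgrade() {
  cur=${1#v}
  tgt=${2#v}

  # Split MAJOR.MINOR.PATCH using parameter expansion only (POSIX sh).
  cur_major=${cur%%.*}; cur_rest=${cur#*.}
  cur_minor=${cur_rest%%.*}; cur_patch=${cur_rest#*.}
  tgt_major=${tgt%%.*}; tgt_rest=${tgt#*.}
  tgt_minor=${tgt_rest%%.*}; tgt_patch=${tgt_rest#*.}

  if [ "$cur_major" -ne "$tgt_major" ]; then
    echo unsupported
  elif [ "$tgt_minor" -eq "$cur_minor" ] && [ "$tgt_patch" -gt "$cur_patch" ]; then
    # Same minor series, newer patch: patch releases may be skipped.
    echo patch
  elif [ "$tgt_minor" -eq $((cur_minor + 1)) ]; then
    # Exactly one minor version ahead.
    echo minor
  else
    # Downgrades, identical versions, and skipped minor versions.
    echo unsupported
  fi
}
```

For example, classify_upgrade 1.14.1 1.15.1 reports a minor-version upgrade, which is the case this article walks through.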

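1. Import the installation images. First download the Kubernetes image bundle from the network drive at https://pan.baidu.com/s/1hw8Q0Vf3xvhKoEiVtMi6SA and upload it to the cluster. Extract it, change into the v1.15.1 directory, and load the images on all three nodes. Using node-2 as an example: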

Load the images:

[root@node-2 v1.15.1]# docker image load -i kube-apiserver\:v1.15.1.tar

[root@node-2 v1.15.1]# docker image load -i kube-scheduler\:v1.15.1.tar

[root@node-2 v1.15.1]# docker image load -i kube-controller-manager\:v1.15.1.tar

[root@node-2 v1.15.1]# docker image load -i kube-proxy\:v1.15.1.tar
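Since the same load has to be repeated on every node, the four commands above can be folded into a loop. This is a minimal sketch under stated assumptions: the function name load_images and the DOCKER override variable are hypothetical and exist only so the loop can be dry-run; the docker image load -i invocation itself is the one used above.

```shell
# load_images DIR
# Loads every .tar archive found in DIR (default: current directory)
# into the local Docker image store.
# DOCKER is a hypothetical override, e.g. DOCKER=echo for a dry run.
load_images() {
  dir=${1:-.}
  for archive in "$dir"/*.tar; do
    # If the glob matched nothing, the literal pattern is left behind; skip it.
    [ -e "$archive" ] || continue
    ${DOCKER:-docker} image load -i "$archive" || return 1
  done
}
```

Running load_images . from inside the v1.15.1 directory loads all archives in one pass and stops at the first failure.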

List the images now present on the system:

[root@node-1 ~]# docker image list

REPOSITORY TAG IMAGE ID CREATED SIZE

k8s.gcr.io/kube-proxy v1.15.1 89a062da739d 8 weeks ago 82.4MB

k8s.gcr.io/kube-controller-manager v1.15.1 d75082f1d121 8 weeks ago 159MB

k8s.gcr.io/kube-scheduler v1.15.1 b0b3c4c404da 8 weeks ago 81.1MB

k8s.gcr.io/kube-apiserver v1.15.1 68c3eb07bfc3 8 weeks ago 207MB

k8s.gcr.io/kube-proxy v1.14.1 20a2d7035165 5 months ago 82.1MB

k8s.gcr.io/kube-apiserver v1.14.1 cfaa4ad74c37 5 months ago 210MB

k8s.gcr.io/kube-scheduler v1.14.1 8931473d5bdb 5 months ago 81.6MB

k8s.gcr.io/kube-controller-manager v1.14.1 efb3887b411d 5 months ago 158MB

quay.io/coreos/flannel v0.11.0-amd64 ff281650a721 7 months ago 52.6MB

k8s.gcr.io/coredns 1.3.1 eb516548c180 8 months ago 40.3MB

k8s.gcr.io/etcd 3.3.10 2c4adeb21b4f 9 months ago 258MB

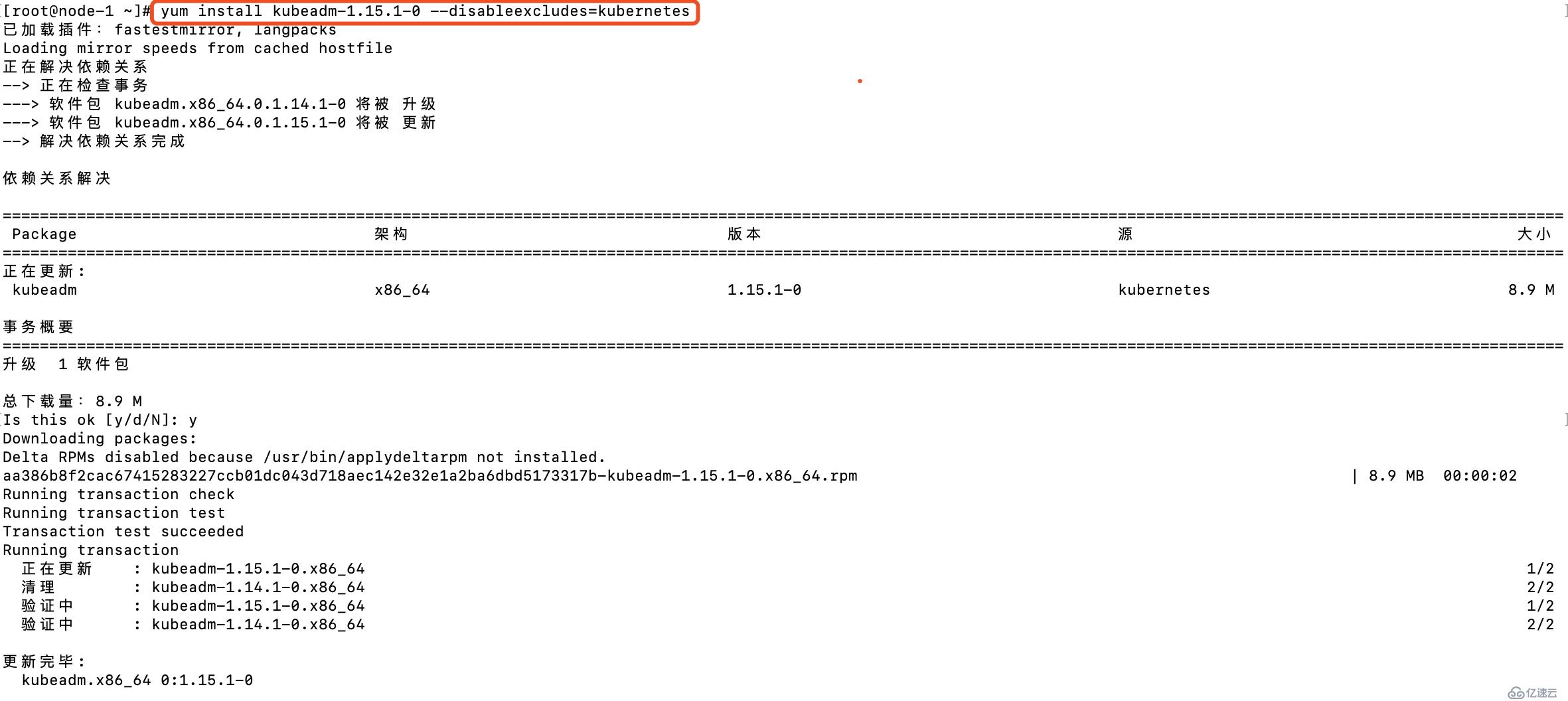

k8s.gcr.io/pause 3.1 da86e6ba6ca1 21 months ago 742kB2、更新kubeadm版本至1.15.1,國內可以參考https://blog.51cto.com/2157217/1983992設置kubernetes源

3. Verify the kubeadm version. It has been upgraded to 1.15.1.

[root@node-1 ~]# kubeadm version

kubeadm version: &version.Info{Major:"1", Minor:"15", GitVersion:"v1.15.1", GitCommit:"4485c6f18cee9a5d3c3b4e523bd27972b1b53892", GitTreeState:"clean", BuildDate:"2019-07-18T09:15:32Z", GoVersion:"go1.12.5", Compiler:"gc", Platform:"linux/amd64"}

4. Review the upgrade plan. kubeadm can show the upgrade plan for the current cluster, including the latest release in the current minor series and the latest community release.

[root@node-1 ~]# kubeadm upgrade plan

[upgrade/config] Making sure the configuration is correct:

[upgrade/config] Reading configuration from the cluster...

[upgrade/config] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[preflight] Running pre-flight checks.

[upgrade] Making sure the cluster is healthy:

[upgrade] Fetching available versions to upgrade to

[upgrade/versions] Cluster version: v1.14.1 # current cluster version

[upgrade/versions] kubeadm version: v1.15.1 # current kubeadm version

[upgrade/versions] Latest stable version: v1.15.3 # latest community release

[upgrade/versions] Latest version in the v1.14 series: v1.14.6 # latest release in the 1.14.x series

Components that must be upgraded manually after you have upgraded the control plane with 'kubeadm upgrade apply':

COMPONENT CURRENT AVAILABLE

Kubelet 3 x v1.14.1 v1.14.6

Upgrade to the latest version in the v1.14 series:

COMPONENT CURRENT AVAILABLE # upgradable versions: 1.14.1 can currently be upgraded to 1.14.6

API Server v1.14.1 v1.14.6

Controller Manager v1.14.1 v1.14.6

Scheduler v1.14.1 v1.14.6

Kube Proxy v1.14.1 v1.14.6

CoreDNS 1.3.1 1.3.1

Etcd 3.3.10 3.3.10

You can now apply the upgrade by executing the following command:

kubeadm upgrade apply v1.14.6 # command to run to upgrade to 1.14.6

_____________________________________________________________________

Components that must be upgraded manually after you have upgraded the control plane with 'kubeadm upgrade apply':

COMPONENT CURRENT AVAILABLE

Kubelet 3 x v1.14.1 v1.15.3

Upgrade to the latest stable version:

COMPONENT CURRENT AVAILABLE # minor-version upgrade target; the latest release is currently 1.15.3

API Server v1.14.1 v1.15.3

Controller Manager v1.14.1 v1.15.3

Scheduler v1.14.1 v1.15.3

Kube Proxy v1.14.1 v1.15.3

CoreDNS 1.3.1 1.3.1

Etcd 3.3.10 3.3.10

You can now apply the upgrade by executing the following command:

kubeadm upgrade apply v1.15.3 # command to run to upgrade to the latest community release

Note: Before you can perform this upgrade, you have to update kubeadm to v1.15.3.

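_____________________________________________________________________

5. The images for the latest release have not been downloaded here, so this article upgrades to 1.15.1 as the example. Upgrading to other versions is similar, provided the cluster already has the corresponding images. The upgrade also renews certificates; this can be turned off with --certificate-renewal=false. Upgrade to 1.15.1 as follows: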

[root@node-1 ~]# kubeadm upgrade apply v1.15.1

[upgrade/config] Making sure the configuration is correct:

[upgrade/config] Reading configuration from the cluster...

[upgrade/config] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[preflight] Running pre-flight checks.

[upgrade] Making sure the cluster is healthy:

[upgrade/version] You have chosen to change the cluster version to "v1.15.1"

[upgrade/versions] Cluster version: v1.14.1

[upgrade/versions] kubeadm version: v1.15.1

[upgrade/confirm] Are you sure you want to proceed with the upgrade? [y/N]: y # version upgrade summary; confirm to proceed

[upgrade/prepull] Will prepull images for components [kube-apiserver kube-controller-manager kube-scheduler etcd]

[upgrade/prepull] Prepulling image for component etcd.

[upgrade/prepull] Prepulling image for component kube-apiserver.

[upgrade/prepull] Prepulling image for component kube-controller-manager.

[upgrade/prepull] Prepulling image for component kube-scheduler.

[apiclient] Found 0 Pods for label selector k8s-app=upgrade-prepull-kube-controller-manager

[apiclient] Found 0 Pods for label selector k8s-app=upgrade-prepull-kube-apiserver

[apiclient] Found 0 Pods for label selector k8s-app=upgrade-prepull-etcd

[apiclient] Found 0 Pods for label selector k8s-app=upgrade-prepull-kube-scheduler

[apiclient] Found 1 Pods for label selector k8s-app=upgrade-prepull-kube-controller-manager

[apiclient] Found 1 Pods for label selector k8s-app=upgrade-prepull-kube-scheduler

[apiclient] Found 1 Pods for label selector k8s-app=upgrade-prepull-etcd

[apiclient] Found 1 Pods for label selector k8s-app=upgrade-prepull-kube-apiserver

[upgrade/prepull] Prepulled image for component kube-controller-manager.

[upgrade/prepull] Prepulled image for component kube-scheduler.

[upgrade/prepull] Prepulled image for component etcd.

[upgrade/prepull] Prepulled image for component kube-apiserver. # image pull steps

[upgrade/prepull] Successfully prepulled the images for all the control plane components

[upgrade/apply] Upgrading your Static Pod-hosted control plane to version "v1.15.1"...

Static pod: kube-apiserver-node-1 hash: bdf7ffba48feb2fc4c7676e7525066fd

Static pod: kube-controller-manager-node-1 hash: ecf9c37413eace225bc60becabeddb3b

Static pod: kube-scheduler-node-1 hash: f44110a0ca540009109bfc32a7eb0baa

[upgrade/etcd] Upgrading to TLS for etcd # start upgrading the static Pods on the master

[upgrade/staticpods] Writing new Static Pod manifests to "/etc/kubernetes/tmp/kubeadm-upgraded-manifests122291749"

[upgrade/staticpods] Preparing for "kube-apiserver" upgrade

[upgrade/staticpods] Renewing apiserver certificate

[upgrade/staticpods] Renewing apiserver-kubelet-client certificate

[upgrade/staticpods] Renewing front-proxy-client certificate

[upgrade/staticpods] Renewing apiserver-etcd-client certificate # renew certificates

[upgrade/staticpods] Moved new manifest to "/etc/kubernetes/manifests/kube-apiserver.yaml" and backed up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2019-09-15-12-41-40/kube-apiserver.yaml"

[upgrade/staticpods] Waiting for the kubelet to restart the component

[upgrade/staticpods] This might take a minute or longer depending on the component/version gap (timeout 5m0s)

Static pod: kube-apiserver-node-1 hash: bdf7ffba48feb2fc4c7676e7525066fd

Static pod: kube-apiserver-node-1 hash: bdf7ffba48feb2fc4c7676e7525066fd

Static pod: kube-apiserver-node-1 hash: bdf7ffba48feb2fc4c7676e7525066fd

Static pod: kube-apiserver-node-1 hash: 4cd1e2acc44e2d908fd2c7b307bfce59

[apiclient] Found 1 Pods for label selector component=kube-apiserver

[upgrade/staticpods] Component "kube-apiserver" upgraded successfully! # upgraded successfully

[upgrade/staticpods] Preparing for "kube-controller-manager" upgrade

[upgrade/staticpods] Renewing controller-manager.conf certificate

[upgrade/staticpods] Moved new manifest to "/etc/kubernetes/manifests/kube-controller-manager.yaml" and backed up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2019-09-15-12-41-40/kube-controller-manager.yaml"

[upgrade/staticpods] Waiting for the kubelet to restart the component

[upgrade/staticpods] This might take a minute or longer depending on the component/version gap (timeout 5m0s)

Static pod: kube-controller-manager-node-1 hash: ecf9c37413eace225bc60becabeddb3b

Static pod: kube-controller-manager-node-1 hash: 17b23c8c6fcf9b9f8a3061b3a2fbf633

[apiclient] Found 1 Pods for label selector component=kube-controller-manager

[upgrade/staticpods] Component "kube-controller-manager" upgraded successfully! # upgraded successfully

[upgrade/staticpods] Preparing for "kube-scheduler" upgrade

[upgrade/staticpods] Renewing scheduler.conf certificate

[upgrade/staticpods] Moved new manifest to "/etc/kubernetes/manifests/kube-scheduler.yaml" and backed up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2019-09-15-12-41-40/kube-scheduler.yaml"

[upgrade/staticpods] Waiting for the kubelet to restart the component

[upgrade/staticpods] This might take a minute or longer depending on the component/version gap (timeout 5m0s)

Static pod: kube-scheduler-node-1 hash: f44110a0ca540009109bfc32a7eb0baa

Static pod: kube-scheduler-node-1 hash: 18859150495c74ad1b9f283da804a3db

[apiclient] Found 1 Pods for label selector component=kube-scheduler

[upgrade/staticpods] Component "kube-scheduler" upgraded successfully! # upgraded successfully

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.15" in namespace kube-system with the configuration for the kubelets in the cluster

[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.15" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

[upgrade/successful] SUCCESS! Your cluster was upgraded to "v1.15.1". Enjoy! # upgrade success message

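[upgrade/kubelet] Now that your control plane is upgraded, please proceed with upgrading your kubelets if you haven't already done so.

6. The output above shows that the master was upgraded successfully. To upgrade to a specific version, simply give that version after apply. Once the master is upgraded, you can upgrade the plugins; see the upgrade steps for your network plugin, such as flannel or calico. Upgrading them amounts to updating the corresponding DaemonSets.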

7. Upgrade the kubelet and restart the kubelet service. This completes the upgrade of the master node.

[root@node-1 ~]# yum install -y kubelet-1.15.1-0 kubectl-1.15.1-0 --disableexcludes=kubernetes

[root@node-1 ~]# systemctl daemon-reload

[root@node-1 ~]# systemctl restart kubelet

1. Upgrade the kubeadm and kubelet packages

[root@node-2 ~]# yum install -y kubelet-1.15.1-0 --disableexcludes=kubernetes

[root@node-2 ~]# yum install -y kubeadm-1.15.1-0 --disableexcludes=kubernetes

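[root@node-2 ~]# yum install -y kubectl-1.15.1-0 --disableexcludes=kubernetes

2. Put the node into maintenance mode and evict the applications running on the worker node. Everything except DaemonSet-managed Pods is moved to other nodes.

Cordon and drain the node: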

[root@node-1 ~]# kubectl drain node-2 --ignore-daemonsets

node/node-2 cordoned

WARNING: ignoring DaemonSet-managed Pods: kube-system/kube-flannel-ds-amd64-tm6wj, kube-system/kube-proxy-2wqhj

evicting pod "coredns-5c98db65d4-86gg7"

evicting pod "demo-7b86696648-djvgb"

pod/demo-7b86696648-djvgb evicted

pod/coredns-5c98db65d4-86gg7 evicted

node/node-2 evicted

Check the nodes. node-2 now carries the SchedulingDisabled flag, which means no new Pods will be scheduled onto it.

[root@node-1 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

node-1 Ready master 26h v1.15.1

node-2 Ready,SchedulingDisabled <none> 26h v1.14.1

node-3 Ready <none> 26h v1.14.1

Check the Pods on node-2. The evicted Pods have been moved to other nodes, and only the DaemonSet-managed Pods remain on node-2.

[root@node-1 ~]# kubectl get pods --all-namespaces -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

default demo-7b86696648-6f22r 1/1 Running 0 30s 10.244.2.5 node-3 <none> <none>

default demo-7b86696648-fjmxn 1/1 Running 0 116m 10.244.2.2 node-3 <none> <none>

default demo-7b86696648-nwwxf 1/1 Running 0 116m 10.244.2.3 node-3 <none> <none>

kube-system coredns-5c98db65d4-cqbbl 1/1 Running 0 30s 10.244.0.6 node-1 <none> <none>

kube-system coredns-5c98db65d4-g59qt 1/1 Running 2 28m 10.244.2.4 node-3 <none> <none>

kube-system etcd-node-1 1/1 Running 0 13m 10.254.100.101 node-1 <none> <none>

kube-system kube-apiserver-node-1 1/1 Running 0 13m 10.254.100.101 node-1 <none> <none>

kube-system kube-controller-manager-node-1 1/1 Running 0 13m 10.254.100.101 node-1 <none> <none>

kube-system kube-flannel-ds-amd64-99tjl 1/1 Running 1 26h 10.254.100.101 node-1 <none> <none>

kube-system kube-flannel-ds-amd64-jp594 1/1 Running 0 26h 10.254.100.103 node-3 <none> <none>

kube-system kube-flannel-ds-amd64-tm6wj 1/1 Running 0 26h 10.254.100.102 node-2 <none> <none>

kube-system kube-proxy-2wqhj 1/1 Running 0 28m 10.254.100.102 node-2 <none> <none>

kube-system kube-proxy-k7c4f 1/1 Running 1 27m 10.254.100.101 node-1 <none> <none>

kube-system kube-proxy-zffgq 1/1 Running 0 28m 10.254.100.103 node-3 <none> <none>

kube-system kube-scheduler-node-1 1/1 Running 0 13m 10.254.100.101 node-1 <none> <none>

3. Upgrade the worker node

[root@node-2 ~]# kubeadm upgrade node

[upgrade] Reading configuration from the cluster...

[upgrade] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[upgrade] Skipping phase. Not a control plane node

[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.15" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[upgrade] The configuration for this node was successfully updated!

[upgrade] Now you should go ahead and upgrade the kubelet package using your package manager.

4. Restart the kubelet service

[root@node-2 ~]# systemctl daemon-reload

[root@node-2 ~]# systemctl restart kubelet

5. Remove the scheduling-disabled flag so that the worker node can be scheduled normally again

[root@node-1 ~]# kubectl uncordon node-2

node/node-2 uncordoned

[root@node-1 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

node-1 Ready master 27h v1.15.1

node-2 Ready <none> 27h v1.15.1 # upgraded successfully

node-3 Ready <none> 27h v1.14.1

Upgrade node-3 by following the same steps. The versions of all nodes after the upgrade are shown below:

[root@node-1 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

node-1 Ready master 27h v1.15.1

node-2 Ready <none> 27h v1.15.1

node-3 Ready <none> 27h v1.15.1

1. Actions performed by kubeadm upgrade apply

2. Actions performed by kubeadm upgrade node

As of 2019-09-15, the latest Kubernetes community release is 1.15.3. This section demonstrates upgrading the cluster to 1.15.3 online. The steps are similar to those above.

1. Install the latest packages: kubeadm, kubelet, and kubectl. All three nodes need them installed.

[root@node-1 ~]# yum install kubeadm kubectl kubelet

Loaded plugins: fastestmirror, langpacks

Loading mirror speeds from cached hostfile

Resolving Dependencies

--> Running transaction check

---> Package kubeadm.x86_64 0:1.15.1-0 will be updated

---> Package kubeadm.x86_64 0:1.15.3-0 will be an update

---> Package kubectl.x86_64 0:1.15.1-0 will be updated

---> Package kubectl.x86_64 0:1.15.3-0 will be an update

---> Package kubelet.x86_64 0:1.15.1-0 will be updated

---> Package kubelet.x86_64 0:1.15.3-0 will be an update

--> Finished Dependency Resolution

Dependencies Resolved

========================================================================================================================================================================

Package Arch Version Repository Size

========================================================================================================================================================================

Updating:

kubeadm x86_64 1.15.3-0 kubernetes 8.9 M

kubectl x86_64 1.15.3-0 kubernetes 9.5 M

kubelet x86_64 1.15.3-0 kubernetes 22 M

Transaction Summary

========================================================================================================================================================================

Upgrade 3 Packages

2. Upgrade the master node

Review the upgrade plan

[root@node-1 ~]# kubeadm upgrade plan

[upgrade/config] Making sure the configuration is correct:

[upgrade/config] Reading configuration from the cluster...

[upgrade/config] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[preflight] Running pre-flight checks.

[upgrade] Making sure the cluster is healthy:

[upgrade] Fetching available versions to upgrade to

[upgrade/versions] Cluster version: v1.15.1

[upgrade/versions] kubeadm version: v1.15.3

[upgrade/versions] Latest stable version: v1.15.3

[upgrade/versions] Latest version in the v1.15 series: v1.15.3

Components that must be upgraded manually after you have upgraded the control plane with 'kubeadm upgrade apply':

COMPONENT CURRENT AVAILABLE

Kubelet 3 x v1.15.1 v1.15.3

Upgrade to the latest version in the v1.15 series:

COMPONENT CURRENT AVAILABLE

API Server v1.15.1 v1.15.3

Controller Manager v1.15.1 v1.15.3

Scheduler v1.15.1 v1.15.3

Kube Proxy v1.15.1 v1.15.3

CoreDNS 1.3.1 1.3.1

Etcd 3.3.10 3.3.10

You can now apply the upgrade by executing the following command:

kubeadm upgrade apply v1.15.3

_____________________________________________________________________

Upgrade the master node

[root@node-1 ~]# kubeadm upgrade apply v1.15.3

3. Upgrade the worker nodes, upgrading node-2 and node-3 in turn

Mark the node unschedulable and evict its Pods

[root@node-1 ~]# kubectl drain node-2 --ignore-daemonsets

Run the upgrade

[root@node-2 ~]# kubeadm upgrade node

[root@node-2 ~]# systemctl daemon-reload

[root@node-2 ~]# systemctl restart kubelet

Remove the scheduling-disabled flag

[root@node-1 ~]# kubectl uncordon node-2

node/node-2 uncordoned
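Because the same drain, upgrade, and uncordon sequence is repeated for each worker, it can be wrapped in a small function run from node-1. This is a minimal sketch under stated assumptions: the function name upgrade_worker and the RUN dry-run variable are hypothetical, and reaching the worker over ssh as root is an assumption (the article runs the kubeadm and systemctl commands in a shell on the worker itself).

```shell
# upgrade_worker NODE
# Drains NODE, upgrades its kubelet configuration, restarts the kubelet,
# and makes the node schedulable again.
# RUN is a hypothetical dry-run knob: RUN=echo prints each command
# instead of executing it.
upgrade_worker() {
  node=$1

  # Cordon the node and evict everything except DaemonSet-managed Pods.
  ${RUN:-} kubectl drain "$node" --ignore-daemonsets || return 1

  # Assumption: passwordless ssh to the worker as root is available.
  ${RUN:-} ssh "root@$node" "kubeadm upgrade node && systemctl daemon-reload && systemctl restart kubelet" || return 1

  # Allow Pods to be scheduled onto the node again.
  ${RUN:-} kubectl uncordon "$node"
}
```

A loop such as for n in node-2 node-3; do upgrade_worker "$n" || break; done then handles the workers one at a time. If the remote step fails, the function returns early and the node stays cordoned, so it can be inspected before being put back into service.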

Confirm the upgraded version

[root@node-1 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

node-1 Ready master 27h v1.15.3

node-2 Ready <none> 27h v1.15.3

node-3 Ready <none> 27h v1.15.1

4. Status after all nodes have been upgraded

Status of all nodes

[root@node-1 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

node-1 Ready master 27h v1.15.3

node-2 Ready <none> 27h v1.15.3

node-3 Ready <none> 27h v1.15.3
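On a larger cluster, checking the VERSION column by eye gets tedious. The following is a minimal sketch of an automated check; the function name nodes_not_at_version is hypothetical, and it assumes the default kubectl get nodes layout shown above, where VERSION is the last column (this does not hold for -o wide output).

```shell
# nodes_not_at_version VERSION
# Reads `kubectl get nodes` output on stdin and prints the name of every
# node whose VERSION column differs from VERSION.
# Exit status is 0 when all nodes match and 1 otherwise.
nodes_not_at_version() {
  awk -v want="$1" '
    NR == 1 { next }                    # skip the header row
    NF == 0 { next }                    # skip blank lines
    $NF != want { print $1; bad = 1 }   # VERSION is the last column
    END { exit bad }
  '
}
```

It would be used as kubectl get nodes | nodes_not_at_version v1.15.3, which prints nothing and succeeds once every node reports the target version.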

Check component status

[root@node-1 ~]# kubectl get componentstatuses

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health":"true"}

Check application status

[root@node-1 ~]# kubectl get deployments --all-namespaces

NAMESPACE NAME READY UP-TO-DATE AVAILABLE AGE

default demo 3/3 3 3 160m

kube-system coredns 2/2 2 2 27h

Check DaemonSet status

[root@node-1 ~]# kubectl get daemonsets --all-namespaces

NAMESPACE NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

kube-system kube-flannel-ds-amd64 3 3 3 3 3 beta.kubernetes.io/arch=amd64 27h

kube-system kube-proxy 3 3 3 3 3 beta.kubernetes.io/os=linux 27h