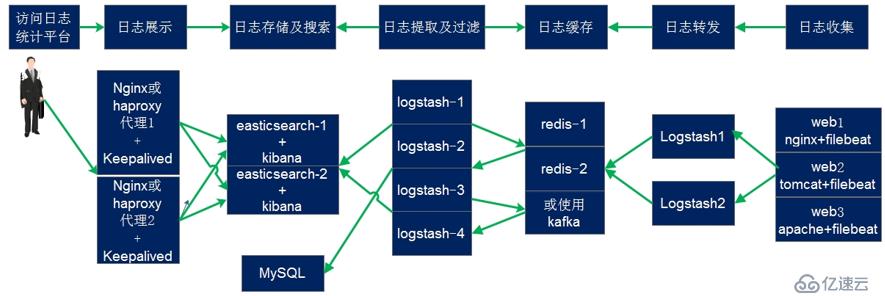

System: CentOS Linux release 7.5.1804

ELK versions: filebeat-6.8.5-x86_64.rpm, logstash-6.8.5.rpm, elasticsearch-6.8.5.rpm, kibana-6.8.5-x86_64.rpm, kafka_2.11-2.0.0, zookeeper-3.4.12

| Address | Hostname | Role (left to right in the diagram) |
|---|---|---|
| 192.168.9.133 | test1.xiong.com | nginx + virtual host + filebeat |
| 192.168.9.134 | test2.xiong.com | nginx + virtual host + filebeat |
| 192.168.9.135 | test3.xiong.com | elasticsearch + kibana + logstash |
| 192.168.9.136 | test4.xiong.com | elasticsearch + kibana + logstash |
| 192.168.9.137 | test5.xiong.com | redis + logstash (kafka is used here) |
| 192.168.9.138 | test6.xiong.com | redis + logstash (kafka is used here) |

In practice you do not need this many machines; four are enough.

~]# cat /etc/hosts

192.168.9.133 test1.xiong.com

192.168.9.134 test2.xiong.com

192.168.9.135 test3.xiong.com

192.168.9.136 test4.xiong.com

192.168.9.137 test5.xiong.com

192.168.9.138 test6.xiong.com

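# Disable the firewall and SELinux

systemctl stop firewalld

systemctl disable firewalld # keep it off after a reboot

sed -i '/SELINUX/s/enforcing/disabled/' /etc/selinux/config

setenforce 0 # the config file change only applies after a reboot; this switches to permissive mode now

~]# crontab -l # time synchronization

*/1 * * * * /usr/sbin/ntpdate pool.ntp.org &>/dev/null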

# Install the JDK; required on 135, 136, 137, and 138

~]# mkdir -p /usr/java # tar -C needs the target directory to exist

~]# tar xf jdk-8u181-linux-x64.tar.gz -C /usr/java/

cd /usr/java/

ln -sv jdk1.8.0_181/ default

ln -sv default/ jdk

# Raise the limit on open files

echo "* hard nofile 65536" >> /etc/security/limits.conf

echo "* soft nofile 65536" >> /etc/security/limits.conf

java]# cat /etc/profile.d/java.sh

export JAVA_HOME=/usr/java/jdk

export PATH=$JAVA_HOME/bin:$PATH

java]# source /etc/profile.d/java.sh

java]# java -version

java version "1.8.0_181"

Java(TM) SE Runtime Environment (build 1.8.0_181-b13)

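Java HotSpot(TM) 64-Bit Server VM (build 25.181-b13, mixed mode)

Configure hosts 9.135 and 9.136 here.

# Install the server-side ELK packages

~]# rpm -vih elasticsearch-6.8.5.rpm kibana-6.8.5-x86_64.rpm logstash-6.8.5.rpm

# Edit the configuration

~]# cd /etc/elasticsearch

# After editing, sync the file to the other node; only the network address and node.name need to change per host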

elasticsearch]# grep -v "^#" elasticsearch.yml

cluster.name: myElks # cluster name

node.name: test3.xiong.com # set to each host's own hostname

path.data: /opt/elasticsearch/data # data directory

path.logs: /opt/elasticsearch/logs # log directory

network.host: 0.0.0.0

network.publish_host: 192.168.9.136 # address advertised to other nodes; use each host's own IP (192.168.9.135 on test3)

# Unicast discovery hosts

discovery.zen.ping.unicast.hosts: ["192.168.9.135", "192.168.9.136"]

# Minimum number of master-eligible nodes, computed as (N/2)+1

discovery.zen.minimum_master_nodes: 2

# Enable cross-origin (CORS) access

http.cors.enabled: true

http.cors.allow-origin: "*"
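The `(N/2)+1` rule in the config comment can be checked with a short calculation. This is a minimal sketch, assuming integer division and that N counts master-eligible nodes; the function name `minimum_master_nodes` is illustrative and is not an Elasticsearch API.

```python
def minimum_master_nodes(master_eligible: int) -> int:
    """Return the quorum size (N // 2) + 1 for N master-eligible nodes."""
    if master_eligible < 1:
        raise ValueError("need at least one master-eligible node")
    return master_eligible // 2 + 1


# The two-node cluster in this article (test3 and test4).
print(minimum_master_nodes(2))  # 2
# A three-node cluster keeps a quorum when one node is lost.
print(minimum_master_nodes(3))  # 2
```

With only two nodes the quorum is 2, so the cluster cannot elect a master while either node is down; surviving a single failure would take a third master-eligible node.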

# Create the data and log directories; mind the ownership

elasticsearch]# mkdir /opt/elasticsearch/{data,logs} -pv

elasticsearch]# chown elasticsearch:elasticsearch /opt/elasticsearch/ -R

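# Edit the unit file

elasticsearch]# vim /usr/lib/systemd/system/elasticsearch.service

# Add the following under [Service]; systemd does not accept trailing comments, so each comment sits on its own line

# Java home directory

Environment=JAVA_HOME=/usr/java/jdk

# Allow unlimited memory locking

LimitMEMLOCK=infinity

elasticsearch]# vim jvm.options # set the JVM heap; it should be about half of physical memory and no more than 30 GB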

-Xms2g

-Xmx2g
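The sizing rule in the comment (about half of memory, capped at 30 GB) can be sketched as follows. The helper names `heap_size_gb` and `jvm_options` are hypothetical, and treating 30 GB as a hard cap is an assumption for illustration; the article itself sets 2g by hand.

```python
def heap_size_gb(total_ram_gb: float, cap_gb: float = 30.0) -> int:
    """Suggest a heap size in whole GB: half of RAM, never above cap_gb."""
    if total_ram_gb <= 0:
        raise ValueError("total_ram_gb must be positive")
    half = total_ram_gb / 2
    # Round down to whole gigabytes, but keep at least 1 GB.
    return max(1, int(min(half, cap_gb)))


def jvm_options(total_ram_gb: float) -> list:
    """Render matching -Xms/-Xmx lines so the minimum and maximum heap are equal."""
    size = heap_size_gb(total_ram_gb)
    return [f"-Xms{size}g", f"-Xmx{size}g"]


print(jvm_options(4))    # ['-Xms2g', '-Xmx2g']
print(jvm_options(128))  # ['-Xms30g', '-Xmx30g']
```

Under this rule a host with 4 GB of memory yields the 2g values used above.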

# Start the service. Both hosts need the same configuration; a tool such as ansible can help

systemctl daemon-reload

systemctl enable elasticsearch.service

systemctl restart elasticsearch

# Check that the service ports are listening, or run systemctl status elasticsearch

elasticsearch]# ss -tnl | grep 92

LISTEN 0 128 ::ffff:192.168.9.136:9200 :::*

LISTEN 0 128 ::ffff:192.168.9.136:9300 :::*

# Check that the hosts have joined the cluster

elasticsearch]# curl 192.168.9.135:9200/_cat/nodes

192.168.9.136 7 95 1 0.00 0.06 0.11 mdi * test4.xiong.com

192.168.9.135 7 97 20 0.45 0.14 0.09 mdi - test3.xiong.com
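The `_cat/nodes` output above marks the elected master with `*` in the second-to-last column. As a rough sketch, the same check can be scripted; the function names are hypothetical, and the column list assumes the default `_cat/nodes` layout without a header row, as in the output shown.

```python
def parse_cat_nodes(text: str) -> list:
    """Parse default `_cat/nodes` output (no header row) into dicts."""
    columns = ["ip", "heap.percent", "ram.percent", "cpu",
               "load_1m", "load_5m", "load_15m", "node.role", "master", "name"]
    nodes = []
    for line in text.strip().splitlines():
        fields = line.split()
        if len(fields) != len(columns):
            continue  # skip lines that do not match the expected layout
        nodes.append(dict(zip(columns, fields)))
    return nodes


def elected_master(nodes: list) -> str:
    """Return the name of the node flagged with '*' in the master column."""
    for node in nodes:
        if node["master"] == "*":
            return node["name"]
    raise LookupError("no elected master in output")


# The two lines printed by curl in this article.
sample = """\
192.168.9.136 7 95 1 0.00 0.06 0.11 mdi * test4.xiong.com
192.168.9.135 7 97 20 0.45 0.14 0.09 mdi - test3.xiong.com
"""
nodes = parse_cat_nodes(sample)
print(len(nodes), elected_master(nodes))  # 2 test4.xiong.com
```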

# Show the master

elasticsearch]# curl 192.168.9.135:9200/_cat/master

fVkp7Ld3RDGmWlGpm6t7kg 192.168.9.136 192.168.9.136 test4.xiong.com

# Install on both hosts, 9.135 and 9.136

1. Install npm

]# yum -y install epel-release # the EPEL repository must be installed first

]# yum -y install npm

2. Install the elasticsearch-head plugin

]# cd /usr/local/src/

]# git clone https://github.com/mobz/elasticsearch-head.git

]# cd /usr/local/src/elasticsearch-head/

elasticsearch-head ]# npm install grunt --save # installs the grunt executable

elasticsearch-head]# ll node_modules/grunt # confirm the files were created

elasticsearch-head ]# npm install

3. Start head

node_modules]# nohup npm run start &

ss -tnl | grep 9100 # once the port is listening, open the web UI directly

Either 9.135:9100 or 9.136:9100 works; configuring a single host is enough.

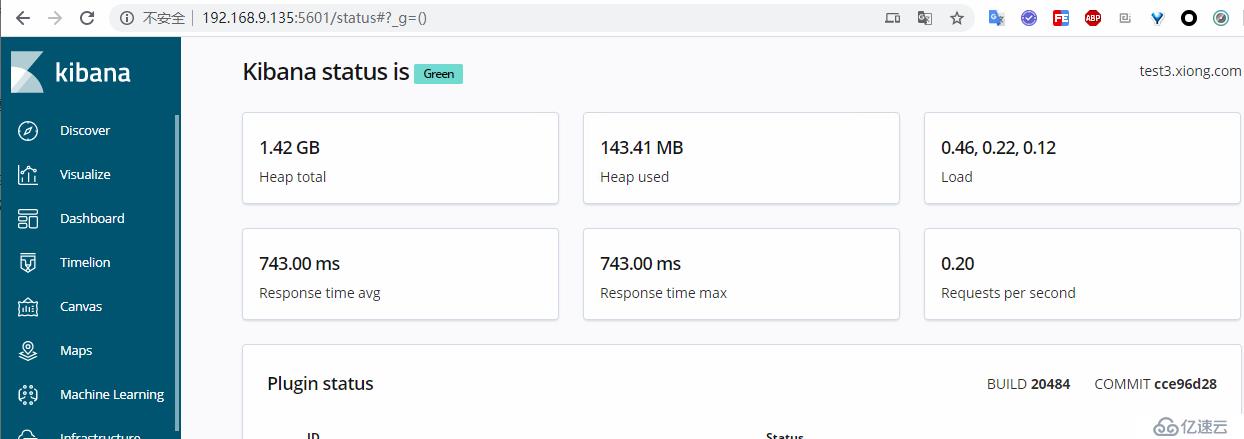

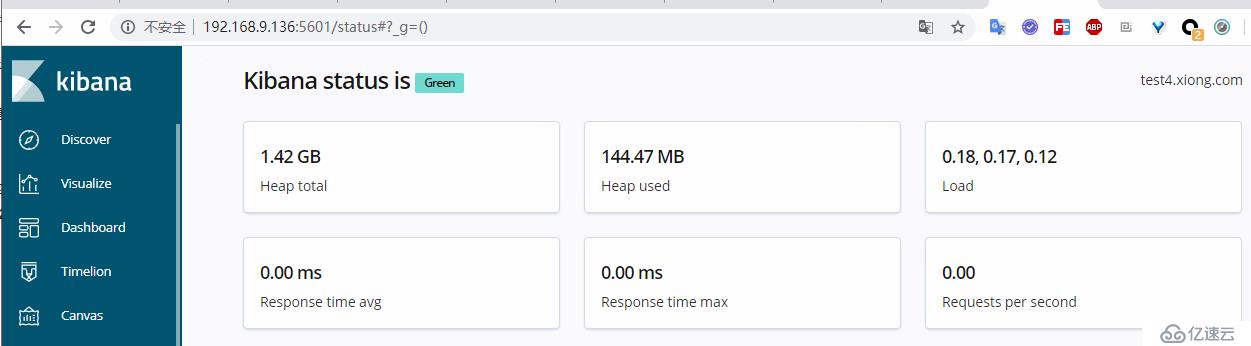

kibana]# grep -v "^#" kibana.yml | grep -v "^$"

server.port: 5601

server.host: "0.0.0.0"

server.name: "test3.xiong.com" # on the other host, only the hostname needs to change

elasticsearch.hosts: ["http://192.168.9.135:9200", "http://192.168.9.136:9200"]

kibana]# systemctl restart kibana

kibana]# ss -tnl | grep 5601 # check that the port is listening

LISTEN 0 128 *:5601 *:*

logstash]# vim /etc/default/logstash

JAVA_HOME="/usr/java/jdk" # add the Java environment variable

Hosts: 192.168.9.133, 9.134

~]# cat /etc/yum.repos.d/nginx.repo # configure the nginx yum repository

[nginx-stable]

name=nginx stable repo

baseurl=http://nginx.org/packages/centos/$releasever/$basearch/

gpgcheck=1

enabled=1

gpgkey=https://nginx.org/keys/nginx_signing.key

module_hotfixes=true

[nginx-mainline]

name=nginx mainline repo

baseurl=http://nginx.org/packages/mainline/centos/$releasever/$basearch/

gpgcheck=1

enabled=0

gpgkey=https://nginx.org/keys/nginx_signing.key

module_hotfixes=true

~]# yum -y install nginx

~]# rpm -vih filebeat-6.8.5-x86_64.rpm

]# vim /etc/nginx/nginx.conf

http {

# Add the log format with log_format

log_format access_json '{"@timestamp":"$time_iso8601",'

'"host":"$server_addr",'

'"clientip":"$remote_addr",'

'"size":$body_bytes_sent,'

'"responsetime":$request_time,'

'"upstreamtime":"$upstream_response_time",'

'"upstreamhost":"$upstream_addr",'

'"http_host":"$host",'

'"url":"$uri",'

'"domain":"$host",'

'"xff":"$http_x_forwarded_for",'

'"referer":"$http_referer",'

'"status":"$status"}';

}

server { # use the format inside a server block

access_log /var/log/nginx/default_access.log access_json;

}
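Because `log_format access_json` writes one JSON object per line, each log entry can be read back with a plain JSON parser. The sketch below uses a hypothetical log line with made-up values, and `parse_access_line` is an illustrative helper, not part of nginx or filebeat.

```python
import json

# Hypothetical log line in the access_json format defined above (values are made up).
line = ('{"@timestamp":"2019-12-27T10:06:17+08:00","host":"192.168.9.133",'
        '"clientip":"192.168.9.1","size":612,"responsetime":0.005,'
        '"upstreamtime":"0.004","upstreamhost":"192.168.9.135:5601",'
        '"http_host":"192.168.9.133","url":"/app/kibana",'
        '"domain":"192.168.9.133","xff":"-","referer":"-","status":"200"}')


def parse_access_line(raw: str) -> dict:
    """Decode one access_json line and normalize the status code."""
    event = json.loads(raw)
    # The format quotes $status, so it arrives as a string; convert it to an int.
    event["status"] = int(event["status"])
    return event


event = parse_access_line(line)
# size and responsetime are unquoted in the format, so they decode as numbers.
print(event["status"], event["size"], event["responsetime"])  # 200 612 0.005
```

The format quotes `$upstream_response_time` because nginx writes `-` for requests that never reach an upstream, which would not be valid JSON as a bare value.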

~]# vim /etc/nginx/nginx.conf # add on both nginx hosts

# Add inside the http block; the second server acts as a backup

upstream kibana {

server 192.168.9.135:5601 max_fails=3 fail_timeout=30s;

server 192.168.9.136:5601 backup;

}

~]# vim /etc/nginx/conf.d/two.conf

server {

listen 5601;

server_name 192.168.9.133; # change to this host's own address

access_log /var/log/nginx/kinaba_access.log access_json;

location / {

proxy_pass http://kibana;

}

}

Hosts: 192.168.9.137, 9.138

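1. Install JDK 1.8

2. Install kafka and zookeeper. Note: both machines use the same configuration except for the listen address

mkdir -p /opt/hadoop # create the target directory if it does not exist yet

mv kafka_2.11-2.0.0/ zookeeper-3.4.12/ /opt/hadoop/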

cd /opt/hadoop/

ln -sv kafka_2.11-2.0.0/ kafka

ln -sv zookeeper-3.4.12/ zookeeper

cd /opt/hadoop/kafka/config

vim server.properties # change the listen address

listeners=PLAINTEXT://192.168.9.138:9092

log.dirs=/opt/logs/kafka_logs

vim zookeeper.properties

dataDir=/opt/logs/zookeeper

Copy /opt/hadoop/zookeeper/conf/zoo_sample.cfg to zoo.cfg

vim /opt/hadoop/zookeeper/conf/zoo.cfg

dataDir=/opt/logs/zookeeperDataDir

mkdir /opt/logs/{zookeeper,kafka_logs,zookeeperDataDir} -pv

chmod +x /opt/hadoop/zookeeper/bin/*.sh

chmod +x /opt/hadoop/kafka/bin/*.sh

3. Start on boot

cat kafka.service

[Unit]

Description=kafka 9092

# kafka.service should start after zookeeper

After=zookeeper.service

# Hard dependency: zookeeper must be started first

Requires=zookeeper.service

Requires=zookeeper.service

[Service]

Type=simple

Environment=JAVA_HOME=/usr/java/default

Environment=KAFKA_PATH=/opt/hadoop/kafka:/opt/hadoop/kafka/bin

ExecStart=/opt/hadoop/kafka/bin/kafka-server-start.sh /opt/hadoop/kafka/config/server.properties

ExecStop=/opt/hadoop/kafka/bin/kafka-server-stop.sh

Restart=always

[Install]

WantedBy=multi-user.target

cat zookeeper.service

[Unit]

Description=Zookeeper Service

After=network.target

ConditionPathExists=/opt/hadoop/zookeeper/conf/zoo.cfg

[Service]

Type=forking

Environment=JAVA_HOME=/usr/java/default

ExecStart=/opt/hadoop/zookeeper/bin/zkServer.sh start

ExecStop=/opt/hadoop/zookeeper/bin/zkServer.sh stop

Restart=always

[Install]

WantedBy=multi-user.target

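4. Start the services

mv kafka.service zookeeper.service /usr/lib/systemd/system

systemctl daemon-reload # make systemd pick up the new unit files

systemctl enable zookeeper kafka # start both on boot

systemctl restart zookeeper kafka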

systemctl status zookeeper

systemctl status kafka

ss -tnl

LISTEN 0 50 ::ffff:192.168.9.138:9092 :::*

LISTEN 0 50 :::2181 :::*

LISTEN 0 50 ::ffff:192.168.9.137:9092 :::*

1. Install logstash

rpm -ivh logstash-6.8.5.rpm

# Or install with yum after creating a repo file

]# vim /etc/yum.repos.d/logstash.repo

[logstash-6.x]

name=Elastic repository for 6.x packages

baseurl=https://artifacts.elastic.co/packages/6.x/yum

gpgcheck=1

gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch

enabled=1

autorefresh=1

type=rpm-md

2. Configure the logstash startup file

sed -i '1a\JAVA_HOME="/usr/java/jdk"' /etc/default/logstash

# View the filebeat configuration on the nginx host; address: 192.168.9.133

~]# grep -v "#" /etc/filebeat/filebeat.yml | grep -v "^$"

filebeat.inputs:

- type: log

  enabled: true

  paths:

    - /var/log/nginx/kinaba_access.log # note: this file needs 755 permissions

  exclude_lines: ['^DBG']

  exclude_files: ['.gz$']

  fields:

    type: kinaba-access-9133

    ip: 192.168.9.133

filebeat.config.modules:

  path: ${path.config}/modules.d/*.yml

  reload.enabled: false

setup.template.settings:

  index.number_of_shards: 3

output.logstash:

  hosts: ["192.168.9.137:5044"]

  worker: 2 # run two worker threads

~]# cat /etc/logstash/conf.d/nginx-filebeats.conf

input {

  beats {

    port => 5044

    codec => "json"

  }

}

output {

  # stdout { # good habit: print rubydebug output to the screen first, then add kafka

  #   codec => "rubydebug"

  # }

  kafka {

    bootstrap_servers => "192.168.9.137:9092"

    codec => "json"

    topic_id => "logstash-kinaba-nginx-access"

  }

}

# Print to the screen: /usr/share/logstash/bin/logstash -f nginx-filebeats.conf

# Check the config: /usr/share/logstash/bin/logstash -f nginx-filebeats.conf -t

# Restart logstash

# View the log: tail -f /var/log/logstash/logstash-plain.log

# List the topics

~]# /opt/hadoop/kafka/bin/kafka-topics.sh --list --zookeeper 192.168.9.137:2181

logstash-kinaba-nginx-access

# View the topic contents

~]# /opt/hadoop/kafka/bin/kafka-console-consumer.sh --bootstrap-server 192.168.9.137:9092 --topic logstash-kinaba-nginx-access --from-beginning

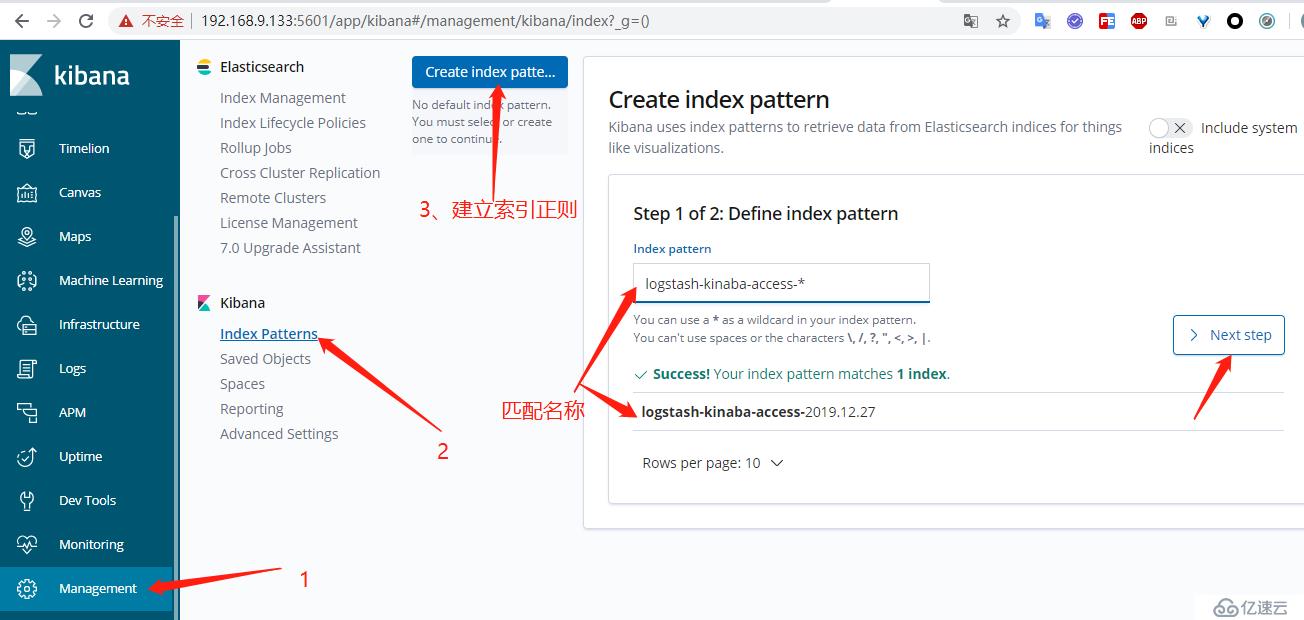
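Each record in the topic is a JSON envelope written by filebeat and logstash, and its `message` field holds the original nginx log line as a JSON string, so reading the nginx fields takes two decoding steps. A minimal sketch, assuming a shortened, hypothetical record; `decode_event` and the `nginx` key it adds are illustrative names, not part of any of these tools.

```python
import json


def decode_event(record: str) -> dict:
    """Decode the outer envelope, then the nginx JSON inside `message`."""
    event = json.loads(record)
    try:
        event["nginx"] = json.loads(event["message"])
    except (KeyError, TypeError, ValueError):
        event["nginx"] = None  # message missing or not valid JSON
    return event


# Hypothetical, shortened record with the same nesting as the topic contents.
inner = json.dumps({"clientip": "192.168.9.1", "status": "304"})
record = json.dumps({
    "fields": {"type": "kinaba-access-9133", "ip": "192.168.9.133"},
    "message": inner,
})

event = decode_event(record)
print(event["fields"]["type"], event["nginx"]["status"])  # kinaba-access-9133 304
```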

{"host":{"architecture":"x86_64","containerized":false,"os":{"version":"7 (Core)","codename":"Core","platform":"centos","family":"redhat","name":"CentOS Linux"},"name":"test1.xiong.com","id":"e70c4e18a6f243c69211533f14283599"},"@timestamp":"2019-12-27T02:06:17.326Z","log":{"file":{"path":"/var/log/nginx/kinaba_access.log"}},"fields":{"type":"kinaba-access-9133","ip":"192.168.9.133"},"message":"{\"@timestamp\":\:\"-\",\"referer\":\"http://192.168.9.133:5601/app/timelion\",\"status\":\"304\"}","source":"/var/log/nginx/kinaba_access.log","@version":"1","offset":83382,"beat":{"version":"6.8.5","hostname":"test1.xiong.com","name":"test1.xiong.com"},"prospector":{"type":"log"},"input":{"type":"log"},"tags":["beats_input_codec_plain_applied"]}

# Host: 192.168.9.135

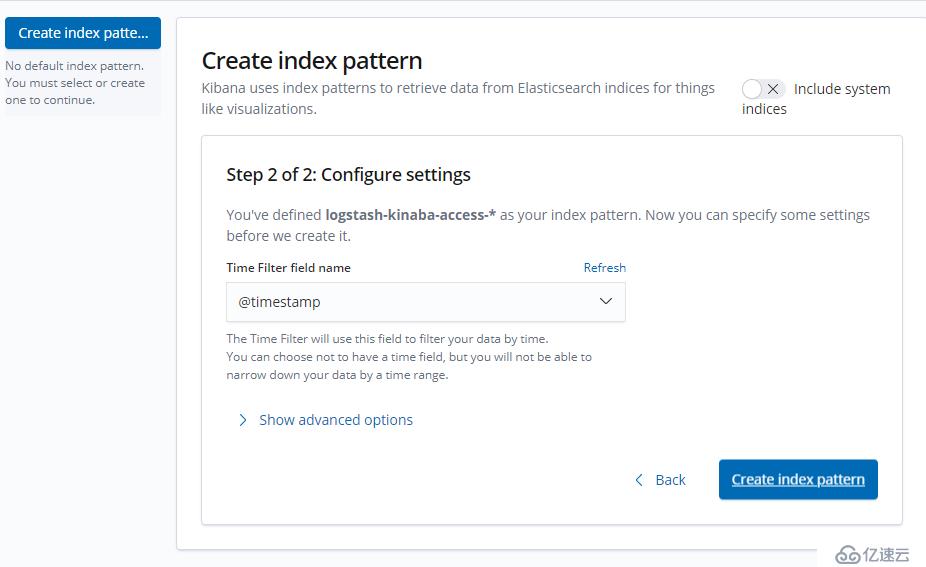

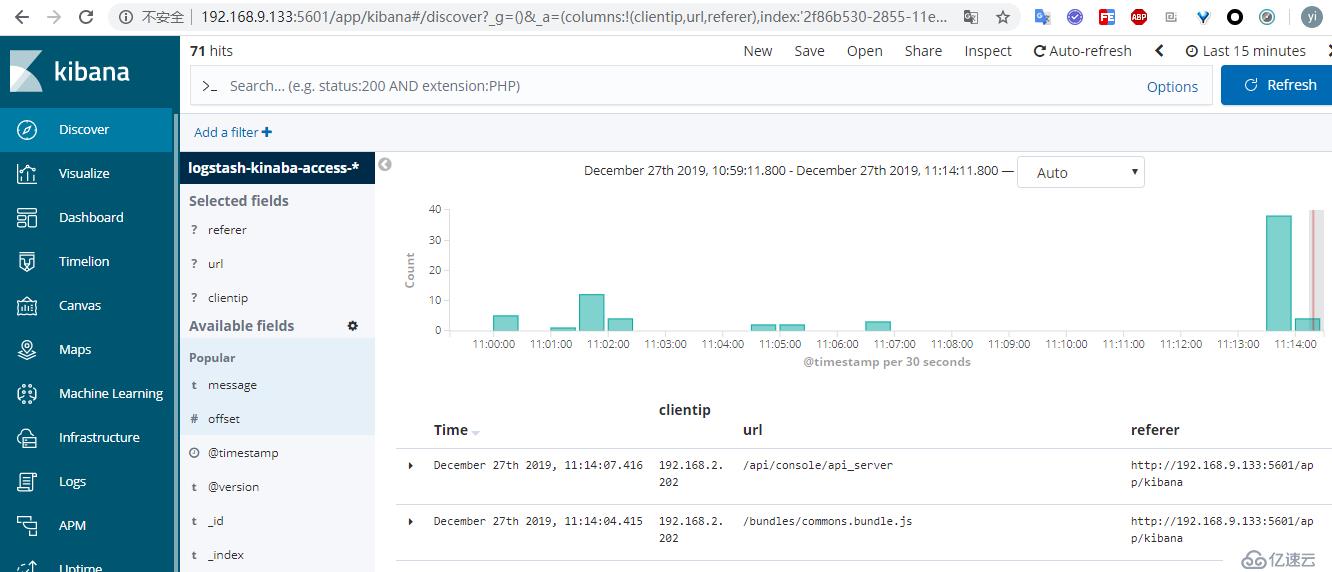

]# cat /etc/logstash/conf.d/logstash-kinaba-nginx.conf

input {

  kafka {

    bootstrap_servers => "192.168.9.137:9092"

    decorate_events => true

    consumer_threads => 2

    topics => ["logstash-kinaba-nginx-access"]

    auto_offset_reset => "latest"

    # decode the JSON payload so that [fields][type] can be tested below

    codec => "json"

  }

}

output {

  # stdout { # good habit: always print to the screen first

  #   codec => "rubydebug"

  # }

  if [fields][type] == "kinaba-access-9133" {

    elasticsearch {

      hosts => ["192.168.9.135:9200"]

      codec => "json"

      index => "logstash-kinaba-access-%{+YYYY.MM.dd}"

    }

  }

}
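The output section routes on `[fields][type]` and builds a daily index name from the event's `@timestamp`. The sketch below mimics that logic outside Logstash to show which index a given event would land in; `target_index` is a hypothetical helper, and it assumes `@timestamp` is a UTC string in the form seen in the topic sample.

```python
from datetime import datetime, timezone
from typing import Optional


def target_index(event: dict) -> Optional[str]:
    """Mimic the `if [fields][type] == ...` branch and the %{+YYYY.MM.dd} suffix."""
    if event.get("fields", {}).get("type") != "kinaba-access-9133":
        return None  # no output block matches, so nothing is indexed
    # Parse an @timestamp such as "2019-12-27T02:06:17.326Z" as UTC.
    ts = datetime.strptime(event["@timestamp"], "%Y-%m-%dT%H:%M:%S.%fZ")
    ts = ts.replace(tzinfo=timezone.utc)
    return "logstash-kinaba-access-" + ts.strftime("%Y.%m.%d")


event = {
    "@timestamp": "2019-12-27T02:06:17.326Z",
    "fields": {"type": "kinaba-access-9133", "ip": "192.168.9.133"},
}
print(target_index(event))  # logstash-kinaba-access-2019.12.27
print(target_index({"fields": {"type": "other"}}))  # None
```

Events from a second nginx host would need their own `fields.type` value in filebeat and a matching branch here, otherwise they are dropped by this output.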

# Print to the screen: /usr/share/logstash/bin/logstash -f logstash-kinaba-nginx.conf

# Check the config: /usr/share/logstash/bin/logstash -f logstash-kinaba-nginx.conf -t

# View the log: tail -f /var/log/logstash/logstash-plain.log

# Restart logstash

# Wait a moment, hit the web page a few times, then list the indices

~]# curl http://192.168.9.135:9200/_cat/indices

green open logstash-kinaba-access-2019.12.27 AcCjLtCPTryt6DZkl5KbPw 5 1 100 0 327.7kb 131.8kb
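The final check can also be scripted: read the `_cat/indices` line and confirm that the expected index exists, is green, and holds documents. This is a sketch under the assumption that the output uses the default column order shown above; `index_is_ready` is a hypothetical helper name.

```python
COLUMNS = ["health", "status", "index", "uuid", "pri", "rep",
           "docs.count", "docs.deleted", "store.size", "pri.store.size"]


def index_is_ready(cat_output: str, prefix: str) -> bool:
    """True if an index starting with prefix is green and has at least one document."""
    for line in cat_output.strip().splitlines():
        fields = line.split()
        if len(fields) != len(COLUMNS):
            continue  # ignore lines that do not match the default layout
        row = dict(zip(COLUMNS, fields))
        if (row["index"].startswith(prefix)
                and row["health"] == "green"
                and int(row["docs.count"]) > 0):
            return True
    return False


# The line printed by curl in this article.
sample = "green open logstash-kinaba-access-2019.12.27 AcCjLtCPTryt6DZkl5KbPw 5 1 100 0 327.7kb 131.8kb"
print(index_is_ready(sample, "logstash-kinaba-access-"))  # True
print(index_is_ready(sample, "logstash-other-"))          # False
```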
