This article explains how a memory pool works in C++ and walks through a simple implementation.

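The default memory management facilities in C++ (new, delete, malloc, free) allocate and release memory on the heap. When this happens frequently it costs performance, produces a large number of memory fragments, and lowers memory utilization. Because the default allocator is designed to be general-purpose, it cannot be optimal for every workload.

For this reason it is often worth designing a dedicated memory manager, tailored to the business requirements, for a specific data structure or usage pattern. A memory pool is one example.

The idea of a memory pool is to request a certain number of memory blocks of a preset size in advance, before the memory is actually needed, and keep them in reserve. When new memory is needed, a block is handed out from the pool; if the pool runs out of blocks, more memory is requested. When memory is released, it returns to the pool for later reuse. This improves memory efficiency and generally avoids uncontrolled fragmentation.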
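To make the idea concrete, here is a minimal sketch of a pool that reserves a fixed number of equally sized slots up front and recycles them. The names `SlotPool`, `acquire`, `release`, and `slot_is_reused` are hypothetical and chosen only for this illustration. Growth when the pool is exhausted and alignment handling are deliberately left out.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical fixed-capacity pool: all storage is reserved in the constructor.
class SlotPool
{
public:
    SlotPool(std::size_t slot_size, std::size_t slot_count)
        : storage_(slot_size * slot_count)
    {
        // Every slot starts out free.
        for (std::size_t i = 0; i < slot_count; ++i)
            free_slots_.push_back(&storage_[i * slot_size]);
    }

    // Hand out a free slot, or nullptr when the pool is exhausted.
    void* acquire()
    {
        if (free_slots_.empty())
            return nullptr;
        void* p = free_slots_.back();
        free_slots_.pop_back();
        return p;
    }

    // Return a slot so that a later acquire() can reuse it.
    void release(void* p)
    {
        free_slots_.push_back(static_cast<unsigned char*>(p));
    }

private:
    std::vector<unsigned char> storage_;      // reserved once, never resized
    std::vector<unsigned char*> free_slots_;  // stack of currently free slots
};

// Demonstrates that a released slot is handed out again.
inline bool slot_is_reused()
{
    SlotPool pool(16, 2);
    void* a = pool.acquire();
    void* b = pool.acquire();
    assert(a != nullptr && b != nullptr && a != b);
    assert(pool.acquire() == nullptr); // both slots are in use
    pool.release(a);
    return pool.acquire() == a;        // the freed slot comes back
}
```

Calling `slot_is_reused()` from a test or from `main` returns true: the slot released last is the next one handed out, and no new heap allocation is needed for it.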

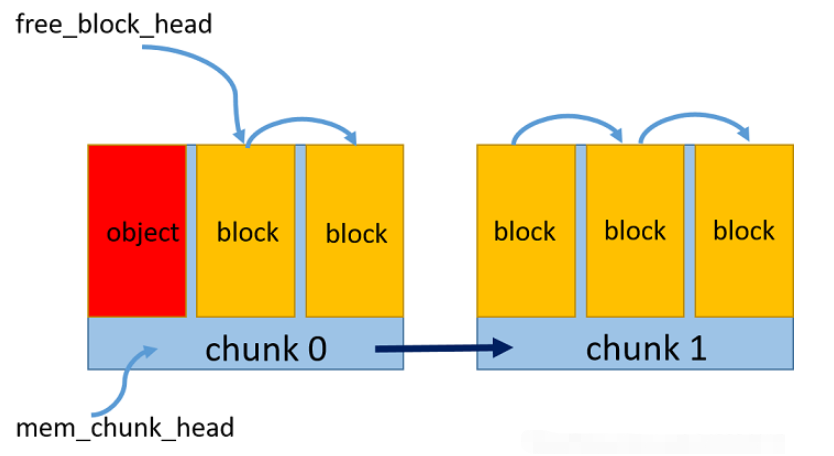

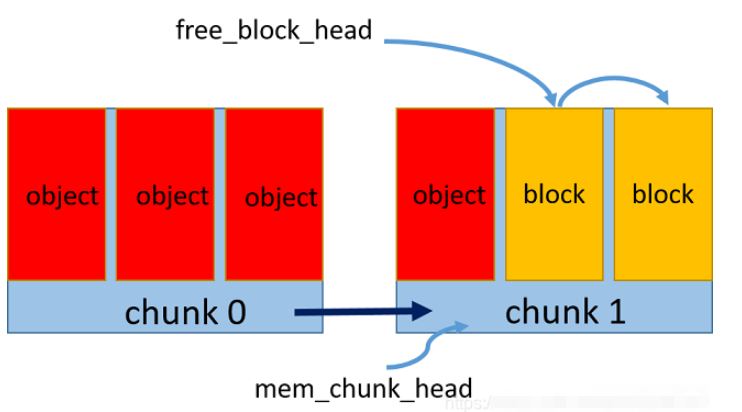

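How the algorithm works:

1. Pre-allocate a memory region (chunk) and divide it into multiple memory blocks (blocks), each the size of one object.

2. Maintain a linked list of free blocks connected by pointers; the head pointer marks the first free block.

3. Each time space for a new object is requested, remove that block from the free list and update the free-list head pointer.

4. Each time an object's space is released, put the block back at the head of the free list.

5. When a chunk is full, allocate a new chunk. The chunks are also kept in a linked list connected by pointers, with the head pointer pointing to the newest chunk; new blocks are carved out of and allocated from that chunk.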
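The five steps can be sketched compactly as follows. This is an illustrative variant, not the article's implementation: `FreeListPool`, `add_chunk`, `chunk_count`, and `free_list_demo` are hypothetical names, the sketch is not thread-safe, and it stores the free-list link inside the unused block itself (a union), which assumes the stored objects need no stricter alignment than a pointer.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical pool following the five steps; names are illustrative only.
template <std::size_t BlockSize, std::size_t BlocksPerChunk>
class FreeListPool
{
    // A free block keeps its link inside its own, otherwise unused, storage.
    union Block
    {
        Block* next;
        unsigned char data[BlockSize];
    };
    struct Chunk
    {
        Block blocks[BlocksPerChunk];
        Chunk* next;
    };

    Block* free_head_ = nullptr;
    Chunk* chunk_head_ = nullptr;
    std::size_t chunk_count_ = 0;

    // Steps 1, 2 and 5: reserve a chunk, thread its blocks, link the chunk.
    void add_chunk()
    {
        Chunk* c = new Chunk;
        for (std::size_t i = 0; i + 1 < BlocksPerChunk; ++i)
            c->blocks[i].next = &c->blocks[i + 1];
        c->blocks[BlocksPerChunk - 1].next = free_head_;
        free_head_ = &c->blocks[0];
        c->next = chunk_head_;   // newest chunk becomes the head of the chunk list
        chunk_head_ = c;
        ++chunk_count_;
    }

public:
    FreeListPool() = default;
    FreeListPool(const FreeListPool&) = delete;
    FreeListPool& operator=(const FreeListPool&) = delete;

    ~FreeListPool()
    {
        while (chunk_head_)
        {
            Chunk* next = chunk_head_->next;
            delete chunk_head_;
            chunk_head_ = next;
        }
    }

    // Step 3: pop the head of the free list, adding a chunk if it is empty.
    void* allocate()
    {
        if (!free_head_)
            add_chunk();
        Block* b = free_head_;
        free_head_ = b->next;
        return b;
    }

    // Step 4: push the block back onto the head of the free list.
    void deallocate(void* p)
    {
        Block* b = static_cast<Block*>(p);
        b->next = free_head_;
        free_head_ = b;
    }

    std::size_t chunk_count() const { return chunk_count_; }
};

inline bool free_list_demo()
{
    FreeListPool<sizeof(int), 2> pool;
    void* a = pool.allocate();
    void* b = pool.allocate();
    void* c = pool.allocate();   // first chunk is full, so a second one is created
    assert(pool.chunk_count() == 2);
    pool.deallocate(b);
    void* d = pool.allocate();   // the most recently freed block is reused first
    (void)a; (void)c;
    return d == b;
}
```

Because freed blocks are pushed onto the head of the list, allocation and deallocation are both constant-time pointer updates.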

The figures above illustrate this structure.

memory_pool.hpp

#ifndef _MEMORY_POOL_H_
#define _MEMORY_POOL_H_

#include <stddef.h> // size_t, NULL
#include <mutex>

template<size_t BlockSize, size_t BlockNum = 10>
class MemoryPool
{
public:
    MemoryPool()
    {
        std::lock_guard<std::mutex> lk(mtx); // avoid race condition
        // start with no free blocks and no chunks
        free_block_head = NULL;
        mem_chunk_head = NULL;
    }

    ~MemoryPool()
    {
        std::lock_guard<std::mutex> lk(mtx); // avoid race condition
        // free every chunk in the chunk list
        MemChunk* p;
        while (mem_chunk_head)
        {
            p = mem_chunk_head->next;
            delete mem_chunk_head;
            mem_chunk_head = p;
        }
    }

    void* allocate()
    {
        std::lock_guard<std::mutex> lk(mtx); // avoid race condition

        // allocate memory for one object;
        // if no free block is left, create a new chunk first
        if (!free_block_head)
        {
            MemChunk* new_chunk = new MemChunk;

            // link all blocks of the new chunk into a free list
            for (size_t i = 1; i < BlockNum; i++)
                new_chunk->blocks[i - 1].next = &(new_chunk->blocks[i]);
            new_chunk->blocks[BlockNum - 1].next = NULL; // final block's next is NULL

            // the chunk's first block becomes the free block head
            free_block_head = &(new_chunk->blocks[0]);

            // push the new chunk onto the front of the chunk list so that
            // older chunks stay reachable and the destructor can free them
            new_chunk->next = mem_chunk_head;
            mem_chunk_head = new_chunk;
        }

        // hand the current free block to the object
        void* object_block = free_block_head;
        free_block_head = free_block_head->next;
        return object_block;
    }

    void* allocate(size_t size)
    {
        std::lock_guard<std::mutex> lk(array_mtx); // avoid race condition for array requests

        // number of blocks requested (rounded down)
        size_t n = size / BlockSize;

        // FIXME: make sure n > 0
        // FIXME: the n blocks are taken from the free list one by one, so they
        // are not guaranteed to be contiguous in memory
        void* p = allocate();
        for (size_t i = 1; i < n; i++)
            allocate();
        return p;
    }

    void deallocate(void* p)
    {
        if (!p)
            return; // nothing to release

        std::lock_guard<std::mutex> lk(mtx); // avoid race condition

        // return the object's block to the head of the free list
        FreeBlock* block = static_cast<FreeBlock*>(p);
        block->next = free_block_head;
        free_block_head = block;
    }

private:
    // free-list node; each block holds exactly one object
    struct FreeBlock
    {
        unsigned char data[BlockSize];
        FreeBlock* next;
    };

    FreeBlock* free_block_head;

    // memory chunk; every chunk contains a fixed number (BlockNum) of blocks
    struct MemChunk
    {
        FreeBlock blocks[BlockNum];
        MemChunk* next;
    };

    MemChunk* mem_chunk_head;

    // thread-safety related
    std::mutex mtx;
    std::mutex array_mtx;
};

#endif // !_MEMORY_POOL_H_

main.cpp

#include <stdio.h> // getchar
#include <iostream>

#include "memory_pool.hpp"

class MyObject
{
public:
    MyObject(int x): data(x)
    {
        //std::cout << "construct object" << std::endl;
    }

    ~MyObject()
    {
        //std::cout << "destruct object" << std::endl;
    }

    int data;

    // override new and delete to use the memory pool
    void* operator new(size_t size);
    void operator delete(void* p);
    void* operator new[](size_t size);
    void operator delete[](void* p);
};

// define a memory pool whose block size is the size of the class
MemoryPool<sizeof(MyObject), 3> gMemPool;

void* MyObject::operator new(size_t size)
{
    //std::cout << "new object space" << std::endl;
    return gMemPool.allocate();
}

void MyObject::operator delete(void* p)
{
    //std::cout << "free object space" << std::endl;
    gMemPool.deallocate(p);
}

void* MyObject::operator new[](size_t size)
{
    // TODO: contiguous allocation from the pool is not supported yet,
    // so arrays fall back to the global allocator instead of returning NULL
    //return gMemPool.allocate(size);
    return ::operator new[](size);
}

void MyObject::operator delete[](void* p)
{
    // TODO: contiguous allocation from the pool is not supported yet
    //gMemPool.deallocate(p);
    ::operator delete[](p);
}

int main(int argc, char* argv[])
{
    MyObject* p1 = new MyObject(1);
    std::cout << "p1 " << p1 << " " << p1->data << std::endl;

    MyObject* p2 = new MyObject(2);
    std::cout << "p2 " << p2 << " " << p2->data << std::endl;

    delete p2; // returns p2's block to the pool; p3 reuses it

    MyObject* p3 = new MyObject(3);
    std::cout << "p3 " << p3 << " " << p3->data << std::endl;

    MyObject* p4 = new MyObject(4);
    std::cout << "p4 " << p4 << " " << p4->data << std::endl;

    MyObject* p5 = new MyObject(5);
    std::cout << "p5 " << p5 << " " << p5->data << std::endl;

    MyObject* p6 = new MyObject(6);
    std::cout << "p6 " << p6 << " " << p6->data << std::endl;

    delete p1;
    // p2 was already deleted above; deleting it again would be a double delete
    delete p3;
    delete p4;
    delete p5;
    delete p6;

    getchar();
    return 0;
}

Output

p1 00000174BEDE0440 1

p2 00000174BEDE0450 2

p3 00000174BEDE0450 3

p4 00000174BEDE0460 4

p5 00000174BEDD5310 5

p6 00000174BEDD5320 6

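The addresses show that blocks from the same chunk are contiguous (p1, p2 and p4 are 16 bytes apart), and that the block freed by delete p2 is reused for p3. Because each chunk holds three blocks here, p5 and p6 come from a newly allocated chunk.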
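The 16-byte step follows from the layout of FreeBlock in memory_pool.hpp: the payload is padded up to pointer alignment and followed by the next pointer. The sketch below reproduces that layout to check the arithmetic. `BlockLayout`, `expected_block_size`, and `layout_matches` are hypothetical names, and the formula assumes a typical ABI in which pointers are naturally aligned.

```cpp
#include <cassert>
#include <cstddef>

// Same member layout as FreeBlock in memory_pool.hpp, reproduced for illustration.
template <std::size_t BlockSize>
struct BlockLayout
{
    unsigned char data[BlockSize];
    BlockLayout* next;
};

// Expected size: payload rounded up to pointer alignment, plus one pointer.
template <std::size_t BlockSize>
constexpr std::size_t expected_block_size()
{
    return (BlockSize + alignof(void*) - 1) / alignof(void*) * alignof(void*)
           + sizeof(void*);
}

inline bool layout_matches()
{
    // For a 4-byte payload on a 64-bit target this is 8 + 8 = 16 bytes,
    // which matches the 0x10 step between p1, p2 and p4 in the output.
    return sizeof(BlockLayout<4>) == expected_block_size<4>()
        && offsetof(BlockLayout<4>, next) == expected_block_size<4>() - sizeof(void*);
}
```

One consequence of this layout is that every block carries a pointer that is only needed while the block is free; overlaying the pointer on the payload is a common way to avoid that overhead.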

An object's memory is allocated before it is constructed, and the object is destructed before its memory is released.
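This ordering can be observed directly with a class that logs each step. The following is a small sketch; `Traced`, `event_log`, and `trace_lifetime` are hypothetical names, and malloc/free stand in for a pool.

```cpp
#include <cstddef>
#include <cstdlib>
#include <new>
#include <string>

// Records the order of events; hypothetical helper for this illustration.
inline std::string& event_log()
{
    static std::string log;
    return log;
}

class Traced
{
public:
    Traced()  { event_log() += "ctor;"; }
    ~Traced() { event_log() += "dtor;"; }

    // Class-specific allocation function, called before the constructor.
    static void* operator new(std::size_t size)
    {
        event_log() += "new;";
        void* p = std::malloc(size);
        if (!p)
            throw std::bad_alloc();
        return p;
    }

    // Class-specific deallocation function, called after the destructor.
    static void operator delete(void* p)
    {
        event_log() += "delete;";
        std::free(p);
    }
};

// Creates and destroys one object and returns the recorded sequence.
inline std::string trace_lifetime()
{
    event_log().clear();
    Traced* t = new Traced;
    delete t;
    return event_log();
}
```

`trace_lifetime()` returns "new;ctor;dtor;delete;", so a pool that backs operator new and operator delete only ever sees raw, unconstructed memory.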

Notes

Allocation and deallocation must be protected by a lock to be thread-safe.
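As a rough illustration of why the lock matters, the sketch below lets several threads allocate from one small pool at the same time and then checks that no slot was handed out twice. `LockedPool`, `kSlotSize`, and `count_unique_slots` are hypothetical names; the pool is a simplified stand-in, not the MemoryPool class above.

```cpp
#include <cstddef>
#include <mutex>
#include <set>
#include <thread>
#include <vector>

const std::size_t kSlotSize = 16; // assumed slot size for this sketch

// Hypothetical minimal pool: a mutex guards the stack of free slots.
class LockedPool
{
public:
    explicit LockedPool(std::size_t count) : storage_(count * kSlotSize)
    {
        for (std::size_t i = 0; i < count; ++i)
            free_.push_back(&storage_[i * kSlotSize]);
    }

    void* allocate()
    {
        std::lock_guard<std::mutex> lk(mtx_); // one thread at a time
        if (free_.empty())
            return nullptr;
        void* p = free_.back();
        free_.pop_back();
        return p;
    }

    void deallocate(void* p)
    {
        std::lock_guard<std::mutex> lk(mtx_);
        free_.push_back(static_cast<unsigned char*>(p));
    }

private:
    std::vector<unsigned char> storage_;
    std::vector<unsigned char*> free_;
    std::mutex mtx_;
};

// Four threads allocate concurrently; returns how many distinct slots they got.
inline std::size_t count_unique_slots()
{
    const std::size_t threads = 4;
    const std::size_t per_thread = 250;
    LockedPool pool(threads * per_thread);
    std::vector<std::vector<void*>> got(threads);
    std::vector<std::thread> workers;
    for (std::size_t t = 0; t < threads; ++t)
        workers.emplace_back([&, t] {
            // each thread writes only to its own result vector
            for (std::size_t i = 0; i < per_thread; ++i)
                got[t].push_back(pool.allocate());
        });
    for (auto& w : workers)
        w.join();

    std::set<void*> unique;
    for (const auto& v : got)
        unique.insert(v.begin(), v.end());
    return unique.size();
}
```

With the lock in place all 1000 slots are distinct. Without it, two threads could pop the same slot or corrupt the free list, which is a data race and therefore undefined behavior.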

Allocation and deallocation of object arrays is not implemented yet.
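One reason arrays need extra work: an array new-expression asks operator new[] for a single contiguous region of at least n * sizeof(T) bytes, possibly plus implementation-defined bookkeeping overhead, so the request cannot be served from one fixed-size block. The sketch below only records the requested size and delegates to the global allocator. `Item`, `g_last_array_request`, and `request_for_three_items` are hypothetical names, and the fallback is an assumption rather than the article's design.

```cpp
#include <cstddef>
#include <new>

// Size most recently requested through Item::operator new[].
static std::size_t g_last_array_request = 0;

struct Item
{
    int value;
    Item() : value(0) {}
    ~Item() {} // non-trivial destructor: the compiler may store an element count

    static void* operator new[](std::size_t size)
    {
        g_last_array_request = size;
        return ::operator new[](size); // assumption: fall back to the global allocator
    }

    static void operator delete[](void* p)
    {
        ::operator delete[](p);
    }
};

// Allocates and frees a three-element array, returning the size that was requested.
inline std::size_t request_for_three_items()
{
    Item* items = new Item[3];
    delete[] items;
    return g_last_array_request;
}
```

The returned size is at least 3 * sizeof(Item); how much larger it is depends on the compiler. A pool that supports arrays would have to find that many bytes in one contiguous run.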
