
This article explains how to use the nvidia-smi command. The techniques covered are quick, simple, and practical.

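In deep learning and similar workloads, nvidia-smi is a command you run constantly to check GPU usage, and it is essential to learn. Most users only ever run the bare `nvidia-smi` command, and that alone covers the vast majority of situations. In the spirit of being thorough, though, this article surveys the relevant documentation and collects several other commonly used nvidia-smi options.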

NVIDIA's SMI tool supports essentially every NVIDIA GPU released since 2011, including Tesla, Quadro, and GeForce devices from the Fermi architecture onward (Kepler, Maxwell, Pascal, Volta, Turing, Ampere, and so on).

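Supported products include:

- Tesla: S1070, S2050, C1060, C2050/70, M2050/70/90, X2070/90, K10, K20, K20X, K40, K80, M40, P40, P100, V100, A100, H100.
- Quadro: 4000, 5000, 6000, 7000, M2070-Q, K series, M series, P series, RTX series.
- GeForce: varying levels of support, with fewer metrics available than on Tesla and Quadro products.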

(1)nvidia-smi

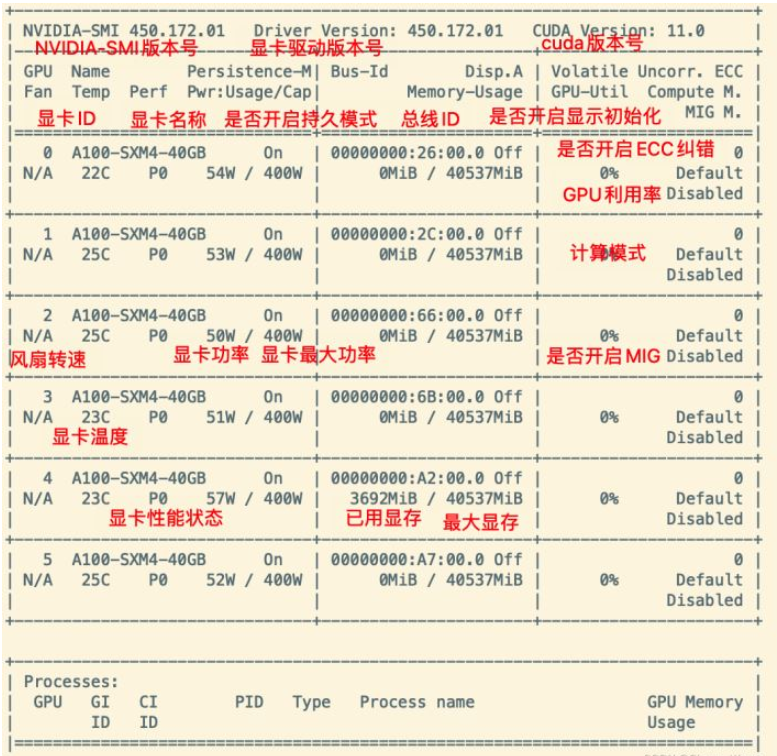

```
username@hostname:~# nvidia-smi
Mon Dec 5 08:48:49 2022
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 450.172.01   Driver Version: 450.172.01   CUDA Version: 11.0     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|                               |                      |               MIG M. |
|===============================+======================+======================|
|   0  A100-SXM4-40GB      On   | 00000000:26:00.0 Off |                    0 |
| N/A   22C    P0    54W / 400W |      0MiB / 40537MiB |      0%      Default |
|                               |                      |             Disabled |
+-------------------------------+----------------------+----------------------+
|   1  A100-SXM4-40GB      On   | 00000000:2C:00.0 Off |                    0 |
| N/A   25C    P0    53W / 400W |      0MiB / 40537MiB |      0%      Default |
|                               |                      |             Disabled |
+-------------------------------+----------------------+----------------------+
|   2  A100-SXM4-40GB      On   | 00000000:66:00.0 Off |                    0 |
| N/A   25C    P0    50W / 400W |      0MiB / 40537MiB |      0%      Default |
|                               |                      |             Disabled |
+-------------------------------+----------------------+----------------------+
|   3  A100-SXM4-40GB      On   | 00000000:6B:00.0 Off |                    0 |
| N/A   23C    P0    51W / 400W |      0MiB / 40537MiB |      0%      Default |
|                               |                      |             Disabled |
+-------------------------------+----------------------+----------------------+
|   4  A100-SXM4-40GB      On   | 00000000:A2:00.0 Off |                    0 |
| N/A   23C    P0    57W / 400W |      0MiB / 40537MiB |      0%      Default |
|                               |                      |             Disabled |
+-------------------------------+----------------------+----------------------+
|   5  A100-SXM4-40GB      On   | 00000000:A7:00.0 Off |                    0 |
| N/A   25C    P0    52W / 400W |      0MiB / 40537MiB |      0%      Default |
|                               |                      |             Disabled |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes:                                                                  |
|  GPU   GI   CI        PID   Type   Process name                  GPU Memory |
|        ID   ID                                                   Usage      |
|=============================================================================|
+-----------------------------------------------------------------------------+
```
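The summary table is designed for people rather than scripts, and the help text later in this article notes that NVSMI output is not guaranteed to stay backwards compatible. For a quick one-off, though, a minimal Python sketch like the one below can pull the numeric readings out of it. It assumes the driver 450.x column layout shown above; the regex and the function name `parse_summary` are illustrative, not part of any NVIDIA tool.

```python
import re
from typing import Dict, List, Union

# Matches the second line of each GPU block in the driver 450.x summary, e.g.
# "| N/A   22C    P0    54W / 400W |      0MiB / 40537MiB |      0%      Default |"
# Assumption: this column layout may differ on other driver versions.
ROW_RE = re.compile(
    r"\|\s*(?P<fan>\S+)\s+(?P<temp>\d+)C\s+(?P<perf>P\d+)\s+"
    r"(?P<pwr>\d+)W\s*/\s*(?P<cap>\d+)W\s*\|\s*"
    r"(?P<used>\d+)MiB\s*/\s*(?P<total>\d+)MiB\s*\|\s*(?P<util>\d+%|N/A)"
)


def parse_summary(text: str) -> List[Dict[str, Union[int, str]]]:
    """Return one dict per GPU, in the order the GPUs appear in the table."""
    gpus = []
    for m in ROW_RE.finditer(text):
        gpus.append({
            "fan": m["fan"],              # "N/A" on passively cooled cards
            "temp_c": int(m["temp"]),
            "perf_state": m["perf"],
            "power_w": int(m["pwr"]),
            "power_cap_w": int(m["cap"]),
            "mem_used_mib": int(m["used"]),
            "mem_total_mib": int(m["total"]),
            "gpu_util": m["util"],        # "N/A" when MIG is enabled
        })
    return gpus
```

Feeding it the text of the table above (for example, captured with `subprocess.run(["nvidia-smi"], capture_output=True, text=True).stdout`) yields one dict per GPU. For anything long-lived, the selective query options in the help output are the sturdier route.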

Here is what the less common fields in the output above mean; the self-explanatory ones are skipped:

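Persistence-M (persistence mode): when enabled, the GPU responds to new jobs faster, at the cost of higher idle power draw. Jobs still run normally with persistence mode off; they just take longer to start.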

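Fan: the fan speed. Actively cooled cards usually report it; server GPUs are typically passively cooled and show N/A, as in the output above.

The value ranges from 0 to 100% and is the speed the system is requesting, so if a fan is obstructed, the actual speed may fall short of the displayed value.

Some devices report no fan speed at all because they are not cooled by their own fan but kept cool by other means (for example, servers in a lab that sits in an air-conditioned room year-round).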

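Temp: GPU temperature in degrees Celsius.

Perf: the performance state, from P0 to P12; P0 is maximum performance and P12 is minimum performance.

Disp.A: Display Active, which indicates whether a display is initialized on the GPU.

Volatile Uncorr. ECC: the number of uncorrectable ECC errors since the driver was last loaded ("volatile" counters reset when the driver reloads). Only some GPUs support ECC, mainly data-center and professional models; many others, including most consumer cards, do not.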
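Because the volatile uncorrectable ECC count is exactly what a health check would watch, it is also exposed through the selective query options listed in the help output. A minimal sketch, assuming the property names `ecc.mode.current` and `ecc.errors.uncorrected.volatile.total` as listed by `nvidia-smi --help-query-gpu` (verify them on your driver); GPUs without ECC report `[N/A]` and are skipped. The function names are illustrative.

```python
import subprocess
from typing import Dict

# Property names assumed from `nvidia-smi --help-query-gpu`; confirm on your driver.
ECC_FIELDS = "index,ecc.mode.current,ecc.errors.uncorrected.volatile.total"


def parse_ecc(csv_text: str) -> Dict[int, int]:
    """Map GPU index -> uncorrectable ECC errors since the last driver load."""
    counts: Dict[int, int] = {}
    for line in csv_text.strip().splitlines():
        index, mode, errors = (f.strip() for f in line.split(","))
        if mode == "Enabled" and errors.isdigit():  # '[N/A]' rows are skipped
            counts[int(index)] = int(errors)
    return counts


def query_ecc() -> Dict[int, int]:
    """Run the selective query once and parse its CSV output."""
    out = subprocess.run(
        ["nvidia-smi", f"--query-gpu={ECC_FIELDS}", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_ecc(out)
```

A check such as `[i for i, n in query_ecc().items() if n > 0]` would then list the GPUs that have seen uncorrectable errors since the driver was loaded.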

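MIG M.: Multi-Instance GPU, a feature introduced with the NVIDIA Ampere architecture that lets one physical GPU be securely partitioned into as many as seven independent GPU instances.

Each instance serves CUDA applications with its own isolated resources, so multiple users can share one card while keeping overall GPU utilization high.

This is especially useful for workloads that do not fully saturate the GPU's compute capacity, where running several jobs side by side makes better use of the hardware.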

On a machine with MIG enabled on some GPUs, nvidia-smi output looks like this:

```
Mon Dec 5 16:48:56 2022
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 450.172.01   Driver Version: 450.172.01   CUDA Version: 11.0     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|                               |                      |               MIG M. |
|===============================+======================+======================|
|   0  A100-SXM4-40GB      On   | 00000000:26:00.0 Off |                    0 |
| N/A   22C    P0    54W / 400W |      0MiB / 40537MiB |      0%      Default |
|                               |                      |             Disabled |
+-------------------------------+----------------------+----------------------+
|   1  A100-SXM4-40GB      On   | 00000000:2C:00.0 Off |                    0 |
| N/A   24C    P0    53W / 400W |      0MiB / 40537MiB |      0%      Default |
|                               |                      |             Disabled |
+-------------------------------+----------------------+----------------------+
|   2  A100-SXM4-40GB      On   | 00000000:66:00.0 Off |                    0 |
| N/A   25C    P0    50W / 400W |      0MiB / 40537MiB |      0%      Default |
|                               |                      |             Disabled |
+-------------------------------+----------------------+----------------------+
|   3  A100-SXM4-40GB      On   | 00000000:6B:00.0 Off |                    0 |
| N/A   23C    P0    51W / 400W |      0MiB / 40537MiB |      0%      Default |
|                               |                      |             Disabled |
+-------------------------------+----------------------+----------------------+
|   4  A100-SXM4-40GB      On   | 00000000:A2:00.0 Off |                    0 |
| N/A   23C    P0    56W / 400W |   3692MiB / 40537MiB |      0%      Default |
|                               |                      |             Disabled |
+-------------------------------+----------------------+----------------------+
|   5  A100-SXM4-40GB      On   | 00000000:A7:00.0 Off |                    0 |
| N/A   25C    P0    52W / 400W |      0MiB / 40537MiB |      0%      Default |
|                               |                      |             Disabled |
+-------------------------------+----------------------+----------------------+
|   6  A100-SXM4-40GB      On   | 00000000:E1:00.0 Off |                   On |
| N/A   24C    P0    43W / 400W |     22MiB / 40537MiB |     N/A      Default |
|                               |                      |              Enabled |
+-------------------------------+----------------------+----------------------+
|   7  A100-SXM4-40GB      On   | 00000000:E7:00.0 Off |                   On |
| N/A   22C    P0    52W / 400W |  19831MiB / 40537MiB |     N/A      Default |
|                               |                      |              Enabled |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| MIG devices:                                                                |
+------------------+----------------------+-----------+-----------------------+
| GPU  GI  CI  MIG |         Memory-Usage |        Vol|         Shared        |
|      ID  ID  Dev |           BAR1-Usage | SM     Unc| CE  ENC  DEC  OFA  JPG|
|                  |                      |        ECC|                       |
|==================+======================+===========+=======================|
|  6    1   0   0  |     11MiB / 20096MiB | 42      0 |  3   0    2    0    0 |
|                  |      0MiB / 32767MiB |           |                       |
+------------------+----------------------+-----------+-----------------------+
|  6    2   0   1  |     11MiB / 20096MiB | 42      0 |  3   0    2    0    0 |
|                  |      0MiB / 32767MiB |           |                       |
+------------------+----------------------+-----------+-----------------------+
|  7    1   0   0  |     11MiB / 20096MiB | 42      0 |  3   0    2    0    0 |
|                  |      0MiB / 32767MiB |           |                       |
+------------------+----------------------+-----------+-----------------------+
|  7    2   0   1  |  19820MiB / 20096MiB | 42      0 |  3   0    2    0    0 |
|                  |      4MiB / 32767MiB |           |                       |
+------------------+----------------------+-----------+-----------------------+

+-----------------------------------------------------------------------------+
| Processes:                                                                  |
|  GPU   GI   CI        PID   Type   Process name                  GPU Memory |
|        ID   ID                                                   Usage      |
|=============================================================================|
|    4   N/A  N/A     22888      C   python                           3689MiB |
|    7    2    0      37648      C   ...vs/tf2.4-py3.8/bin/python    19805MiB |
+-----------------------------------------------------------------------------+
```

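As shown above, I used MIG to split GPUs 6 and 7 into four GPU instances: GPU6:0, GPU6:1, GPU7:0, and GPU7:1.

Each instance has 20 GB of memory. MIG can split an A100 into as many as seven instances; I'll cover the details in a future post.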
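To see from a script which GPUs are running with MIG, and whether a MIG change made with `-mig` is still waiting to take effect (on the A100 such a change typically stays pending until the GPU is reset), the selective query options can report the current and pending modes. A minimal sketch, assuming the property names `mig.mode.current` and `mig.mode.pending` from `nvidia-smi --help-query-gpu` on R450-era and later drivers; the helper names are illustrative.

```python
import subprocess
from typing import Dict, List, Tuple

# Property names assumed from `nvidia-smi --help-query-gpu`; confirm on your driver.
MIG_FIELDS = "index,mig.mode.current,mig.mode.pending"
Modes = Dict[int, Tuple[str, str]]  # GPU index -> (current mode, pending mode)


def parse_mig_modes(csv_text: str) -> Modes:
    """Parse `--format=csv,noheader` lines of index, current, pending."""
    modes: Modes = {}
    for line in csv_text.strip().splitlines():
        index, current, pending = (f.strip() for f in line.split(","))
        modes[int(index)] = (current, pending)
    return modes


def mig_enabled(modes: Modes) -> List[int]:
    """GPUs currently running with MIG enabled."""
    return sorted(i for i, (cur, _) in modes.items() if cur == "Enabled")


def pending_change(modes: Modes) -> List[int]:
    """GPUs whose pending MIG mode differs from the current one."""
    return sorted(i for i, (cur, pend) in modes.items() if cur != pend)


def query_mig_modes() -> Modes:
    """Run the selective query once and parse its output."""
    out = subprocess.run(
        ["nvidia-smi", f"--query-gpu={MIG_FIELDS}", "--format=csv,noheader"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_mig_modes(out)
```

On the machine above, `mig_enabled(query_mig_modes())` would be expected to list GPUs 6 and 7.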

Run `nvidia-smi -h` to view the command's help text:

```
username@hostname:$ nvidia-smi -h
NVIDIA System Management Interface -- v450.172.01

NVSMI provides monitoring information for Tesla and select Quadro devices.
The data is presented in either a plain text or an XML format, via stdout or a file.
NVSMI also provides several management operations for changing the device state.

Note that the functionality of NVSMI is exposed through the NVML C-based
library. See the NVIDIA developer website for more information about NVML.
Python wrappers to NVML are also available. The output of NVSMI is
not guaranteed to be backwards compatible; NVML and the bindings are backwards
compatible.

http://developer.nvidia.com/nvidia-management-library-nvml/
http://pypi.python.org/pypi/nvidia-ml-py/
Supported products:
- Full Support
    - All Tesla products, starting with the Kepler architecture
    - All Quadro products, starting with the Kepler architecture
    - All GRID products, starting with the Kepler architecture
    - GeForce Titan products, starting with the Kepler architecture
- Limited Support
    - All Geforce products, starting with the Kepler architecture
nvidia-smi [OPTION1 [ARG1]] [OPTION2 [ARG2]] ...

    -h,   --help                Print usage information and exit.

  LIST OPTIONS:

    -L,   --list-gpus           Display a list of GPUs connected to the system.
    -B,   --list-blacklist-gpus Display a list of blacklisted GPUs in the system.

  SUMMARY OPTIONS:

    <no arguments>              Show a summary of GPUs connected to the system.

    [plus any of]

    -i,   --id=                 Target a specific GPU.
    -f,   --filename=           Log to a specified file, rather than to stdout.
    -l,   --loop=               Probe until Ctrl+C at specified second interval.

  QUERY OPTIONS:

    -q,   --query               Display GPU or Unit info.

    [plus any of]

    -u,   --unit                Show unit, rather than GPU, attributes.
    -i,   --id=                 Target a specific GPU or Unit.
    -f,   --filename=           Log to a specified file, rather than to stdout.
    -x,   --xml-format          Produce XML output.
          --dtd                 When showing xml output, embed DTD.
    -d,   --display=            Display only selected information: MEMORY,
                                    UTILIZATION, ECC, TEMPERATURE, POWER, CLOCK,
                                    COMPUTE, PIDS, PERFORMANCE, SUPPORTED_CLOCKS,
                                    PAGE_RETIREMENT, ACCOUNTING, ENCODER_STATS,
                                    FBC_STATS, ROW_REMAPPER
                                Flags can be combined with comma e.g. ECC,POWER.
                                Sampling data with max/min/avg is also returned
                                for POWER, UTILIZATION and CLOCK display types.
                                Doesn't work with -u or -x flags.
    -l,   --loop=               Probe until Ctrl+C at specified second interval.
    -lms, --loop-ms=            Probe until Ctrl+C at specified millisecond interval.

  SELECTIVE QUERY OPTIONS:

    Allows the caller to pass an explicit list of properties to query.

    [one of]

    --query-gpu=                Information about GPU.
                                Call --help-query-gpu for more info.
    --query-supported-clocks=   List of supported clocks.
                                Call --help-query-supported-clocks for more info.
    --query-compute-apps=       List of currently active compute processes.
                                Call --help-query-compute-apps for more info.
    --query-accounted-apps=     List of accounted compute processes.
                                Call --help-query-accounted-apps for more info.
                                This query is not supported on vGPU host.
    --query-retired-pages=      List of device memory pages that have been retired.
                                Call --help-query-retired-pages for more info.
    --query-remapped-rows=      Information about remapped rows.
                                Call --help-query-remapped-rows for more info.

    [mandatory]

    --format=                   Comma separated list of format options:
                                  csv - comma separated values (MANDATORY)
                                  noheader - skip the first line with column headers
                                  nounits - don't print units for numerical
                                             values

    [plus any of]

    -i,   --id=                 Target a specific GPU or Unit.
    -f,   --filename=           Log to a specified file, rather than to stdout.
    -l,   --loop=               Probe until Ctrl+C at specified second interval.
    -lms, --loop-ms=            Probe until Ctrl+C at specified millisecond interval.

  DEVICE MODIFICATION OPTIONS:

    [any one of]

    -pm,  --persistence-mode=   Set persistence mode: 0/DISABLED, 1/ENABLED
    -e,   --ecc-config=         Toggle ECC support: 0/DISABLED, 1/ENABLED
    -p,   --reset-ecc-errors=   Reset ECC error counts: 0/VOLATILE, 1/AGGREGATE
    -c,   --compute-mode=       Set MODE for compute applications:
                                0/DEFAULT, 1/EXCLUSIVE_PROCESS, 2/PROHIBITED
          --gom=                Set GPU Operation Mode:
                                    0/ALL_ON, 1/COMPUTE, 2/LOW_DP
    -r    --gpu-reset           Trigger reset of the GPU.
                                Can be used to reset the GPU HW state in situations
                                that would otherwise require a machine reboot.
                                Typically useful if a double bit ECC error has
                                occurred.
                                Reset operations are not guarenteed to work in
                                all cases and should be used with caution.
    -vm   --virt-mode=          Switch GPU Virtualization Mode:
                                Sets GPU virtualization mode to 3/VGPU or 4/VSGA
                                Virtualization mode of a GPU can only be set when
                                it is running on a hypervisor.
    -lgc  --lock-gpu-clocks=    Specifies <minGpuClock,maxGpuClock> clocks as a
                                    pair (e.g. 1500,1500) that defines the range
                                    of desired locked GPU clock speed in MHz.
                                    Setting this will supercede application clocks
                                    and take effect regardless if an app is running.
                                    Input can also be a singular desired clock value
                                    (e.g. <GpuClockValue>).
    -rgc  --reset-gpu-clocks
                                Resets the Gpu clocks to the default values.
    -ac   --applications-clocks= Specifies <memory,graphics> clocks as a
                                    pair (e.g. 2000,800) that defines GPU's
                                    speed in MHz while running applications on a GPU.
    -rac  --reset-applications-clocks
                                Resets the applications clocks to the default values.
    -acp  --applications-clocks-permission=
                                Toggles permission requirements for -ac and -rac commands:
                                0/UNRESTRICTED, 1/RESTRICTED
    -pl   --power-limit=        Specifies maximum power management limit in watts.
    -cc   --cuda-clocks=        Overrides or restores default CUDA clocks.
                                In override mode, GPU clocks higher frequencies when running CUDA applications.
                                Only on supported devices starting from the Volta series.
                                Requires administrator privileges.
                                0/RESTORE_DEFAULT, 1/OVERRIDE
    -am   --accounting-mode=    Enable or disable Accounting Mode: 0/DISABLED, 1/ENABLED
    -caa  --clear-accounted-apps
                                Clears all the accounted PIDs in the buffer.
          --auto-boost-default= Set the default auto boost policy to 0/DISABLED
                                or 1/ENABLED, enforcing the change only after the
                                last boost client has exited.
          --auto-boost-permission=
                                Allow non-admin/root control over auto boost mode:
                                0/UNRESTRICTED, 1/RESTRICTED
    -mig  --multi-instance-gpu= Enable or disable Multi Instance GPU: 0/DISABLED, 1/ENABLED
                                Requires root.

    [plus optional]

    -i,   --id=                 Target a specific GPU.
    -eow, --error-on-warning    Return a non-zero error for warnings.

  UNIT MODIFICATION OPTIONS:

    -t,   --toggle-led=         Set Unit LED state: 0/GREEN, 1/AMBER

    [plus optional]

    -i,   --id=                 Target a specific Unit.

  SHOW DTD OPTIONS:

          --dtd                 Print device DTD and exit.

    [plus optional]

    -f,   --filename=           Log to a specified file, rather than to stdout.
    -u,   --unit                Show unit, rather than device, DTD.

    --debug=                    Log encrypted debug information to a specified file.

 STATISTICS: (EXPERIMENTAL)
    stats                       Displays device statistics. "nvidia-smi stats -h" for more information.

 Device Monitoring:
    dmon                        Displays device stats in scrolling format.
                                "nvidia-smi dmon -h" for more information.

    daemon                      Runs in background and monitor devices as a daemon process.
                                This is an experimental feature. Not supported on Windows baremetal
                                "nvidia-smi daemon -h" for more information.

    replay                      Used to replay/extract the persistent stats generated by daemon.
                                This is an experimental feature.
                                "nvidia-smi replay -h" for more information.

 Process Monitoring:
    pmon                        Displays process stats in scrolling format.
                                "nvidia-smi pmon -h" for more information.

 TOPOLOGY:
    topo                        Displays device/system topology. "nvidia-smi topo -h" for more information.

 DRAIN STATES:
    drain                       Displays/modifies GPU drain states for power idling. "nvidia-smi drain -h" for more information.

 NVLINK:
    nvlink                      Displays device nvlink information. "nvidia-smi nvlink -h" for more information.

 CLOCKS:
    clocks                      Control and query clock information. "nvidia-smi clocks -h" for more information.

 ENCODER SESSIONS:
    encodersessions             Displays device encoder sessions information. "nvidia-smi encodersessions -h" for more information.

 FBC SESSIONS:
    fbcsessions                 Displays device FBC sessions information. "nvidia-smi fbcsessions -h" for more information.

 GRID vGPU:
    vgpu                        Displays vGPU information. "nvidia-smi vgpu -h" for more information.

 MIG:
    mig                         Provides controls for MIG management. "nvidia-smi mig -h" for more information.

Please see the nvidia-smi(1) manual page for more detailed information.
```
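The SELECTIVE QUERY OPTIONS section above is the script-friendly part of the tool: `--query-gpu=` picks properties, `--format=` must include `csv` and may add `noheader` and `nounits`, and `-i`/`--id=` and `-l`/`--loop=` can be combined with them. A small helper that assembles such a command from those documented options might look like this; `build_query_cmd` is a hypothetical name of our own, and the property names passed in should come from `nvidia-smi --help-query-gpu`.

```python
from typing import List, Optional, Sequence


def build_query_cmd(fields: Sequence[str], gpu_id: Optional[str] = None,
                    loop_s: Optional[int] = None, header: bool = False,
                    units: bool = False) -> List[str]:
    """Assemble an nvidia-smi selective query from the options in the help text."""
    fmt = ["csv"]                       # csv is mandatory for --format
    if not header:
        fmt.append("noheader")          # drop the column-header line
    if not units:
        fmt.append("nounits")           # drop units such as "MiB" and "%"
    cmd = ["nvidia-smi", "--query-gpu=" + ",".join(fields),
           "--format=" + ",".join(fmt)]
    if gpu_id is not None:
        cmd.append(f"--id={gpu_id}")    # -i / --id: target a specific GPU
    if loop_s is not None:
        cmd.append(f"--loop={loop_s}")  # -l / --loop: repeat every loop_s seconds
    return cmd
```

For example, `subprocess.run(build_query_cmd(["index", "memory.used"], gpu_id="0"), capture_output=True, text=True)` would query GPU 0's used memory once. Returning a list rather than a string avoids shell quoting issues when the command is passed to `subprocess`.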

Run `nvidia-smi -L` to list every GPU and its UUID:

```
username@hostname:$ nvidia-smi -L
GPU 0: A100-SXM4-40GB (UUID: GPU-9f2df045-8650-7b2e-d442-cc0d7ba0150d)
GPU 1: A100-SXM4-40GB (UUID: GPU-ad117600-5d0e-557d-c81d-7c4d60555eaa)
GPU 2: A100-SXM4-40GB (UUID: GPU-087ca21d-0c14-66d0-3869-59ff65a48d58)
GPU 3: A100-SXM4-40GB (UUID: GPU-eb9943a0-d78e-4220-fdb2-e51f2b490064)
GPU 4: A100-SXM4-40GB (UUID: GPU-8302a1f7-a8be-753f-eb71-6f60c5de1703)
GPU 5: A100-SXM4-40GB (UUID: GPU-ee1be011-0d98-3d6f-8c89-b55c28966c63)
GPU 6: A100-SXM4-40GB (UUID: GPU-8b3c7f5b-3fb4-22c3-69c9-4dd6a9f31586)
  MIG 3g.20gb Device 0: (UUID: MIG-GPU-8b3c7f5b-3fb4-22c3-69c9-4dd6a9f31586/1/0)
  MIG 3g.20gb Device 1: (UUID: MIG-GPU-8b3c7f5b-3fb4-22c3-69c9-4dd6a9f31586/2/0)
GPU 7: A100-SXM4-40GB (UUID: GPU-fab40b5f-c286-603f-8909-cdb73e5ffd6c)
  MIG 3g.20gb Device 0: (UUID: MIG-GPU-fab40b5f-c286-603f-8909-cdb73e5ffd6c/1/0)
  MIG 3g.20gb Device 1: (UUID: MIG-GPU-fab40b5f-c286-603f-8909-cdb73e5ffd6c/2/0)
```
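Because `-L` prints one line per GPU followed by indented lines for its MIG devices, it is easy to turn into a structure that a job launcher could use. A minimal sketch, assuming the `GPU n: NAME (UUID: ...)` and `MIG <profile> Device n: (UUID: ...)` line shapes shown above; the regex and the function name `parse_list_gpus` are illustrative.

```python
import re
from typing import Any, Dict, List

# One alternative per line shape seen in the `nvidia-smi -L` output above.
ENTRY_RE = re.compile(
    r"GPU (?P<idx>\d+): (?P<name>[^(]+?) \(UUID: (?P<uuid>GPU-[0-9a-f-]+)\)"
    r"|MIG (?P<profile>\S+)\s+Device (?P<mig_idx>\d+): \(UUID: (?P<mig_uuid>MIG-[^)]+)\)"
)


def parse_list_gpus(text: str) -> List[Dict[str, Any]]:
    """Return GPUs in listing order; MIG devices attach to the GPU listed before them."""
    gpus: List[Dict[str, Any]] = []
    for m in ENTRY_RE.finditer(text):
        if m["idx"] is not None:
            gpus.append({"index": int(m["idx"]), "name": m["name"],
                         "uuid": m["uuid"], "mig": []})
        elif gpus:
            gpus[-1]["mig"].append({"profile": m["profile"],
                                    "device": int(m["mig_idx"]),
                                    "uuid": m["mig_uuid"]})
    return gpus
```

Matching both line shapes with a single `finditer` pass keeps the original order, which is what lets each MIG device be attached to the GPU listed just before it.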

Run `nvidia-smi -q` to print detailed information for every GPU. To show a single GPU, select it with the `-i` option:

```
username@hostname:$ nvidia-smi -q -i 0

==============NVSMI LOG==============

Timestamp                                 : Mon Dec 5 17:31:45 2022
Driver Version                            : 450.172.01
CUDA Version                              : 11.0

Attached GPUs                             : 8
GPU 00000000:26:00.0
    Product Name                          : A100-SXM4-40GB
    Product Brand                         : Tesla
    Display Mode                          : Enabled
    Display Active                        : Disabled
    Persistence Mode                      : Enabled
    MIG Mode
        Current                           : Disabled
        Pending                           : Disabled
    Accounting Mode                       : Disabled
    Accounting Mode Buffer Size           : 4000
    Driver Model
        Current                           : N/A
        Pending                           : N/A
    Serial Number                         : 1564720004631
    GPU UUID                              : GPU-9f2df045-8650-7b2e-d442-cc0d7ba0150d
    Minor Number                          : 2
    VBIOS Version                         : 92.00.19.00.10
    MultiGPU Board                        : No
    Board ID                              : 0x2600
    GPU Part Number                       : 692-2G506-0200-002
    Inforom Version
        Image Version                     : G506.0200.00.04
        OEM Object                        : 2.0
        ECC Object                        : 6.16
        Power Management Object           : N/A
    GPU Operation Mode
        Current                           : N/A
        Pending                           : N/A
    GPU Virtualization Mode
        Virtualization Mode               : None
        Host VGPU Mode                    : N/A
    IBMNPU
        Relaxed Ordering Mode             : N/A
    PCI
        Bus                               : 0x26
        Device                            : 0x00
        Domain                            : 0x0000
        Device Id                         : 0x20B010DE
        Bus Id                            : 00000000:26:00.0
        Sub System Id                     : 0x134F10DE
        GPU Link Info
            PCIe Generation
                Max                       : 4
                Current                   : 4
            Link Width
                Max                       : 16x
                Current                   : 16x
        Bridge Chip
            Type                          : N/A
            Firmware                      : N/A
        Replays Since Reset               : 0
        Replay Number Rollovers           : 0
        Tx Throughput                     : 0 KB/s
        Rx Throughput                     : 0 KB/s
    Fan Speed                             : N/A
    Performance State                     : P0
    Clocks Throttle Reasons
        Idle                              : Active
        Applications Clocks Setting       : Not Active
        SW Power Cap                      : Not Active
        HW Slowdown                       : Not Active
            HW Thermal Slowdown           : Not Active
            HW Power Brake Slowdown       : Not Active
        Sync Boost                        : Not Active
        SW Thermal Slowdown               : Not Active
        Display Clock Setting             : Not Active
    FB Memory Usage
        Total                             : 40537 MiB
        Used                              : 0 MiB
        Free                              : 40537 MiB
    BAR1 Memory Usage
        Total                             : 65536 MiB
        Used                              : 29 MiB
        Free                              : 65507 MiB
    Compute Mode                          : Default
    Utilization
        Gpu                               : 0 %
        Memory                            : 0 %
        Encoder                           : 0 %
        Decoder                           : 0 %
    Encoder Stats
        Active Sessions                   : 0
        Average FPS                       : 0
        Average Latency                   : 0
    FBC Stats
        Active Sessions                   : 0
        Average FPS                       : 0
        Average Latency                   : 0
    Ecc Mode
        Current                           : Enabled
        Pending                           : Enabled
    ECC Errors
        Volatile
            SRAM Correctable              : 0
            SRAM Uncorrectable            : 0
            DRAM Correctable              : 0
            DRAM Uncorrectable            : 0
        Aggregate
            SRAM Correctable              : 0
            SRAM Uncorrectable            : 0
            DRAM Correctable              : 0
            DRAM Uncorrectable            : 0
    Retired Pages
        Single Bit ECC                    : N/A
        Double Bit ECC                    : N/A
        Pending Page Blacklist            : N/A
    Remapped Rows
        Correctable Error                 : 0
        Uncorrectable Error               : 0
        Pending                           : No
        Remapping Failure Occurred        : No
        Bank Remap Availability Histogram
            Max                           : 640 bank(s)
            High                          : 0 bank(s)
            Partial                       : 0 bank(s)
            Low                           : 0 bank(s)
            None                          : 0 bank(s)
    Temperature
        GPU Current Temp                  : 26 C
        GPU Shutdown Temp                 : 92 C
        GPU Slowdown Temp                 : 89 C
        GPU Max Operating Temp            : 85 C
        Memory Current Temp               : 38 C
        Memory Max Operating Temp         : 95 C
    Power Readings
        Power Management                  : Supported
        Power Draw                        : 55.79 W
        Power Limit                       : 400.00 W
        Default Power Limit               : 400.00 W
        Enforced Power Limit              : 400.00 W
        Min Power Limit                   : 100.00 W
        Max Power Limit                   : 400.00 W
    Clocks
        Graphics                          : 210 MHz
        SM                                : 210 MHz
        Memory                            : 1215 MHz
        Video                             : 585 MHz
    Applications Clocks
        Graphics                          : 1095 MHz
        Memory                            : 1215 MHz
    Default Applications Clocks
        Graphics                          : 1095 MHz
        Memory                            : 1215 MHz
    Max Clocks
        Graphics                          : 1410 MHz
        SM                                : 1410 MHz
        Memory                            : 1215 MHz
        Video                             : 1290 MHz
    Max Customer Boost Clocks
        Graphics                          : 1410 MHz
    Clock Policy
        Auto Boost                        : N/A
        Auto Boost Default                : N/A
    Processes                             : None
```
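The `-q` report nests sections purely by indentation, with leaf lines in `Key : Value` form. A rough Python sketch that rebuilds that hierarchy as nested dictionaries, assuming the space-indentation style of the driver 450 output above; `parse_query` is an illustrative name. For anything long-lived, the help text's `-x` (XML) output is the sturdier choice.

```python
from typing import Any, Dict, List, Tuple


def parse_query(text: str) -> Dict[str, Any]:
    """Turn indented 'Key : Value' lines from `nvidia-smi -q` into nested dicts.

    Assumption: nesting is expressed only through leading spaces, and a line
    without ' : ' opens a new section (e.g. 'FB Memory Usage').
    """
    root: Dict[str, Any] = {}
    stack: List[Tuple[int, Dict[str, Any]]] = [(-1, root)]  # (indent, section)
    for raw in text.splitlines():
        stripped = raw.strip()
        if not stripped or stripped.startswith("="):
            continue  # skip blank lines and the "====NVSMI LOG====" banner
        indent = len(raw) - len(raw.lstrip())
        while stack[-1][0] >= indent:
            stack.pop()  # close sections at the same or a deeper level
        parent = stack[-1][1]
        key, sep, value = stripped.partition(" : ")
        if sep:
            parent[key.strip()] = value.strip()
        else:
            child: Dict[str, Any] = {}
            parent[stripped] = child
            stack.append((indent, child))
    return root
```

With the output above, `parse_query(text)["GPU 00000000:26:00.0"]["Temperature"]["GPU Current Temp"]` would give `"26 C"`. Splitting on `" : "` (with spaces) rather than `":"` keeps values such as the timestamp or the PCI bus ID intact.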

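`nvidia-smi -l [seconds]` redraws the summary every `seconds` seconds. For watching GPU utilization, a short interval of 1 or 2 seconds is typical; for example, to refresh every 2 seconds:

```
nvidia-smi -l 2
```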
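Redrawing the full table is fine for watching by eye, but for logging, `-l` also combines with the selective query options from the help text: nvidia-smi then prints one CSV line per GPU per interval. A minimal sketch, assuming the `--query-gpu` property names `utilization.gpu`, `memory.used`, and `memory.total`; values such as `[N/A]` (for example, utilization on a MIG-enabled GPU, as in the MIG output above) become `None`. The function names are illustrative.

```python
import subprocess
from typing import Dict, Iterable, Iterator, Optional

FIELDS = ["index", "utilization.gpu", "memory.used", "memory.total"]


def _to_number(value: str) -> Optional[float]:
    """Convert a CSV cell to float; '[N/A]' and similar become None."""
    try:
        return float(value)
    except ValueError:
        return None


def parse_rows(lines: Iterable[str]) -> Iterator[Dict[str, Optional[float]]]:
    """Parse `--format=csv,noheader,nounits` lines into dicts keyed by field name."""
    for line in lines:
        values = [v.strip() for v in line.split(",")]
        if len(values) != len(FIELDS):
            continue  # skip blank or malformed lines
        yield dict(zip(FIELDS, (_to_number(v) for v in values)))


def watch(interval_s: int = 2) -> None:
    """Print one sample per GPU every interval_s seconds until Ctrl+C."""
    cmd = ["nvidia-smi", "--query-gpu=" + ",".join(FIELDS),
           "--format=csv,noheader,nounits", "-l", str(interval_s)]
    with subprocess.Popen(cmd, stdout=subprocess.PIPE, text=True) as proc:
        try:
            for row in parse_rows(proc.stdout):
                print(row)
        except KeyboardInterrupt:
            proc.terminate()
```

Keeping the parser separate from the process handling means the same `parse_rows` works on a log file written with `-f` just as well as on the live stream.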

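On Linux, you can put GPUs into persistence mode, which keeps the NVIDIA driver loaded even when no application is using the cards. This is especially useful when running a series of short jobs. Persistence mode draws more power on each idle GPU, but it avoids the considerable delay that otherwise occurs every time a GPU application starts. It is also necessary if you have assigned specific clock speeds or power limits to the GPUs, because those settings are lost when the NVIDIA driver is unloaded. Enable persistence mode on all GPUs by running: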

```
nvidia-smi -pm 1
```

You can also enable persistence mode on a specific GPU:

```
nvidia-smi -pm 1 -i 0
```
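Before running `-pm 1` across many machines, it can help to check which GPUs actually need it. A minimal sketch, assuming the `persistence_mode` property from `nvidia-smi --help-query-gpu` (which reports `Enabled` or `Disabled`); the helper names are illustrative, and changing the mode typically requires root.

```python
import subprocess
from typing import List


def gpus_without_persistence(csv_text: str) -> List[int]:
    """Return indices of GPUs whose persistence_mode reads 'Disabled'."""
    disabled = []
    for line in csv_text.strip().splitlines():
        index, mode = (f.strip() for f in line.split(","))
        if mode == "Disabled":
            disabled.append(int(index))
    return disabled


def enable_missing_persistence() -> List[int]:
    """Query every GPU, then run `nvidia-smi -pm 1 -i <n>` only where it is off."""
    out = subprocess.run(
        ["nvidia-smi", "--query-gpu=index,persistence_mode", "--format=csv,noheader"],
        capture_output=True, text=True, check=True,
    ).stdout
    targets = gpus_without_persistence(out)
    for i in targets:
        subprocess.run(["nvidia-smi", "-pm", "1", "-i", str(i)], check=True)
    return targets
```

Returning the list of GPUs that were changed makes it easy to log what a provisioning script actually did.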

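Monitor overall GPU usage at a 1-second update interval with `dmon`, which the help output above describes as displaying device stats in scrolling format:

```
nvidia-smi dmon
```

Monitor per-process GPU usage at a 1-second update interval with `pmon`:

```
nvidia-smi pmon
```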
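When a script needs a per-process view rather than a scrolling display, the help text's `--query-compute-apps=` option is the machine-readable route. A minimal sketch, assuming the property names `gpu_uuid`, `pid`, `process_name`, and `used_memory` from `nvidia-smi --help-query-compute-apps`; appending `-l 1` to the command would repeat the query every second. The function names are illustrative.

```python
import subprocess
from collections import defaultdict
from typing import Dict, List, Optional, Tuple

FIELDS = "gpu_uuid,pid,process_name,used_memory"
App = Tuple[int, str, Optional[int]]  # (pid, process name, used memory in MiB)


def parse_compute_apps(csv_text: str) -> Dict[str, List[App]]:
    """Group compute processes by the UUID of the GPU they run on."""
    by_gpu: Dict[str, List[App]] = defaultdict(list)
    for line in csv_text.strip().splitlines():
        uuid, pid, rest = (f.strip() for f in line.split(",", 2))
        name, mem = (f.strip() for f in rest.rsplit(",", 1))  # names may contain commas
        by_gpu[uuid].append((int(pid), name, int(mem) if mem.isdigit() else None))
    return dict(by_gpu)


def query_compute_apps() -> Dict[str, List[App]]:
    """Run the compute-apps query once and group the result per GPU."""
    out = subprocess.run(
        ["nvidia-smi", f"--query-compute-apps={FIELDS}", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_compute_apps(out)
```

Grouping by GPU UUID rather than index lines up with the UUIDs printed by `nvidia-smi -L`, which stay stable even if device enumeration order changes.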

(9)…

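To make proper use of more advanced NVIDIA GPU features (such as GPUDirect), the system topology must be configured correctly. Topology refers to how the various system devices (GPUs, InfiniBand HCAs, storage controllers, and so on) connect to each other and to the system's CPUs. Some topologies reduce performance or even make certain features unavailable. To help diagnose such problems, nvidia-smi supports system topology and connectivity queries: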

```
nvidia-smi topo --matrix          # show the system/GPU topology matrix
nvidia-smi nvlink --status        # query the status of each NVLink link
nvidia-smi nvlink --capabilities  # query the capabilities of each NVLink link
```
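In the matrix printed by `topo --matrix`, each GPU pair is labeled with a connection type, and cells of the form `NV#` mean the pair is joined by a bonded set of # NVLinks. A minimal sketch that lists the NVLink-connected GPU pairs, assuming the matrix is tab-separated with a header row of `GPU0`, `GPU1`, ... and a first column of row labels (an assumption about the layout; verify it on your system). The function name `nvlink_pairs` is illustrative.

```python
import re
from typing import List, Tuple


def nvlink_pairs(matrix: str) -> List[Tuple[str, str, int]]:
    """List (gpu_a, gpu_b, link_count) for every NV# cell, each pair once."""
    lines = [ln for ln in matrix.splitlines() if ln.strip()]
    if not lines:
        return []
    header = [c.strip() for c in lines[0].split("\t")]  # first cell is empty
    pairs = []
    for line in lines[1:]:
        cells = [c.strip() for c in line.split("\t")]
        row = cells[0]
        if not row.startswith("GPU"):
            continue  # NIC rows, the legend, and other non-GPU lines
        for col, cell in zip(header[1:], cells[1:]):
            m = re.fullmatch(r"NV(\d+)", cell)
            # The matrix is symmetric, so keep only one orientation of each pair.
            if m and col.startswith("GPU") and row < col:
                pairs.append((row, col, int(m.group(1))))
    return pairs
```

GPU pairs missing from the result are connected only through PCIe or the CPU interconnect, which is often the first thing to check when multi-GPU communication is slower than expected.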

That covers the most commonly used nvidia-smi options; try them out on your own machines.
