Recently one of our SQL queries was taking more than two hours to run, so I set out to optimize it.

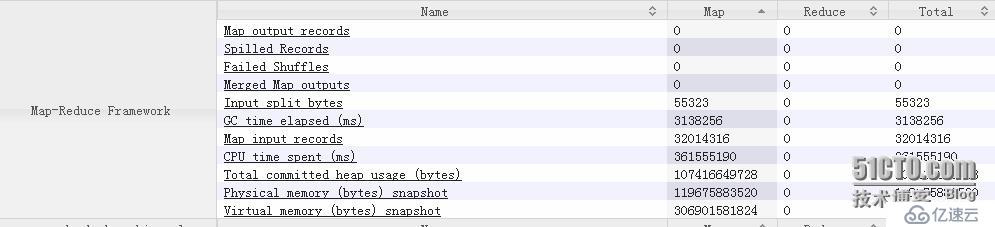

First I looked at the counters of the job generated by the Hive SQL and found that the total CPU time spent was far too high, an estimated 100.4319973 hours.

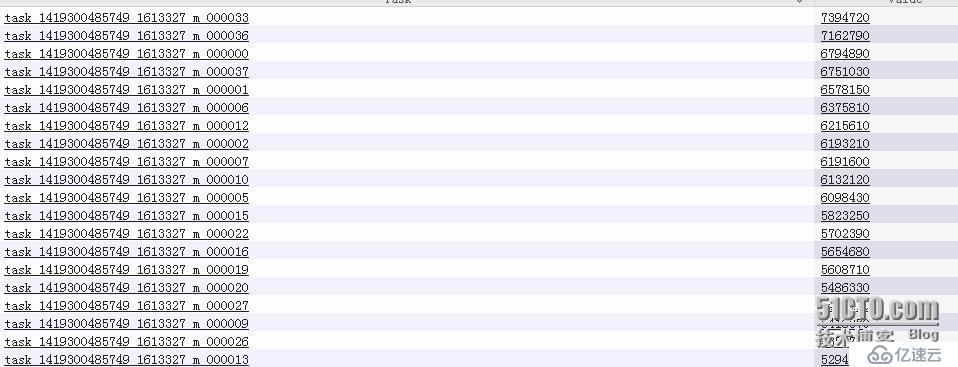

Looking at the CPU time spent per map, the slowest one took 2.0540889 hours.

Suggested changes:

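1. mapreduce.input.fileinputformat.split.maxsize is currently 256000000. Lower it to increase the number of maps. This had an immediate effect: setting it to 32000000 produced 500+ maps, and the job's runtime dropped from the original 2 hours to 47 minutes.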
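To see why a smaller maximum split size raises the map count, here is a rough back-of-the-envelope sketch. It assumes a total input of about 16 GB, a hypothetical figure chosen only because it is consistent with the 500+ maps reported above. The class and method names are illustrative too. The real split count also depends on file boundaries, block size, and the input format in use, so treat the result as an estimate.

```java
// Rough estimate of how many input splits (and therefore map tasks) a job gets
// for a given maximum split size. Illustrative only; not Hadoop's actual logic.
final class SplitEstimate {

    // Ceiling division: every started chunk of maxSplitBytes needs its own split.
    static long estimateSplits(long totalInputBytes, long maxSplitBytes) {
        if (totalInputBytes < 0 || maxSplitBytes <= 0) {
            throw new IllegalArgumentException("sizes must be positive");
        }
        return (totalInputBytes + maxSplitBytes - 1) / maxSplitBytes;
    }

    public static void main(String[] args) {
        long totalInputBytes = 16_000_000_000L; // ASSUMPTION: hypothetical input size

        // Old setting: 256000000 bytes per split
        System.out.println(estimateSplits(totalInputBytes, 256_000_000L)); // prints 63

        // New setting: 32000000 bytes per split
        System.out.println(estimateSplits(totalInputBytes, 32_000_000L));  // prints 500
    }
}
```

Under this assumption the same CPU work is spread over roughly eight times as many map tasks, which shortens wall-clock time only if the cluster has free slots to run them in parallel.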

2. Optimize the UDFs getPageID, getSiteId, and getPageValue (these methods do a lot of regular-expression text matching).

2.1 For regular-expression tuning, see:

http://www.fasterj.com/articles/regex1.shtml

http://www.fasterj.com/articles/regex2.shtml
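One common regex optimization is to compile each pattern once and reuse it, instead of recompiling it for every row. The sketch below shows the idea in plain Java. The source does not show how getPageID is implemented, so the class name, the `pageid` query parameter, and the URL shape are all hypothetical stand-ins.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Illustrative helper, not the author's UDF: extracts a numeric page id from a URL.
final class PageIdExtractor {

    // Compiled once when the class loads, then shared by every call.
    // ASSUMPTION: the id travels in a query parameter named "pageid".
    private static final Pattern PAGE_ID = Pattern.compile("[?&]pageid=(\\d+)");

    static String extractPageId(String url) {
        if (url == null) {
            return null;
        }
        // Creating a Matcher is cheap compared with recompiling the pattern.
        Matcher m = PAGE_ID.matcher(url);
        return m.find() ? m.group(1) : null;
    }

    public static void main(String[] args) {
        System.out.println(extractPageId("http://example.com/list?x=1&pageid=42")); // prints 42
        System.out.println(extractPageId("http://example.com/list"));              // prints null
    }
}
```

Calls such as String.matches or String.replaceAll compile their pattern on every invocation, which adds up quickly when a UDF is evaluated once per row.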

2.2 For UDF tuning, note the following advice:

1 Use class-level private members to save on object instantiation and garbage collection.

2 You also get benefits by matching the argument types with what you would normally expect from upstream. Hive converts Text to String when needed, but if the data normally coming into the method is Text, you could try matching the argument type and see whether it is any faster.

Example:

Before optimization:

    import org.apache.hadoop.hive.ql.exec.UDF;
    import java.net.URLDecoder;

    public final class urldecode extends UDF {

        public String evaluate(final String s) {
            if (s == null) { return null; }
            return getString(s);
        }

        public static String getString(String s) {
            String a;
            try {
                a = URLDecoder.decode(s);
            } catch ( Exception e) {
                a = "";
            }
            return a;
        }

        public static void main(String args[]) {
            String t = "%E5%A4%AA%E5%8E%9F-%E4%B8%89%E4%BA%9A";
            System.out.println( getString(t) );
        }
    }

After optimization:

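    import java.net.URLDecoder;
    import org.apache.hadoop.hive.ql.exec.UDF;
    import org.apache.hadoop.io.Text;

    public final class urldecode extends UDF {

        // Reused across calls so that no new Text object is allocated per row.
        private final Text t = new Text();

        // Takes and returns Text to match what Hive passes in from upstream.
        public Text evaluate(Text s) {
            if (s == null) { return null; }
            try {
                t.set( URLDecoder.decode( s.toString(), "UTF-8" ));
                return t;
            } catch ( Exception e) {
                // Malformed input yields NULL (the earlier version returned "").
                return null;
            }
        }

        // The old test harness is disabled because getString() was removed.
        //public static void main(String args[]) {
        //String t = "%E5%A4%AA%E5%8E%9F-%E4%B8%89%E4%BA%9A";
        //System.out.println( getString(t) );
        //}
    }

3 Implement the function by extending GenericUDF.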

3. On Hive 0.14+, you can enable hive.cache.expr.evaluation, the UDF cache feature.
