
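The previous article in this series, Kubernetes tutorial series (7): Pod scheduling in depth, covered how Kubernetes schedules Pods. It walked through the ways to place a Pod on a node: 1. pinning it with nodeName, 2. directed selection with nodeSelector, and 3. node affinity. This article covers the Pod health-check mechanism.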

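Applications inevitably run into errors while they are running: program exceptions, software faults, hardware failures, network failures and so on. Kubernetes provides a Health Check mechanism. When an application is found to be unhealthy, its container is restarted automatically and the application is removed from its Service, which keeps the application highly available. Kubernetes defines three kinds of probes:

- livenessProbe: checks whether the application is still alive, for example that it is not deadlocked or unresponsive. When the probe fails, the kubelet kills the container and restarts it according to the Pod's restartPolicy.
- readinessProbe: checks whether the application is ready to receive traffic. A Pod that passes is added to the Service endpoints, and a Pod that fails is removed from them.
- startupProbe: checks whether a slow-starting application has finished starting. Until it succeeds the other two probes are not run, so the liveness probe cannot kill the application during startup. It was introduced as an alpha feature in Kubernetes 1.16.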
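The reactions listed above can be written down as a small Python sketch. It is a simplified mental model, not kubelet code. It assumes a single container with `restartPolicy: Always` and all three probes configured. The `Probe` enum and both functions are hypothetical names used only for this illustration.

```python
from enum import Enum


class Probe(Enum):
    """The three probe kinds, named after their Pod spec fields."""
    LIVENESS = "livenessProbe"
    READINESS = "readinessProbe"
    STARTUP = "startupProbe"


def active_probes(has_startup_probe: bool, startup_succeeded: bool) -> set:
    """Return the probes the kubelet runs at the moment (simplified model)."""
    if has_startup_probe and not startup_succeeded:
        # Liveness and readiness checks wait until the startup probe succeeds.
        return {Probe.STARTUP}
    return {Probe.LIVENESS, Probe.READINESS}


def on_failure(probe: Probe) -> str:
    """Describe what happens once a probe reaches its failure threshold."""
    if probe is Probe.READINESS:
        # The container keeps running; only its Service traffic is cut off.
        return "mark the Pod NotReady and remove its IP from the Service endpoints"
    # Liveness and startup failures both kill the container, which is then
    # restarted according to the Pod's restartPolicy.
    return "kill the container and restart it according to restartPolicy"


if __name__ == "__main__":
    print(active_probes(has_startup_probe=True, startup_succeeded=False))
    for probe in Probe:
        print(f"{probe.value}: {on_failure(probe)}")
```

The key design point is the asymmetry. A failed readiness probe only stops traffic, while a failed liveness or startup probe replaces the container.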

Each probe supports three check methods: the command-line exec, httpGet and tcpSocket. exec is the most general and fits most scenarios, tcpSocket fits TCP services, and httpGet fits web services.
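A probe names exactly one of these methods, and the API server rejects a probe that sets more than one. Each method has its own success rule:

- exec: the command exits with code 0.
- httpGet: the HTTP status code is from 200 to 399.
- tcpSocket: a TCP connection is established.

The Python sketch below encodes those rules. `handler_type` and `is_success` are hypothetical helpers, and the probe dict simply mirrors the YAML fields used later in this article.

```python
HANDLERS = ("exec", "httpGet", "tcpSocket")


def handler_type(probe: dict) -> str:
    """Return the single check method configured in a probe spec dict."""
    found = [name for name in HANDLERS if name in probe]
    if len(found) != 1:
        raise ValueError(f"a probe must set exactly one of {HANDLERS}, found {found}")
    return found[0]


def is_success(method: str, outcome) -> bool:
    """Apply the success rule of each method to a raw check outcome.

    outcome is the exit code for exec, the HTTP status code for httpGet,
    and whether the TCP connection was established for tcpSocket.
    """
    if method == "exec":
        return outcome == 0
    if method == "httpGet":
        return 200 <= outcome < 400
    if method == "tcpSocket":
        return bool(outcome)
    raise ValueError(f"unknown method: {method}")


if __name__ == "__main__":
    probe = {"httpGet": {"port": 80, "path": "/index.html"}, "periodSeconds": 10}
    method = handler_type(probe)
    print(method, is_success(method, 200), is_success(method, 404))  # httpGet True False
```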

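All three methods accept the same parameters for controlling when and how often checks run:

- initialDelaySeconds: how long to wait after the container starts before the first probe. This gives the application time to start, so the check does not fail before the application is up. Default 0.
- periodSeconds: interval between probes. Default 10, minimum 1.
- timeoutSeconds: how long a single probe may take before it counts as failed. Default 1, minimum 1.
- successThreshold: number of consecutive successes needed after a failure before the probe counts as successful again. Default 1, and it must be 1 for liveness (and startup) probes.
- failureThreshold: number of consecutive failures after which the probe counts as failed. Default 3.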
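The two threshold fields are easiest to understand as counters of consecutive results. The Python sketch below models them under simplifying assumptions: probes run at perfectly regular times, with no jitter, one at a time. `ProbeState` and `probe_times` are hypothetical names, not kubelet APIs.

The usage part replays the timing of the exec example in this article. The first probe runs after 1 second and then every 5 seconds, and the checked file is deleted 10 seconds after start.

```python
from dataclasses import dataclass, field

# Default values Kubernetes applies when a field is omitted.
DEFAULTS = {
    "initialDelaySeconds": 0,
    "periodSeconds": 10,
    "timeoutSeconds": 1,
    "successThreshold": 1,
    "failureThreshold": 3,
}


@dataclass
class ProbeState:
    """Turns individual probe results into a healthy/unhealthy state."""
    success_threshold: int = DEFAULTS["successThreshold"]
    failure_threshold: int = DEFAULTS["failureThreshold"]
    healthy: bool = True  # liveness-style start; use False to model readiness
    ok_streak: int = field(default=0, init=False)
    fail_streak: int = field(default=0, init=False)

    def record(self, ok: bool) -> bool:
        """Feed one probe result and return the resulting state."""
        if ok:
            self.ok_streak += 1
            self.fail_streak = 0
            if self.ok_streak >= self.success_threshold:
                self.healthy = True
        else:
            self.fail_streak += 1
            self.ok_streak = 0
            if self.fail_streak >= self.failure_threshold:
                self.healthy = False
        return self.healthy


def probe_times(initial_delay: int, period: int, until: int) -> list:
    """Idealised probe times, in seconds after the container starts."""
    return list(range(initial_delay, until + 1, period))


if __name__ == "__main__":
    state = ProbeState()  # failureThreshold defaults to 3
    for t in probe_times(initial_delay=1, period=5, until=30):
        file_exists = t < 10  # the file is deleted 10 s after start
        if not state.record(file_exists):
            print(f"liveness failed at t={t}s, container will be restarted")
            break
```

With these numbers, probes run at t=1, 6, 11, 16 and 21 seconds. The third consecutive failure, and therefore the restart, happens at t=21s in this idealised model.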

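Many applications cannot detect internal faults such as deadlocks while they are running, yet they recover when restarted. For this case Kubernetes provides the liveness check.

Taking exec as an example, the container below creates the file /tmp/liveness-probe.log when it starts and deletes it 10 seconds later. The liveness probe runs `ls -l /tmp/liveness-probe.log` inside the container and judges health by the command's exit code. Once the file is gone the exit code is non-zero. After failureThreshold consecutive failures (3 by default), the kubelet kills the container and restarts it.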
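The exec method needs nothing more than a command and its exit code. The Python sketch below runs the same `ls -l` check against a temporary file on the local machine. It assumes a POSIX system with `ls` on the PATH, and `exec_probe` is a hypothetical helper, not part of any Kubernetes client.

It also shows why an argument list that passes `l` instead of `-l` fails even while the file exists. `ls` treats `l` as a second file name, cannot find it, and exits with a non-zero code.

```python
import os
import subprocess
import tempfile
from typing import List, Optional


def exec_probe(command: List[str], timeout: float = 1.0, cwd: Optional[str] = None) -> bool:
    """Healthy if `command` exits with status 0 within `timeout` seconds."""
    try:
        result = subprocess.run(command, capture_output=True, timeout=timeout, cwd=cwd)
    except subprocess.TimeoutExpired:
        return False  # a probe that does not answer in time counts as failed
    except OSError:
        return False  # e.g. the executable does not exist
    return result.returncode == 0


if __name__ == "__main__":
    # A private working directory guarantees no file named "l" is lying around.
    with tempfile.TemporaryDirectory() as workdir:
        log = os.path.join(workdir, "liveness-probe.log")
        open(log, "w").close()  # like `touch`
        # A generous timeout is used for the demo instead of timeoutSeconds: 1.
        print(exec_probe(["ls", "-l", log], timeout=5, cwd=workdir))  # True
        print(exec_probe(["ls", "l", log], timeout=5, cwd=workdir))   # False: no file named "l"
        os.remove(log)  # like `rm -f`
        print(exec_probe(["ls", "-l", log], timeout=5, cwd=workdir))  # False
```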

```
[root@node-1 demo]# cat centos-exec-liveness-probe.yaml
apiVersion: v1
kind: Pod
metadata:
  name: exec-liveness-probe
  annotations:
    kubernetes.io/description: "exec-liveness-probe"
spec:
  containers:
  - name: exec-liveness-probe
    image: centos:latest
    imagePullPolicy: IfNotPresent
    args:    # container start command; the container lives for about 30s
    - /bin/sh
    - -c
    - touch /tmp/liveness-probe.log && sleep 10 && rm -f /tmp/liveness-probe.log && sleep 20
    livenessProbe:
      exec:    # health check: the exit code of ls -l /tmp/liveness-probe.log decides the container's health
        command:
        - ls
        - -l
        - /tmp/liveness-probe.log
      initialDelaySeconds: 1
      periodSeconds: 5
      timeoutSeconds: 1
[root@node-1 demo]# kubectl apply -f centos-exec-liveness-probe.yaml
pod/exec-liveness-probe created
```

Note: the events below were captured while the manifest still contained `- l` instead of `- -l` as the second element of command. That typo is why the probe output reads `ls: cannot access l: No such file or directory`. It is also why the probe was already failing while /tmp/liveness-probe.log still existed.

```
[root@node-1 demo]# kubectl describe pods exec-liveness-probe | tail
Events:
  Type     Reason     Age                From               Message
  ----     ------     ----               ----               -------
  Normal   Scheduled  28s                default-scheduler  Successfully assigned default/exec-liveness-probe to node-3
  Normal   Pulled     27s                kubelet, node-3    Container image "centos:latest" already present on machine
  Normal   Created    27s                kubelet, node-3    Created container exec-liveness-probe
  Normal   Started    27s                kubelet, node-3    Started container exec-liveness-probe
# the container has started
  Warning  Unhealthy  20s (x2 over 25s)  kubelet, node-3    Liveness probe failed: /tmp/liveness-probe.log
ls: cannot access l: No such file or directory    # the health check ran and failed
  Warning  Unhealthy  15s                kubelet, node-3    Liveness probe failed: ls: cannot access l: No such file or directory
ls: cannot access /tmp/liveness-probe.log: No such file or directory
  Normal   Killing    15s                kubelet, node-3    Container exec-liveness-probe failed liveness probe, will be restarted
# the container is restarted
[root@node-1 demo]# kubectl get pods exec-liveness-probe
NAME                  READY   STATUS    RESTARTS   AGE
exec-liveness-probe   1/1     Running   6          5m19s
```

The httpGet method suits web services. The kubelet sends an HTTP GET request to the container and treats any status code from 200 to 399 as healthy. The following nginx Pod probes /index.html on port 80:

```
[root@node-1 demo]# cat nginx-httpGet-liveness-readiness.yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-httpget-livess-readiness-probe
  annotations:
    kubernetes.io/description: "nginx-httpGet-livess-readiness-probe"
spec:
  containers:
  - name: nginx-httpget-livess-readiness-probe
    image: nginx:latest
    ports:
    - name: http-80-port
      protocol: TCP
      containerPort: 80
    livenessProbe:    # health check implemented with httpGet
      httpGet:
        port: 80
        scheme: HTTP
        path: /index.html
      initialDelaySeconds: 3
      periodSeconds: 10
      timeoutSeconds: 3
[root@node-1 demo]# kubectl apply -f nginx-httpGet-liveness-readiness.yaml
pod/nginx-httpget-livess-readiness-probe created
[root@node-1 demo]# kubectl get pods nginx-httpget-livess-readiness-probe
NAME                                   READY   STATUS    RESTARTS   AGE
nginx-httpget-livess-readiness-probe   1/1     Running   0          6s
```
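To see locally what the kubelet observes for this probe, the Python sketch below serves a directory with Python's built-in `http.server`. The local server stands in for nginx, which is an assumption of the sketch. It applies the httpGet success rule before and after `index.html` is deleted, mirroring the failure test that follows.

`http_get_probe` and `serve` are hypothetical helpers. The request deliberately bypasses any HTTP proxy configured in the environment.

```python
import functools
import http.server
import os
import tempfile
import threading
import urllib.error
import urllib.request

# An opener with an empty ProxyHandler talks to the target directly.
_OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


class QuietHandler(http.server.SimpleHTTPRequestHandler):
    def log_message(self, *args):  # keep the demo output clean
        pass


def serve(directory: str) -> http.server.ThreadingHTTPServer:
    """Serve `directory` on a free localhost port in a background thread."""
    handler = functools.partial(QuietHandler, directory=directory)
    server = http.server.ThreadingHTTPServer(("127.0.0.1", 0), handler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def http_get_probe(url: str, timeout: float = 3.0) -> bool:
    """Healthy if the GET request returns a status code from 200 to 399."""
    try:
        with _OPENER.open(url, timeout=timeout) as resp:
            status = resp.status
    except urllib.error.HTTPError as err:  # 4xx/5xx responses are raised
        status = err.code
    except OSError:  # refused, timed out, ... (URLError subclasses OSError)
        return False
    return 200 <= status < 400


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as html:
        index = os.path.join(html, "index.html")
        with open(index, "w") as f:
            f.write("<h1>Welcome to nginx!</h1>")
        server = serve(html)
        url = f"http://127.0.0.1:{server.server_address[1]}/index.html"
        print(http_get_probe(url))  # True: 200 OK
        os.remove(index)            # like `rm -f /usr/share/nginx/html/index.html`
        print(http_get_probe(url))  # False: 404 Not Found
        server.shutdown()
        server.server_close()
```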

Check which node the Pod is running on:

```
[root@node-1 demo]# kubectl get pods nginx-httpget-livess-readiness-probe -o wide
NAME                                   READY   STATUS    RESTARTS   AGE    IP            NODE     NOMINATED NODE   READINESS GATES
nginx-httpget-livess-readiness-probe   1/1     Running   1          3m9s   10.244.2.19   node-3   <none>           <none>
```

Log in to the Pod and delete the page that the probe requests:

```
[root@node-1 demo]# kubectl exec -it nginx-httpget-livess-readiness-probe /bin/bash
root@nginx-httpget-livess-readiness-probe:/# ls -l /usr/share/nginx/html/index.html
-rw-r--r-- 1 root root 612 Sep 24 14:49 /usr/share/nginx/html/index.html
root@nginx-httpget-livess-readiness-probe:/# rm -f /usr/share/nginx/html/index.html
```

With index.html gone, nginx answers the probe with 404. After three consecutive failures (the default failureThreshold), the kubelet kills the container and starts a new one from the image, which brings index.html back. The Pod keeps running, and its RESTARTS count records the restart:

```
[root@node-1 demo]# kubectl get pods nginx-httpget-livess-readiness-probe
NAME                                   READY   STATUS    RESTARTS   AGE
nginx-httpget-livess-readiness-probe   1/1     Running   1          4m22s
[root@node-1 demo]# kubectl describe pods nginx-httpget-livess-readiness-probe | tail
Events:
  Type     Reason     Age                    From               Message
  ----     ------     ----                   ----               -------
  Normal   Scheduled  5m45s                  default-scheduler  Successfully assigned default/nginx-httpget-livess-readiness-probe to node-3
  Normal   Pulling    3m29s (x2 over 5m45s)  kubelet, node-3    Pulling image "nginx:latest"
  Warning  Unhealthy  3m29s (x3 over 3m49s)  kubelet, node-3    Liveness probe failed: HTTP probe failed with statuscode: 404
  Normal   Killing    3m29s                  kubelet, node-3    Container nginx-httpget-livess-readiness-probe failed liveness probe, will be restarted
  Normal   Pulled     3m25s (x2 over 5m41s)  kubelet, node-3    Successfully pulled image "nginx:latest"
  Normal   Created    3m25s (x2 over 5m40s)  kubelet, node-3    Created container nginx-httpget-livess-readiness-probe
  Normal   Started    3m25s (x2 over 5m40s)  kubelet, node-3    Started container nginx-httpget-livess-readiness-probe
```

The tcpSocket method suits TCP services. The kubelet tries to open a TCP connection to the given port and treats an established connection as healthy. The following Pod probes TCP port 80:

```
[root@node-1 demo]# cat nginx-tcp-liveness.yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-tcp-liveness-probe
  annotations:
    kubernetes.io/description: "nginx-tcp-liveness-probe"
spec:
  containers:
  - name: nginx-tcp-liveness-probe
    image: nginx:latest
    ports:
    - name: http-80-port
      protocol: TCP
      containerPort: 80
    livenessProbe:    # tcpSocket health check, probing TCP port 80
      tcpSocket:
        port: 80
      initialDelaySeconds: 3
      periodSeconds: 10
      timeoutSeconds: 3
[root@node-1 demo]# kubectl apply -f nginx-tcp-liveness.yaml
pod/nginx-tcp-liveness-probe created
[root@node-1 demo]# kubectl get pods nginx-tcp-liveness-probe
NAME                       READY   STATUS    RESTARTS   AGE
nginx-tcp-liveness-probe   1/1     Running   0          6s
```
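A tcpSocket check only asks whether something accepts a TCP connection on the port. The Python sketch below demonstrates this with a local listening socket standing in for nginx, which is an assumption of the sketch. `tcp_probe` is a hypothetical helper.

```python
import socket


def tcp_probe(host: str, port: int, timeout: float = 3.0) -> bool:
    """Healthy if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable or timed out
        return False


if __name__ == "__main__":
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.bind(("127.0.0.1", 0))  # let the OS pick a free port
    listener.listen()
    port = listener.getsockname()[1]
    print(tcp_probe("127.0.0.1", port))  # True: something is listening
    listener.close()                     # like the process holding the port exiting
    print(tcp_probe("127.0.0.1", port))  # False: connection refused
```

Because the check stops at the TCP handshake, a process that still holds the port open but can no longer serve requests passes it. For web services, httpGet is the more informative method.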

Get the node the Pod is running on:

```
[root@node-1 demo]# kubectl get pods nginx-tcp-liveness-probe -o wide
NAME                       READY   STATUS    RESTARTS   AGE   IP            NODE     NOMINATED NODE   READINESS GATES
nginx-tcp-liveness-probe   1/1     Running   0          99s   10.244.2.20   node-3   <none>           <none>
```

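Log in to the Pod. The transcript below was captured with the name of the httpGet Pod, nginx-httpget-livess-readiness-probe. To run this step against the tcpSocket Pod, use `kubectl exec -it nginx-tcp-liveness-probe -- /bin/bash`; the form without `--` is deprecated in newer kubectl releases.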

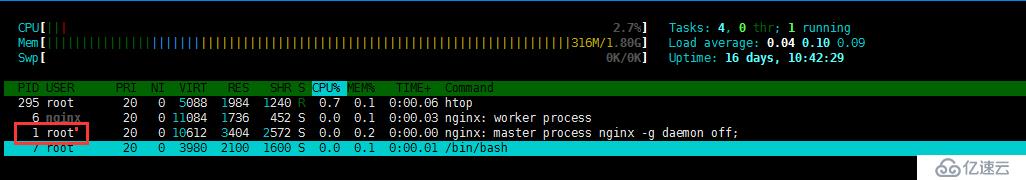

```
[root@node-1 demo]# kubectl exec -it nginx-httpget-livess-readiness-probe /bin/bash
# run apt-get update, then apt-get install htop to install a tool
root@nginx-httpget-livess-readiness-probe:/# apt-get update
Get:1 http://cdn-fastly.deb.debian.org/debian buster InRelease [122 kB]
Get:2 http://security-cdn.debian.org/debian-security buster/updates InRelease [39.1 kB]
Get:3 http://cdn-fastly.deb.debian.org/debian buster-updates InRelease [49.3 kB]
Get:4 http://security-cdn.debian.org/debian-security buster/updates/main amd64 Packages [95.7 kB]
Get:5 http://cdn-fastly.deb.debian.org/debian buster/main amd64 Packages [7899 kB]
Get:6 http://cdn-fastly.deb.debian.org/debian buster-updates/main amd64 Packages [5792 B]
Fetched 8210 kB in 3s (3094 kB/s)
Reading package lists... Done
root@nginx-httpget-livess-readiness-probe:/# apt-get install htop
Reading package lists... Done
Building dependency tree
Reading state information... Done
Suggested packages:
  lsof strace
The following NEW packages will be installed:
  htop
0 upgraded, 1 newly installed, 0 to remove and 5 not upgraded.
Need to get 92.8 kB of archives.
After this operation, 230 kB of additional disk space will be used.
Get:1 http://cdn-fastly.deb.debian.org/debian buster/main amd64 htop amd64 2.2.0-1+b1 [92.8 kB]
Fetched 92.8 kB in 0s (221 kB/s)
debconf: delaying package configuration, since apt-utils is not installed
Selecting previously unselected package htop.
(Reading database ... 7203 files and directories currently installed.)
Preparing to unpack .../htop_2.2.0-1+b1_amd64.deb ...
Unpacking htop (2.2.0-1+b1) ...
Setting up htop (2.2.0-1+b1) ...
root@nginx-httpget-livess-readiness-probe:/# kill 1
root@nginx-httpget-livess-readiness-probe:/# command terminated with exit code 137
```

Check the Pod status:

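```
[root@node-1 demo]# kubectl get pods nginx-tcp-liveness-probe
NAME                       READY   STATUS    RESTARTS   AGE
nginx-tcp-liveness-probe   1/1     Running   1          13m
[root@node-1 demo]# kubectl describe pods nginx-tcp-liveness-probe | tail
Tolerations:     node.kubernetes.io/not-ready:NoExecute for 300s
                 node.kubernetes.io/unreachable:NoExecute for 300s
Events:
  Type    Reason     Age                From               Message
  ----    ------     ----               ----               -------
  Normal  Scheduled  14m                default-scheduler  Successfully assigned default/nginx-tcp-liveness-probe to node-3
  Normal  Pulling    44s (x2 over 14m)  kubelet, node-3    Pulling image "nginx:latest"
  Normal  Pulled     40s (x2 over 14m)  kubelet, node-3    Successfully pulled image "nginx:latest"
  Normal  Created    40s (x2 over 14m)  kubelet, node-3    Created container nginx-tcp-liveness-probe
  Normal  Started    40s (x2 over 14m)  kubelet, node-3    Started container nginx-tcp-liveness-probe
```

`kill 1` sends SIGTERM to PID 1 in the container, the nginx master process. nginx shuts down, the container exits, and the interactive shell most likely goes down with it, hence exit code 137 (128 + 9, SIGKILL). The kubelet then restarts the container, so RESTARTS is now 1.

Note that the events contain no "Liveness probe failed" entry. This restart comes from the container exiting under the Pod's restartPolicy (Always by default), not from the tcpSocket probe. A tcpSocket probe only fails when the container keeps running but nothing accepts connections on port 80.

Readiness checks are used when an application is exposed through a Service. They decide whether the application is ready, that is, whether it can accept traffic forwarded from outside. When the check passes, the Pod is added to the Service's endpoints. When it fails, the Pod is removed from the endpoints, so requests are no longer sent to it and service access is not affected.

The manifest below defines a Pod with both a liveness and a readiness probe. The readiness probe requests /test.html, which the nginx image does not contain, so the Pod starts out not ready. The manifest reuses the name nginx-tcp-liveness-probe, so delete the tcpSocket Pod from the previous step first with `kubectl delete pod nginx-tcp-liveness-probe`.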

```
[root@node-1 demo]# cat httpget-liveness-readiness-probe.yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-tcp-liveness-probe
  annotations:
    kubernetes.io/description: "nginx-tcp-liveness-probe"
  labels:    # labels are required: the Service defined below selects the Pod by them
    app: nginx
spec:
  containers:
  - name: nginx-tcp-liveness-probe
    image: nginx:latest
    ports:
    - name: http-80-port
      protocol: TCP
      containerPort: 80
    livenessProbe:    # liveness probe
      httpGet:
        port: 80
        path: /index.html
        scheme: HTTP
      initialDelaySeconds: 3
      periodSeconds: 10
      timeoutSeconds: 3
    readinessProbe:    # readiness probe
      httpGet:
        port: 80
        path: /test.html
        scheme: HTTP
      initialDelaySeconds: 3
      periodSeconds: 10
      timeoutSeconds: 3
[root@node-1 demo]# cat nginx-service.yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app: nginx
  name: nginx-service
spec:
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: nginx
  type: ClusterIP
[root@node-1 demo]# kubectl apply -f httpget-liveness-readiness-probe.yaml
pod/nginx-tcp-liveness-probe created
[root@node-1 demo]# kubectl apply -f nginx-service.yaml
service/nginx-service created
[root@node-1 ~]# kubectl get pods nginx-httpget-livess-readiness-probe
NAME                                   READY   STATUS    RESTARTS   AGE
nginx-httpget-livess-readiness-probe   1/1     Running   2          153m
```

The `kubectl get pods` output above belongs to the earlier httpGet Pod. The Pod created here, nginx-tcp-liveness-probe, reports READY 0/1 while its readiness probe fails, as the later output in this section shows.

The readiness check fails with a 404 error (last line of the events):

```
[root@node-1 demo]# kubectl describe pods nginx-tcp-liveness-probe | tail
                 node.kubernetes.io/unreachable:NoExecute for 300s
Events:
  Type     Reason     Age                 From               Message
  ----     ------     ----                ----               -------
  Normal   Scheduled  2m6s                default-scheduler  Successfully assigned default/nginx-tcp-liveness-probe to node-3
  Normal   Pulling    2m5s                kubelet, node-3    Pulling image "nginx:latest"
  Normal   Pulled     2m1s                kubelet, node-3    Successfully pulled image "nginx:latest"
  Normal   Created    2m1s                kubelet, node-3    Created container nginx-tcp-liveness-probe
  Normal   Started    2m1s                kubelet, node-3    Started container nginx-tcp-liveness-probe
  Warning  Unhealthy  2s (x12 over 112s)  kubelet, node-3    Readiness probe failed: HTTP probe failed with statuscode: 404
```

Because the Pod is not ready, the Service has no endpoints:

```
[root@node-1 ~]# kubectl describe services nginx-service
Name:              nginx-service
Namespace:         default
Labels:            app=nginx
Annotations:       kubectl.kubernetes.io/last-applied-configuration:
                     {"apiVersion":"v1","kind":"Service","metadata":{"annotations":{},"labels":{"app":"nginx"},"name":"nginx-service","namespace":"default"},"s...
Selector:          app=nginx
Type:              ClusterIP
IP:                10.110.54.40
Port:              http  80/TCP
TargetPort:        80/TCP
Endpoints:         <none>    # the Endpoints list is empty
Session Affinity:  None
Events:            <none>
```

The endpoints object lists the Pod under NotReadyAddresses:

```
[root@node-1 demo]# kubectl describe endpoints nginx-service
Name:         nginx-service
Namespace:    default
Labels:       app=nginx
Annotations:  endpoints.kubernetes.io/last-change-trigger-time: 2019-09-30T14:27:37Z
Subsets:
  Addresses:          <none>
  NotReadyAddresses:  10.244.2.22    # the Pod is in the NotReady state
  Ports:
    Name  Port  Protocol
    ----  ----  --------
    http  80    TCP

Events:  <none>
```

Create test.html in the Pod so that the readiness probe succeeds. The captured command below uses the name of the earlier httpGet Pod. The Pod behind nginx-service is nginx-tcp-liveness-probe (10.244.2.22), so the command to run is `kubectl exec -it nginx-tcp-liveness-probe -- /bin/bash`.

```
[root@node-1 ~]# kubectl exec -it nginx-httpget-livess-readiness-probe /bin/bash
root@nginx-httpget-livess-readiness-probe:/# echo "readiness probe demo" >/usr/share/nginx/html/test.html
```

The readiness check now passes:

```
[root@node-1 demo]# curl http://10.244.2.22/test.html
readiness probe demo
```

Check the endpoints:

```
[root@node-1 demo]# kubectl describe endpoints nginx-service
Name:         nginx-service
Namespace:    default
Labels:       app=nginx
Annotations:  endpoints.kubernetes.io/last-change-trigger-time: 2019-09-30T14:33:01Z
Subsets:
  Addresses:          10.244.2.22    # ready address: moved out of NotReadyAddresses into the normal Addresses list
  NotReadyAddresses:  <none>
  Ports:
    Name  Port  Protocol
    ----  ----  --------
    http  80    TCP
```

Check the Service status:

```
[root@node-1 demo]# kubectl describe services nginx-service
Name:              nginx-service
Namespace:         default
Labels:            app=nginx
Annotations:       kubectl.kubernetes.io/last-applied-configuration:
                     {"apiVersion":"v1","kind":"Service","metadata":{"annotations":{},"labels":{"app":"nginx"},"name":"nginx-service","namespace":"default"},"s...
Selector:          app=nginx
Type:              ClusterIP
IP:                10.110.54.40
Port:              http  80/TCP
TargetPort:        80/TCP
Endpoints:         10.244.2.22:80    # now associated with the endpoint
Session Affinity:  None
Events:            <none>
```

Delete test.html so that the readiness check fails again:

```
[root@node-1 demo]# kubectl exec -it nginx-tcp-liveness-probe /bin/bash
root@nginx-tcp-liveness-probe:/# rm -f /usr/share/nginx/html/test.html
```

Check the Pod's health-check events:

```
[root@node-1 demo]# kubectl get pods nginx-tcp-liveness-probe
NAME                       READY   STATUS    RESTARTS   AGE
nginx-tcp-liveness-probe   0/1     Running   0          11m
[root@node-1 demo]# kubectl describe pods nginx-tcp-liveness-probe | tail
                 node.kubernetes.io/unreachable:NoExecute for 300s
Events:
  Type     Reason     Age                  From               Message
  ----     ------     ----                 ----               -------
  Normal   Scheduled  12m                  default-scheduler  Successfully assigned default/nginx-tcp-liveness-probe to node-3
  Normal   Pulling    12m                  kubelet, node-3    Pulling image "nginx:latest"
  Normal   Pulled     11m                  kubelet, node-3    Successfully pulled image "nginx:latest"
  Normal   Created    11m                  kubelet, node-3    Created container nginx-tcp-liveness-probe
  Normal   Started    11m                  kubelet, node-3    Started container nginx-tcp-liveness-probe
  Warning  Unhealthy  119s (x32 over 11m)  kubelet, node-3    Readiness probe failed: HTTP probe failed with statuscode: 404
```

Check the endpoints:

```
[root@node-1 demo]# kubectl describe endpoints nginx-service
Name:         nginx-service
Namespace:    default
Labels:       app=nginx
Annotations:  endpoints.kubernetes.io/last-change-trigger-time: 2019-09-30T14:38:01Z
Subsets:
  Addresses:          <none>
  NotReadyAddresses:  10.244.2.22    # the health check fails, so the address is moved to NotReady
  Ports:
    Name  Port  Protocol
    ----  ----  --------
    http  80    TCP

Events:  <none>
```

Check the Service status. Its Endpoints list is now empty:

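```
[root@node-1 demo]# kubectl describe services nginx-service
Name:              nginx-service
Namespace:         default
Labels:            app=nginx
Annotations:       kubectl.kubernetes.io/last-applied-configuration:
                     {"apiVersion":"v1","kind":"Service","metadata":{"annotations":{},"labels":{"app":"nginx"},"name":"nginx-service","namespace":"default"},"s...
Selector:          app=nginx
Type:              ClusterIP
IP:                10.110.54.40
Port:              http  80/TCP
TargetPort:        80/TCP
Endpoints:                           # empty
Session Affinity:  None
Events:            <none>
```

TKE (Tencent Kubernetes Engine) also lets you configure health checks for an application. Health checks belong to the individual workloads and can be generated from the console template by opening the advanced settings while defining a workload. Health checks are disabled by default. Both probes introduced above, the liveness probe livenessProbe and the readiness probe readinessProbe, are available and can be enabled separately as needed.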

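After a probe is enabled, its health-check settings can be configured. All three methods described above are supported: command execution check, TCP port check and HTTP request check.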

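Select a check method and fill in its parameters, such as the initial delay, check interval and response timeout. The HTTP request check is used as the example here.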
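Together, these parameters bound how quickly a probe reacts. Here is a back-of-the-envelope estimate; it is an approximation, not the kubelet's exact scheduling. An application that stops responding right after a successful probe is declared failed after about periodSeconds * failureThreshold seconds, plus up to timeoutSeconds if the last request hangs. `worst_case_detection` below is a hypothetical helper encoding that estimate.

```python
def worst_case_detection(period: int, failure_threshold: int, timeout: int) -> int:
    """Approximate seconds from the last successful probe to a failed verdict.

    The failure must be observed failure_threshold times, one probe every
    `period` seconds, and the last probe may wait `timeout` seconds before
    it counts as failed.
    """
    return period * failure_threshold + timeout


if __name__ == "__main__":
    # Values from the TKE-generated manifest:
    # periodSeconds 3, failureThreshold 1, timeoutSeconds 2.
    print(worst_case_detection(period=3, failure_threshold=1, timeout=2))   # 5
    # Kubernetes defaults: periodSeconds 10, failureThreshold 3, timeoutSeconds 1.
    print(worst_case_detection(period=10, failure_threshold=3, timeout=1))  # 31
```

A failureThreshold of 1, as in the generated manifest, reacts fastest. However, it also restarts the container after a single slow or lost response; larger thresholds trade detection speed for tolerance of transient errors.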

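When the workload is created, the settings are turned into a YAML manifest automatically. For the Deployment just created, the generated manifest with its health check is shown below. It uses apiVersion apps/v1beta2, which was removed in Kubernetes 1.16; use apps/v1 on current clusters.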

```
apiVersion: apps/v1beta2
kind: Deployment
metadata:
  annotations:
    deployment.kubernetes.io/revision: "1"
    description: tke-health-check-demo
  creationTimestamp: "2019-09-30T12:28:42Z"
  generation: 1
  labels:
    k8s-app: tke-health-check-demo
    qcloud-app: tke-health-check-demo
  name: tke-health-check-demo
  namespace: default
  resourceVersion: "2060365354"
  selfLink: /apis/apps/v1beta2/namespaces/default/deployments/tke-health-check-demo
  uid: d6cf1f25-e37d-11e9-87fd-567eb17a3218
spec:
  minReadySeconds: 10
  progressDeadlineSeconds: 600
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: tke-health-check-demo
      qcloud-app: tke-health-check-demo
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 0
    type: RollingUpdate
  template:
    metadata:
      creationTimestamp: null
      labels:
        k8s-app: tke-health-check-demo
        qcloud-app: tke-health-check-demo
    spec:
      containers:
      - image: nginx:latest
        imagePullPolicy: Always
        livenessProbe:    # health check generated from the console template
          failureThreshold: 1
          httpGet:
            path: /
            port: 80
            scheme: HTTP
          periodSeconds: 3
          successThreshold: 1
          timeoutSeconds: 2
        name: tke-health-check-demo
        resources:
          limits:
            cpu: 500m
            memory: 1Gi
          requests:
            cpu: 250m
            memory: 256Mi
        securityContext:
          privileged: false
          procMount: Default
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
      dnsPolicy: ClusterFirst
      imagePullSecrets:
      - name: qcloudregistrykey
      - name: tencenthubkey
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      terminationGracePeriodSeconds: 30
```

This article covered the two Kubernetes health-check probes, livenessProbe and readinessProbe. livenessProbe performs liveness checks on the running state inside the container. readinessProbe performs readiness checks that decide whether the container can accept traffic.

Readiness is usually combined with a Service's endpoints. The Pod is added to the endpoints once it is ready and removed when the readiness check fails, which gives Services their health-checking and service-probing behavior.

Probes offer three check methods for different scenarios: 1. exec runs a command or shell script to perform the check; 2. tcpSocket probes a port by establishing a TCP connection; 3. httpGet probes by sending an HTTP request. Practicing with these examples is the best way to master them.

Health checks: https://kubernetes.io/docs/tasks/configure-pod-container/configure-liveness-readiness-startup-probes/

Configuring health checks in TKE: https://cloud.tencent.com/document/product/457/32815

When your talent cannot yet support your ambition, it is time to settle down and study.
