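As a DBA of many years, I find Hive the most approachable product in the Hadoop family. SQL is familiar ground, after all, and there is no more pain of hand-writing MapReduce jobs.

First, a brief introduction to Hive.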

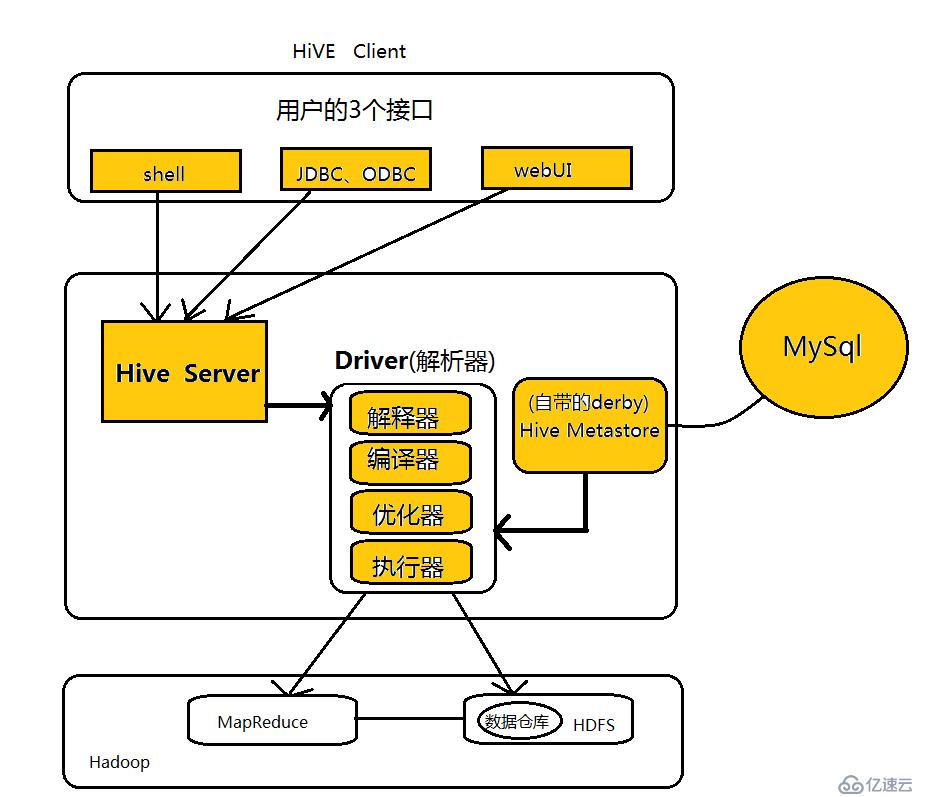

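Hive is a data warehouse solution built on Hadoop. Because Hadoop itself scales well and tolerates faults well for both data storage and computation, a data warehouse built with Hive inherits these properties.

Put simply, Hive adds a SQL layer on top of Hadoop: it translates SQL into MapReduce jobs that run on Hadoop. Data developers and analysts can then use SQL for statistics and analysis over massive data sets, without the trouble of writing MapReduce programs in a programming language.

Now for the Hive installation. The prerequisite is that HDFS and YARN are already installed and running. For the HDFS installation, see:

Hadoop Cluster (1): Zookeeper Setup

Hadoop Cluster (2): HDFS Setup

Hadoop Cluster (3): Hbase Setup

For the Hive software I used hive-1.2.1, which can no longer be downloaded. Download a newer version as needed.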

http://hive.apache.org/downloads.html

tar -xzvf apache-hive-1.2.1-bin.tar.gz

##javax.jdo.option.ConnectionURL: change the value for this name to the MySQL address, for example:

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://localhost:3306/hive?createDatabaseIfNotExist=true</value>

</property>

##javax.jdo.option.ConnectionDriverName: change the value for this name to the MySQL driver class. After the change, mine looks like this:

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

</property>

##javax.jdo.option.ConnectionUserName: change the value to the MySQL login name:

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

</property>

##javax.jdo.option.ConnectionPassword: change the value to the MySQL login password:

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>change to your password</value>

</property>

##hive.metastore.schema.verification: change the value to false:

<property>

<name>hive.metastore.schema.verification</name>

<value>false</value>

</property>

Create the required directories:

mkdir -p /data1/hiveLogs-security/;chown -R hive:hadoop /data1/hiveLogs-security/

mkdir -p /data1/hiveData-security/;chown -R hive:hadoop /data1/hiveData-security/

mkdir -p /tmp/hive-security/operation_logs; chown -R hive:hadoop /tmp/hive-security/operation_logs

Create the HDFS directories:

hadoop fs -mkdir /tmp

hadoop fs -mkdir -p /user/hive/warehouse

hadoop fs -chmod g+w /tmp

hadoop fs -chmod g+w /user/hive/warehouse

Initialize Hive:

[hive@aznbhivel01 ~]$ schematool -initSchema -dbType mysql

Metastore connection URL: jdbc:mysql://172.16.13.88:3306/hive_beta?useUnicode=true&characterEncoding=UTF-8&createDatabaseIfNotExist=true

Metastore Connection Driver : com.mysql.jdbc.Driver

Metastore connection User: envision

Starting metastore schema initialization to 1.2.0

Initialization script hive-schema-1.2.0.mysql.sql

Initialization script completed

schemaTool completed

-7. Fix: start the Hive Metastore Server process by running the command below, which ran into the next problem:

[hive@aznbhivel01 ~]$ Starting Hive Metastore Server

hiveorg.apache.thrift.transport.TTransportException: java.io.IOException: Login failure for hive/aznbhivel01.liang.com@ENVISIONCN.COM from keytab /etc/security/keytab/hive.keytab: javax.security.auth.login.LoginException: Unable to obtain password from user

at org.apache.hadoop.hive.thrift.HadoopThriftAuthBridge$Server.<init>(HadoopThriftAuthBridge.java:358)

at org.apache.hadoop.hive.thrift.HadoopThriftAuthBridge.createServer(HadoopThriftAuthBridge.java:102)

at org.apache.hadoop.hive.metastore.HiveMetaStore.startMetaStore(HiveMetaStore.java:5990)

at org.apache.hadoop.hive.metastore.HiveMetaStore.main(HiveMetaStore.java:5909)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:606)

at org.apache.hadoop.util.RunJar.run(RunJar.java:221)

at org.apache.hadoop.util.RunJar.main(RunJar.java:136)

Caused by: java.io.IOException: Login failure for hive/aznbhivel01.liang.com@ENVISIONCN.COM from keytab /etc/security/keytab/hive.keytab: javax.security.auth.login.LoginException: Unable to obtain password from user

-8. The keytab could not be found; fix the permissions on the hive.keytab file.

$ ll /etc/security/keytab/

total 100

-r-------- 1 hbase hadoop 18002 Dec 5 17:06 hbase.keytab

-r-------- 1 hdfs hadoop 18002 Dec 5 17:04 hdfs.keytab

-r-------- 1 hive hadoop 18002 Dec 5 17:06 hive.keytab

-r-------- 1 mapred hadoop 18002 Dec 5 17:06 mapred.keytab

-r-------- 1 yarn hadoop 18002 Dec 5 17:06 yarn.keytab

-9. Restart the metastore again:

$ hive --service metastore &

[2] 41285

[1] Killed hive --service metastore (wd: ~)

(wd now: /etc/security)

[hive@aznbhivel01 security]$ Starting Hive Metastore Server

-10. Then start hiveserver:

hive --service hiveserver2 &

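-11. Startup still failed, which was puzzling. The error itself is clear: the Kerberos KDC cannot find this server. Yet kinit had already been run successfully, and earlier lines in the log also report a successful login.

Regenerating the keytab did not help either. Finally I wondered whether the cause was that hive-site.xml used an IP address. Changing it to the hostname, "thrift://aznbhivel01.liang.com:9083", solved the problem.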
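The fix above comes down to whether the host in hive.metastore.uris is a name or an IP literal. A likely explanation, offered here as an assumption rather than something the logs prove, is that the client derives the service principal from the host in the URI, so an IP address produces a principal the KDC does not know. A minimal sketch of a pre-flight check follows; the function name `uri_host_is_ipv4` is made up for illustration:

```shell
# Hypothetical helper: succeeds when the host part of a thrift:// URI
# is made only of digits and dots (with at least three dots), i.e. it
# looks like an IPv4 literal rather than a hostname.
uri_host_is_ipv4() {
  host="${1#thrift://}"   # drop the scheme
  host="${host%%:*}"      # drop the port
  case "$host" in
    ''|*[!0-9.]*) return 1 ;;   # empty, or contains something other than digits and dots
    *.*.*.*)      return 0 ;;   # digits and dots, at least three dots
    *)            return 1 ;;
  esac
}

# Example with the URI that appears in the failing log.
if uri_host_is_ipv4 "thrift://172.16.13.88:9083"; then
  echo "hive.metastore.uris uses an IP address; consider the FQDN instead"
fi
```

The check is deliberately loose: it only separates "looks like an IP" from "looks like a name" and does not validate the address itself.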

The configuration that needed to change:

<name>hive.metastore.uris</name>

<value>thrift://aznbhivel01.liang.com:9083</value>

<description>Thrift URI for the remote metastore. Used by metastore client to connect to remote metastore.</description>

Log output:

2017-12-07 16:16:04,300 DEBUG [main]: security.UserGroupInformation (UserGroupInformation.java:login(221)) - hadoop login

2017-12-07 16:16:04,302 DEBUG [main]: security.UserGroupInformation (UserGroupInformation.java:commit(156)) - hadoop login commit

2017-12-07 16:16:04,303 DEBUG [main]: security.UserGroupInformation (UserGroupInformation.java:commit(170)) - using kerberos user:hive/aznbhivel01.liang.com@LIANG.COM

2017-12-07 16:16:04,303 DEBUG [main]: security.UserGroupInformation (UserGroupInformation.java:commit(192)) - Using user: "hive/aznbhivel01.liang.com@LIANG.COM" with name hive/aznbhivel01.liang.com@LIANG.COM

2017-12-07 16:16:04,303 DEBUG [main]: security.UserGroupInformation (UserGroupInformation.java:commit(202)) - User entry: "hive/aznbhivel01.liang.com@LIANG.COM"

2017-12-07 16:16:04,304 INFO [main]: security.UserGroupInformation (UserGroupInformation.java:loginUserFromKeytab(965)) - Login successful for user hive/aznbhivel01.liang.com@LIANG.COM using keytab file /etc/security/keytab/hive.keytab

........

Client Addresses Null

2017-12-07 16:16:04,408 INFO [main]: hive.metastore (HiveMetaStoreClient.java:open(386)) - Trying to connect to metastore with URI thrift://172.16.13.88:9083

2017-12-07 16:16:04,446 DEBUG [main]: security.UserGroupInformation (UserGroupInformation.java:logPrivilegedAction(1681)) - PrivilegedAction as:hive/aznbhivel01.liang.com@LIANG.COM (auth:KERBEROS) from:org.apache.hadoop.hive.thrift.client.TUGIAssumingTransport.open(TUGIAssumingTransport.java:49)

2017-12-07 16:16:04,448 DEBUG [main]: transport.TSaslTransport (TSaslTransport.java:open(261)) - opening transport org.apache.thrift.transport.TSaslClientTransport@5bb4d6c0

2017-12-07 16:16:04,460 ERROR [main]: transport.TSaslTransport (TSaslTransport.java:open(315)) - SASL negotiation failure

javax.security.sasl.SaslException: GSS initiate failed [Caused by GSSException: No valid credentials provided (Mechanism level: Server not found in Kerberos database (7) - UNKNOWN_SERVER)]

at com.sun.security.sasl.gsskerb.GssKrb5Client.evaluateChallenge(GssKrb5Client.java:212)

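at org.apache.thrift.transport.TSaslClientTransport.handleSaslStartMessage(TSaslClientTransport.java:94)

-12. Next came a permission error, with no indication of which permission was wrong. Files written by hive are already visible in HDFS, so the permissions there should be correct. The analysis continues: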

2017-12-07 20:30:59,168 DEBUG [IPC Client (1612726596) connection to AZcbetannL02.liang.com/172.16.13.77:9000 from hive/aznbhivel01.liang.com@LIANG.COM]: ipc.Client (Client.java:receiveRpcResponse(1089)) - IPC Client (1612726596) connection to AZcbetannL02.liang.com/172.16.13.77:9000 from hive/aznbhivel01.liang.com@LIANG.COM got value #8

2017-12-07 20:30:59,168 DEBUG [main]: ipc.ProtobufRpcEngine (ProtobufRpcEngine.java:invoke(250)) - Call: getFileInfo took 2ms

2017-12-07 20:30:59,168 INFO [main]: server.HiveServer2 (HiveServer2.java:stop(305)) - Shutting down HiveServer2

2017-12-07 20:30:59,169 INFO [main]: server.HiveServer2 (HiveServer2.java:startHiveServer2(368)) - Exception caught when calling stop of HiveServer2 before retrying start

java.lang.NullPointerException

at org.apache.hive.service.server.HiveServer2.stop(HiveServer2.java:309)

at org.apache.hive.service.server.HiveServer2.startHiveServer2(HiveServer2.java:366)

at org.apache.hive.service.server.HiveServer2.access$700(HiveServer2.java:74)

at org.apache.hive.service.server.HiveServer2$StartOptionExecutor.execute(HiveServer2.java:588)

at org.apache.hive.service.server.HiveServer2.main(HiveServer2.java:461)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:606)

at org.apache.hadoop.util.RunJar.run(RunJar.java:221)

at org.apache.hadoop.util.RunJar.main(RunJar.java:136)

2017-12-07 20:30:59,170 WARN [main]: server.HiveServer2 (HiveServer2.java:startHiveServer2(376)) - Error starting HiveServer2 on attempt 1, will retry in 60 seconds

java.lang.RuntimeException: Error applying authorization policy on hive configuration: java.lang.RuntimeException: java.io.IOException: Permission denied

at org.apache.hive.service.cli.CLIService.init(CLIService.java:114)

at org.apache.hive.service.CompositeService.init(CompositeService.java:59)

at org.apache.hive.service.server.HiveServer2.init(HiveServer2.java:100)

at org.apache.hive.service.server.HiveServer2.startHiveServer2(HiveServer2.java:345)

at org.apache.hive.service.server.HiveServer2.access$700(HiveServer2.java:74)

at org.apache.hive.service.server.HiveServer2$StartOptionExecutor.execute(HiveServer2.java:588)

at org.apache.hive.service.server.HiveServer2.main(HiveServer2.java:461)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:606)

at org.apache.hadoop.util.RunJar.run(RunJar.java:221)

at org.apache.hadoop.util.RunJar.main(RunJar.java:136)

Caused by: java.lang.RuntimeException: java.lang.RuntimeException: java.io.IOException: Permission denied

at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:528)

at org.apache.hive.service.cli.CLIService.applyAuthorizationConfigPolicy(CLIService.java:127)

at org.apache.hive.service.cli.CLIService.init(CLIService.java:112)

... 12 more

Caused by: java.lang.RuntimeException: java.io.IOException: Permission denied

at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:521)

... 14 more

Caused by: java.io.IOException: Permission denied

at java.io.UnixFileSystem.createFileExclusively(Native Method)

at java.io.File.createNewFile(File.java:1006)

at java.io.File.createTempFile(File.java:1989)

at org.apache.hadoop.hive.ql.session.SessionState.createTempFile(SessionState.java:824)

at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:519)

... 14 more

A Google search turned up the strace approach for finding out which permission is the problem:

strace is your friend if you are on Linux. Try the following from the

shell in which you are starting hive...

strace -f -e trace=file service hive-server2 start 2>&1 | grep ermission

You should see the file it can't read/write.

In the end, the permissions on the /tmp/hive-security path turned out to be wrong. After fixing them, this problem went away.
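The bottom of the stack trace shows java.io.File.createTempFile failing, so the same failure can be reproduced from the shell by trying to create a file in the scratch directory. A minimal sketch, assuming it is run as the same user that starts HiveServer2; `can_create_temp_file` is a made-up helper name:

```shell
# Hypothetical helper: succeeds only if the current user can create
# (and then remove) a file inside the given directory.
can_create_temp_file() {
  probe=$(mktemp "$1/probe.XXXXXX" 2>/dev/null) || return 1
  rm -f "$probe"
}

# Example: check the local scratch directory used in this setup.
if can_create_temp_file /tmp/hive-security; then
  echo "scratch dir is writable"
else
  echo "cannot create files in /tmp/hive-security; check owner and mode"
fi
```

This is quicker than strace when a specific directory is already suspected; strace remains the better tool when the failing path is unknown.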

<name>hive.exec.local.scratchdir</name>

<value>/tmp/hive-security</value>

-13. The next problem:

2017-12-07 20:58:56,749 DEBUG [IPC Parameter Sending Thread #0]: ipc.Client (Client.java:run(1032)) - IPC Client (1612726596) connection to AZcbetannL02.liang.com/172.16.13.77:9000 from hive/aznbhivel01.liang.com@LIANG.COM sending #8

2017-12-07 20:58:56,750 DEBUG [IPC Client (1612726596) connection to AZcbetannL02.liang.com/172.16.13.77:9000 from hive/aznbhivel01.liang.com@LIANG.COM]: ipc.Client (Client.java:receiveRpcResponse(1089)) - IPC Client (1612726596) connection to AZcbetannL02.liang.com/172.16.13.77:9000 from hive/aznbhivel01.liang.com@LIANG.COM got value #8

2017-12-07 20:58:56,750 DEBUG [main]: ipc.ProtobufRpcEngine (ProtobufRpcEngine.java:invoke(250)) - Call: getFileInfo took 2ms

2017-12-07 20:58:56,763 ERROR [main]: session.SessionState (SessionState.java:setupAuth(749)) - Error setting up authorization: java.lang.ClassNotFoundException: org.apache.ranger.authorization.hive.authorizer.RangerHiveAuthorizerFactory

org.apache.hadoop.hive.ql.metadata.HiveException: java.lang.ClassNotFoundException: org.apache.ranger.authorization.hive.authorizer.RangerHiveAuthorizerFactory

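at org.apache.hadoop.hive.ql.metadata.HiveUtils.getAuthorizeProviderManager(HiveUtils.java:391)

Googling the keyword org.apache.ranger.authorization.hive.authorizer.RangerHiveAuthorizerFactory

turned up this article:

https://www.cnblogs.com/wyl9527/p/6835620.html

After running the start command, the hive service needs to be restarted.

After the installation finishes:

you will see several new configuration files.

Modify the hiveserver2-site.xml file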

<property>

<name>hive.security.authorization.enabled</name>

<value>true</value>

</property>

<property>

<name>hive.security.authorization.manager</name>

<value>org.apache.ranger.authorization.hive.authorizer.RangerHiveAuthorizerFactory</value>

</property>

<property>

<name>hive.security.authenticator.manager</name>

<value>org.apache.hadoop.hive.ql.security.SessionStateUserAuthenticator</value>

</property>

<property>

<name>hive.conf.restricted.list</name>

<value>hive.security.authorization.enabled,hive.security.authorization.manager,hive.security.authenticator.manager</value>

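</property>

We are not currently using Ranger for security, so I decided to disable it. How?

I simply deleted the hiveserver2-site.xml file. That moved things one step forward: hiveserver2 started successfully. I could get into hive, and then hit the next error.

-14. Hive now starts normally and queries can be run through the hive command. The command reports OK, but the query result is not returned: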

[hive@aznbhivel01 hive]$ hive

Logging initialized using configuration in file:/usr/local/hadoop/apache-hive-1.2.1/conf/hive-log4j.properties

hive> show databases;

OK

Failed with exception java.io.IOException:java.lang.RuntimeException: Error in configuring object

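Time taken: 0.867 seconds

A fix found through Baidu:

http://blog.csdn.net/wodedipang_/article/details/72720257

But my configuration did not match the situation described in that article. I suspected a problem such as the permissions on this directory: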

<property>

<name>hive.exec.local.scratchdir</name>

<value>/tmp/hive-security</value>

<description>Local scratch space for Hive jobs</description>

</property>

Finally, hive.log contained the following error, which shows that a jar is missing:

Caused by: java.lang.IllegalArgumentException: Compression codec com.hadoop.compression.lzo.LzoCodec not found.

at org.apache.hadoop.io.compress.CompressionCodecFactory.getCodecClasses(CompressionCodecFactory.java:139)

at org.apache.hadoop.io.compress.CompressionCodecFactory.<init>(CompressionCodecFactory.java:179)

at org.apache.hadoop.mapred.TextInputFormat.configure(TextInputFormat.java:45)

... 26 more

Caused by: java.lang.ClassNotFoundException: Class com.hadoop.compression.lzo.LzoCodec not found

at org.apache.hadoop.conf.Configuration.getClassByName(Configuration.java:2101)

at org.apache.hadoop.io.compress.CompressionCodecFactory.getCodecClasses(CompressionCodecFactory.java:132)

... 28 more

-15. The cause is that Hadoop's core-site.xml configures the lzo.LzoCodec compression codec, so the corresponding jar must be present for MapReduce to run properly:

<property>

<name>io.compression.codecs</name><value>org.apache.hadoop.io.compress.DefaultCodec,org.apache.hadoop.io.compress.GzipCodec,org.apache.hadoop.io.compress.BZip2Codec,com.hadoop.compression.lzo.LzoCodec,org.apache.hadoop.io.compress.SnappyCodec,com.hadoop.compression.lzo.LzopCodec</value>

</property>

<property>

<name>io.compression.codec.lzo.class</name>

<value>com.hadoop.compression.lzo.LzoCodec</value>

</property>

<property>

<name>hadoop.http.staticuser.user</name>

<value>hadoop</value>

</property>

<property>

<name>lzo.text.input.format.ignore.nonlzo</name>

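<value>false</value>

</property>

Copying the required jar over from another working environment solved the problem.

Note that the lzo jar is needed not only on the Hive server but on every YARN/MapReduce machine. Otherwise any MapReduce job that involves lzo compression will fail, not only the jobs launched by Hive.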
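Since the jar has to be present on every node, a small check that can be run on each machine may help. A minimal sketch; `has_lzo_jar` is a made-up helper name, and the directory passed to it should be wherever the Hadoop common jars live on your nodes:

```shell
# Hypothetical helper: succeeds if the given directory holds at least
# one hadoop-lzo jar.
has_lzo_jar() {
  for jar in "$1"/hadoop-lzo-*.jar; do
    # When nothing matches, the loop sees the unexpanded pattern,
    # which does not exist as a file.
    if [ -e "$jar" ]; then
      return 0
    fi
  done
  return 1
}

# Example, using the directory from this setup:
#   has_lzo_jar /usr/local/hadoop/hadoop-release/share/hadoop/common \
#     || echo "hadoop-lzo jar missing on $(hostname)"
```

How the check is pushed to every node (ssh loop, configuration management, and so on) is left open here because it depends on the cluster.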

# pwd

/usr/local/hadoop/hadoop-release/share/hadoop/common

# ls |grep lzo

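hadoop-lzo-0.4.21-SNAPSHOT.jar

With that, the Hive installation is complete.

After climbing out of one pit after another, here is what the output of a Hive query looks like: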

hive> select count(*) from test.testxx;

Query ID = hive_20171224121853_d96ed531-7e09-438d-b383-bc2a715753fc

Total jobs = 1

Launching Job 1 out of 1

Number of reduce tasks determined at compile time: 1

Starting Job = job_1513915190261_0008, Tracking URL = https://aznbrmnl02.liang.com:8089/proxy/application_1513915190261_0008/

Kill Command = /usr/local/hadoop/hadoop-2.7.1/bin/hadoop job -kill job_1513915190261_0008

Hadoop job information for Stage-1: number of mappers: 3; number of reducers: 1

2017-12-24 12:19:11,332 Stage-1 map = 0%, reduce = 0%

2017-12-24 12:19:19,666 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 3.85 sec

2017-12-24 12:19:30,064 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 5.43 sec

MapReduce Total cumulative CPU time: 5 seconds 430 msec

Ended Job = job_1513915190261_0008

MapReduce Jobs Launched:

Stage-Stage-1: Map: 3 Reduce: 1 Cumulative CPU: 5.43 sec HDFS Read: 32145 HDFS Write: 5 SUCCESS

Total MapReduce CPU Time Spent: 5 seconds 430 msec

OK

2526

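Time taken: 38.834 seconds, Fetched: 1 row(s)

Points to note

-1. The MySQL character set here is latin1. That works for installing Hive, but later on, especially when Chinese text is stored, the data will not display correctly. I therefore recommend changing the character set to UTF8 once Hive is installed.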

mysql> SHOW VARIABLES LIKE 'character%';

+--------------------------+----------------------------+

| Variable_name | Value |

+--------------------------+----------------------------+

| character_set_client | utf8 |

| character_set_connection | utf8 |

| character_set_database | latin1 |

| character_set_filesystem | binary |

| character_set_results | utf8 |

| character_set_server | latin1 |

| character_set_system | utf8 |

| character_sets_dir | /usr/share/mysql/charsets/ |

+--------------------------+----------------------------+

8 rows in set (0.00 sec)

-2. Change the character set:

# vi /etc/my.cnf

[mysqld]

datadir=/var/lib/mysql

socket=/var/lib/mysql/mysql.sock

default-character-set = utf8

character_set_server = utf8

# Disabling symbolic-links is recommended to prevent assorted security risks

symbolic-links=0

log-error=/var/log/mysqld.log

pid-file=/var/run/mysqld/mysqld.pid

-3. After the change:

mysql> SHOW VARIABLES LIKE 'character%';

+--------------------------+----------------------------+

| Variable_name | Value |

+--------------------------+----------------------------+

| character_set_client | utf8 |

| character_set_connection | utf8 |

| character_set_database | utf8 |

| character_set_filesystem | binary |

| character_set_results | utf8 |

| character_set_server | utf8 |

| character_set_system | utf8 |

| character_sets_dir | /usr/share/mysql/charsets/ |

+--------------------------+----------------------------+

8 rows in set (0.00 sec)

Ways to connect to Hive

a. Connect directly with the hive command. If Kerberos is enabled, remember to authenticate with kinit first.

su - hive

hive

b. Connect with beeline:

beeline -u "jdbc:hive2://hive-hostname:10000/default;principal=hive/_HOST@LIANG.COM"

For a hiveserver2 HA architecture, connect as follows:

beeline -u "jdbc:hive2://zookeeper1-ip:2181,zookeeper2-ip:2181,zookeeper3-ip:2181/;serviceDiscoveryMode=zooKeeper;zooKeeperNamespace=hiveserver2_zk;principal=hive/_HOST@LIANG.COM"

Without Kerberos or similar security authentication, beeline must be told which user to log in as when connecting to Hive.

beeline -u "jdbc:hive2://127.0.0.1:10000/default;" -n hive
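The direct (non-ZooKeeper) URLs above differ only in whether a principal is appended. A minimal sketch of a helper that builds them, so the two cases are not typed by hand; `beeline_url` is a made-up name, not part of beeline:

```shell
# Hypothetical helper: prints a HiveServer2 JDBC URL.
# $1 host, $2 port, $3 database, $4 optional Kerberos principal
beeline_url() {
  url="jdbc:hive2://$1:$2/$3"
  if [ -n "${4:-}" ]; then
    url="$url;principal=$4"
  fi
  printf '%s\n' "$url"
}

# Kerberos case, then the no-authentication case.
beeline_url hive-hostname 10000 default 'hive/_HOST@LIANG.COM'
beeline_url 127.0.0.1 10000 default
```

It would be used as `beeline -u "$(beeline_url 127.0.0.1 10000 default)" -n hive`. The ZooKeeper discovery form is left out because its parameters depend on the HA setup.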

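One more question: does Hive always go through MapReduce when it executes a query?

Since Hive 0.10.0, for the sake of efficiency, simple queries (a plain select without count, sum, or group by) do not go through map/reduce. Hive reads the HDFS files directly and applies the filter itself. The benefit is that no MR job is launched, so execution is considerably faster. The downside is a less friendly user experience: with a large amount of data you may wait a long time with no feedback at all.

Changing this is easy. hive-site.xml has a configuration parameter called

hive.fetch.task.conversion

Set it to more and simple queries skip map/reduce. Set it to minimal and only the most trivial queries (SELECT *, filters on partition columns, LIMIT) skip it, so most other simple selects still launch a map/reduce job. Newer versions also accept none, which sends every query through map/reduce.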
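To compare the modes without editing hive-site.xml, the parameter can also be set for a single invocation of the hive CLI through its `--hiveconf` option. A minimal sketch; the wrapper name `run_with_fetch_mode` is made up for illustration:

```shell
# Hypothetical wrapper: run one query with a given
# hive.fetch.task.conversion value, for this invocation only.
# $1 mode (none, minimal, or more), $2 the HiveQL text
run_with_fetch_mode() {
  hive --hiveconf "hive.fetch.task.conversion=$1" -e "$2"
}

# Example (needs a working hive CLI on the PATH):
#   run_with_fetch_mode more    'select * from test.testxx limit 10'
#   run_with_fetch_mode minimal 'select * from test.testxx limit 10'
```

Whether a job is launched shows up in the output: a run that goes through map/reduce prints the "Launching Job" lines seen earlier, while a fetch-only run returns rows directly.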

----Update 2018.2.11-----

To re-initialize Hive's MySQL database, first log in to MySQL and drop the existing database. Otherwise you will hit the error below.

# su - hive

[hive@aznbhivel01 ~]$ schematool -initSchema -dbType mysql

Metastore connection URL: jdbc:mysql://10.24.101.88:3306/hive_beta?useUnicode=true&characterEncoding=UTF-8&createDatabaseIfNotExist=true

Metastore Connection Driver : com.mysql.jdbc.Driver

Metastore connection User: envision

Starting metastore schema initialization to 1.2.0

Initialization script hive-schema-1.2.0.mysql.sql

Error: Specified key was too long; max key length is 3072 bytes (state=42000,code=1071)

org.apache.hadoop.hive.metastore.HiveMetaException: Schema initialization FAILED! Metastore state would be inconsistent !!

schemaTool failed

After dropping the old hive database, running the initialization again succeeded right away:

[hive@aznbhivel01 ~]$ schematool -initSchema -dbType mysql

Metastore connection URL: jdbc:mysql://10.24.101.88:3306/hive_beta?useUnicode=true&characterEncoding=UTF-8&createDatabaseIfNotExist=true

Metastore Connection Driver : com.mysql.jdbc.Driver

Metastore connection User: envision

Starting metastore schema initialization to 1.2.0

Initialization script hive-schema-1.2.0.mysql.sql

Initialization script completed

schemaTool completed

Starting and stopping Hive:

1. Start the metastore

nohup /usr/local/hadoop/hive-release/bin/hive --service metastore --hiveconf hive.log4j.file=/usr/local/hadoop/hive-release/conf/meta-log4j.properties > /data1/hiveLogs-security/metastore.log 2>&1 &

2. Start hiveserver2

nohup /usr/local/hadoop/hive-release/bin/hive --service hiveserver2 > /data1/hiveLogs-security/hiveserver2.log 2>&1 &

3. Stop HiveServer2

kill -9 $(ps ax --cols 2000 | grep java | grep HiveServer2 | grep -v 'ps ax' | awk '{print $1;}')

4. Stop the metastore

kill -9 $(ps ax --cols 2000 | grep java | grep MetaStore | grep -v 'ps ax' | awk '{print $1;}')
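The two stop commands share the same pipeline and differ only in the class name they search for. A minimal sketch that factors the pipeline into a filter, which also makes it possible to inspect the PIDs before killing anything; `java_pids_matching` is a made-up name:

```shell
# Hypothetical helper: reads `ps ax` output on stdin and prints the PID
# (first column) of every java process whose command line contains $1.
java_pids_matching() {
  grep java | grep -- "$1" | grep -v 'ps ax' | awk '{print $1}'
}

# Example (commented out so that nothing is killed by accident):
#   pids=$(ps ax --cols 2000 | java_pids_matching HiveServer2)
#   [ -n "$pids" ] && kill $pids
```

The example uses a plain kill, which sends SIGTERM and gives the process a chance to shut down cleanly. Falling back to kill -9 only when the process does not exit is a suggestion, not something the original steps require.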
