
In this section:

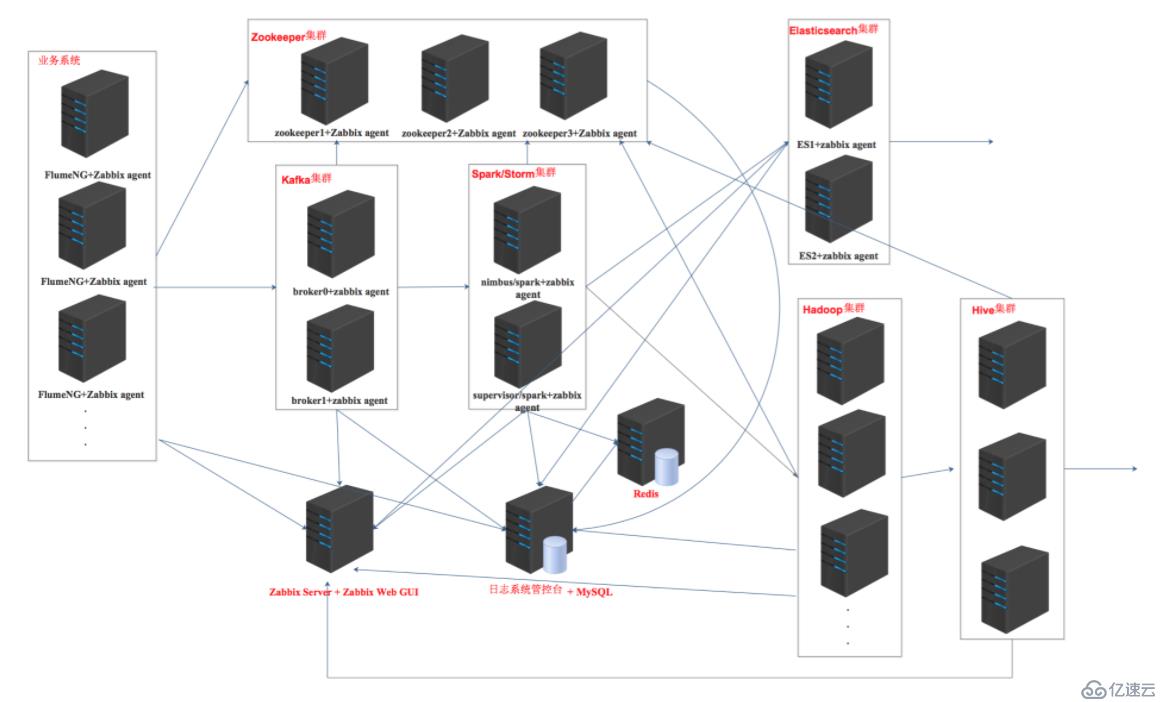

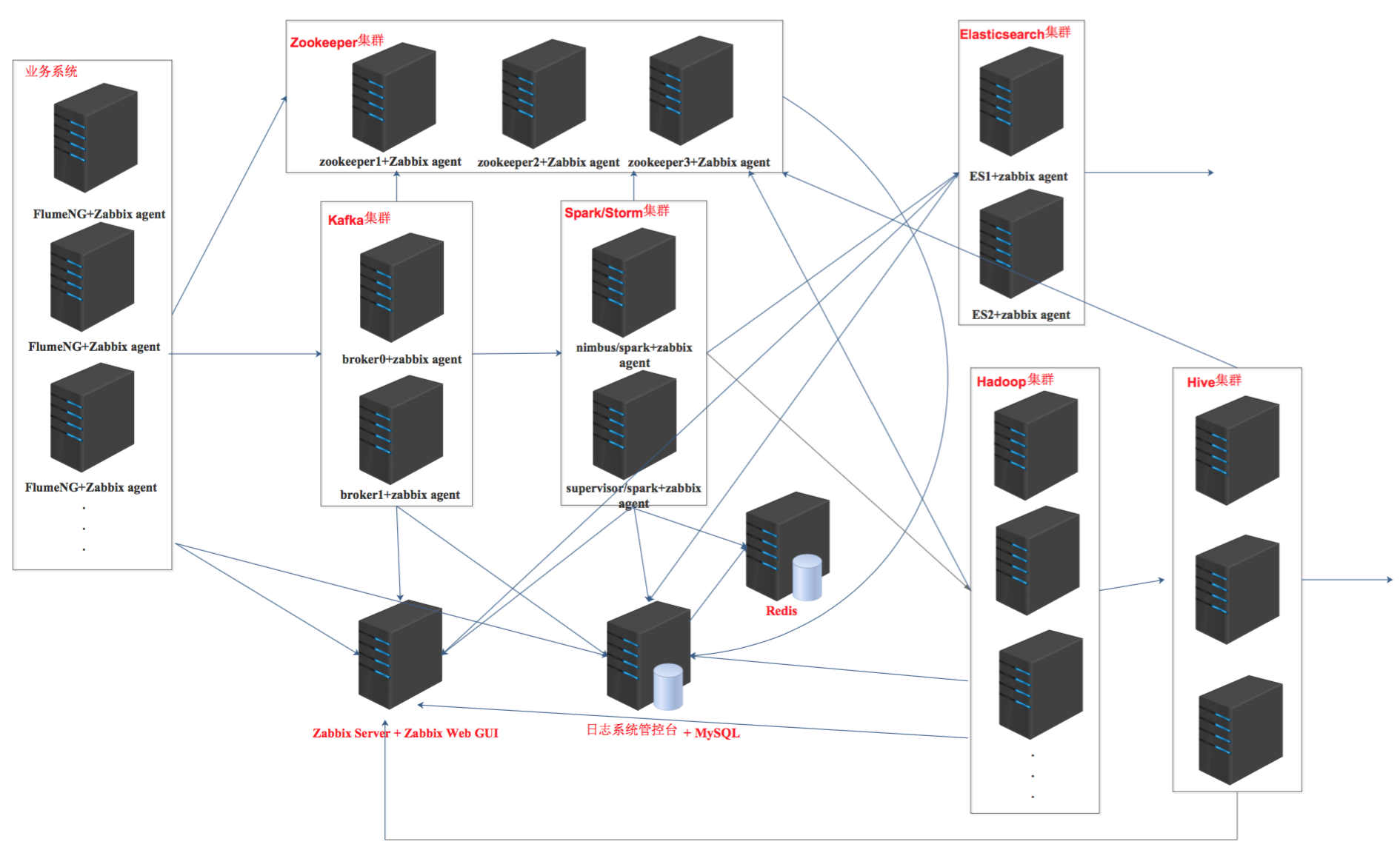

Background

Distributed logging system architecture diagram

Creating and using roles

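Our product team is developing a distributed logging system that uses many components. Deploying each piece of software by hand is tedious and time-consuming, so we switched to deploying with Ansible playbooks + roles, which greatly improved efficiency.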

Each piece of software or cluster gets its own role.

[root@node1 ~]# mkdir -pv ansible_playbooks/roles/{db_server,web_server,redis_server,zk_server,kafka_server,es_server,tomcat_server,flume_agent,hadoop,spark,hbase,hive,jdk7,jdk8}/{tasks,files,templates,meta,handlers,vars}

jdk7 role

[root@node1 jdk7]# pwd
/root/ansible_playbooks/roles/jdk7
[root@node1 jdk7]# ls
files  handlers  meta  tasks  templates  vars

1. Upload the software package

Upload jdk-7u80-linux-x64.gz to the files directory.

2. Write the tasks

[root@node1 jdk7]# vim tasks/main.yml

- name: mkdir necessary catalog
  file: path=/usr/java state=directory mode=0755

- name: copy and unzip jdk
  unarchive: src={{jdk_package_name}} dest=/usr/java/

- name: set env
  lineinfile: dest={{env_file}} insertafter="{{item.position}}" line="{{item.value}}" state=present
  with_items:
    - {position: EOF, value: "\n"}
    - {position: EOF, value: "export JAVA_HOME=/usr/java/{{jdk_version}}"}
    - {position: EOF, value: "export PATH=$JAVA_HOME/bin:$PATH"}
    - {position: EOF, value: "export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar"}

# "source" only affects the short-lived shell Ansible starts for this task;
# new login shells read /etc/profile on their own.
- name: enforce env
  shell: source {{env_file}}
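The set env and enforce env tasks above append the exports to /etc/profile. Below is a minimal sketch of an alternative. It assumes CentOS/RHEL targets, where /etc/profile sources every /etc/profile.d/*.sh file. The same three exports go into a dedicated file written with copy, and a second task then confirms that the unpacked JDK actually runs. The file name java.sh and the registered variable java_out are hypothetical names chosen for this example.

```yaml
# Sketch only: an alternative to the "set env" / "enforce env" tasks.
# Assumes /etc/profile sources /etc/profile.d/*.sh (CentOS/RHEL default);
# java.sh and java_out are hypothetical names.
- name: write JDK environment variables to their own profile.d file
  copy:
    dest: /etc/profile.d/java.sh
    mode: "0644"
    content: |
      export JAVA_HOME=/usr/java/{{ jdk_version }}
      export PATH=$JAVA_HOME/bin:$PATH
      export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

- name: check that the unpacked JDK runs
  command: /usr/java/{{ jdk_version }}/bin/java -version
  register: java_out
  changed_when: false

- name: show the reported version (java -version prints to stderr)
  debug:
    var: java_out.stderr
```

Because copy manages the whole file, the exports live in one place that is easy to update or remove. No source task is needed either, because new login shells read the file automatically.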

3. Write the vars

[root@node1 jdk7]# vim vars/main.yml
jdk_package_name: jdk-7u80-linux-x64.gz
env_file: /etc/profile
jdk_version: jdk1.7.0_80

4. Use the role

Next to the roles directory (in /root/ansible_playbooks), create a jdk.yml file that defines the playbook.

[root@node1 ansible_playbooks]# vim jdk.yml
- hosts: jdk
  remote_user: root
  roles:
    - jdk7

Run the playbook to install JDK 7:

[root@node1 ansible_playbooks]# ansible-playbook jdk.yml

To use the jdk7 role, adjust the variables in vars/main.yml and the hosts defined in /etc/ansible/hosts to match your environment.

jdk8 role

[root@node1 jdk8]# pwd
/root/ansible_playbooks/roles/jdk8
[root@node1 jdk8]# ls
files  handlers  meta  tasks  templates  vars

1. Upload the installation package

Upload jdk-8u73-linux-x64.gz to the files directory.

2. Write the tasks

[root@node1 jdk8]# vim tasks/main.yml

- name: mkdir necessary catalog
  file: path=/usr/java state=directory mode=0755

- name: copy and unzip jdk
  unarchive: src={{jdk_package_name}} dest=/usr/java/

- name: set env
  lineinfile: dest={{env_file}} insertafter="{{item.position}}" line="{{item.value}}" state=present
  with_items:
    - {position: EOF, value: "\n"}
    - {position: EOF, value: "export JAVA_HOME=/usr/java/{{jdk_version}}"}
    - {position: EOF, value: "export PATH=$JAVA_HOME/bin:$PATH"}
    - {position: EOF, value: "export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar"}

- name: enforce env
  shell: source {{env_file}}

3. Write the vars

[root@node1 jdk8]# vim vars/main.yml
jdk_package_name: jdk-8u73-linux-x64.gz
env_file: /etc/profile
jdk_version: jdk1.8.0_73

4. Use the role

Next to the roles directory (in /root/ansible_playbooks), create a jdk.yml file that defines the playbook.

[root@node1 ansible_playbooks]# vim jdk.yml
- hosts: jdk
  remote_user: root
  roles:
    - jdk8

Run the playbook to install JDK 8:

[root@node1 ansible_playbooks]# ansible-playbook jdk.yml

To use the jdk8 role, adjust the variables in vars/main.yml and the hosts defined in /etc/ansible/hosts to match your environment.
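The jdk7 and jdk8 roles have identical tasks and differ only in vars/main.yml. One possible refactoring, sketched below, keeps a single role and picks the version per playbook with role parameters. The role name jdk is hypothetical. The sketch assumes its tasks/main.yml is the same as above and that its shared values (env_file and a default version) live in defaults/main.yml. Defaults have the lowest variable precedence, so the parameters passed in the playbook always win.

```yaml
# jdk.yml: sketch assuming a single, hypothetical role named "jdk" whose
# tasks/main.yml matches the jdk7/jdk8 tasks and whose defaults/main.yml
# holds env_file plus a default JDK version.
- hosts: jdk
  remote_user: root
  roles:
    # role parameters override the role's defaults for this play
    - role: jdk
      jdk_package_name: jdk-8u73-linux-x64.gz
      jdk_version: jdk1.8.0_73
```

With this layout, switching to JDK 7 only means changing the two parameters to jdk-7u80-linux-x64.gz and jdk1.7.0_80.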

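zk_server role (Zookeeper cluster)

On every Zookeeper cluster node, configure /etc/hosts with the hostname-to-IP mapping of each node in the cluster.

[root@node1 zk_server]# pwd
/root/ansible_playbooks/roles/zk_server
[root@node1 zk_server]# ls
files  handlers  meta  tasks  templates  vars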

1. Upload the installation package

Upload zookeeper-3.4.6.tar.gz and clean_zklog.sh to the files directory. clean_zklog.sh is a script that cleans up Zookeeper logs.

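2. Write the tasks

The zookeeper tasks (tasks/main.yml) are not reproduced in this post. They are in the GitHub repository https://github.com/jkzhao/ansible-godseye, together with all other files of this project.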

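3. Write the templates

Copy the default configuration file from the zookeeper-3.4.6.tar.gz package (conf/zoo_sample.cfg) to ../roles/zk_server/templates/, rename it to zoo.cfg.j2, and edit its contents.

[root@node1 ansible_playbooks]# vim roles/zk_server/templates/zoo.cfg.j2

The configuration file is too long to reproduce here; see it on GitHub at https://github.com/jkzhao/ansible-godseye. Its settings are not explained again here, because earlier posts on this blog already cover them.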

4. Write the vars

[root@node1 zk_server]# vim vars/main.yml
server1_hostname: hadoop27
server2_hostname: hadoop28
server3_hostname: hadoop29

The tasks also use a variable {{myid}}. Its value is different on every host, so it is defined in /etc/ansible/hosts instead:

[zk_servers]
172.16.206.27 myid=1
172.16.206.28 myid=2
172.16.206.29 myid=3
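Each ZooKeeper server reads its id from a file named myid in its dataDir. That id must match the server.N entry for the host in zoo.cfg. The role's own tasks are only on GitHub, so the sketch below only illustrates how a per-host {{myid}} can be used. The install path /usr/local/zookeeper-3.4.6 and the dataDir /data/zookeeper are assumptions and must match whatever your zoo.cfg.j2 configures.

```yaml
# Sketch only: both paths are assumptions; dataDir must equal the
# dataDir set in zoo.cfg.j2.
- name: create the Zookeeper data directory
  file: path=/data/zookeeper state=directory mode=0755

- name: render zoo.cfg from the role template
  template: src=zoo.cfg.j2 dest=/usr/local/zookeeper-3.4.6/conf/zoo.cfg

- name: write this host's id (myid is set per host in /etc/ansible/hosts)
  copy:
    content: "{{ myid }}\n"
    dest: /data/zookeeper/myid
```

Suppose hadoop27 resolves to 172.16.206.27 and the customary ports are used. With the inventory above, that host gets myid 1, so its line in zoo.cfg would be, for example, server.1=hadoop27:2888:3888.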

5. Set up the host group

The /etc/ansible/hosts file:

[zk_servers]
172.16.206.27 myid=1
172.16.206.28 myid=2
172.16.206.29 myid=3

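6. Use the role

Next to the roles directory (in /root/ansible_playbooks), create a zk.yml file that defines the playbook.

[root@node1 ansible_playbooks]# vim zk.yml
- hosts: zk_servers
  remote_user: root
  roles:
    - zk_server

Run the playbook to install the Zookeeper cluster:

[root@node1 ansible_playbooks]# ansible-playbook zk.yml

To use the zk_server role, adjust the variables in vars/main.yml and the hosts defined in /etc/ansible/hosts to match your environment.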

kafka_server role (Kafka cluster)

[root@node1 kafka_server]# pwd
/root/ansible_playbooks/roles/kafka_server
[root@node1 kafka_server]# ls
files  handlers  meta  tasks  templates  vars

1. Upload the installation package

Upload kafka_2.11-0.9.0.1.tar.gz, kafka-manager-1.3.0.6.zip and clean_kafkalog.sh to the files directory. clean_kafkalog.sh is a script that cleans up Kafka logs.

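2. Write the tasks

The kafka tasks (tasks/main.yml) are not reproduced in this post. They are in the GitHub repository https://github.com/jkzhao/ansible-godseye, together with all other files of this project.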

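3. Write the templates

[root@node1 kafka_server]# vim templates/server.properties.j2

The configuration file is too long to reproduce here; see it on GitHub at https://github.com/jkzhao/ansible-godseye. Its settings are not explained again here, because earlier posts on this blog already cover them.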

4. Write the vars

[root@node1 kafka_server]# vim vars/main.yml
zk_cluster: 172.16.7.151:2181,172.16.7.152:2181,172.16.7.153:2181
kafka_manager_ip: 172.16.7.151

The template also uses a variable {{broker_id}}. Its value is different on every host, so it is defined in /etc/ansible/hosts instead:

[kafka_servers]
172.16.206.17 broker_id=0
172.16.206.31 broker_id=1
172.16.206.32 broker_id=2
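Every broker in a Kafka cluster needs a unique broker.id. The role renders the whole server.properties from a template. The sketch below is a smaller version of the same idea: it sets just that line from the per-host {{broker_id}}, plus zookeeper.connect from the zk_cluster variable above. The path /usr/local/kafka/config/server.properties is an assumption about where the role unpacks Kafka.

```yaml
# Sketch only: assumes Kafka is unpacked to /usr/local/kafka.
- name: set this broker's unique broker.id
  lineinfile:
    dest: /usr/local/kafka/config/server.properties
    regexp: '^broker\.id='
    line: "broker.id={{ broker_id }}"

- name: point the broker at the Zookeeper ensemble from vars/main.yml
  lineinfile:
    dest: /usr/local/kafka/config/server.properties
    regexp: '^zookeeper\.connect='
    line: "zookeeper.connect={{ zk_cluster }}"
```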

5. Set up the host group

The /etc/ansible/hosts file:

[kafka_servers]
172.16.206.17 broker_id=0
172.16.206.31 broker_id=1
172.16.206.32 broker_id=2

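6. Use the role

Next to the roles directory (in /root/ansible_playbooks), create a kafka.yml file that defines the playbook.

[root@node1 ansible_playbooks]# vim kafka.yml
- hosts: kafka_servers
  remote_user: root
  roles:
    - kafka_server

Run the playbook to install the Kafka cluster:

[root@node1 ansible_playbooks]# ansible-playbook kafka.yml

To use the kafka_server role, adjust the variables in vars/main.yml and the hosts defined in /etc/ansible/hosts to match your environment.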

es_server role (Elasticsearch cluster)

[root@node1 es_server]# pwd
/root/ansible_playbooks/roles/es_server
[root@node1 es_server]# ls
files  handlers  meta  tasks  templates  vars

1. Upload the installation package

Upload elasticsearch-2.3.3.tar.gz and elasticsearch-analysis-ik-1.9.3.zip to the files directory.

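2. Write the tasks

The Elasticsearch tasks (tasks/main.yml) are not reproduced in this post. They are in the GitHub repository https://github.com/jkzhao/ansible-godseye, together with all other files of this project.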

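3. Write the templates

Put the templates elasticsearch.in.sh.j2 and elasticsearch.yml.j2 into the templates directory.

Note: variable names used in the templates must not contain a dot. For example, {{node.name}} does not work as a variable name, because Jinja2 reads it as the attribute name of a variable called node.

The configuration files are too long to reproduce here; see them on GitHub at https://github.com/jkzhao/ansible-godseye. Their settings are not explained again here, because earlier posts on this blog already cover them.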
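The no-dots rule applies to Ansible variable names, not to the text written into the file. The sketch below illustrates this with two lineinfile tasks instead of the role's full template. The dotted setting names cluster.name and node.name appear only as literal text. The values come from variables without dots: cluster_name from vars/main.yml, and Ansible's built-in inventory_hostname. The install path /usr/local/elasticsearch-2.3.3 is an assumption.

```yaml
# Sketch only: the Elasticsearch install path is an assumption.
# Setting names keep their dots as literal text; the Ansible variables
# that fill them (cluster_name, inventory_hostname) contain no dots.
- name: set cluster.name in elasticsearch.yml
  lineinfile:
    dest: /usr/local/elasticsearch-2.3.3/config/elasticsearch.yml
    regexp: '^cluster\.name:'
    line: "cluster.name: {{ cluster_name }}"

- name: set node.name in elasticsearch.yml
  lineinfile:
    dest: /usr/local/elasticsearch-2.3.3/config/elasticsearch.yml
    regexp: '^node\.name:'
    line: "node.name: {{ inventory_hostname }}"
```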

4. Write the vars

[root@node1 es_server]# vim vars/main.yml
ES_MEM: 2g
cluster_name: wisedu
master_ip: 172.16.7.151

The template also uses a variable {{node_master}}. Its value is different on every host, so it is defined in /etc/ansible/hosts instead:

[es_servers]
172.16.7.151 node_master=true
172.16.7.152 node_master=false
172.16.7.153 node_master=false

5. Set up the host group

The /etc/ansible/hosts file:

[es_servers]
172.16.7.151 node_master=true
172.16.7.152 node_master=false
172.16.7.153 node_master=false

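6. Use the role

Next to the roles directory (in /root/ansible_playbooks), create an es.yml file that defines the playbook.

[root@node1 ansible_playbooks]# vim es.yml
- hosts: es_servers
  remote_user: root
  roles:
    - es_server

Run the playbook to install the Elasticsearch cluster:

[root@node1 ansible_playbooks]# ansible-playbook es.yml

To use the es_server role, adjust the variables in vars/main.yml and the hosts defined in /etc/ansible/hosts to match your environment.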

db_server role (MySQL)

[root@node1 db_server]# pwd
/root/ansible_playbooks/roles/db_server
[root@node1 db_server]# ls
files  handlers  meta  tasks  templates  vars

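1. Upload the installation package

Put the prebuilt rpm package mysql-5.6.27-1.x86_64.rpm in /root/ansible_playbooks/roles/db_server/files/.

[Note]: We built this rpm package ourselves, because installing from an rpm makes deployment faster. For how to build an rpm, see the earlier post "速成RPM包制作" (RPM packaging crash course).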

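2. Write the tasks

The mysql tasks (tasks/main.yml) are not reproduced in this post. They are in the GitHub repository https://github.com/jkzhao/ansible-godseye, together with all other files of this project.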
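The mysql tasks themselves are only on GitHub. The sketch below shows one common way to install a self-built rpm from a role's files directory. The package is copied to the target host and then installed by path with the yum module, which accepts a local rpm file as name. Two details are assumptions about the self-built package: the /tmp staging location and the service name mysql in the last task.

```yaml
# Sketch only: /tmp as the staging directory and the service name "mysql"
# are assumptions about the self-built package.
- name: copy the self-built MySQL rpm to the target host
  copy: src=mysql-5.6.27-1.x86_64.rpm dest=/tmp/mysql-5.6.27-1.x86_64.rpm

- name: install MySQL from the local rpm file
  yum: name=/tmp/mysql-5.6.27-1.x86_64.rpm state=present

- name: start MySQL and enable it at boot
  service: name=mysql state=started enabled=yes
```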

3. Set up the host group

# vim /etc/ansible/hosts
[db_servers]
172.16.7.152

4. Use the role

Next to the roles directory (in /root/ansible_playbooks), create a db.yml file that defines the playbook.

[root@node1 ansible_playbooks]# vim db.yml
- hosts: db_servers
  remote_user: root
  roles:
    - db_server

Run the playbook to install MySQL:

[root@node1 ansible_playbooks]# ansible-playbook db.yml

To use the db_server role, adjust the hosts defined in /etc/ansible/hosts to match your environment.

web_server role (Nginx)

[root@node1 web_server]# pwd
/root/ansible_playbooks/roles/web_server
[root@node1 web_server]# ls
files  handlers  meta  tasks  templates  vars

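1. Upload the installation package

Put the prebuilt rpm package openresty-for-godseye-1.9.7.3-1.x86_64.rpm in /root/ansible_playbooks/roles/web_server/files/.

[Note]: Packaging it as an rpm removes the need to compile nginx at install time, which speeds up deployment. The package also bundles many files specific to our system.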

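2. Write the tasks

The Nginx tasks (tasks/main.yml) are not reproduced in this post. They are in the GitHub repository https://github.com/jkzhao/ansible-godseye, together with all other files of this project.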

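3. Write the templates

Put the template nginx.conf.j2 into the templates directory.

The configuration file is too long to reproduce here; see it on GitHub at https://github.com/jkzhao/ansible-godseye. Its settings are not explained again here, because earlier posts on this blog already cover them.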

4. Write the vars

[root@node1 web_server]# vim vars/main.yml
elasticsearch_cluster: server 172.16.7.151:9200;server 172.16.7.152:9200;server 172.16.7.153:9200;
kafka_server1: 172.16.7.151
kafka_server2: 172.16.7.152
kafka_server3: 172.16.7.153

In our testing, these variable values could not contain commas.
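The elasticsearch_cluster value above is a hand-written string of nginx upstream server entries. The sketch below builds the same string from a list of hosts, so adding a node only means adding a list item. It assumes nginx.conf.j2 inserts {{elasticsearch_cluster}} as-is. The list name es_nodes is hypothetical, and the play only prints the generated string.

```yaml
# Sketch only: es_nodes is a hypothetical variable; this play just prints
# the string that nginx.conf.j2 would receive as elasticsearch_cluster.
- hosts: nginx_servers
  gather_facts: false
  vars:
    es_nodes:
      - 172.16.7.151
      - 172.16.7.152
      - 172.16.7.153
    # Renders to: server 172.16.7.151:9200;server 172.16.7.152:9200;server 172.16.7.153:9200;
    elasticsearch_cluster: "{% for h in es_nodes %}server {{ h }}:9200;{% endfor %}"
  tasks:
    - name: show the generated upstream server list
      debug:
        var: elasticsearch_cluster
```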

5. Set up the host group

The /etc/ansible/hosts file:

# vim /etc/ansible/hosts
[nginx_servers]
172.16.7.153

6. Use the role

Next to the roles directory (in /root/ansible_playbooks), create an nginx.yml file that defines the playbook.

[root@node1 ansible_playbooks]# vim nginx.yml
- hosts: nginx_servers
  remote_user: root
  roles:
    - web_server

Run the playbook to install Nginx:

[root@node1 ansible_playbooks]# ansible-playbook nginx.yml

To use the web_server role, adjust the variables in vars/main.yml and the hosts defined in /etc/ansible/hosts to match your environment.

redis_server role (Redis)

[root@node1 redis_server]# pwd
/root/ansible_playbooks/roles/redis_server
[root@node1 redis_server]# ls
files  handlers  meta  tasks  templates  vars

1. Upload the installation package

Put the prebuilt rpm package redis-3.2.2-1.x86_64.rpm in /root/ansible_playbooks/roles/redis_server/files/.

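2. Write the tasks

The Redis tasks (tasks/main.yml) are not reproduced in this post. They are in the GitHub repository https://github.com/jkzhao/ansible-godseye, together with all other files of this project.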
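Once the rpm is installed and Redis is running, a quick post-install check can be added at the end of the role. The sketch below assumes three things this post does not confirm: redis-cli is on the PATH, Redis listens on the default 127.0.0.1:6379, and no requirepass password is set.

```yaml
# Sketch only: assumes redis-cli is on the PATH, Redis listens on
# 127.0.0.1:6379, and no requirepass password is configured.
- name: check that Redis answers PING
  command: redis-cli -h 127.0.0.1 -p 6379 ping
  register: redis_ping
  changed_when: false
  failed_when: redis_ping.stdout != "PONG"
```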

3. Set up the host group

The /etc/ansible/hosts file:

# vim /etc/ansible/hosts
[redis_servers]
172.16.7.152

4. Use the role

Next to the roles directory (in /root/ansible_playbooks), create a redis.yml file that defines the playbook.

[root@node1 ansible_playbooks]# vim redis.yml
- hosts: redis_servers
  remote_user: root
  roles:
    - redis_server

Run the playbook to install Redis:

[root@node1 ansible_playbooks]# ansible-playbook redis.yml

To use the redis_server role, adjust the variables in vars/main.yml and the hosts defined in /etc/ansible/hosts to match your environment.

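hadoop role (Hadoop cluster)

This role deploys a fully distributed cluster with high availability for both the NameNode and the ResourceManager.

Before running it, configure /etc/hosts on the cluster nodes and synchronize the clocks of all nodes. Also make sure the relevant master nodes can log in to the other nodes without a password.

[root@node1 hadoop]# pwd
/root/ansible_playbooks/roles/hadoop
[root@node1 hadoop]# ls
files  handlers  meta  tasks  templates  vars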

1. Upload the installation package

Put hadoop-2.7.2.tar.gz in /root/ansible_playbooks/roles/hadoop/files/.

2. Write the tasks

- name: install dependency package
  yum: name={{ item }} state=present
  with_items:
    - openssh
    - rsync

- name: create hadoop user
  user: name=hadoop password={{password}}
  vars:
    # created with:
    # python -c 'import crypt; print(crypt.crypt("This is my Password", "$1$SomeSalt$"))'
    # >>> import crypt
    # >>> crypt.crypt('wisedu123', '$1$bigrandomsalt$')
    # '$1$bigrando$wzfZ2ifoHJPvaMuAelsBq0'
    password: $1$bigrando$wzfZ2ifoHJPvaMuAelsBq0

- name: copy and unzip hadoop
  # unarchive's owner and group options only take effect on the directory.
  unarchive: src=hadoop-2.7.2.tar.gz dest=/usr/local/

- name: create hadoop soft link
  file: src=/usr/local/hadoop-2.7.2 dest=/usr/local/hadoop state=link

- name: create hadoop logs directory
  file: dest=/usr/local/hadoop/logs mode=0775 state=directory

- name: change hadoop soft link owner and group
  # recurse=yes changes every file under the directory.
  file: path=/usr/local/hadoop owner=hadoop group=hadoop recurse=yes

- name: change hadoop-2.7.2 directory owner and group
  # recurse=yes changes every file under the directory.
  file: path=/usr/local/hadoop-2.7.2 owner=hadoop group=hadoop recurse=yes

- name: set hadoop env
  lineinfile: dest={{env_file}} insertafter="{{item.position}}" line="{{item.value}}" state=present
  with_items:
    - {position: EOF, value: "\n"}
    - {position: EOF, value: "# Hadoop environment"}
    - {position: EOF, value: "export HADOOP_HOME=/usr/local/hadoop"}
    - {position: EOF, value: "export PATH=$PATH:${HADOOP_HOME}/bin:${HADOOP_HOME}/sbin"}

- name: enforce env
  shell: source {{env_file}}

- name: install configuration file hadoop-env.sh.j2 for hadoop
  template: src=hadoop-env.sh.j2 dest=/usr/local/hadoop/etc/hadoop/hadoop-env.sh owner=hadoop group=hadoop

- name: install configuration file core-site.xml.j2 for hadoop
  template: src=core-site.xml.j2 dest=/usr/local/hadoop/etc/hadoop/core-site.xml owner=hadoop group=hadoop

- name: install configuration file hdfs-site.xml.j2 for hadoop
  template: src=hdfs-site.xml.j2 dest=/usr/local/hadoop/etc/hadoop/hdfs-site.xml owner=hadoop group=hadoop

- name: install configuration file mapred-site.xml.j2 for hadoop
  template: src=mapred-site.xml.j2 dest=/usr/local/hadoop/etc/hadoop/mapred-site.xml owner=hadoop group=hadoop

- name: install configuration file yarn-site.xml.j2 for hadoop
  template: src=yarn-site.xml.j2 dest=/usr/local/hadoop/etc/hadoop/yarn-site.xml owner=hadoop group=hadoop

- name: install configuration file slaves.j2 for hadoop
  template: src=slaves.j2 dest=/usr/local/hadoop/etc/hadoop/slaves owner=hadoop group=hadoop

- name: install configuration file hadoop-daemon.sh.j2 for hadoop
  template: src=hadoop-daemon.sh.j2 dest=/usr/local/hadoop/sbin/hadoop-daemon.sh owner=hadoop group=hadoop

- name: install configuration file yarn-daemon.sh.j2 for hadoop
  template: src=yarn-daemon.sh.j2 dest=/usr/local/hadoop/sbin/yarn-daemon.sh owner=hadoop group=hadoop

# make sure zookeeper is started, and then start hadoop.

# start journalnode
- name: start journalnode
  shell: /usr/local/hadoop/sbin/hadoop-daemon.sh start journalnode
  become: true
  become_method: su
  become_user: hadoop
  when: datanode == "true"

# format namenode
- name: format active namenode hdfs
  shell: /usr/local/hadoop/bin/hdfs namenode -format
  become: true
  become_method: su
  become_user: hadoop
  when: namenode_active == "true"

- name: start active namenode hdfs
  shell: /usr/local/hadoop/sbin/hadoop-daemon.sh start namenode
  become: true
  become_method: su
  become_user: hadoop
  when: namenode_active == "true"

- name: format standby namenode hdfs
  shell: /usr/local/hadoop/bin/hdfs namenode -bootstrapStandby
  become: true
  become_method: su
  become_user: hadoop
  when: namenode_standby == "true"

- name: stop active namenode hdfs
  shell: /usr/local/hadoop/sbin/hadoop-daemon.sh stop namenode
  become: true
  become_method: su
  become_user: hadoop
  when: namenode_active == "true"

# format ZKFC
- name: format ZKFC
  shell: /usr/local/hadoop/bin/hdfs zkfc -formatZK
  become: true
  become_method: su
  become_user: hadoop
  when: namenode_active == "true"

# start hadoop cluster
- name: start namenode
  shell: /usr/local/hadoop/sbin/start-dfs.sh
  become: true
  become_method: su
  become_user: hadoop
  when: namenode_active == "true"

- name: start yarn
  shell: /usr/local/hadoop/sbin/start-yarn.sh
  become: true
  become_method: su
  become_user: hadoop
  when: namenode_active == "true"

- name: start standby rm
  shell: /usr/local/hadoop/sbin/yarn-daemon.sh start resourcemanager
  become: true
  become_method: su
  become_user: hadoop
  when: namenode_standby == "true"
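The two format tasks above run hdfs namenode -format and hdfs namenode -bootstrapStandby every time the playbook is applied. They also select hosts by comparing inventory values with the string "true". Below is a sketch of more defensive versions of those two tasks. The shell module's creates argument skips a task once the name directory has been formatted, which leaves a current/VERSION file behind. The bool filter accepts the string "true" as well as "yes" or a real boolean. The marker path assumes dfs.namenode.name.dir is /data/hadoop/dfs/name; that value is hypothetical and must be taken from your hdfs-site.xml.j2.

```yaml
# Sketch only: /data/hadoop/dfs/name is a hypothetical dfs.namenode.name.dir;
# use the value configured in hdfs-site.xml.j2.
- name: format active namenode hdfs (only if not formatted yet)
  shell: /usr/local/hadoop/bin/hdfs namenode -format
  args:
    # a formatted name directory contains current/VERSION; skip if present
    creates: /data/hadoop/dfs/name/current/VERSION
  become: true
  become_method: su
  become_user: hadoop
  when: namenode_active | bool

- name: format standby namenode hdfs (only if not formatted yet)
  shell: /usr/local/hadoop/bin/hdfs namenode -bootstrapStandby
  args:
    creates: /data/hadoop/dfs/name/current/VERSION
  become: true
  become_method: su
  become_user: hadoop
  when: namenode_standby | bool
```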

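3. Write the templates

Put the templates core-site.xml.j2, hadoop-daemon.sh.j2, hadoop-env.sh.j2, hdfs-site.xml.j2, mapred-site.xml.j2, slaves.j2, yarn-daemon.sh.j2 and yarn-site.xml.j2 into the templates directory.

The configuration files are too long to reproduce here; see them on GitHub at https://github.com/jkzhao/ansible-godseye. Their settings are not explained again here, because earlier posts on this blog already cover them.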

4. Write the vars

[root@node1 hadoop]# vim vars/main.yml
env_file: /etc/profile

# hadoop-env.sh.j2 file variables.
JAVA_HOME: /usr/java/jdk1.8.0_73

# core-site.xml.j2 file variables.
ZK_NODE1: node1:2181
ZK_NODE2: node2:2181
ZK_NODE3: node3:2181

# hdfs-site.xml.j2 file variables.
NAMENODE1_HOSTNAME: node1
NAMENODE2_HOSTNAME: node2
DATANODE1_HOSTNAME: node3
DATANODE2_HOSTNAME: node4
DATANODE3_HOSTNAME: node5

# mapred-site.xml.j2 file variables.
MR_MODE: yarn

# yarn-site.xml.j2 file variables.
RM1_HOSTNAME: node1
RM2_HOSTNAME: node2

5. Set up the host group

The /etc/ansible/hosts file:

# vim /etc/ansible/hosts
[hadoop]
172.16.7.151 namenode_active=true namenode_standby=false datanode=false
172.16.7.152 namenode_active=false namenode_standby=true datanode=false
172.16.7.153 namenode_active=false namenode_standby=false datanode=true
172.16.7.154 namenode_active=false namenode_standby=false datanode=true
172.16.7.155 namenode_active=false namenode_standby=false datanode=true

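6. Use the role

Next to the roles directory (in /root/ansible_playbooks), create a hadoop.yml file that defines the playbook.

[root@node1 ansible_playbooks]# vim hadoop.yml
- hosts: hadoop
  remote_user: root
  roles:
    - jdk8
    - hadoop

Run the playbook to install the Hadoop cluster:

[root@node1 ansible_playbooks]# ansible-playbook hadoop.yml

To use the hadoop role, adjust the variables in vars/main.yml and the hosts defined in /etc/ansible/hosts to match your environment.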
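hadoop.yml lists jdk8 before hadoop because Hadoop needs a JDK. The same ordering can instead be declared once in the hadoop role's meta/main.yml, using the meta directory created for every role at the start of this post. Any playbook that uses the hadoop role then pulls in jdk8 first. The sketch below shows the minimal form of such a dependency list.

```yaml
# roles/hadoop/meta/main.yml: sketch that declares jdk8 as a dependency,
# so Ansible runs the jdk8 role before the hadoop role's own tasks.
dependencies:
  - role: jdk8
```

With that file in place, the roles list in hadoop.yml could contain just hadoop. Either way, JAVA_HOME in roles/hadoop/vars/main.yml (/usr/java/jdk1.8.0_73) has to match the JDK that the jdk8 role installs.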

spark role (Spark cluster)

Deploy Spark in Standalone mode (without HA).

[root@node1 spark]# pwd
/root/ansible_playbooks/roles/spark
[root@node1 spark]# ls
files  handlers  meta  tasks  templates  vars

1. Upload the installation package

Put scala-2.10.6.tgz and spark-1.6.1-bin-hadoop2.6.tgz in /root/ansible_playbooks/roles/spark/files/.

2. Write the tasks

- name: copy and unzip scala
  unarchive: src=scala-2.10.6.tgz dest=/usr/local/

- name: set scala env
  lineinfile: dest={{env_file}} insertafter="{{item.position}}" line="{{item.value}}" state=present
  with_items:
    - {position: EOF, value: "\n"}
    - {position: EOF, value: "# Scala environment"}
    - {position: EOF, value: "export SCALA_HOME=/usr/local/scala-2.10.6"}
    - {position: EOF, value: "export PATH=$SCALA_HOME/bin:$PATH"}

- name: copy and unzip spark
  unarchive: src=spark-1.6.1-bin-hadoop2.6.tgz dest=/usr/local/

- name: rename spark directory
  command: mv /usr/local/spark-1.6.1-bin-hadoop2.6 /usr/local/spark-1.6.1

- name: set spark env
  lineinfile: dest={{env_file}} insertafter="{{item.position}}" line="{{item.value}}" state=present
  with_items:
    - {position: EOF, value: "\n"}
    - {position: EOF, value: "# Spark environment"}
    - {position: EOF, value: "export SPARK_HOME=/usr/local/spark-1.6.1"}
    - {position: EOF, value: "export PATH=$SPARK_HOME/bin:$PATH"}

- name: enforce env
  shell: source {{env_file}}

- name: install configuration file for spark
  template: src=slaves.j2 dest=/usr/local/spark-1.6.1/conf/slaves

- name: install configuration file for spark
  template: src=spark-env.sh.j2 dest=/usr/local/spark-1.6.1/conf/spark-env.sh

- name: start spark cluster
  shell: /usr/local/spark-1.6.1/sbin/start-all.sh
  tags:
    - start
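Two of the tasks above are not safe to re-run. On a second run, unarchive finds no spark-1.6.1-bin-hadoop2.6 directory (it was renamed) and extracts it again. mv then moves that directory inside the existing /usr/local/spark-1.6.1 instead of renaming it. Also, start spark cluster runs start-all.sh on every host in the [spark] group. That script is meant to run once on the master: it starts the master locally and the workers listed in conf/slaves over SSH. The sketch below guards both problems. It assumes gathered facts are enabled and that the master's short hostname equals SPARK_MASTER_HOSTNAME (node1) from vars/main.yml.

```yaml
# Sketch only: "creates" turns both steps into no-ops once
# /usr/local/spark-1.6.1 exists; the start task runs only on the host whose
# short hostname (a gathered fact) equals SPARK_MASTER_HOSTNAME.
- name: copy and unzip spark
  unarchive: src=spark-1.6.1-bin-hadoop2.6.tgz dest=/usr/local/ creates=/usr/local/spark-1.6.1

- name: rename spark directory
  command: mv /usr/local/spark-1.6.1-bin-hadoop2.6 /usr/local/spark-1.6.1 creates=/usr/local/spark-1.6.1

- name: start spark cluster
  shell: /usr/local/spark-1.6.1/sbin/start-all.sh
  when: ansible_hostname == SPARK_MASTER_HOSTNAME
  tags:
    - start
```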


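3. Write the templates

Put the templates slaves.j2 and spark-env.sh.j2 in /root/ansible_playbooks/roles/spark/templates/.

The configuration files are too long to reproduce here; see them on GitHub at https://github.com/jkzhao/ansible-godseye. Their settings are not explained again here, because earlier posts on this blog already cover them.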

4. Write the vars

[root@node1 spark]# vim vars/main.yml
env_file: /etc/profile

# spark-env.sh.j2 file variables
JAVA_HOME: /usr/java/jdk1.8.0_73
SCALA_HOME: /usr/local/scala-2.10.6
SPARK_MASTER_HOSTNAME: node1
SPARK_HOME: /usr/local/spark-1.6.1
SPARK_WORKER_MEMORY: 256M
HIVE_HOME: /usr/local/apache-hive-2.1.0-bin
HADOOP_CONF_DIR: /usr/local/hadoop/etc/hadoop/

# slaves.j2 file variables
SLAVE1_HOSTNAME: node2
SLAVE2_HOSTNAME: node3

5. Set up the host group

The /etc/ansible/hosts file:

# vim /etc/ansible/hosts
[spark]
172.16.7.151
172.16.7.152
172.16.7.153

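6. Use the role

Next to the roles directory (in /root/ansible_playbooks), create a spark.yml file that defines the playbook.

[root@node1 ansible_playbooks]# vim spark.yml
- hosts: spark
  remote_user: root
  roles:
    - spark

Run the playbook to install the Spark cluster:

[root@node1 ansible_playbooks]# ansible-playbook spark.yml

To use the spark role, adjust the variables in vars/main.yml and the hosts defined in /etc/ansible/hosts to match your environment.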

[Note]: All files are on GitHub: https://github.com/jkzhao/ansible-godseye.
