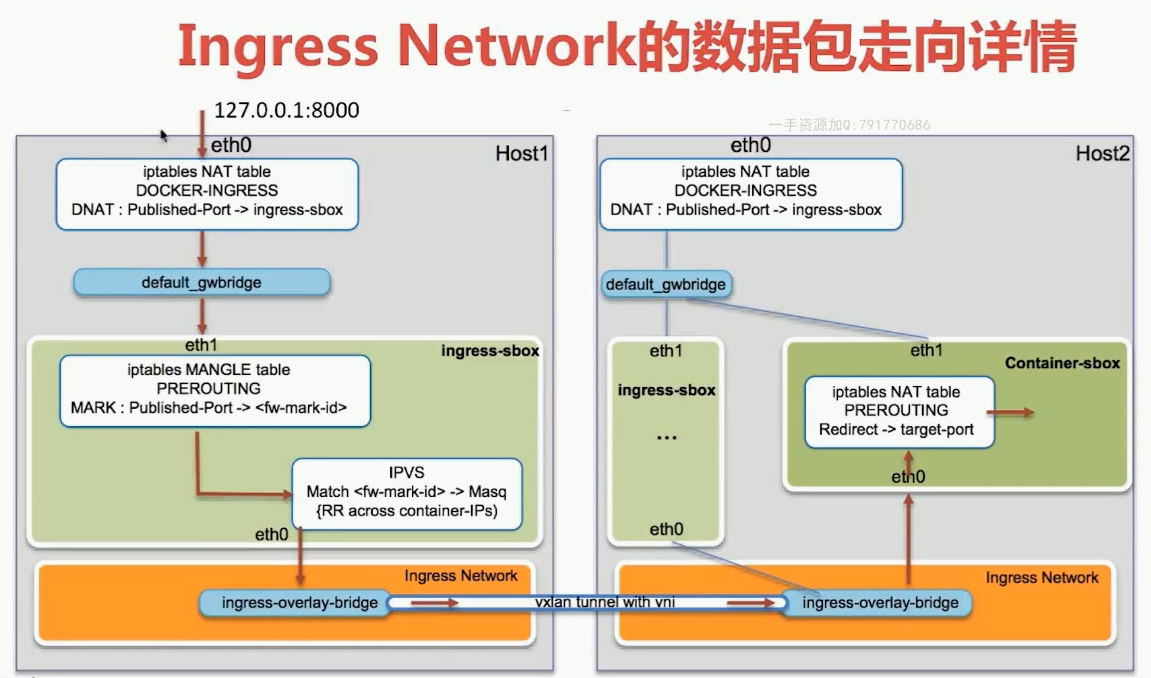

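What is the Routing Mesh (Ingress load balancing) in Docker? Many people without hands-on experience are stumped by this question, so this article traces how it works step by step on a live swarm. Hopefully it answers the question for you.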

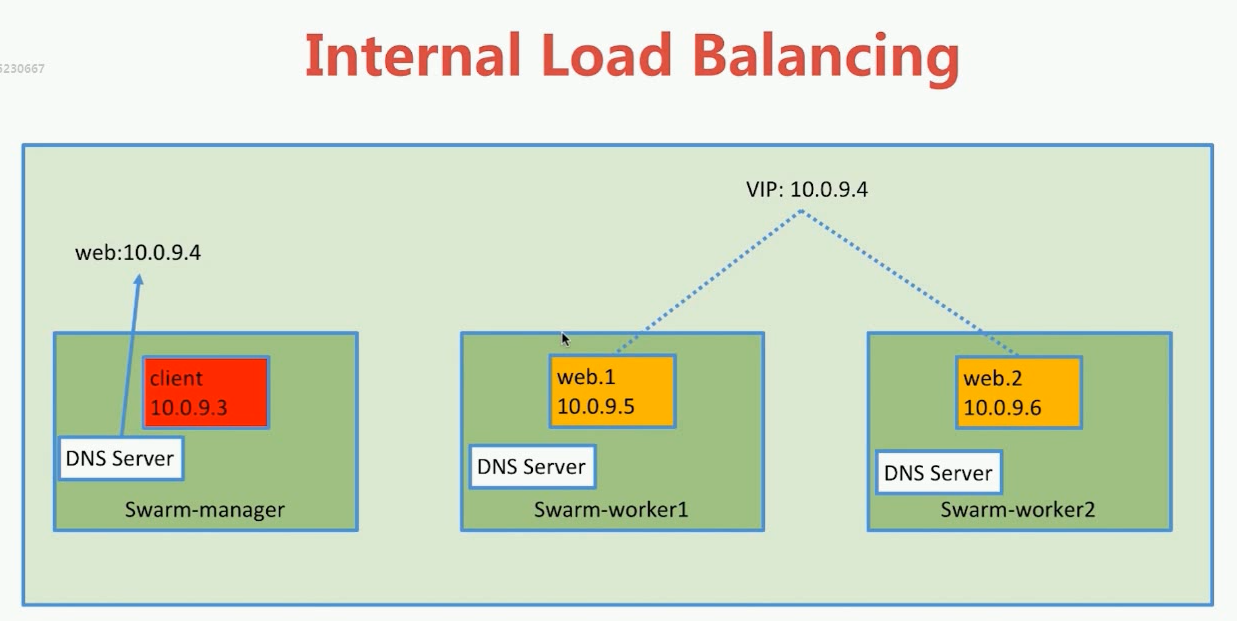

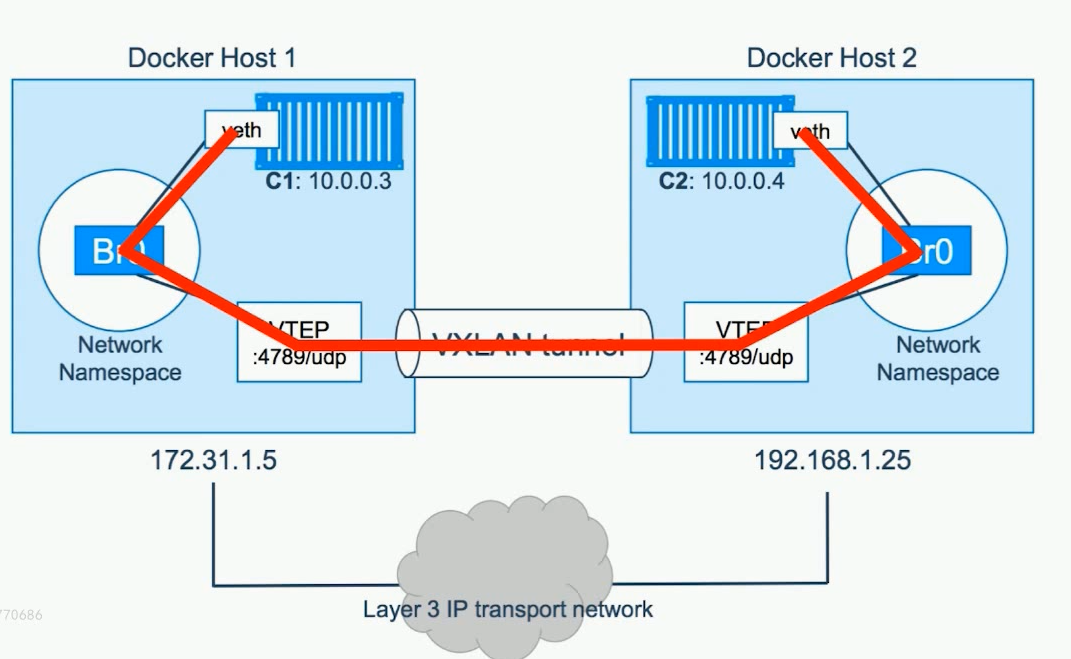

As we know, containers talk to each other over an overlay network. In the figure above, for example, 10.0.9.3 reaches 10.0.9.5 through a VXLAN tunnel.

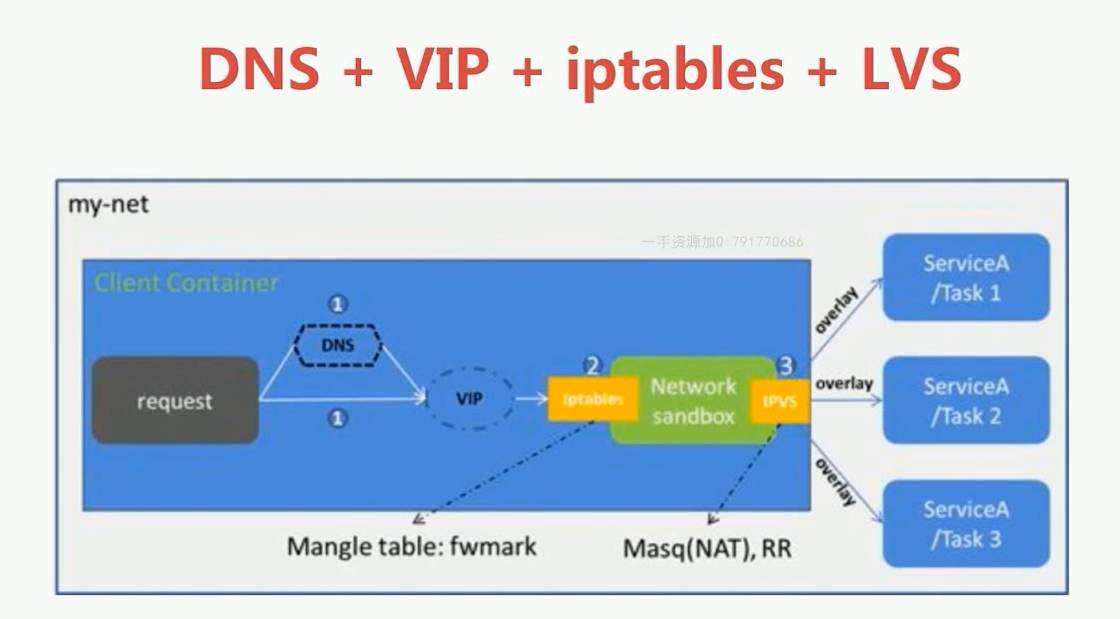

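Service-to-service communication, however, goes through a VIP. For example, when the client service talks to the web service and web has been scaled to several replicas, client reaches web through a virtual IP (VIP). So how is the VIP mapped to a concrete address such as 10.0.9.5 or 10.0.9.6? That is done by LVS.

LVS stands for Linux Virtual Server. It provides load balancing at the operating-system level.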

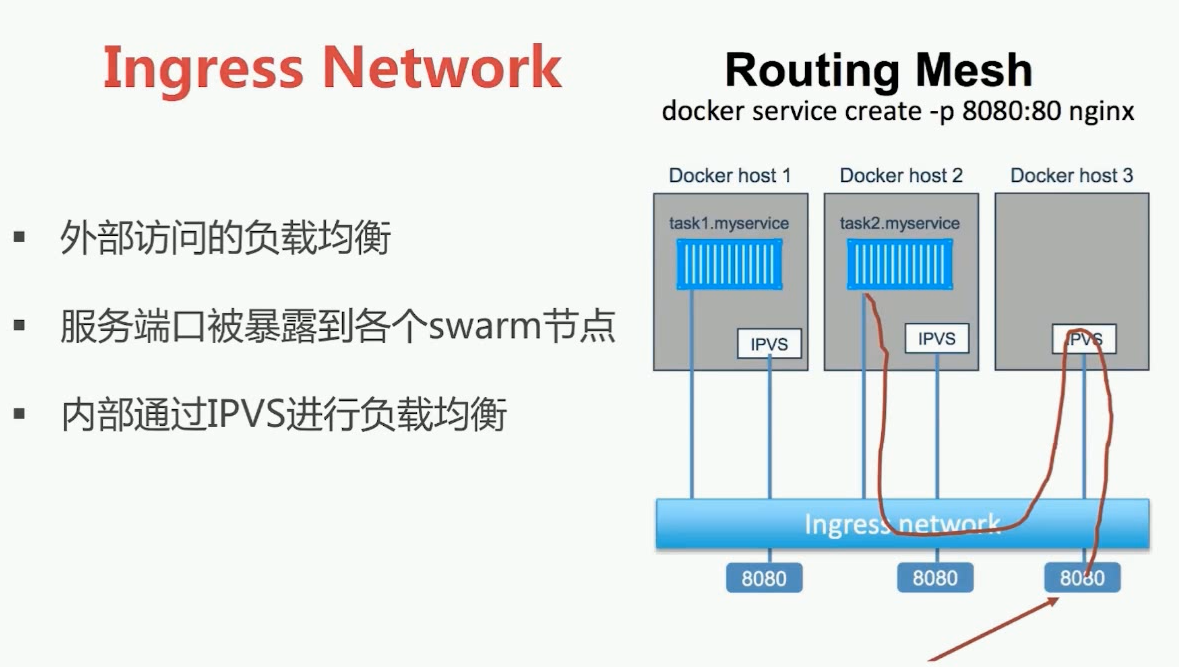

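We can reach WordPress on port 80 from any of the three nodes; this is what the ingress network provides. When a published port is accessed on any swarm node, the request goes through that node's IPVS (IP Virtual Server), and LVS load-balances it to a node that actually runs a task of the service. In the figure above, for example, a request sent to Docker Host3 is forwarded to the other two nodes.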

Our lab environment is the same as in the previous section. We scale whoami to 2 replicas:

    iie4bu@swarm-manager:~$ docker service ls
    ID                  NAME                MODE                REPLICAS            IMAGE                   PORTS
    h5wlczp85sw5        client              replicated          1/1                 busybox:1.28.3
    9i6wz6cg4koc        whoami              replicated          3/3                 jwilder/whoami:latest   *:8000->8000/tcp
    iie4bu@swarm-manager:~$ docker service scale whoami=2
    whoami scaled to 2
    overall progress: 2 out of 2 tasks
    1/2: running   [==================================================>]
    2/2: running   [==================================================>]
    verify: Service converged

Check where the whoami tasks are running:

    iie4bu@swarm-manager:~$ docker service ps whoami
    ID                  NAME                IMAGE                   NODE                DESIRED STATE       CURRENT STATE          ERROR               PORTS
    6hhuf528spdw        whoami.1            jwilder/whoami:latest   swarm-manager       Running             Running 17 hours ago
    9idgk9jbrlcm        whoami.3            jwilder/whoami:latest   swarm-worker2       Running             Running 16 hours ago

The two tasks run on swarm-manager and swarm-worker2.

Access whoami from swarm-manager:

    iie4bu@swarm-manager:~$ curl 127.0.0.1:8000
    I'm cc9f97cc5056
    iie4bu@swarm-manager:~$ curl 127.0.0.1:8000
    I'm f47e05019fd9

Access whoami from swarm-worker1:

    iie4bu@swarm-worker1:~$ curl 127.0.0.1:8000
    I'm f47e05019fd9
    iie4bu@swarm-worker1:~$ curl 127.0.0.1:8000
    I'm cc9f97cc5056
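The alternating container IDs in the transcripts above are easier to see when tallied. The following is a minimal sketch, not part of the original walkthrough: `tally_responses` and `probe_service` are hypothetical helper names, and the demo line replays the four responses shown above instead of contacting a real swarm.

```shell
# Count how often each replica answered.
# Reads one response per line on stdin and prints "<count> <response>".
tally_responses() {
  awk '{ seen[$0]++ } END { for (r in seen) print seen[r], r }' | LC_ALL=C sort -k2
}

# Hypothetical helper (needs a running swarm): send $2 requests to URL $1.
probe_service() {
  i=0
  while [ "$i" -lt "$2" ]; do
    printf '%s\n' "$(curl -s "$1")"
    i=$((i + 1))
  done
}

# Replay the four responses from the transcripts above; no network needed.
printf '%s\n' "I'm cc9f97cc5056" "I'm f47e05019fd9" \
              "I'm f47e05019fd9" "I'm cc9f97cc5056" | tally_responses
```

On a swarm node, something like `probe_service 127.0.0.1:8000 10 | tally_responses` would give the same kind of summary for live traffic. With the replayed data, each of the two containers is counted twice.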

Why can swarm-worker1 reach port 8000 even though no whoami task runs on it?

The local forwarding rules can be seen with iptables:

    iie4bu@swarm-worker1:~$ sudo iptables -nL -t nat
    [sudo] password for iie4bu:
    Chain PREROUTING (policy ACCEPT)
    target     prot opt source               destination
    DOCKER-INGRESS  all  --  0.0.0.0/0            0.0.0.0/0            ADDRTYPE match dst-type LOCAL
    DOCKER     all  --  0.0.0.0/0            0.0.0.0/0            ADDRTYPE match dst-type LOCAL
    Chain INPUT (policy ACCEPT)
    target     prot opt source               destination
    Chain OUTPUT (policy ACCEPT)
    target     prot opt source               destination
    DOCKER-INGRESS  all  --  0.0.0.0/0            0.0.0.0/0            ADDRTYPE match dst-type LOCAL
    DOCKER     all  --  0.0.0.0/0           !127.0.0.0/8          ADDRTYPE match dst-type LOCAL
    Chain POSTROUTING (policy ACCEPT)
    target     prot opt source               destination
    MASQUERADE  all  --  0.0.0.0/0            0.0.0.0/0            ADDRTYPE match src-type LOCAL
    MASQUERADE  all  --  172.17.0.0/16        0.0.0.0/0
    MASQUERADE  all  --  172.19.0.0/16        0.0.0.0/0
    MASQUERADE  all  --  172.18.0.0/16        0.0.0.0/0
    MASQUERADE  tcp  --  172.17.0.2           172.17.0.2           tcp dpt:443
    MASQUERADE  tcp  --  172.17.0.2           172.17.0.2           tcp dpt:80
    MASQUERADE  tcp  --  172.17.0.2           172.17.0.2           tcp dpt:22
    Chain DOCKER (2 references)
    target     prot opt source               destination
    RETURN     all  --  0.0.0.0/0            0.0.0.0/0
    RETURN     all  --  0.0.0.0/0            0.0.0.0/0
    RETURN     all  --  0.0.0.0/0            0.0.0.0/0
    DNAT       tcp  --  0.0.0.0/0            0.0.0.0/0            tcp dpt:443 to:172.17.0.2:443
    DNAT       tcp  --  0.0.0.0/0            0.0.0.0/0            tcp dpt:81 to:172.17.0.2:80
    DNAT       tcp  --  0.0.0.0/0            0.0.0.0/0            tcp dpt:23 to:172.17.0.2:22
    Chain DOCKER-INGRESS (2 references)
    target     prot opt source               destination
    DNAT       tcp  --  0.0.0.0/0            0.0.0.0/0            tcp dpt:8000 to:172.19.0.2:8000
    RETURN     all  --  0.0.0.0/0            0.0.0.0/0

Look at the DOCKER-INGRESS chain: its rule says that traffic to TCP port 8000 is forwarded (DNAT) to 172.19.0.2:8000. So what is 172.19.0.2:8000?
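In a long NAT listing the one relevant rule is easy to miss. As a rough sketch (the function names `ingress_dnat_rules` and `sample_nat_output` are made up for illustration, and the sample is an excerpt of the listing above), the DNAT rules of the DOCKER-INGRESS chain can be pulled out with awk:

```shell
# Print "<published port> <DNAT target>" for every DNAT rule in the
# DOCKER-INGRESS chain of `iptables -nL -t nat` output read from stdin.
ingress_dnat_rules() {
  awk '
    /^Chain / { in_chain = ($2 == "DOCKER-INGRESS"); next }
    in_chain && $1 == "DNAT" {
      port = ""; target = ""
      for (i = 1; i <= NF; i++) {
        if ($i ~ /^dpt:/) port = substr($i, 5)     # strip "dpt:"
        if ($i ~ /^to:/)  target = substr($i, 4)   # strip "to:"
      }
      if (port != "" && target != "") print port, target
    }
  '
}

# Excerpt of the listing above, used here as sample input.
sample_nat_output() {
  cat <<'EOF'
Chain DOCKER (2 references)
target     prot opt source               destination
DNAT       tcp  --  0.0.0.0/0            0.0.0.0/0            tcp dpt:81 to:172.17.0.2:80
Chain DOCKER-INGRESS (2 references)
target     prot opt source               destination
DNAT       tcp  --  0.0.0.0/0            0.0.0.0/0            tcp dpt:8000 to:172.19.0.2:8000
RETURN     all  --  0.0.0.0/0            0.0.0.0/0
EOF
}

sample_nat_output | ingress_dnat_rules
```

For the sample this prints `8000 172.19.0.2:8000`; rules in the ordinary DOCKER chain are ignored. On a real node the input would presumably come from `sudo iptables -nL -t nat | ingress_dnat_rules`.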

First look at the local IP addresses:

    iie4bu@swarm-worker1:~$ ifconfig
    br-3f2fc691f5da Link encap:Ethernet  HWaddr 02:42:c8:f4:03:ad
              inet addr:172.18.0.1  Bcast:172.18.255.255  Mask:255.255.0.0
              UP BROADCAST MULTICAST  MTU:1500  Metric:1
              RX packets:0 errors:0 dropped:0 overruns:0 frame:0
              TX packets:0 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:0
              RX bytes:0 (0.0 B)  TX bytes:0 (0.0 B)
    docker0   Link encap:Ethernet  HWaddr 02:42:43:69:b7:60
              inet addr:172.17.0.1  Bcast:172.17.255.255  Mask:255.255.0.0
              inet6 addr: fe80::42:43ff:fe69:b760/64 Scope:Link
              UP BROADCAST RUNNING MULTICAST  MTU:1500  Metric:1
              RX packets:0 errors:0 dropped:0 overruns:0 frame:0
              TX packets:83 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:0
              RX bytes:0 (0.0 B)  TX bytes:9208 (9.2 KB)
    docker_gwbridge Link encap:Ethernet  HWaddr 02:42:41:bf:4d:15
              inet addr:172.19.0.1  Bcast:172.19.255.255  Mask:255.255.0.0
              inet6 addr: fe80::42:41ff:febf:4d15/64 Scope:Link
              UP BROADCAST RUNNING MULTICAST  MTU:1500  Metric:1
              RX packets:42 errors:0 dropped:0 overruns:0 frame:0
              TX packets:142 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:0
              RX bytes:3556 (3.5 KB)  TX bytes:13857 (13.8 KB)
    ......
    ......

There is a local bridge called docker_gwbridge with the IP 172.19.0.1, which is in the same subnet as 172.19.0.2. So we can guess that 172.19.0.2 belongs to something attached to docker_gwbridge. Check with brctl show:

    iie4bu@swarm-worker1:~$ brctl show
    bridge name         bridge id           STP enabled   interfaces
    br-3f2fc691f5da     8000.0242c8f403ad   no
    docker0             8000.02424369b760   no            veth75a496d
    docker_gwbridge     8000.024241bf4d15   no            veth500f4b4
                                                          veth54af5f8

docker_gwbridge has two interfaces. Which of them leads to 172.19.0.2?

List the networks with docker network ls:

    iie4bu@swarm-worker1:~$ docker network ls
    NETWORK ID          NAME                DRIVER              SCOPE
    bdf23298113d        bridge              bridge              local
    969e60257ba5        docker_gwbridge     bridge              local
    cdcffe1b31cb        host                host                local
    uz1kgf9j6m48        ingress             overlay             swarm
    cojus8blvkdo        my-demo             overlay             swarm
    3f2fc691f5da        network_default     bridge              local
    dba4587ee914        none                null                local

Inspect docker_gwbridge in detail:

    iie4bu@swarm-worker1:~$ docker network inspect docker_gwbridge
    [
        {
            "Name": "docker_gwbridge",
            "Id": "969e60257ba50b070374d31ea43a0550d6cd3ae3e68623746642fe8736dee5a4",
            "Created": "2019-04-08T09:47:59.343371327+08:00",
            "Scope": "local",
            "Driver": "bridge",
            "EnableIPv6": false,
            "IPAM": {
                "Driver": "default",
                "Options": null,
                "Config": [
                    {
                        "Subnet": "172.19.0.0/16",
                        "Gateway": "172.19.0.1"
                    }
                ]
            },
            "Internal": false,
            "Attachable": false,
            "Ingress": false,
            "ConfigFrom": {
                "Network": ""
            },
            "ConfigOnly": false,
            "Containers": {
                "5559895ccaea972dad4f2fe52c0ec754d2d7c485dceb35083719768f611552e7": {
                    "Name": "gateway_bf5031da0049",
                    "EndpointID": "6ad54f228134798de719549c0f93c804425beb85dd97f024408c4f7fc393fdf9",
                    "MacAddress": "02:42:ac:13:00:03",
                    "IPv4Address": "172.19.0.3/16",
                    "IPv6Address": ""
                },
                "ingress-sbox": {
                    "Name": "gateway_ingress-sbox",
                    "EndpointID": "177757eca7a18630ae91c01b8ac67bada25ce1ea050dad4ac5cc318093062003",
                    "MacAddress": "02:42:ac:13:00:02",
                    "IPv4Address": "172.19.0.2/16",
                    "IPv6Address": ""
                }
            },
            "Options": {
                "com.docker.network.bridge.enable_icc": "false",
                "com.docker.network.bridge.enable_ip_masquerade": "true",
                "com.docker.network.bridge.name": "docker_gwbridge"
            },
            "Labels": {}
        }
    ]

Two endpoints are attached to docker_gwbridge: gateway_bf5031da0049 and gateway_ingress-sbox, and the IP of gateway_ingress-sbox is exactly 172.19.0.2. In other words, the traffic is forwarded into the ingress-sbox network namespace.
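The inspect output above reports the bridge subnet as 172.19.0.0/16 with gateway 172.19.0.1, which is why 172.19.0.2 was suspected to hang off docker_gwbridge. That subnet reasoning can be checked mechanically. The sketch below uses two illustrative helpers, `ip_to_int` and `same_subnet`, which are assumptions of this example and not Docker tooling; it handles plain dotted-quad IPv4 addresses only.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
# The function body is a subshell, so the IFS change does not leak.
ip_to_int() (
  IFS=.
  set -- $1
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
)

# Succeed when addresses $1 and $2 share the same network of prefix length $3.
same_subnet() (
  mask=$(( (0xFFFFFFFF << (32 - $3)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$1") & mask )) -eq $(( $(ip_to_int "$2") & mask )) ]
)

# Gateway of docker_gwbridge versus the DNAT target, with the /16 prefix
# taken from the "Subnet" field above.
if same_subnet 172.19.0.1 172.19.0.2 16; then
  echo "172.19.0.2 is inside the docker_gwbridge subnet 172.19.0.0/16"
fi
```

The same call with 172.18.0.1 as the second address fails, since that address belongs to the separate 172.18.0.0/16 bridge listed by ifconfig.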

Enter the ingress_sbox network namespace:

    iie4bu@swarm-worker1:~$ sudo ls /var/run/docker/netns
    1-cojus8blvk  1-uz1kgf9j6m  44e6e70b2177  b1ba5b4dd9f2  bf5031da0049  ingress_sbox
    iie4bu@swarm-worker1:~$ sudo nsenter --net=//var/run/docker/netns/ingress_sbox
    -bash: /home/iie4bu/anaconda3/etc/profiel.d/conda.sh: No such file or directory
    CommandNotFoundError: Your shell has not been properly configured to use 'conda activate'.
    If your shell is Bash or a Bourne variant, enable conda for the current user with
        $ echo ". /home/iie4bu/anaconda3/etc/profile.d/conda.sh" >> ~/.bashrc
    or, for all users, enable conda with
        $ sudo ln -s /home/iie4bu/anaconda3/etc/profile.d/conda.sh /etc/profile.d/conda.sh
    The options above will permanently enable the 'conda' command, but they do NOT
    put conda's base (root) environment on PATH. To do so, run
        $ conda activate
    in your terminal, or to put the base environment on PATH permanently, run
        $ echo "conda activate" >> ~/.bashrc
    Previous to conda 4.4, the recommended way to activate conda was to modify PATH in
    your ~/.bashrc file. You should manually remove the line that looks like
        export PATH="/home/iie4bu/anaconda3/bin:$PATH"
    ^^^ The above line should NO LONGER be in your ~/.bashrc file! ^^^
    root@swarm-worker1:~#

We are now inside the ingress_sbox namespace (the conda messages above come from the login shell's profile and have nothing to do with Docker). Check the IP addresses:

    root@swarm-worker1:~# ip a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
    13: eth0@if14: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default
        link/ether 02:42:0a:ff:00:03 brd ff:ff:ff:ff:ff:ff link-netnsid 0
        inet 10.255.0.3/16 brd 10.255.255.255 scope global eth0
           valid_lft forever preferred_lft forever
        inet 10.255.0.168/32 brd 10.255.0.168 scope global eth0
           valid_lft forever preferred_lft forever
    15: eth2@if16: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
        link/ether 02:42:ac:13:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 1
        inet 172.19.0.2/16 brd 172.19.255.255 scope global eth2
           valid_lft forever preferred_lft forever

The eth2 interface in this namespace has the address 172.19.0.2.

To demonstrate LVS, exit the ingress_sbox namespace and install ipvsadm, an administration tool for LVS, on swarm-worker1:

    iie4bu@swarm-worker1:~$ sudo apt-get install ipvsadm

Once it is installed, enter ingress_sbox again and run iptables -nL -t mangle:

    root@swarm-worker1:~# iptables -nL -t mangle
    Chain PREROUTING (policy ACCEPT)
    target     prot opt source               destination
    MARK       tcp  --  0.0.0.0/0            0.0.0.0/0            tcp dpt:8000 MARK set 0x100
    Chain INPUT (policy ACCEPT)
    target     prot opt source               destination
    MARK       all  --  0.0.0.0/0            10.255.0.168         MARK set 0x100
    Chain FORWARD (policy ACCEPT)
    target     prot opt source               destination
    Chain OUTPUT (policy ACCEPT)
    target     prot opt source               destination
    Chain POSTROUTING (policy ACCEPT)
    target     prot opt source               destination

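The MARK rules are what drive the load balancing: packets for TCP port 8000 are tagged with firewall mark 0x100, and IPVS uses that mark to pick the load-balanced service.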
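One detail that is easy to overlook: iptables prints the mark in hexadecimal (0x100), while ipvsadm lists firewall-mark services by their decimal value, so the two only line up after a conversion. A small sketch, with `mark_to_fwm`, `mangle_marks`, and `sample_mangle_rules` as hypothetical helper names and the two MARK rules above as sample input:

```shell
# Convert an iptables mark such as 0x100 to the decimal form used by ipvsadm.
mark_to_fwm() {
  printf '%d\n' "$1"
}

# Print each distinct "MARK set <value>" found in iptables output on stdin.
mangle_marks() {
  awk '{ for (i = 1; i + 2 <= NF; i++)
           if ($i == "MARK" && $(i + 1) == "set") print $(i + 2) }' | sort -u
}

# The two MARK rules from the listing above.
sample_mangle_rules() {
  cat <<'EOF'
MARK       tcp  --  0.0.0.0/0            0.0.0.0/0            tcp dpt:8000 MARK set 0x100
MARK       all  --  0.0.0.0/0            10.255.0.168         MARK set 0x100
EOF
}

sample_mangle_rules | mangle_marks | while read -r mark; do
  echo "mark $mark = FWM $(mark_to_fwm "$mark")"
done
```

Both rules set the same mark, so the loop prints a single line, `mark 0x100 = FWM 256`. That decimal value is the one to look for in the ipvsadm listing.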

Run ipvsadm -l:

    root@swarm-worker1:~# ipvsadm -l
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    FWM  256 rr
      -> 10.255.0.5:0                 Masq    1      0          0
      -> 10.255.0.7:0                 Masq    1      0          0

10.255.0.5 and 10.255.0.7 are the addresses of the two whoami tasks behind the service, as the next two checks confirm.
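To compare the IPVS table against container addresses without reading it by eye, the real servers for a given firewall mark can be extracted from the listing. This is only a sketch: `ipvs_backends` and `sample_ipvs_output` are invented names, and the parser assumes the column layout shown above.

```shell
# Print the real-server IPs listed under firewall-mark service $1
# in `ipvsadm -l` output read from stdin.
ipvs_backends() {
  awk -v fwm="$1" '
    $1 == "FWM" { current = $2; next }
    $1 == "->" && $2 != "RemoteAddress:Port" && current == fwm {
      split($2, hostport, ":")   # "10.255.0.5:0" -> "10.255.0.5"
      print hostport[1]
    }
  '
}

# The listing above, used as sample input.
sample_ipvs_output() {
  cat <<'EOF'
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
FWM  256 rr
  -> 10.255.0.5:0                 Masq    1      0          0
  -> 10.255.0.7:0                 Masq    1      0          0
EOF
}

sample_ipvs_output | ipvs_backends 256
```

For the sample this prints 10.255.0.5 and 10.255.0.7, one per line. When running against a live namespace, `ipvsadm -ln` is probably the safer input, since the numeric flag avoids name lookups on the addresses.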

Check whoami on swarm-manager:

    iie4bu@swarm-manager:~$ docker ps
    CONTAINER ID        IMAGE                   COMMAND             CREATED             STATUS              PORTS               NAMES
    cc9f97cc5056        jwilder/whoami:latest   "/app/http"         19 hours ago        Up 19 hours         8000/tcp            whoami.1.6hhuf528spdw9j9pla7l3tv3t
    iie4bu@swarm-manager:~$ docker exec cc9 ip a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet 10.255.0.168/32 brd 10.255.0.168 scope global lo
           valid_lft forever preferred_lft forever
        inet 10.0.2.5/32 brd 10.0.2.5 scope global lo
           valid_lft forever preferred_lft forever
    18: eth0@if19: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1450 qdisc noqueue state UP
        link/ether 02:42:0a:ff:00:05 brd ff:ff:ff:ff:ff:ff
        inet 10.255.0.5/16 brd 10.255.255.255 scope global eth0
           valid_lft forever preferred_lft forever
    20: eth2@if21: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue state UP
        link/ether 02:42:ac:12:00:03 brd ff:ff:ff:ff:ff:ff
        inet 172.18.0.3/16 brd 172.18.255.255 scope global eth2
           valid_lft forever preferred_lft forever
    23: eth3@if24: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1450 qdisc noqueue state UP
        link/ether 02:42:0a:00:02:07 brd ff:ff:ff:ff:ff:ff
        inet 10.0.2.7/24 brd 10.0.2.255 scope global eth3
           valid_lft forever preferred_lft forever
    iie4bu@swarm-manager:~$

The eth0 address of this whoami container is 10.255.0.5, matching the first IPVS entry.

Check on swarm-worker2:

    iie4bu@swarm-worker2:~$ docker container ls
    CONTAINER ID        IMAGE                   COMMAND                  CREATED             STATUS              PORTS               NAMES
    f47e05019fd9        jwilder/whoami:latest   "/app/http"              20 hours ago        Up 20 hours         8000/tcp            whoami.3.9idgk9jbrlcm3ufvkmbmvv2t8
    633ddfc082b9        busybox:1.28.3          "sh -c 'while true; …"   20 hours ago        Up 20 hours                             client.1.3iv3gworpyr5vdo0h9eortlw0
    iie4bu@swarm-worker2:~$ docker exec -it f47 ip a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet 10.255.0.168/32 brd 10.255.0.168 scope global lo
           valid_lft forever preferred_lft forever
        inet 10.0.2.5/32 brd 10.0.2.5 scope global lo
           valid_lft forever preferred_lft forever
    24: eth3@if25: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1450 qdisc noqueue state UP
        link/ether 02:42:0a:00:02:0d brd ff:ff:ff:ff:ff:ff
        inet 10.0.2.13/24 brd 10.0.2.255 scope global eth3
           valid_lft forever preferred_lft forever
    26: eth2@if27: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue state UP
        link/ether 02:42:ac:12:00:04 brd ff:ff:ff:ff:ff:ff
        inet 172.18.0.4/16 brd 172.18.255.255 scope global eth2
           valid_lft forever preferred_lft forever
    28: eth0@if29: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1450 qdisc noqueue state UP
        link/ether 02:42:0a:ff:00:07 brd ff:ff:ff:ff:ff:ff
        inet 10.255.0.7/16 brd 10.255.255.255 scope global eth0
           valid_lft forever preferred_lft forever

The eth0 address of this whoami container is 10.255.0.7, matching the second IPVS entry.

So once a packet enters ingress_sbox, LVS load-balances it: a request to port 8000 is forwarded to either 10.255.0.5 or 10.255.0.7, and from there it reaches the swarm node that runs the chosen task.
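The `rr` scheduler shown by ipvsadm stands for round robin. As a toy model only (the kernel's IPVS schedules per connection and honours weights, none of which is modelled here), the rotation over the two backends can be sketched as follows; `round_robin` is a hypothetical name.

```shell
# Toy round-robin: request i (counting from 0) goes to backend i mod N.
# Usage: round_robin <number of requests> <backend>...
round_robin() (
  requests=$1
  shift
  [ "$#" -gt 0 ] || exit 1          # need at least one backend
  awk -v n="$requests" 'BEGIN {
    count = ARGC - 1                # backends arrive as awk arguments
    for (i = 0; i < n; i++)
      print "request " (i + 1) " -> " ARGV[1 + i % count]
  }' "$@"
)

round_robin 4 10.255.0.5 10.255.0.7
```

With two backends the four requests alternate between 10.255.0.5 and 10.255.0.7, which mirrors the alternating container IDs seen in the curl output earlier.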

