Scraping second-hand housing listings from Lianjia

import requests

import re

from bs4 import BeautifulSoup

import csv

url = ['https://cq.lianjia.com/ershoufang/']

for i in range(2,101):

url.append('https://cq.lianjia.com/ershoufang/pg%s/'%(str(i)))

# 模擬谷歌瀏覽器

headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/69.0.3497.100 Safari/537.36'}

for u in url:

r = requests.get(u,headers=headers)

soup = BeautifulSoup(r.text,'lxml').find_all('li', class_='clear LOGCLICKDATA')

for i in soup:

ns = i.select('div[class="positionInfo"]')[0].get_text()

region = ns.split('-')[1].replace(' ','').encode('gbk')

rem = ns.split('-')[0].replace(' ','').encode('gbk')

ns = i.select('div[class="houseInfo"]')[0].get_text()

xiaoqu_name = ns.split('|')[0].replace(' ','').encode('gbk')

huxing = ns.split('|')[1].replace(' ','').encode('gbk')

pingfang = ns.split('|')[2].replace(' ','').encode('gbk')

chaoxiang = ns.split('|')[3].replace(' ','').encode('gbk')

zhuangxiu = ns.split('|')[4].replace(' ','').encode('gbk')

danjia = re.findall("\d+",i.select('div[class="unitPrice"]')[0].string)[0]

zongjia = i.select('div[class="totalPrice"]')[0].get_text().encode('gbk')

out=open("/data/data.csv",'a')

csv_write=csv.writer(out)

data = [region,xiaoqu_name,rem,huxing,pingfang,chaoxiang,zhuangxiu,danjia,zongjia]

csv_write.writerow(data)

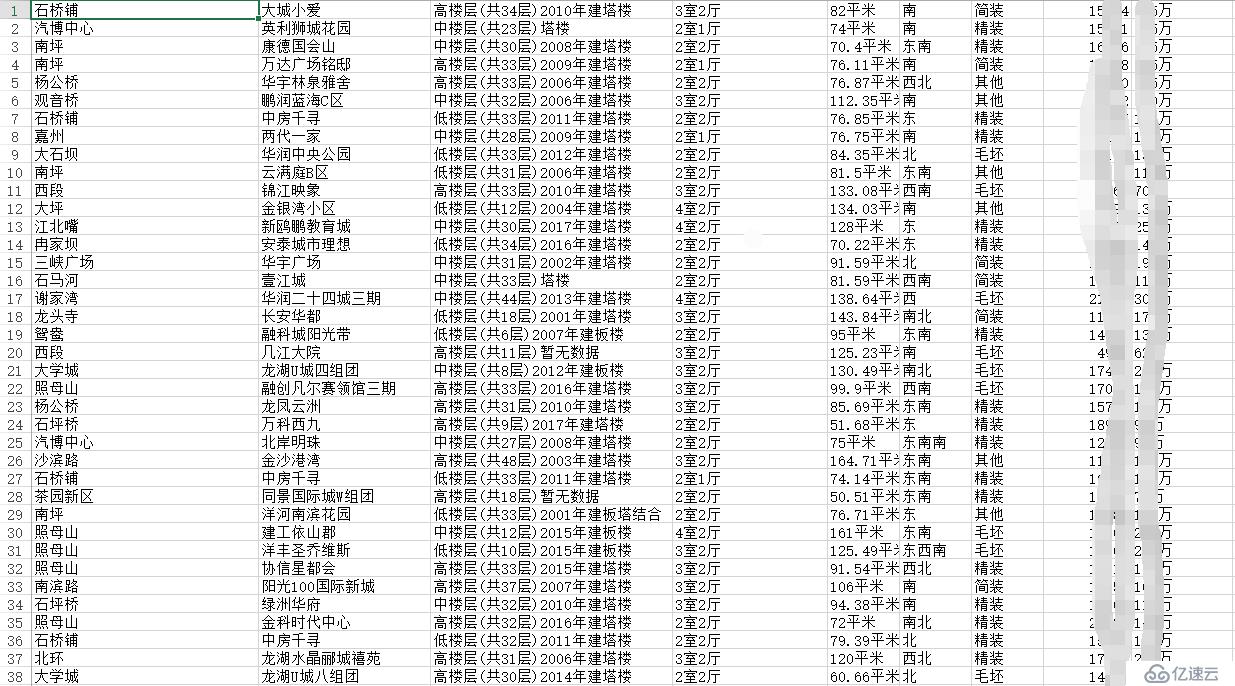

out.close()數據結果
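The field extraction in the script indexes directly into the result of `split('|')`, so a listing with fewer than five parts would raise an `IndexError`. The following is a minimal sketch of one way to make that step more tolerant, not part of the original script. The helper names `parse_house_info` and `parse_unit_price`, the `HOUSE_FIELDS` list, and the sample strings are all hypothetical and chosen only for illustration; the real page text may be laid out differently.

```python
import re

# Field names reused from the script above; the order is an assumption
# taken from the indexes the script uses (0 to 4).
HOUSE_FIELDS = ['xiaoqu_name', 'huxing', 'pingfang', 'chaoxiang', 'zhuangxiu']


def parse_house_info(text):
    """Split a houseInfo string on '|' and map the parts to named fields."""
    parts = [p.replace(' ', '') for p in text.split('|')]
    # Pad with empty strings so a short listing does not raise IndexError
    parts += [''] * (len(HOUSE_FIELDS) - len(parts))
    # zip stops at the shorter sequence, so extra parts are ignored
    return dict(zip(HOUSE_FIELDS, parts))


def parse_unit_price(text):
    """Return the first run of digits in the text, or '' if there is none."""
    match = re.search(r'\d+', text)
    return match.group(0) if match else ''


if __name__ == '__main__':
    # Hypothetical sample strings, not taken from a real listing
    print(parse_house_info('示例小区 | 3室2厅 | 89.5平米'))
    print(parse_unit_price('单价12345元/平米'))
```

With helpers like these, the inner loop could build each CSV row from the returned dictionary, and a listing with missing parts would produce empty cells instead of stopping the whole run.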
