This article walks through installing and configuring Hadoop 2.7.1 as a distributed cluster.

Environment: VirtualBox 5 with three virtual machines (bd01 as the master, bd02 and bd03 as slaves), CentOS 7, Hadoop 2.7.1.

First complete the steps in the companion article "Hadoop 2.7.1 Distributed Installation - Preparation".

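Note: starting with Hadoop 2.5.1 you no longer need to compile a 64-bit libhadoop.so.1.0.0 yourself. Earlier releases shipped a 32-bit build, so a 64-bit library had to be compiled from source; see "Hadoop 2.4.1 Distributed Installation" for details.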
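Hadoop 2.x can report whether its native library loads with `hadoop checknative -a`. The sketch below is a quicker way to confirm the library's bitness before Hadoop is configured. It reads the ELF header of the file directly: byte 5 (EI_CLASS) is 1 for a 32-bit build and 2 for a 64-bit build. The function name `elf_class` is hypothetical. The usage path assumes the tarball's usual `lib/native/libhadoop.so.1.0.0` layout.

```shell
#!/usr/bin/env bash
# elf_class FILE
# Prints "32" or "64" depending on the ELF header's EI_CLASS byte,
# or "not-elf" if the file does not start with the ELF magic number.
elf_class() {
  local f="$1" magic cls
  # The first four bytes of every ELF file are 0x7f 'E' 'L' 'F'
  magic=$(od -An -t x1 -N4 "$f" | tr -d ' \n')
  if [ "$magic" != "7f454c46" ]; then
    echo "not-elf"
    return 1
  fi
  # Byte at offset 4 (EI_CLASS): 1 = ELFCLASS32, 2 = ELFCLASS64
  cls=$(od -An -t u1 -j4 -N1 "$f" | tr -d ' \n')
  case "$cls" in
    1) echo 32 ;;
    2) echo 64 ;;
    *) echo "unknown"; return 1 ;;
  esac
}

# Usage (path assumed):
#   elf_class /home/wukong/local/hadoop-2.7.1/lib/native/libhadoop.so.1.0.0
```

If the note above holds for your download, the usage line should print 64.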

Download the Hadoop tarball from the Apache Hadoop website and extract it to the local directory /home/wukong/local/hadoop-2.7.1/.

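Do this on the machine that will act as the NameNode: download the tarball with wget (or any other method) and extract it into the chosen local directory. For the download and extract commands, see "Common Linux Commands".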
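Here is a minimal sketch of the download-and-extract step. It assumes the release comes from the Apache release archive; the URL pattern is an assumption, so substitute whichever mirror you use. It also assumes the tarball unpacks to a `hadoop-<version>` directory. Both function names are hypothetical, and `download_and_extract` is only defined here, not run.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for fetching and unpacking a Hadoop release.

# Assumed URL layout of the Apache release archive; change it for another mirror.
hadoop_tarball_url() {
  local version="$1"
  echo "https://archive.apache.org/dist/hadoop/common/hadoop-${version}/hadoop-${version}.tar.gz"
}

# download_and_extract VERSION DEST_PARENT
# Downloads the tarball to /tmp and unpacks it under DEST_PARENT,
# which should produce DEST_PARENT/hadoop-VERSION.
download_and_extract() {
  local version="$1" dest_parent="$2"
  local tarball="/tmp/hadoop-${version}.tar.gz"
  mkdir -p "$dest_parent" || return 1
  wget -O "$tarball" "$(hadoop_tarball_url "$version")" || return 1
  tar -xzf "$tarball" -C "$dest_parent" || return 1
}

# Usage: download_and_extract 2.7.1 /home/wukong/local
```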

Seven configuration files need to be edited, all located in /home/wukong/local/hadoop-2.7.1/etc/hadoop:

hadoop-env.sh

    # Required
    # The java implementation to use.
    export JAVA_HOME=/opt/jdk1.7.0_79

    # Optional. These are virtual machines, so allocate less memory
    # The maximum amount of heap to use, in MB. Default is 1000.
    export HADOOP_HEAPSIZE=500
    export HADOOP_NAMENODE_INIT_HEAPSIZE="100"
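Every daemon reads JAVA_HOME from this file, so it is worth confirming that the path really contains a java binary on each node. This sketch assumes the last uncommented `export JAVA_HOME=` line is the one that takes effect. Both function names are hypothetical.

```shell
#!/usr/bin/env bash
# java_home_from_env_file FILE
# Prints the value of the last uncommented "export JAVA_HOME=..." line,
# with surrounding double quotes removed.
java_home_from_env_file() {
  grep -E '^[[:space:]]*export[[:space:]]+JAVA_HOME=' "$1" \
    | tail -n 1 \
    | sed -E 's/^[[:space:]]*export[[:space:]]+JAVA_HOME=//; s/^"(.*)"$/\1/'
}

# check_java_home DIR
# Succeeds if DIR/bin/java exists and is executable.
check_java_home() {
  local jh="$1"
  if [ -z "$jh" ]; then
    echo "JAVA_HOME is empty"
    return 1
  fi
  if [ ! -x "$jh/bin/java" ]; then
    echo "no executable java in $jh/bin"
    return 1
  fi
  echo "ok: $jh"
}

# Usage:
#   check_java_home "$(java_home_from_env_file /home/wukong/local/hadoop-2.7.1/etc/hadoop/hadoop-env.sh)"
```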

yarn-env.sh

    # some Java parameters
    export JAVA_HOME=/opt/jdk1.7.0_79
    if [ "$JAVA_HOME" != "" ]; then
      #echo "run java in $JAVA_HOME"
      JAVA_HOME=$JAVA_HOME
    fi

    if [ "$JAVA_HOME" = "" ]; then
      echo "Error: JAVA_HOME is not set."
      exit 1
    fi

    JAVA=$JAVA_HOME/bin/java
    # The default heap max is 1000m; these VMs don't have that much memory, so it is reduced here
    JAVA_HEAP_MAX=-Xmx600m
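One rough way to sanity-check these numbers against the VMs' RAM is to add up the heap ceilings of the daemons each node runs. The sketch makes two assumptions: each HDFS daemon gets its -Xmx from HADOOP_HEAPSIZE (500 MB here), and each YARN daemon gets it from JAVA_HEAP_MAX (600 MB). It ignores non-heap JVM memory and the memory YARN hands to containers, so treat the result as a lower bound. The daemon layout follows the jps output later in this article, and the function names are hypothetical.

```shell
#!/usr/bin/env bash
# xmx_to_mb VALUE
# Converts a JVM max-heap setting such as -Xmx600m or -Xmx1g to megabytes.
xmx_to_mb() {
  local v="${1#-Xmx}"          # drop the -Xmx prefix if present
  local num="${v%[mMgG]}"      # drop the unit suffix
  case "$v" in
    *[gG]) echo $(( num * 1024 )) ;;
    *[mM]) echo "$num" ;;
    *) echo "unsupported heap value: $1" >&2; return 1 ;;
  esac
}

# sum_mb N...
# Adds up a list of megabyte values.
sum_mb() {
  local total=0 n
  for n in "$@"; do
    total=$(( total + n ))
  done
  echo "$total"
}

hdfs_heap=500                         # HADOOP_HEAPSIZE from hadoop-env.sh
yarn_heap=$(xmx_to_mb -Xmx600m)       # JAVA_HEAP_MAX from yarn-env.sh

# Master: NameNode + SecondaryNameNode + ResourceManager
echo "bd01: $(sum_mb "$hdfs_heap" "$hdfs_heap" "$yarn_heap") MB"
# Each slave: DataNode + NodeManager
echo "slave: $(sum_mb "$hdfs_heap" "$yarn_heap") MB"
```

With the values above this prints `bd01: 1600 MB` and `slave: 1100 MB`.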

slaves

    # List the slave nodes by hostname, one per line
    bd02
    bd03
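start-dfs.sh and start-yarn.sh use this file to decide which hosts to ssh into. The helper below parses it roughly the way Hadoop's own scripts do, skipping comments and blank lines. It is slightly stricter in that it also trims surrounding whitespace. That makes it handy for running the same command on every slave. Both function names are hypothetical.

```shell
#!/usr/bin/env bash
# list_slaves FILE
# Prints one hostname per line, skipping comments (# ...) and blank lines.
list_slaves() {
  sed -e 's/#.*$//' \
      -e 's/^[[:space:]]*//' \
      -e 's/[[:space:]]*$//' \
      -e '/^$/d' "$1"
}

# on_all_slaves FILE COMMAND...
# Runs COMMAND on every listed host over ssh.
on_all_slaves() {
  local file="$1" host
  shift
  for host in $(list_slaves "$file"); do
    echo "== $host"
    ssh "$host" "$@"
  done
}

# Usage: on_all_slaves /home/wukong/local/hadoop-2.7.1/etc/hadoop/slaves hostname
```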

core-site.xml

    <configuration>
      <property>
        <name>fs.defaultFS</name>
        <value>hdfs://bd01:9000</value>
      </property>
      <property>
        <name>io.file.buffer.size</name>
        <value>131072</value>
      </property>
      <property>
        <name>hadoop.tmp.dir</name>
        <value>file:/home/wukong/local/hdp-data/tmp</value>
        <description>A base for other temporary directories.</description>
      </property>
      <property>
        <name>hadoop.proxyuser.hduser.hosts</name>
        <value>*</value>
      </property>
      <property>
        <name>hadoop.proxyuser.hduser.groups</name>
        <value>*</value>
      </property>
    </configuration>

The hdp-data directory does not exist by default, so create it yourself.
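Here is a minimal sketch for creating these directories. It takes the same values used in the XML files, with or without the `file:` prefix. `make_hdp_dirs` is a hypothetical name. Run it on each node for the directories that node needs.

```shell
#!/usr/bin/env bash
# make_hdp_dirs PATH...
# Creates each directory (and its parents). A leading "file:" scheme, as used
# in core-site.xml and hdfs-site.xml, is stripped first.
make_hdp_dirs() {
  local d
  for d in "$@"; do
    d="${d#file:}"
    mkdir -p "$d" || return 1
    echo "created $d"
  done
}

# Usage on bd01:
#   make_hdp_dirs file:/home/wukong/local/hdp-data/tmp file:/home/wukong/local/hdp-data/name
```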

hdfs-site.xml

    <configuration>
      <property>
        <name>dfs.namenode.secondary.http-address</name>
        <value>bd01:9001</value>
      </property>
      <property>
        <name>dfs.namenode.name.dir</name>
        <value>file:/home/wukong/local/hdp-data/name</value>
      </property>
      <property>
        <name>dfs.datanode.data.dir</name>
        <value>file:/home/wukong/a_usr/hdp-data/data</value>
      </property>
      <property>
        <name>dfs.replication</name>
        <value>3</value>
      </property>
      <property>
        <name>dfs.webhdfs.enabled</name>
        <value>true</value>
      </property>
    </configuration>
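One thing to double-check here: the slaves file lists only two DataNodes (bd02, bd03), but dfs.replication is 3. HDFS therefore cannot place a third replica of any block and will report blocks as under-replicated. Either lower the value to 2 or add a DataNode.

The sketch below compares the two settings. It assumes the XML uses the simple `<name>`/`<value>` layout shown above. It is a grep/sed approximation, not a real XML parser. Once Hadoop is on PATH, `hdfs getconf -confKey dfs.replication` is the authoritative way to read the effective value. All function names are hypothetical.

```shell
#!/usr/bin/env bash
# get_xml_prop FILE KEY
# Prints the <value> of the <property> whose <name> is KEY.
# Approximation: assumes <name> is followed directly by <value>.
get_xml_prop() {
  local file="$1" key="$2" ekey
  ekey=$(printf '%s' "$key" | sed 's/[.]/\\./g')   # escape dots for the regex
  tr -d '\n' < "$file" \
    | sed -n "s/.*<name>${ekey}<\/name>[[:space:]]*<value>\([^<]*\)<\/value>.*/\1/p"
}

# count_hosts FILE
# Counts non-empty, non-comment lines (e.g. in etc/hadoop/slaves).
count_hosts() {
  awk '{ sub(/#.*/, ""); gsub(/[ \t\r]/, "") } $0 != "" { n++ } END { print n + 0 }' "$1"
}

# check_replication HDFS_SITE SLAVES
check_replication() {
  local rep n
  rep=$(get_xml_prop "$1" dfs.replication)
  rep=${rep:-3}                      # hdfs-default.xml default is 3
  n=$(count_hosts "$2")
  if [ "$rep" -gt "$n" ]; then
    echo "WARN: dfs.replication=$rep but only $n DataNode(s) listed"
    return 1
  fi
  echo "OK: dfs.replication=$rep, $n DataNode(s)"
}

# Usage (from the hadoop-2.7.1 directory):
#   check_replication etc/hadoop/hdfs-site.xml etc/hadoop/slaves
```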

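mapred-site.xml

Hadoop 2.7.1 ships only mapred-site.xml.template in this directory, so copy it to mapred-site.xml before editing.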

    <configuration>
      <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
      </property>
      <property>
        <name>mapreduce.jobhistory.address</name>
        <value>bd01:10020</value>
      </property>
      <property>
        <name>mapreduce.jobhistory.webapp.address</name>
        <value>bd01:19888</value>
      </property>
    </configuration>
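Address-type properties such as mapreduce.jobhistory.webapp.address must have the form host:port. A dot typed in place of the colon (for example `bd01.19888`) is easy to miss. Below is a hypothetical checker for such values. It uses a deliberately simple pattern: hostname characters, a colon, and a port from 1 to 65535.

```shell
#!/usr/bin/env bash
# is_host_port VALUE
# Succeeds if VALUE looks like host:port with a port between 1 and 65535.
is_host_port() {
  local port
  [[ "$1" =~ ^[A-Za-z0-9.-]+:([0-9]{1,5})$ ]] || return 1
  port="${BASH_REMATCH[1]}"
  (( 10#$port >= 1 && 10#$port <= 65535 ))
}

# check_addresses VALUE...
# Prints ok/BAD for each value; returns non-zero if any value is malformed.
check_addresses() {
  local v bad=0
  for v in "$@"; do
    if is_host_port "$v"; then
      echo "ok   $v"
    else
      echo "BAD  $v"
      bad=1
    fi
  done
  return "$bad"
}

# Usage: check_addresses bd01:10020 bd01:19888
```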

yarn-site.xml

    <configuration>
      <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
      </property>
      <property>
        <name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>
        <value>org.apache.hadoop.mapred.ShuffleHandler</value>
      </property>
      <property>
        <name>yarn.resourcemanager.address</name>
        <value>bd01:8032</value>
      </property>
      <property>
        <name>yarn.resourcemanager.scheduler.address</name>
        <value>bd01:8030</value>
      </property>
      <property>
        <name>yarn.resourcemanager.resource-tracker.address</name>
        <value>bd01:8031</value>
      </property>
      <property>
        <name>yarn.resourcemanager.admin.address</name>
        <value>bd01:8033</value>
      </property>
      <property>
        <name>yarn.resourcemanager.webapp.address</name>
        <value>bd01:8088</value>
      </property>
    </configuration>
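All five ResourceManager addresses here share the host bd01. Their ports (8032, 8030, 8031, 8033, 8088) match the defaults in yarn-default.xml, which build each address from yarn.resourcemanager.hostname. Setting that single property to bd01 is therefore an alternative.

To avoid retyping the host, you could instead generate the file from one hostname. The hypothetical script below prints the same properties. It uses the key name `yarn.nodemanager.aux-services.mapreduce_shuffle.class`, the form listed in yarn-default.xml. Back up the existing file before redirecting output over it.

```shell
#!/usr/bin/env bash
# emit_property NAME VALUE
# Prints one <property> element.
emit_property() {
  printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
}

# yarn_site_xml RM_HOST
# Prints a yarn-site.xml whose ResourceManager addresses all point at RM_HOST.
yarn_site_xml() {
  local rm="$1"
  echo '<?xml version="1.0"?>'
  echo '<configuration>'
  emit_property yarn.nodemanager.aux-services mapreduce_shuffle
  emit_property yarn.nodemanager.aux-services.mapreduce_shuffle.class org.apache.hadoop.mapred.ShuffleHandler
  emit_property yarn.resourcemanager.address "$rm:8032"
  emit_property yarn.resourcemanager.scheduler.address "$rm:8030"
  emit_property yarn.resourcemanager.resource-tracker.address "$rm:8031"
  emit_property yarn.resourcemanager.admin.address "$rm:8033"
  emit_property yarn.resourcemanager.webapp.address "$rm:8088"
  echo '</configuration>'
}

# Usage: yarn_site_xml bd01 > /home/wukong/local/hadoop-2.7.1/etc/hadoop/yarn-site.xml
```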

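After editing, copy the configured hadoop-2.7.1 directory to the slave nodes (bd02 and bd03). For remote copy commands, see "Common Linux Commands".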
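Here is a minimal sketch of the copy step. It assumes passwordless ssh from bd01 to the slaves is already set up and that every node uses the same directory layout. Setting `DRY_RUN=1` prints the scp commands instead of running them. The function and variable names are hypothetical.

```shell
#!/usr/bin/env bash
# sync_to_slaves SRC_DIR DEST_PARENT HOST...
# Copies SRC_DIR into DEST_PARENT/ on each HOST with scp -r.
# With DRY_RUN=1 the commands are only printed.
sync_to_slaves() {
  local src="$1" dest_parent="$2" host
  shift 2
  for host in "$@"; do
    ${DRY_RUN:+echo} scp -rq "$src" "$host:$dest_parent/" || return 1
  done
}

# Usage: sync_to_slaves /home/wukong/local/hadoop-2.7.1 /home/wukong/local bd02 bd03
```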

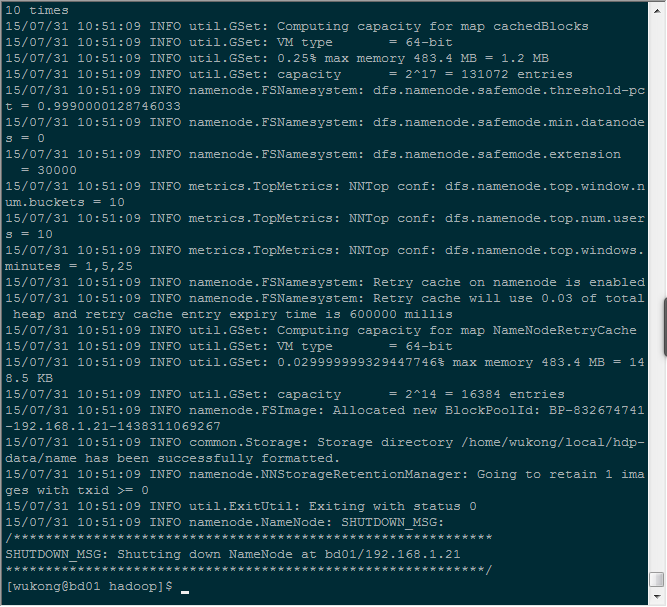

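Format HDFS on the NameNode (bd01):

    [wukong@bd01 hadoop-2.7.1]$ hdfs namenode -format

The format succeeded if the command finishes without throwing an exception and the output contains this line:

    15/07/31 10:51:09 INFO common.Storage: Storage directory /home/wukong/local/hdp-data/name has been successfully formatted.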
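When scripting the format step, the same check can be automated by capturing the command's output and looking for the success message shown above. This sketch assumes the messages go to the console on stderr, which is why the usage line has `2>&1`. It also treats any line containing Exception or ERROR as a failure. `format_succeeded` is a hypothetical name.

```shell
#!/usr/bin/env bash
# format_succeeded LOGFILE
# Succeeds if the log contains the success message and no Exception/ERROR lines.
format_succeeded() {
  local log="$1"
  grep -q 'has been successfully formatted' "$log" || return 1
  if grep -qE 'Exception|ERROR' "$log"; then
    return 1
  fi
  return 0
}

# Usage on bd01:
#   hdfs namenode -format 2>&1 | tee /tmp/nn-format.log
#   format_succeeded /tmp/nn-format.log && echo "format OK"
```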

Start HDFS from bd01:

    [wukong@bd01 ~]$ start-dfs.sh
    Starting namenodes on [bd01]
    bd01: starting namenode, logging to /home/wukong/local/hadoop-2.7.1/logs/hadoop-wukong-namenode-bd01.out
    bd02: starting datanode, logging to /home/wukong/local/hadoop-2.7.1/logs/hadoop-wukong-datanode-bd02.out
    bd03: starting datanode, logging to /home/wukong/local/hadoop-2.7.1/logs/hadoop-wukong-datanode-bd03.out
    Starting secondary namenodes [bd01]
    bd01: starting secondarynamenode, logging to /home/wukong/local/hadoop-2.7.1/logs/hadoop-wukong-secondarynamenode-bd01.out
    [wukong@bd01 ~]$

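Use jps and the logs to check whether startup succeeded. jps lists the Java processes running on a machine. Normally the master runs NameNode and SecondaryNameNode, and each slave runs DataNode.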

    [wukong@bd01 hadoop]$ jps
    5224 Jps
    5074 SecondaryNameNode
    4923 NameNode

    [wukong@bd02 ~]$ jps
    2307 Jps
    2206 DataNode

    [wukong@bd03 ~]$ jps
    2298 Jps
    2198 DataNode
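The jps check can be scripted by looking for the expected process names in the second column of its output. The hypothetical sketch below takes the jps output as text, so it can be fed from a local `jps` or from `ssh bd02 jps`. The remote form assumes jps is on the remote PATH.

```shell
#!/usr/bin/env bash
# missing_daemons JPS_OUTPUT NAME...
# Prints the expected daemon names that do not appear in the jps output
# (space-separated) and succeeds only if none are missing.
missing_daemons() {
  local out="$1" name
  local -a missing=()
  shift
  for name in "$@"; do
    # jps prints "<pid> <MainClass>"; compare the class name exactly
    if ! awk '{ print $2 }' <<<"$out" | grep -qx "$name"; then
      missing+=("$name")
    fi
  done
  echo "${missing[*]}"
  [ "${#missing[@]}" -eq 0 ]
}

# Usage:
#   missing_daemons "$(jps)" NameNode SecondaryNameNode
#   missing_daemons "$(ssh bd02 jps)" DataNode
```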

Next, start YARN from bd01:

    [wukong@bd01 ~]$ start-yarn.sh
    starting yarn daemons
    starting resourcemanager, logging to /home/wukong/local/hadoop-2.7.1/logs/yarn-wukong-resourcemanager-bd01.out
    bd03: starting nodemanager, logging to /home/wukong/local/hadoop-2.7.1/logs/yarn-wukong-nodemanager-bd03.out
    bd02: starting nodemanager, logging to /home/wukong/local/hadoop-2.7.1/logs/yarn-wukong-nodemanager-bd02.out
    [wukong@bd01 ~]$

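Again, verify with jps and the logs. The master should now also run ResourceManager, and each slave should also run NodeManager.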

    [wukong@bd01 ~]$ jps
    5830 ResourceManager
    6106 Jps
    5074 SecondaryNameNode
    4923 NameNode

    [wukong@bd02 ~]$ jps
    4615 Jps
    2206 DataNode
    4502 NodeManager

    [wukong@bd03 ~]$ jps
    4608 Jps
    4495 NodeManager
    2198 DataNode
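For the log side of the check, each daemon writes a .log file next to the .out files listed in the start-up output, under /home/wukong/local/hadoop-2.7.1/logs/. Below is a hypothetical helper that reports which of those .log files contain ERROR or FATAL entries. It assumes the default log4j layout, where the level appears as a separate word after the timestamp.

```shell
#!/usr/bin/env bash
# scan_hadoop_logs DIR
# Prints "<file>: N error line(s)" for every *.log file in DIR that contains
# ERROR or FATAL entries; succeeds only if none do.
scan_hadoop_logs() {
  local dir="$1" f found=0
  for f in "$dir"/*.log; do
    [ -e "$f" ] || continue          # no .log files at all
    if grep -qE ' (ERROR|FATAL) ' "$f"; then
      echo "$(basename "$f"): $(grep -cE ' (ERROR|FATAL) ' "$f") error line(s)"
      found=1
    fi
  done
  return "$found"
}

# Usage: scan_hadoop_logs /home/wukong/local/hadoop-2.7.1/logs || echo "check the files above"
```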

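A common problem is that start-dfs.sh reports "JAVA_HOME is not set and could not be found" on every node, even though `java -version` works in the login shell:

    [wukong@bd01 ~]$ start-dfs.sh
    Starting namenodes on [bd01]
    The authenticity of host 'bd01 (192.168.1.21)' can't be established.
    ECDSA key fingerprint is af:96:74:e1:41:ec:af:ec:d8:8e:df:cd:99:61:33:0d.
    Are you sure you want to continue connecting (yes/no)? yes
    bd01: Warning: Permanently added 'bd01,192.168.1.21' (ECDSA) to the list of known hosts.
    bd01: Error: JAVA_HOME is not set and could not be found.
    bd03: Error: JAVA_HOME is not set and could not be found.
    bd02: Error: JAVA_HOME is not set and could not be found.
    Starting secondary namenodes [bd01]
    bd01: Error: JAVA_HOME is not set and could not be found.
    [wukong@bd01 some_log]$ java -version
    java version "1.7.0_79"
    Java(TM) SE Runtime Environment (build 1.7.0_79-b15)
    Java HotSpot(TM) 64-Bit Server VM (build 24.79-b02, mixed mode)

start-dfs.sh launches each daemon over ssh in a non-login shell, so a JAVA_HOME exported only in a login profile is not visible there. The fix is to set JAVA_HOME to an absolute path in etc/hadoop/hadoop-env.sh (and yarn-env.sh), as in the configuration above, and copy the files to every node. The one-time ssh prompt for bd01 only means its host key was not yet in known_hosts; answering yes is enough.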
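The usual fix for this error is to hard-code an absolute JAVA_HOME in etc/hadoop/hadoop-env.sh on every node instead of relying on the login environment. Here is a sketch of that edit. It assumes GNU or BSD sed, both of which accept `-i.bak` and keep a backup copy. It replaces an existing uncommented `export JAVA_HOME=` line, or appends one if none exists. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# set_java_home ENV_FILE JDK_DIR
# Points JAVA_HOME in ENV_FILE (e.g. hadoop-env.sh) at an absolute JDK path.
# When a line is replaced, the original file is kept as ENV_FILE.bak.
set_java_home() {
  local env_file="$1" jdk="$2"
  if grep -qE '^[[:space:]]*export[[:space:]]+JAVA_HOME=' "$env_file"; then
    sed -i.bak -E "s|^[[:space:]]*export[[:space:]]+JAVA_HOME=.*|export JAVA_HOME=${jdk}|" "$env_file"
  else
    printf 'export JAVA_HOME=%s\n' "$jdk" >> "$env_file"
  fi
}

# Usage: set_java_home /home/wukong/local/hadoop-2.7.1/etc/hadoop/hadoop-env.sh /opt/jdk1.7.0_79
```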

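To run hdfs, start-dfs.sh and the other scripts without typing full paths, add Hadoop's bin and sbin directories to PATH in ~/.bash_profile. Then run `source ~/.bash_profile` or log in again:

    # .bash_profile

    # Get the aliases and functions
    if [ -f ~/.bashrc ]; then
        . ~/.bashrc
    fi

    # Add the Hadoop executables and scripts to PATH
    PATH=$PATH:$HOME/local/hadoop-2.7.1/bin:$HOME/local/hadoop-2.7.1/sbin
    export PATH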
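This profile appends to PATH unconditionally, so sourcing it repeatedly adds duplicate entries. Below is a hypothetical helper that only appends directories that are not already present. It could replace the `PATH=$PATH:...` line if that matters to you.

```shell
#!/usr/bin/env bash
# path_append DIR...
# Appends each DIR to PATH unless it is already one of PATH's entries.
path_append() {
  local d
  for d in "$@"; do
    case ":$PATH:" in
      *":$d:"*) ;;                          # already present, skip
      *) PATH="${PATH:+$PATH:}$d" ;;        # add a colon only if PATH is non-empty
    esac
  done
  export PATH
}

# Usage in ~/.bash_profile:
#   path_append "$HOME/local/hadoop-2.7.1/bin" "$HOME/local/hadoop-2.7.1/sbin"
```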
