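Preface: Suppose you need to find which words occur most often in a 10,000-character article. The key step is word segmentation, that is, splitting a sentence into meaningful words. Take “北京是中華人民共和國的首都” (“Beijing is the capital of the People's Republic of China”): “北京”, “中華人民共和國”, “中華”, “華人”, “人民”, “共和國” and “首都” are words and should be extracted, whereas “京是” and “民共” are meaningless fragments and should not be. Writing these segmentation rules by hand is tedious; the open-source IK analyzer does the job easily, and its two modes let you choose the granularity of the segmentation.

ik_max_word: splits the text at the finest granularity. For example, “中華人民共和國國歌” becomes “中華人民共和國, 中華人民, 中華, 華人, 人民共和國, 人民, 人, 民, 共和國, 共和, 和, 國國, 國歌”, exhausting every possible combination;

ik_smart: splits the text at the coarsest granularity. For example, “中華人民共和國國歌” becomes “中華人民共和國, 國歌”.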
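To make the two granularities concrete, here is a toy Python sketch of dictionary-based segmentation. It is not IK's actual algorithm (IK ships a far larger dictionary and applies its own disambiguation rules); the names `DICTIONARY`, `max_word` and `smart` are invented for this illustration, and the mini-dictionary simply holds the words from the example above.

```python
# Hypothetical mini-dictionary, for illustration only; IK uses its own dictionary files.
DICTIONARY = {
    "中華人民共和國", "中華人民", "中華", "華人", "人民共和國", "人民",
    "人", "民", "共和國", "共和", "和", "國國", "國歌",
}


def max_word(text, dictionary=DICTIONARY):
    """Finest granularity: emit every dictionary word found at every position."""
    max_len = max(len(word) for word in dictionary)
    tokens = []
    for start in range(len(text)):
        longest = min(max_len, len(text) - start)
        # Try longer candidates first so longer words are listed before shorter ones.
        for length in range(longest, 0, -1):
            piece = text[start:start + length]
            if piece in dictionary:
                tokens.append(piece)
    return tokens


def smart(text, dictionary=DICTIONARY):
    """Coarse granularity: greedy longest match, scanning left to right."""
    max_len = max(len(word) for word in dictionary)
    tokens = []
    pos = 0
    while pos < len(text):
        longest = min(max_len, len(text) - pos)
        for length in range(longest, 0, -1):
            piece = text[pos:pos + length]
            if piece in dictionary:
                tokens.append(piece)
                pos += length
                break
        else:
            pos += 1  # no dictionary word starts here; skip this character
    return tokens


TEXT = "中華人民共和國國歌"
print(max_word(TEXT))
print(smart(TEXT))
```

With this mini-dictionary the two calls reproduce the two token lists quoted above, which shows how the same dictionary can yield either an exhaustive or a compact segmentation.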

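1: Prepare the environment

If you already have an Elasticsearch (ES) environment, you can skip the first two steps. Here I assume you only have a freshly installed CentOS 6.x machine, so that you can run through the whole process.

(1) Install the JDK.

$ wget http://download.oracle.com/otn-pub/java/jdk/8u111-b14/jdk-8u111-linux-x64.rpm
$ rpm -ivh jdk-8u111-linux-x64.rpm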

(2) Install Elasticsearch

$ wget https://download.elastic.co/elasticsearch/release/org/elasticsearch/distribution/rpm/elasticsearch/2.4.2/elasticsearch-2.4.2.rpm
$ rpm -iv elasticsearch-2.4.2.rpm

(3) Install the IK analyzer

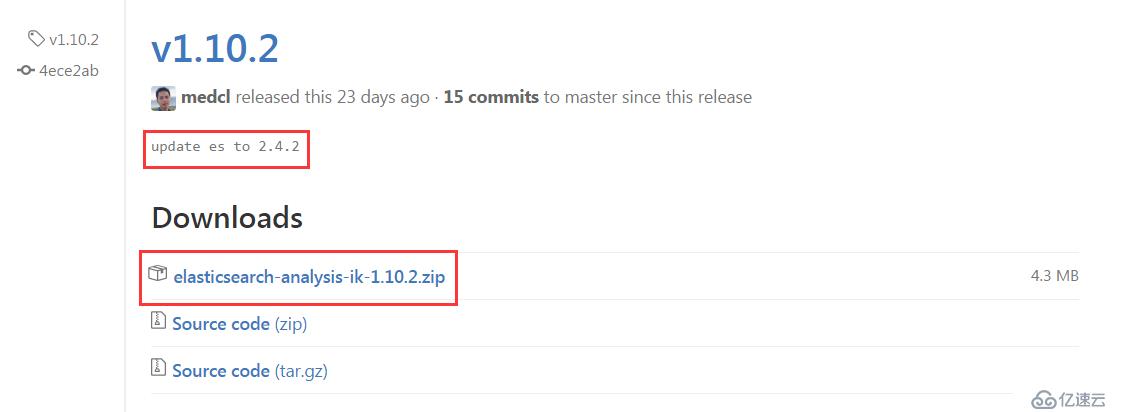

Download version 1.10.2 of the IK analyzer from GitHub. Note: the ES version used here is 2.4.2, and the compatible IK version is 1.10.2.

$ mkdir /usr/share/elasticsearch/plugins/ik
$ wget https://github.com/medcl/elasticsearch-analysis-ik/releases/download/v1.10.2/elasticsearch-analysis-ik-1.10.2.zip
$ unzip elasticsearch-analysis-ik-1.10.2.zip -d /usr/share/elasticsearch/plugins/ik

(4) Configure Elasticsearch

$ vim /etc/elasticsearch/elasticsearch.yml
###### Cluster ######
cluster.name: test
###### Node ######
node.name: test-10.10.10.10
node.master: true
node.data: true
###### Index ######
index.number_of_shards: 5
index.number_of_replicas: 0
###### Path ######
path.data: /data/elk/es
path.logs: /var/log/elasticsearch
path.plugins: /usr/share/elasticsearch/plugins
###### Refresh ######
refresh_interval: 5s
###### Memory ######
bootstrap.mlockall: true
###### Network ######
network.publish_host: 10.10.10.10
network.bind_host: 0.0.0.0
transport.tcp.port: 9300
###### Http ######
http.enabled: true
http.port: 9200
###### IK ########
index.analysis.analyzer.ik.alias: [ik_analyzer]
index.analysis.analyzer.ik.type: ik
index.analysis.analyzer.ik_max_word.type: ik
index.analysis.analyzer.ik_max_word.use_smart: false
index.analysis.analyzer.ik_smart.type: ik
index.analysis.analyzer.ik_smart.use_smart: true
index.analysis.analyzer.default.type: ik

(5) Start Elasticsearch

$ /etc/init.d/elasticsearch start

(6) Check the ES node status

$ curl localhost:9200/_cat/nodes?v   # one node is listed and healthy
host        ip          heap.percent ram.percent load node.role master name
10.10.10.10 10.10.10.10           16          52 0.00 d         *      test-10.10.10.10
$ curl localhost:9200/_cat/health?v   # the cluster status is green
epoch      timestamp cluster status node.total node.data shards pri relo init
1483672233 11:10:33  test    green           1         1      0   0    0    0
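If you would rather script this check than read the table by eye, a minimal Python sketch follows. It assumes ES listens on localhost:9200 as in the commands above and queries the `_cluster/health` endpoint, which reports the same status as JSON; the function names and the retry values are illustrative choices, not part of the original setup.

```python
import json
import time
import urllib.request

ES_URL = "http://localhost:9200"  # assumed address, matching the curl commands


def parse_status(body: bytes) -> str:
    """Extract the status colour from a raw _cluster/health response body."""
    return json.loads(body.decode("utf-8")).get("status", "unknown")


def fetch_status(base_url: str = ES_URL) -> str:
    """Ask the cluster for its health and return 'green', 'yellow' or 'red'."""
    with urllib.request.urlopen(base_url + "/_cluster/health", timeout=5) as resp:
        return parse_status(resp.read())


def wait_for_green(base_url: str = ES_URL, attempts: int = 10, delay: float = 3.0) -> bool:
    """Poll until the cluster is green; give up after `attempts` tries."""
    for _ in range(attempts):
        try:
            if fetch_status(base_url) == "green":
                return True
        except OSError:  # connection refused while ES is still starting
            pass
        time.sleep(delay)
    return False
```

Calling `wait_for_green()` right after starting the service gives the node a little time to come up before the later steps run.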

2: Test the analyzer

(1) Create a test index

$ curl -XPUT http://localhost:9200/test

(2) Create the mapping

$ curl -XPOST http://localhost:9200/test/fulltext/_mapping -d'
{
    "fulltext": {
        "_all": {
            "analyzer": "ik"
        },
        "properties": {
            "content": {
                "type": "string",
                "boost": 8.0,
                "term_vector": "with_positions_offsets",
                "analyzer": "ik",
                "include_in_all": true
            }
        }
    }
}'

(3) Test data

$ curl 'http://localhost:9200/test/_analyze?analyzer=ik&pretty=true' -d '{ "text":"美國留給伊拉克的是個爛攤子嗎" }'

Response:

{

"tokens" : [ {

"token" : "美國",

"start_offset" : 0,

"end_offset" : 2,

"type" : "CN_WORD",

"position" : 0

}, {

"token" : "留給",

"start_offset" : 2,

"end_offset" : 4,

"type" : "CN_WORD",

"position" : 1

}, {

"token" : "伊拉克",

"start_offset" : 4,

"end_offset" : 7,

"type" : "CN_WORD",

"position" : 2

}, {

"token" : "伊",

"start_offset" : 4,

"end_offset" : 5,

"type" : "CN_WORD",

"position" : 3

}, {

"token" : "拉",

"start_offset" : 5,

"end_offset" : 6,

"type" : "CN_CHAR",

"position" : 4

}, {

"token" : "克",

"start_offset" : 6,

"end_offset" : 7,

"type" : "CN_WORD",

"position" : 5

}, {

"token" : "個",

"start_offset" : 9,

"end_offset" : 10,

"type" : "CN_CHAR",

"position" : 6

}, {

"token" : "爛攤子",

"start_offset" : 10,

"end_offset" : 13,

"type" : "CN_WORD",

"position" : 7

}, {

"token" : "攤子",

"start_offset" : 11,

"end_offset" : 13,

"type" : "CN_WORD",

"position" : 8

}, {

"token" : "攤",

"start_offset" : 11,

"end_offset" : 12,

"type" : "CN_WORD",

"position" : 9

}, {

"token" : "子",

"start_offset" : 12,

"end_offset" : 13,

"type" : "CN_CHAR",

"position" : 10

}, {

"token" : "嗎",

"start_offset" : 13,

"end_offset" : 14,

"type" : "CN_CHAR",

"position" : 11

} ]

}

3: Import the real data

(1) Upload the Chinese text file to the Linux machine.

$ cat /tmp/zhongwen.txt
京津冀重污染天氣持續 督查發現有企業惡意生產
《孤芳不自賞》被指“摳像演戲” 制片人:特效不到位
奧巴馬不顧特朗普反對堅持外遷關塔那摩監獄囚犯
. . . .
韓媒:日本叫停韓日貨幣互換磋商 韓財政部表遺憾
中國百萬年薪須交40多萬個稅 精英無奈出國發展

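Note: make sure the text file is encoded as UTF-8; otherwise the text will be garbled once it reaches ES.

$ vim /tmp/zhongwen.txt

In command mode, type :set fileencoding to display the current value; it should read fileencoding=utf-8.

If it reads fileencoding=utf-16le, type :set fileencoding=utf-8 and save the file with :w so that it is rewritten as UTF-8.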
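The same check and conversion can be scripted. The sketch below is one possible approach in Python, assuming the only non-UTF-8 encoding you expect is UTF-16LE as in the case above; `to_utf8_bytes` and `convert_file` are hypothetical helper names.

```python
from pathlib import Path


def to_utf8_bytes(raw: bytes, source_encoding: str = "utf-16-le") -> bytes:
    """Return `raw` unchanged if it is valid UTF-8, else re-encode it from `source_encoding`."""
    try:
        raw.decode("utf-8")
        return raw
    except UnicodeDecodeError:
        text = raw.decode(source_encoding)
        # Drop a leading byte-order mark so it does not end up in the first line.
        return text.lstrip("\ufeff").encode("utf-8")


def convert_file(path: str, source_encoding: str = "utf-16-le") -> bool:
    """Rewrite the file as UTF-8 when needed; return True if it was converted."""
    file_path = Path(path)
    raw = file_path.read_bytes()
    converted = to_utf8_bytes(raw, source_encoding)
    if converted == raw:
        return False
    file_path.write_bytes(converted)
    return True
```

For example, `convert_file("/tmp/zhongwen.txt")` leaves a file that is already UTF-8 untouched. The validity test is only a heuristic: text made purely of ASCII characters stored as UTF-16 would pass it, so check such files by hand.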

(2) Create the index and mapping

Create the index

$ curl -XPUT http://localhost:9200/index

Create the mapping. This sets the analyzer and fielddata options on message, the field that will be tokenized.

$ curl -XPOST http://localhost:9200/index/logs/_mapping -d '
{
    "logs": {
        "_all": {
            "analyzer": "ik"
        },
        "properties": {
            "path": {
                "type": "string"
            },
            "@timestamp": {
                "format": "strict_date_optional_time||epoch_millis",
                "type": "date"
            },
            "@version": {
                "type": "string"
            },
            "host": {
                "type": "string"
            },
            "message": {
                "include_in_all": true,
                "analyzer": "ik",
                "term_vector": "with_positions_offsets",
                "boost": 8,
                "type": "string",
                "fielddata": { "format": "true" }
            },
            "tags": {
                "type": "string"
            }
        }
    }
}'

(3) Use Logstash to write the text file into ES

Install Logstash

Download the Logstash 2.1.1 RPM (logstash-2.1.1.rpm) from the Elastic download site, then install it:

$ rpm -ivh logstash-2.1.1.rpm

Configure Logstash

$ vim /etc/logstash/conf.d/logstash.conf

input {
    file {
        codec => 'json'
        path => "/tmp/zhongwen.txt"
        start_position => "beginning"
    }
}
output {
    elasticsearch {
        hosts => "10.10.10.10:9200"
        index => "index"
        flush_size => 3000
        idle_flush_time => 2
        workers => 4
    }
    stdout { codec => rubydebug }
}

Start Logstash

$ /etc/init.d/logstash start

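Watch the stdout log to confirm that events are being written to ES. Because the file holds plain text while the input uses the json codec, every event is tagged _jsonparsefailure, but the original line is still stored in the message field.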

$ tail -f /var/log/logstash.stdout
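For a small file, an alternative to Logstash is to post the lines yourself through the ES `_bulk` API. The following Python sketch is an assumption-laden illustration rather than the method used in this article: it targets the same `index` index and `logs` type, assumes ES is reachable at localhost:9200, and the helper names are invented.

```python
import json
import urllib.request


def bulk_body(lines, index="index", doc_type="logs"):
    """Build an NDJSON _bulk payload: one action line plus one source line per text line."""
    action = json.dumps({"index": {"_index": index, "_type": doc_type}})
    parts = []
    for line in lines:
        text = line.strip()
        if not text:
            continue  # skip blank lines
        parts.append(action)
        parts.append(json.dumps({"message": text}, ensure_ascii=False))
    # The bulk API requires the payload to end with a newline.
    return ("\n".join(parts) + "\n") if parts else ""


def send_bulk(body, base_url="http://localhost:9200"):
    """POST the payload to _bulk and return the parsed response."""
    request = urllib.request.Request(
        base_url + "/_bulk",
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/x-ndjson"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))
```

A typical call would be `send_bulk(bulk_body(open("/tmp/zhongwen.txt", encoding="utf-8")))`. Unlike Logstash, this stores only the message field, without @timestamp, host or path.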

(4) Check that the index contains data

$ curl 'localhost:9200/_cat/indices/index?v'   # docs.count shows 6007 documents
health status index pri rep docs.count docs.deleted store.size pri.store.size
green  open   index   5   0       6007            0      2.5mb          2.5mb

$ curl -XPOST "http://localhost:9200/index/_search?pretty"

{

"took" : 1,

"timed_out" : false,

"_shards" : {

"total" : 5,

"successful" : 5,

"failed" : 0

},

"hits" : {

"total" : 5227,

"max_score" : 1.0,

"hits" : [ {

"_index" : "index",

"_type" : "logs",

"_id" : "AVluC7Dpbw7ZlXPmUTSG",

"_score" : 1.0,

"_source" : {

"message" : "中國百萬年薪須交40多萬個稅 精英無奈出國發展",

"tags" : [ "_jsonparsefailure" ],

"@version" : "1",

"@timestamp" : "2017-01-05T09:52:56.150Z",

"host" : "0.0.0.0",

"path" : "/tmp/333.log"

}

}, {

"_index" : "index",

"_type" : "logs",

"_id" : "AVluC7Dpbw7ZlXPmUTSN",

"_score" : 1.0,

"_source" : {

"message" : "奧巴馬不顧特朗普反對堅持外遷關塔那摩監獄囚犯",

"tags" : [ "_jsonparsefailure" ],

"@version" : "1",

"@timestamp" : "2017-01-05T09:52:56.222Z",

"host" : "0.0.0.0",

"path" : "/tmp/333.log"

}

}

4: Compute and rank the term frequencies

(1) Top 10 most frequent terms overall

$ curl -XGET "http://localhost:9200/index/_search?pretty" -d'
{
    "size": 0,
    "aggs": {
        "messages": {
            "terms": {
                "size": 10,
                "field": "message"
            }
        }
    }
}'

Response (doc_count is the number of documents that contain the term):

{

"took" : 3,

"timed_out" : false,

"_shards" : {

"total" : 5,

"successful" : 5,

"failed" : 0

},

"hits" : {

"total" : 6007,

"max_score" : 0.0,

"hits" : [ ]

},

"aggregations" : {

"messages" : {

"doc_count_error_upper_bound" : 154,

"sum_other_doc_count" : 94992,

"buckets" : [ {

"key" : "一",

"doc_count" : 1582

}, {

"key" : "后",

"doc_count" : 560

}, {

"key" : "人",

"doc_count" : 541

}, {

"key" : "家",

"doc_count" : 538

}, {

"key" : "出",

"doc_count" : 489

}, {

"key" : "發",

"doc_count" : 451

}, {

"key" : "個",

"doc_count" : 440

}, {

"key" : "州",

"doc_count" : 421

}, {

"key" : "歲",

"doc_count" : 405

}, {

"key" : "子",

"doc_count" : 402

} ]

}

}

}

(2) Top 10 most frequent two-character words

$ curl -XGET "http://localhost:9200/index/_search?pretty" -d'
{
    "size": 0,
    "aggs": {
        "messages": {
            "terms": {
                "size": 10,
                "field": "message",
                "include": "[\u4E00-\u9FA5][\u4E00-\u9FA5]"
            }
        }
    },
    "highlight": {
        "fields": {
            "message": {}
        }
    }
}'

Response:

{

"took" : 22,

"timed_out" : false,

"_shards" : {

"total" : 5,

"successful" : 5,

"failed" : 0

},

"hits" : {

"total" : 6007,

"max_score" : 0.0,

"hits" : [ ]

},

"aggregations" : {

"messages" : {

"doc_count_error_upper_bound" : 73,

"sum_other_doc_count" : 42415,

"buckets" : [ {

"key" : "女子",

"doc_count" : 291

}, {

"key" : "男子",

"doc_count" : 264

}, {

"key" : "竟然",

"doc_count" : 257

}, {

"key" : "上海",

"doc_count" : 255

}, {

"key" : "這個",

"doc_count" : 238

}, {

"key" : "女孩",

"doc_count" : 174

}, {

"key" : "這些",

"doc_count" : 167

}, {

"key" : "一個",

"doc_count" : 159

}, {

"key" : "注意",

"doc_count" : 143

}, {

"key" : "這樣",

"doc_count" : 142

} ]

}

}

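}

(3) Top 10 most frequent two-character words, excluding words that begin with “女”

The exclude pattern has to match the whole term, so “女.*” drops the terms that start with “女”; to drop every term that contains the character, use “.*女.*” instead.

$ curl -XGET "http://localhost:9200/index/_search?pretty" -d'
{
    "size": 0,
    "aggs": {
        "messages": {
            "terms": {
                "size": 10,
                "field": "message",
                "include": "[\u4E00-\u9FA5][\u4E00-\u9FA5]",
                "exclude": "女.*"
            }
        }
    },
    "highlight": {
        "fields": {
            "message": {}
        }
    }
}'

Response: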

{

"took" : 19,

"timed_out" : false,

"_shards" : {

"total" : 5,

"successful" : 5,

"failed" : 0

},

"hits" : {

"total" : 5227,

"max_score" : 0.0,

"hits" : [ ]

},

"aggregations" : {

"messages" : {

"doc_count_error_upper_bound" : 71,

"sum_other_doc_count" : 41773,

"buckets" : [ {

"key" : "男子",

"doc_count" : 264

}, {

"key" : "竟然",

"doc_count" : 257

}, {

"key" : "上海",

"doc_count" : 255

}, {

"key" : "這個",

"doc_count" : 238

}, {

"key" : "這些",

"doc_count" : 167

}, {

"key" : "一個",

"doc_count" : 159

}, {

"key" : "注意",

"doc_count" : 143

}, {

"key" : "這樣",

"doc_count" : 142

}, {

"key" : "重慶",

"doc_count" : 142

}, {

"key" : "結果",

"doc_count" : 137

} ]

}

}

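}

Many more analysis strategies are available, such as synonyms (define “番茄” and “西紅柿” as synonyms, and a search for “番茄” also returns “西紅柿”) and pinyin analysis (a search for “zhonghua” also finds “中華”).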
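Looking back at the three aggregations in part 4, it can help to see what they compute on a tiny data set. The Python sketch below is a local emulation under stated assumptions, not ES code: `top_terms` is a made-up helper, the documents are invented token lists, and ties are broken by term order to keep the output deterministic. It counts each term once per document (as doc_count does) and applies the include and exclude patterns to the whole term.

```python
import re


def top_terms(docs, size=10, include=None, exclude=None):
    """Rank terms by the number of documents containing them.

    docs: list of token lists, one list per document.
    include / exclude: regular expressions that must match the whole term.
    """
    include_re = re.compile(include) if include else None
    exclude_re = re.compile(exclude) if exclude else None
    counts = {}
    for tokens in docs:
        for term in set(tokens):  # a term counts once per document
            if include_re and not include_re.fullmatch(term):
                continue
            if exclude_re and exclude_re.fullmatch(term):
                continue
            counts[term] = counts.get(term, 0) + 1
    # Highest count first; equal counts are ordered by term.
    ranked = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
    return ranked[:size]


# Invented sample data: each inner list stands for the tokens of one headline.
DOCS = [
    ["女子", "上海", "上"],
    ["女子", "男子", "男子"],
    ["男子", "上海", "美女"],
    ["男子", "人"],
]
TWO_CHARS = r"[\u4E00-\u9FA5][\u4E00-\u9FA5]"

print(top_terms(DOCS, include=TWO_CHARS))
print(top_terms(DOCS, include=TWO_CHARS, exclude="女.*"))
print(top_terms(DOCS, include=TWO_CHARS, exclude=".*女.*"))
```

In the sample, “男子” appears twice in one headline but is counted once for it, and “美女” survives the “女.*” exclusion while “.*女.*” removes it.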
