
This post walks through a Python web-scraping example: collecting the job requirements from job postings on zhipin.com.

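The overall process is as follows:

1. Take each job's pid from the job records already stored in MongoDB (the `Python_jobs` collection).
2. Build the job page URL from the pid to get detail_url, and fetch it with requests.get. So that a crashed crawler can simply be restarted, records whose detail has already been crawled are skipped.
3. Request detail_url with requests to get the page HTML (a Response object), which BeautifulSoup will parse. If the crawler runs too fast, the site blocks the IP and you have to wait for the block to lift; the code detects this by checking `html.status_code != 200` and printing `'status_code is {}'.format(html.status_code)`.
4. Parse the HTML into soup with BeautifulSoup.
5. Extract specific elements from soup to get the job information.
6. Save the data to MongoDB.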
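The original code simply stops (`break`) on a non-200 status and leaves waiting out the block to whoever restarts it. A minimal sketch of automating the "wait for the block to lift" step, assuming that a non-200 status means a temporary block and that doubling a wait that starts at 60 seconds is acceptable (both are assumptions, not values from the original). `fetch_with_backoff` is a hypothetical helper; `fetch` is any callable returning an object with a `status_code` attribute, such as a `requests.get` wrapper.

```python
import time
from typing import Any, Callable, Optional


def fetch_with_backoff(
    fetch: Callable[[str], Any],
    url: str,
    max_retries: int = 5,
    base_delay: float = 60.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Optional[Any]:
    """Fetch url, waiting progressively longer while the server answers non-200.

    Returns the first response with status 200, or None if every attempt failed.
    The base delay and retry count are assumed values, not taken from the article.
    """
    delay = base_delay
    for attempt in range(max_retries):
        response = fetch(url)
        if response.status_code == 200:
            return response
        print('status_code is {} (attempt {}/{})'.format(
            response.status_code, attempt + 1, max_retries))
        # Only wait if another attempt will follow.
        if attempt < max_retries - 1:
            sleep(delay)
            delay *= 2  # exponential backoff: 60 s, 120 s, 240 s, ...
    return None
```

In the crawler this would replace the bare request, e.g. `html = fetch_with_backoff(lambda u: requests.get(u, headers=headers), detail_url)`, followed by a `break` only when it returns `None`. Injecting `sleep` keeps the helper testable without actually waiting.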

Code:

```python
# @author: limingxuan
# @contact: limx2011@hotmail.com
# @blog: https://www.jianshu.com/p/a5907362ba72
# @time: 2018-07-21

import time

import requests
from bs4 import BeautifulSoup
from pymongo import MongoClient

headers = {
    'accept': "application/json, text/javascript, */*; q=0.01",
    'accept-encoding': "gzip, deflate, br",
    'accept-language': "zh-CN,zh;q=0.9,en;q=0.8",
    'content-type': "application/x-www-form-urlencoded; charset=UTF-8",
    # Cookie copied from the author's 2018 browser session; replace it with your own
    'cookie': "JSESSIONID=""; __c=1530137184; sid=sem_pz_bdpc_dasou_title; __g=sem_pz_bdpc_dasou_title; __l=r=https%3A%2F%2Fwww.zhipin.com%2Fgongsi%2F5189f3fadb73e42f1HN40t8~.html&l=%2Fwww.zhipin.com%2Fgongsir%2F5189f3fadb73e42f1HN40t8~.html%3Fka%3Dcompany-jobs&g=%2Fwww.zhipin.com%2F%3Fsid%3Dsem_pz_bdpc_dasou_title; Hm_lvt_194df3105ad7148dcf2b98a91b5e727a=1531150234,1531231870,1531573701,1531741316; lastCity=101010100; toUrl=https%3A%2F%2Fwww.zhipin.com%2Fjob_detail%2F%3Fquery%3Dpython%26scity%3D101010100; Hm_lpvt_194df3105ad7148dcf2b98a91b5e727a=1531743361; __a=26651524.1530136298.1530136298.1530137184.286.2.285.199",
    'origin': "https://www.zhipin.com",
    'referer': "https://www.zhipin.com/job_detail/?query=python&scity=101010100",
    'user-agent': "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_13_5) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/67.0.3396.99 Safari/537.36"
}

conn = MongoClient('127.0.0.1', 27017)
db = conn.zhipin_jobs


def init():
    # Load all records up front: with a 40 s pause per page, an open server-side
    # cursor would sit idle long enough to be timed out by MongoDB.
    items = list(db.Python_jobs.find().sort('pid'))
    for item in items:
        if 'detail' in item:  # after a crash, skip pages that were already crawled
            continue
        # Single and double quotes are equivalent; str.format() is the newer formatting style
        detail_url = 'https://www.zhipin.com/job_detail/{}.html'.format(item['pid'])
        # Stage 1: print the URL of the job page
        print(detail_url)
        # html is a requests Response object
        html = requests.get(detail_url, headers=headers)
        if html.status_code != 200:
            print('status_code is {}'.format(html.status_code))
            break
        # soup represents the whole document ('html.parser' is Python's built-in HTML parser)
        soup = BeautifulSoup(html.text, 'html.parser')
        job = soup.select('.job-sec .text')
        print(job)
        # No job-description block on the page (e.g. the posting was removed): skip it,
        # but keep the same request pace
        if len(job) < 1:
            time.sleep(40)
            continue
        item['detail'] = job[0].text.strip()  # job description
        location = soup.select(".job-sec .job-location .location-address")
        item['location'] = location[0].text.strip() if location else ''  # work location
        item['updated_at'] = time.strftime("%Y-%m-%d %H:%M:%S", time.localtime())  # crawl time
        #print(item['detail'])
        #print(item['location'])
        #print(item['updated_at'])
        res = save(item)  # write the record back to MongoDB
        print(res)
        time.sleep(40)  # crawling too fast got the IP banned for 24 hours


# Save the data to MongoDB
def save(item):
    # $set writes every field of item onto the document with the same _id; item came
    # from this collection, so the net change is the new detail/location/updated_at fields
    return db.Python_jobs.update_one({'_id': item['_id']}, {'$set': item})


if __name__ == '__main__':
    init()
```
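The `save()` call passes the whole record to `$set`, which is why it works: every field of `item`, including the unchanged originals and the new `detail`, `location` and `updated_at`, is written to the document matched by `_id`. A small sketch that makes both the resume check and the update explicit, leaving `_id` out of `$set` so the update never touches that immutable field. `pending` and `build_update` are hypothetical helper names, not part of the original code or of PyMongo.

```python
from typing import Any, Dict, List


def pending(items: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Records that still need crawling: those without a 'detail' field."""
    return [item for item in items if 'detail' not in item]


def build_update(item: Dict[str, Any]) -> Dict[str, Any]:
    """Build an update_one() update document that sets every field except _id."""
    return {'$set': {key: value for key, value in item.items() if key != '_id'}}
```

With these, `save()` could become `db.Python_jobs.update_one({'_id': item['_id']}, build_update(item))`. The resume check can also be pushed into the query itself with `db.Python_jobs.find({'detail': {'$exists': False}})`, so already-crawled records are never loaded.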

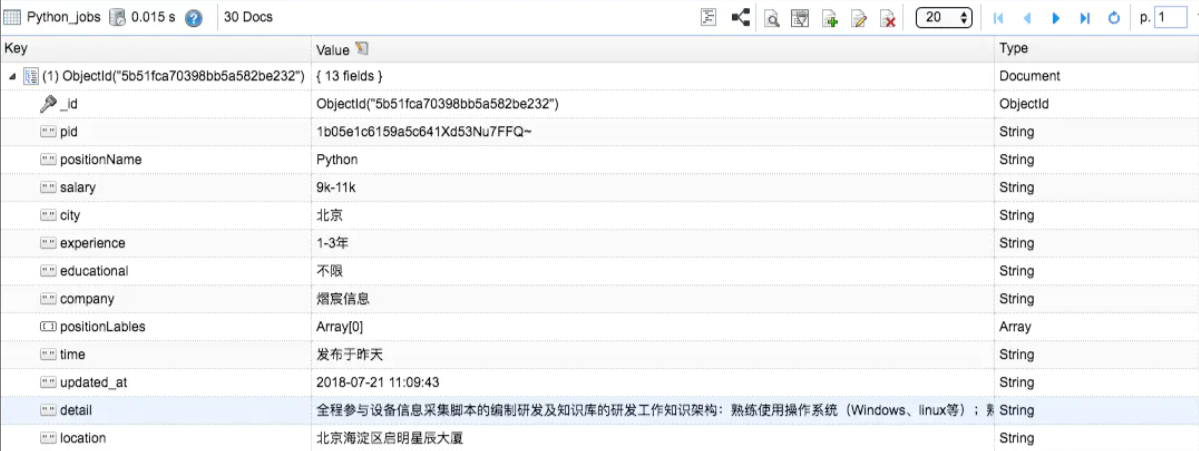

The end result: in MongoBooster, the job records now contain the newly added detail and location fields.
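Besides inspecting the records in MongoBooster, progress can be checked from Python. A minimal sketch assuming the same `zhipin_jobs.Python_jobs` collection and PyMongo 3.7 or later (where `Collection.count_documents` is available); `crawl_progress` is a hypothetical helper.

```python
from typing import Any, Dict


def crawled_filter() -> Dict[str, Any]:
    """Query matching job records that already have a description."""
    return {'detail': {'$exists': True}}


def crawl_progress(collection) -> str:
    """Summarize how many records have been crawled.

    collection is expected to behave like a pymongo Collection.
    """
    done = collection.count_documents(crawled_filter())
    total = collection.count_documents({})
    return '{}/{} job records have a detail field'.format(done, total)
```

Usage, against the database from the article: `print(crawl_progress(MongoClient('127.0.0.1', 27017).zhipin_jobs.Python_jobs))`.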
