
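Master components:

1 kube-apiserver: the single entry point for all resource operations; it also provides authentication, authorization, access control, and API registration and discovery.

2 kube-scheduler: schedules resources, placing Pods onto suitable nodes according to the configured scheduling policy.

3 kube-controller-manager: maintains the cluster state, e.g. failure detection, auto-scaling and rolling updates.

Node components:

1 kubelet: manages the container lifecycle and is also responsible for volume mounting and network management.

2 kube-proxy: provides in-cluster service discovery and load balancing for Services.

Cluster storage and network:

1 etcd: stores the entire cluster state.

2 flannel: provides the Pod network for the cluster.

Add-ons:

1 CoreDNS: provides DNS for the whole cluster.

2 ingress controller: provides external access to services.

3 Prometheus: resource monitoring.

4 dashboard: a graphical user interface.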

| Role | IP address | Components |
|---|---|---|
| master1 | 192.168.1.10 | docker, etcd, kubectl, flannel, kube-apiserver, kube-controller-manager, kube-scheduler |
| master2 | 192.168.1.20 | docker, etcd, kubectl, flannel, kube-apiserver, kube-controller-manager, kube-scheduler |
| node1 | 192.168.1.30 | kubelet, kube-proxy, docker, flannel, etcd |
| node2 | 192.168.1.40 | kubelet, kube-proxy, docker, flannel |
| nginx load balancer | 192.168.1.100 | nginx |

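Prerequisites (on every host):

1 Disable SELinux.

2 Stop the firewalld firewall and disable it at boot.

3 Configure a time synchronization (NTP) server.

4 Configure hostname resolution between the master and node hosts; the entries can go directly into /etc/hosts.

5 Disable swap. The commands below only set vm.swappiness to 0, which makes the kernel avoid swapping but does not turn swap off; run swapoff -a (and comment out the swap entry in /etc/fstab) to disable it completely. The kubelet configuration later in this guide sets failSwapOn: false, so the kubelet still starts if swap stays enabled.

echo "vm.swappiness = 0" >> /etc/sysctl.conf

sysctl -p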
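The hostname resolution in step 4 can be generated rather than typed. A minimal sketch that prints /etc/hosts entries for the machines in the role table; the hostnames master1, master2, node1 and node2 are an assumption taken from the names the later scp commands use.

```shell
# Sketch: print /etc/hosts entries for the hosts in the role table.
# Assumption: hostnames are master1, master2, node1, node2 (the names the
# scp commands in this guide use); adjust them to your environment.
hosts_entries() {
  # printf reuses its format string for every pair of arguments
  printf '%s %s\n' \
    192.168.1.10 master1 \
    192.168.1.20 master2 \
    192.168.1.30 node1 \
    192.168.1.40 node2
}

# Preview the entries; as root, append them on every host with:
#   hosts_entries >> /etc/hosts
hosts_entries
```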

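etcd is a key-value store. Its members elect a leader among themselves, and all of the cluster's state is stored in etcd; the remaining members become followers and replicate the leader's data. Because the cluster only works while a leader can be elected, which requires a majority (quorum) of members, etcd should be deployed with an odd number of members: adding one member to an odd-sized cluster raises the quorum without increasing the number of failures the cluster can tolerate.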

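etcd sizing

Balancing etcd read/write performance against stability, the following sizes are a reasonable choice:

If the Kubernetes cluster has only one or two servers, deploy one etcd member;

If it has three or four servers, deploy three etcd members;

If it has five or six servers, deploy five etcd members.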
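The odd sizes follow from the quorum rule: a cluster of n members needs floor(n/2)+1 votes to elect a leader and survives n minus that many failures. A small sketch (the function names are illustrative) that prints both numbers for 1 to 6 members:

```shell
# Sketch: quorum size and tolerated failures for an etcd cluster of n members.
# A leader needs votes from a majority: quorum = floor(n/2) + 1.
quorum() { echo $(( $1 / 2 + 1 )); }

# Members that can fail while the cluster still has a quorum.
tolerance() { echo $(( $1 - ($1 / 2 + 1) )); }

for n in 1 2 3 4 5 6; do
  echo "members=$n quorum=$(quorum "$n") tolerates=$(tolerance "$n")"
done
```

The output shows that 4 members tolerate one failure, the same as 3, and 6 tolerate two, the same as 5, which is why the sizing list rounds down to an odd number.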

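etcd members communicate with each other over point-to-point HTTPS.

Communication with the outside is likewise encrypted point to point; in a Kubernetes cluster, the other components reach etcd through the apiserver.

Therefore etcd peer traffic needs CA-signed certificates, as does traffic between etcd and its clients, including the API server.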

1 Use cfssl to generate self-signed certificates. Download the tools:

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

2 Make the binaries executable and move them into place:

chmod +x cfssl_linux-amd64 cfssljson_linux-amd64 cfssl-certinfo_linux-amd64

mv cfssl_linux-amd64 /usr/local/bin/cfssl

mv cfssljson_linux-amd64 /usr/local/bin/cfssljson

mv cfssl-certinfo_linux-amd64 /usr/local/bin/cfssl-certinfo

3 Create the following files and generate the certificates:

1 ca-config.json

```json
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "www": {
        "expiry": "87600h",
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ]
      }
    }
  }
}
```

2 ca-csr.json

```json
{
  "CN": "etcd CA",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "Shaanxi",
      "ST": "xi'an"
    }
  ]
}
```

3 server-csr.json

```json
{
  "CN": "etcd",
  "hosts": [
    "192.168.1.10",
    "192.168.1.20",
    "192.168.1.30"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "Shaanxi",
      "ST": "xi'an"
    }
  ]
}
```

4 Generate the certificates:

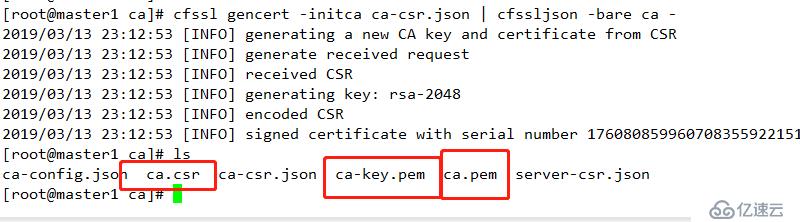

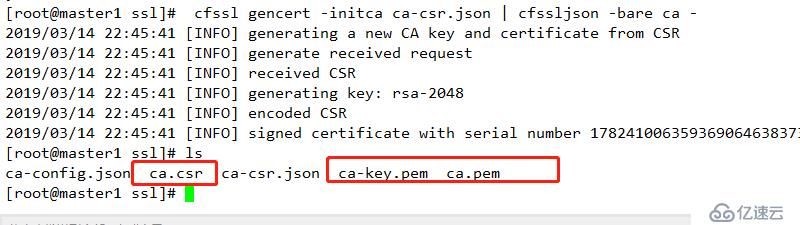

1 Generate the CA certificate:

cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

Result:

2 Issue the etcd server certificate with that CA:

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=www server-csr.json | cfssljson -bare server

Result:

1 Download the etcd release package:

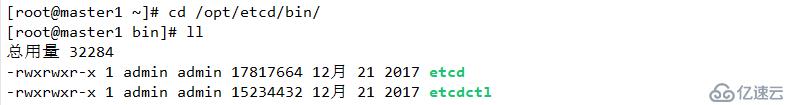

wget https://github.com/etcd-io/etcd/releases/download/v3.2.12/etcd-v3.2.12-linux-amd64.tar.gz

2 Create the configuration directories and extract the binaries:

mkdir /opt/etcd/{bin,cfg,ssl} -p

tar xf etcd-v3.2.12-linux-amd64.tar.gz

mv etcd-v3.2.12-linux-amd64/{etcd,etcdctl} /opt/etcd/bin/

Result:

3 Create the etcd configuration file, /opt/etcd/cfg/etcd:

#[Member]

ETCD_NAME="etcd01"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.1.10:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.1.10:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.1.10:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.1.10:2379"

ETCD_INITIAL_CLUSTER="etcd01=https://192.168.1.10:2380,etcd02=https://192.168.1.20:2380,etcd03=https://192.168.1.30:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

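Parameter reference:

ETCD_NAME # member name

ETCD_DATA_DIR # data directory; stores the member ID, cluster ID and other data

ETCD_LISTEN_PEER_URLS # URL to listen on for traffic from the other members (local IP plus port)

ETCD_LISTEN_CLIENT_URLS # URL to listen on for client traffic

ETCD_INITIAL_ADVERTISE_PEER_URLS # peer URL advertised to the cluster

ETCD_ADVERTISE_CLIENT_URLS # client URL advertised to clients and the other members

ETCD_INITIAL_CLUSTER # addresses of all members of the cluster

ETCD_INITIAL_CLUSTER_TOKEN # cluster token

ETCD_INITIAL_CLUSTER_STATE # state when joining: new for a new cluster, existing to join an existing cluster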
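The three members' files differ only in ETCD_NAME and the member's own IP. A sketch of a hypothetical helper, render_etcd_cfg, that prints the file for one member, assuming the three-member layout used in this guide:

```shell
# Sketch: render /opt/etcd/cfg/etcd for one member. Only ETCD_NAME and the
# member's own IP differ between etcd01, etcd02 and etcd03.
ETCD_CLUSTER="etcd01=https://192.168.1.10:2380,etcd02=https://192.168.1.20:2380,etcd03=https://192.168.1.30:2380"

render_etcd_cfg() {  # usage: render_etcd_cfg NAME IP
  name="$1"
  ip="$2"
  cat <<EOF
#[Member]
ETCD_NAME="${name}"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${ip}:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${ip}:2379"
ETCD_INITIAL_CLUSTER="${ETCD_CLUSTER}"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}

render_etcd_cfg etcd02 192.168.1.20
```

For example, on master2: render_etcd_cfg etcd02 192.168.1.20 > /opt/etcd/cfg/etcd.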

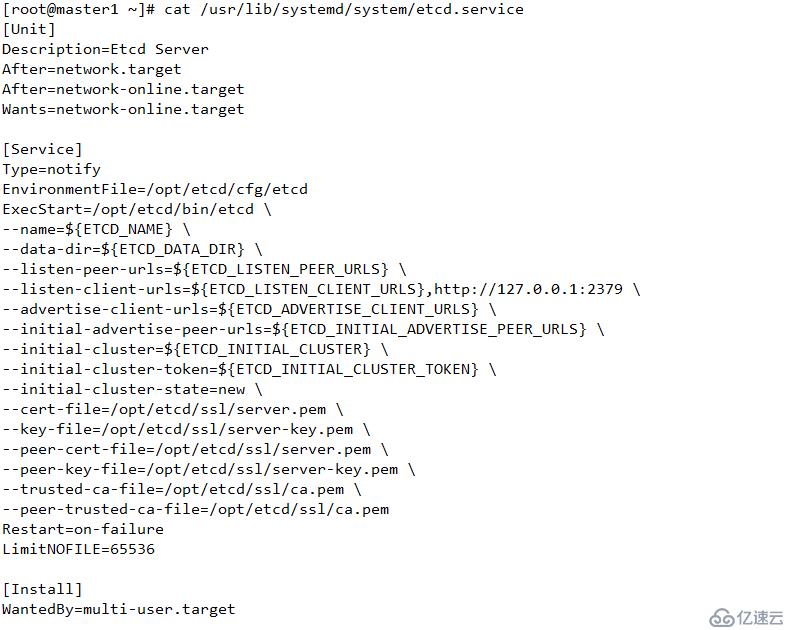

4 Create the systemd unit file etcd.service:

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

EnvironmentFile=/opt/etcd/cfg/etcd

ExecStart=/opt/etcd/bin/etcd \

--name=${ETCD_NAME} \

--data-dir=${ETCD_DATA_DIR} \

--listen-peer-urls=${ETCD_LISTEN_PEER_URLS} \

--listen-client-urls=${ETCD_LISTEN_CLIENT_URLS},http://127.0.0.1:2379 \

--advertise-client-urls=${ETCD_ADVERTISE_CLIENT_URLS} \

--initial-advertise-peer-urls=${ETCD_INITIAL_ADVERTISE_PEER_URLS} \

--initial-cluster=${ETCD_INITIAL_CLUSTER} \

--initial-cluster-token=${ETCD_INITIAL_CLUSTER_TOKEN} \

--initial-cluster-state=${ETCD_INITIAL_CLUSTER_STATE} \

--cert-file=/opt/etcd/ssl/server.pem \

--key-file=/opt/etcd/ssl/server-key.pem \

--peer-cert-file=/opt/etcd/ssl/server.pem \

--peer-key-file=/opt/etcd/ssl/server-key.pem \

--trusted-ca-file=/opt/etcd/ssl/ca.pem \

--peer-trusted-ca-file=/opt/etcd/ssl/ca.pem

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

Result:

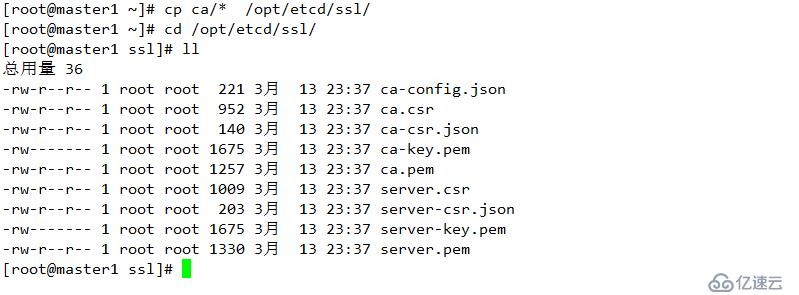

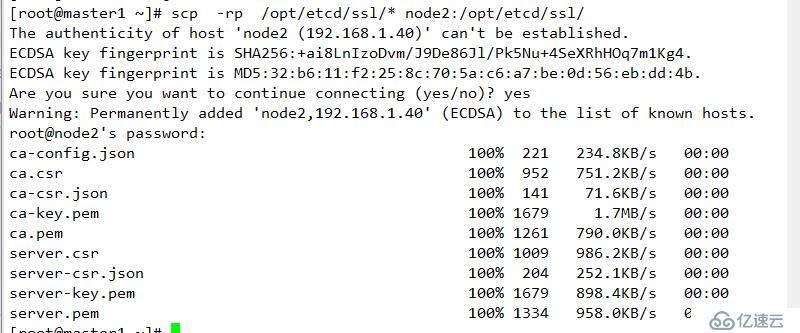

復制密鑰信息到指定位置

master2

1 創建文件

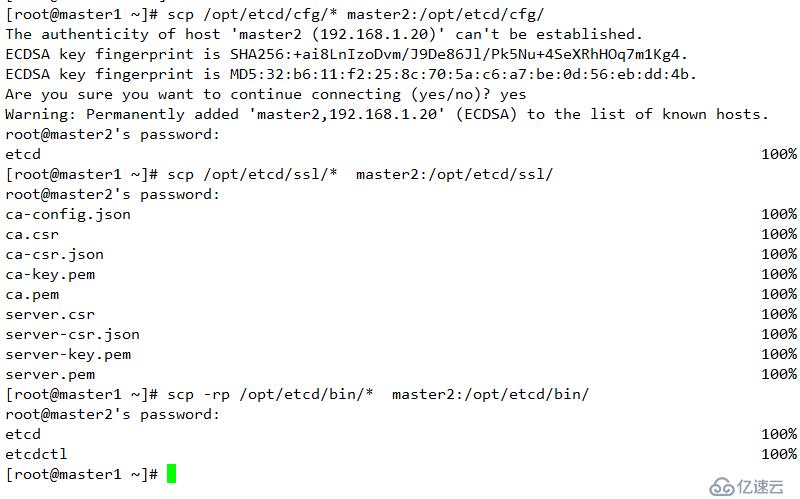

mkdir /opt/etcd/{bin,cfg,ssl} -p2 復制相關配置文件至指定節點master2

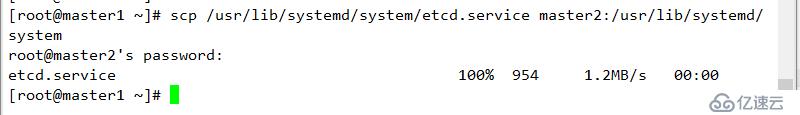

Modify the etcd configuration (/opt/etcd/cfg/etcd) for master2:

#[Member]

ETCD_NAME="etcd02"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.1.20:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.1.20:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.1.20:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.1.20:2379"

ETCD_INITIAL_CLUSTER="etcd01=https://192.168.1.10:2380,etcd02=https://192.168.1.20:2380,etcd03=https://192.168.1.30:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

Result:

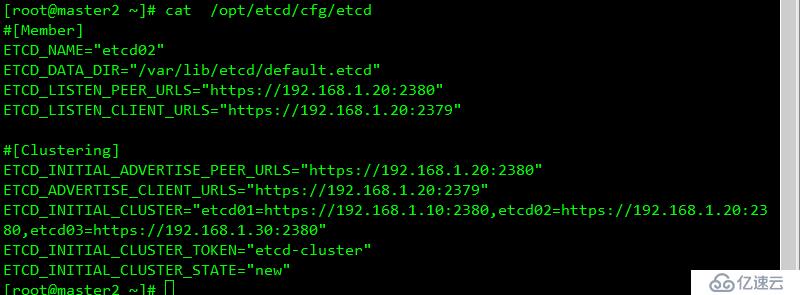

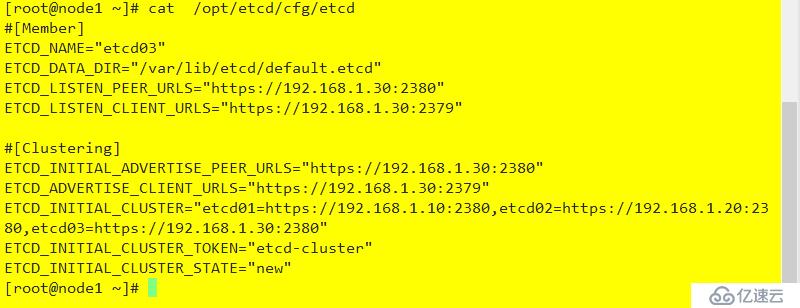

node1 configuration (same steps as for master2):

mkdir /opt/etcd/{bin,cfg,ssl} -p

scp /opt/etcd/cfg/* node1:/opt/etcd/cfg/

scp /opt/etcd/bin/* node1:/opt/etcd/bin/

scp /opt/etcd/ssl/* node1:/opt/etcd/ssl/

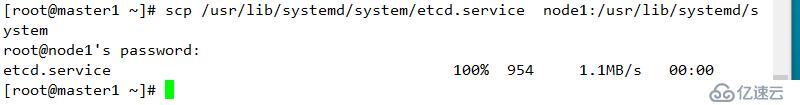

Modify the configuration file:

#[Member]

ETCD_NAME="etcd03"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.1.30:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.1.30:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.1.30:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.1.30:2379"

ETCD_INITIAL_CLUSTER="etcd01=https://192.168.1.10:2380,etcd02=https://192.168.1.20:2380,etcd03=https://192.168.1.30:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

The resulting configuration:

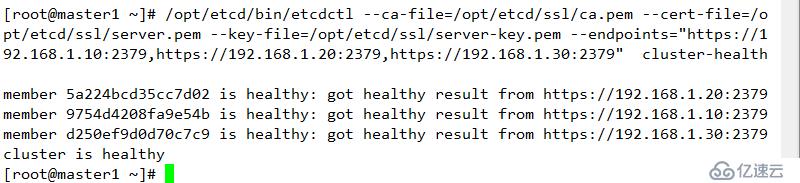

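Start etcd on all three nodes at roughly the same time and enable it at boot. etcd.service uses Type=notify, so systemctl start on the first member does not return until enough peers are up to form a quorum.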

systemctl start etcd

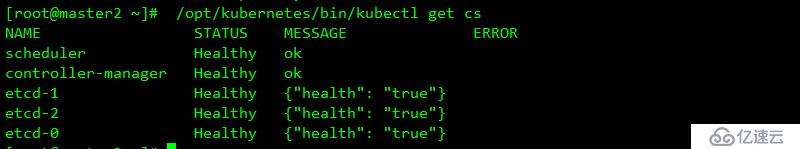

systemctl enable etcd.service

Verify:

/opt/etcd/bin/etcdctl --ca-file=/opt/etcd/ssl/ca.pem --cert-file=/opt/etcd/ssl/server.pem --key-file=/opt/etcd/ssl/server-key.pem --endpoints="https://192.168.1.10:2379,https://192.168.1.20:2379,https://192.168.1.30:2379" cluster-health

Result:

**More on etcd:** https://www.kubernetes.org.cn/5021.html

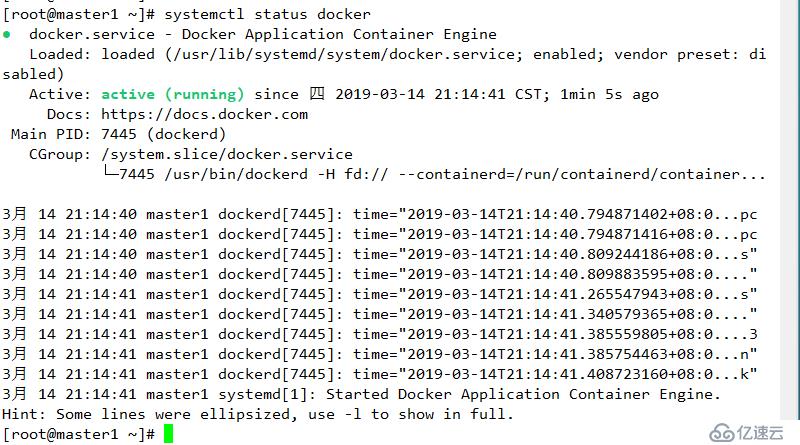

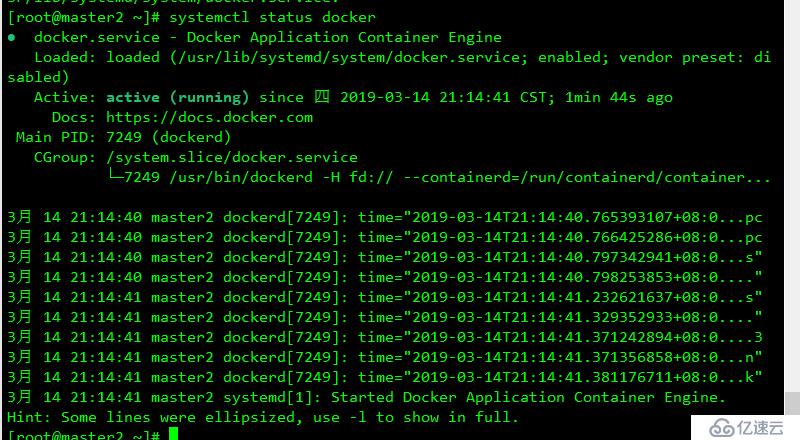

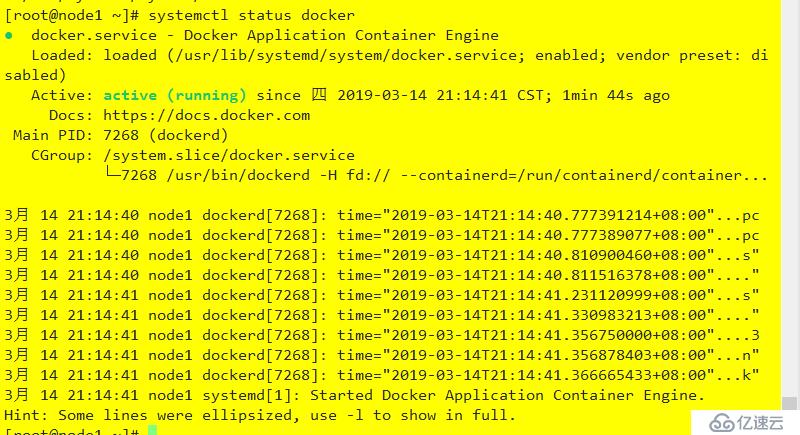
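Every etcdctl call in this guide repeats the same TLS flags and endpoint list. A sketch with two hypothetical helpers: etcd_endpoints builds the --endpoints value from member IPs, and etcdctl_tls forwards its arguments to the etcdctl v3.2 binary with the same v2-style flags used above.

```shell
# Sketch: build the --endpoints value from member IPs (client port 2379).
etcd_endpoints() {
  list=""
  for ip in "$@"; do
    list="${list:+$list,}https://${ip}:2379"   # add a comma after the first entry
  done
  echo "$list"
}

ENDPOINTS="$(etcd_endpoints 192.168.1.10 192.168.1.20 192.168.1.30)"
echo "$ENDPOINTS"

# Hypothetical wrapper: forwards its arguments to etcdctl v3.2 with the same
# v2-API TLS flags as the commands above, e.g. `etcdctl_tls cluster-health`.
etcdctl_tls() {
  /opt/etcd/bin/etcdctl \
    --ca-file=/opt/etcd/ssl/ca.pem \
    --cert-file=/opt/etcd/ssl/server.pem \
    --key-file=/opt/etcd/ssl/server-key.pem \
    --endpoints="$ENDPOINTS" "$@"
}
```

With both defined on an etcd member, the health check above becomes etcdctl_tls cluster-health.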

Install Docker on the master and node hosts:

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

yum install docker-ce -y

curl -sSL https://get.daocloud.io/daotools/set_mirror.sh | sh -s http://bc437cce.m.daocloud.io

systemctl restart docker

systemctl enable docker

Result:

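flannel uses VXLAN (supported by the Linux kernel since 3.7.0) as its default backend. It does not implement network policy, and it uses etcd to keep track of network allocation.

To avoid subnet conflicts, flannel reserves one network range (the one written into etcd below) and then automatically assigns each node's Docker engine its own subnet from that range, persisting the assignments in etcd.

flannel backend modes:

1 vxlan

2 VXLAN with DirectRouting: nodes on the same network communicate through host-gw-style routes, and all other traffic uses VXLAN.

3 host-gw (host gateway): forwards packets directly by adding a route on each node to the destination container subnets. This requires direct layer-2 connectivity between all nodes. host-gw performs well and is easy to set up, but it does not extend across multiple networks.

4 UDP: tunnels traffic in ordinary UDP packets through a TUN device handled by flanneld in user space. Performance is poor, so use it only when neither of the previous modes is supported.

flanneld stores its subnet information in etcd, so make sure it can connect to etcd, and write the predefined network range there first.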

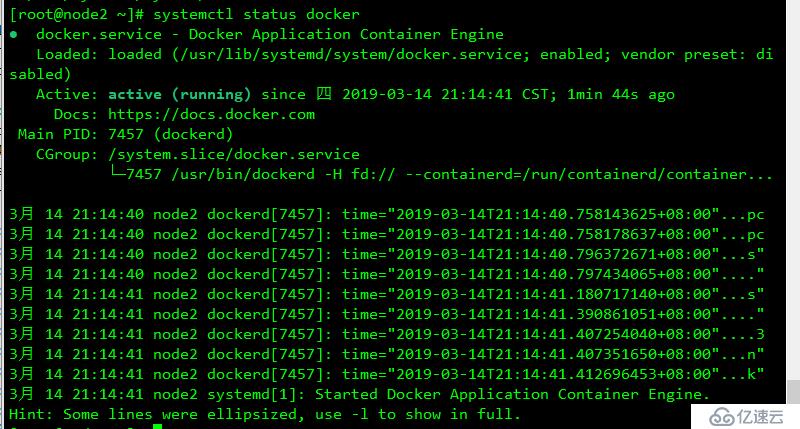

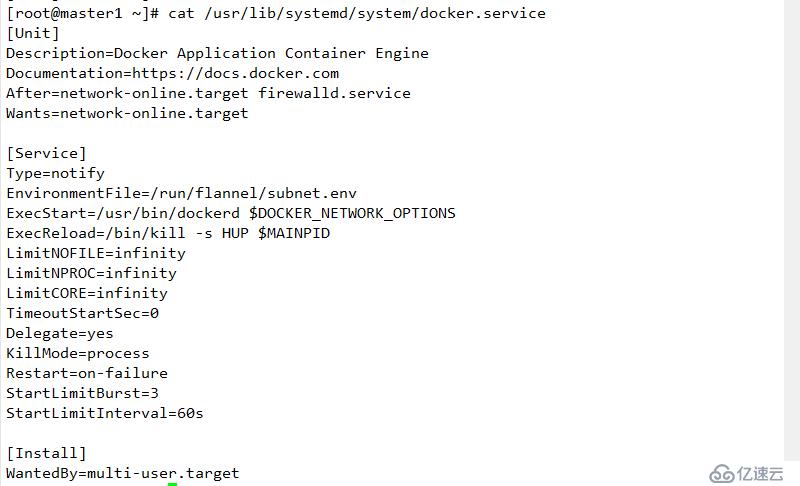

Write the network range and backend type into etcd (once, on any etcd member):

/opt/etcd/bin/etcdctl --ca-file=/opt/etcd/ssl/ca.pem --cert-file=/opt/etcd/ssl/server.pem --key-file=/opt/etcd/ssl/server-key.pem --endpoints="https://192.168.1.10:2379,https://192.168.1.20:2379,https://192.168.1.30:2379" set /coreos.com/network/config '{"Network":"172.17.0.0/16","Backend":{"Type":"vxlan"}}'

Download flannel and install the binaries:

wget https://github.com/coreos/flannel/releases/download/v0.10.0/flannel-v0.10.0-linux-amd64.tar.gz

tar xf flannel-v0.10.0-linux-amd64.tar.gz

mkdir /opt/kubernetes/bin -p

mv flanneld mk-docker-opts.sh /opt/kubernetes/bin/

mkdir /opt/kubernetes/cfg

Create /opt/kubernetes/cfg/flanneld:

FLANNEL_OPTIONS="--etcd-endpoints=https://192.168.1.10:2379,https://192.168.1.20:2379,https://192.168.1.30:2379 -etcd-cafile=/opt/etcd/ssl/ca.pem -etcd-certfile=/opt/etcd/ssl/server.pem -etcd-keyfile=/opt/etcd/ssl/server-key.pem"

Create /usr/lib/systemd/system/flanneld.service:

[Unit]

Description=Flanneld overlay address etcd agent

After=network-online.target network.target

Before=docker.service

[Service]

Type=notify

EnvironmentFile=/opt/kubernetes/cfg/flanneld

ExecStart=/opt/kubernetes/bin/flanneld --ip-masq $FLANNEL_OPTIONS

ExecStartPost=/opt/kubernetes/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/subnet.env

Restart=on-failure

[Install]

WantedBy=multi-user.target

Result:

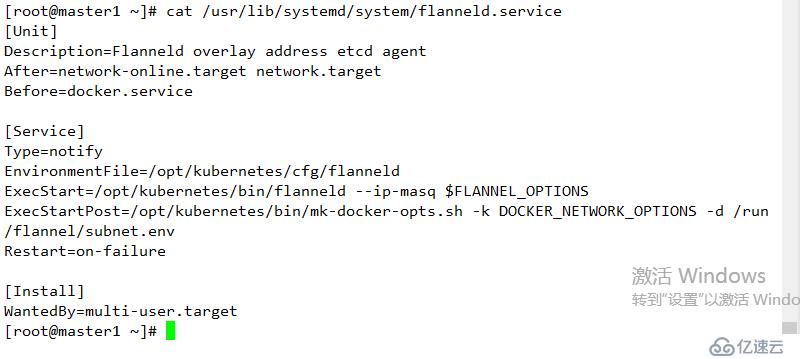

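Then modify /usr/lib/systemd/system/docker.service so that dockerd reads the flannel subnet from /run/flannel/subnet.env:

[Unit]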

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

After=network-online.target firewalld.service

Wants=network-online.target

[Service]

Type=notify

EnvironmentFile=/run/flannel/subnet.env

ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS

ExecReload=/bin/kill -s HUP $MAINPID

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

TimeoutStartSec=0

Delegate=yes

KillMode=process

Restart=on-failure

StartLimitBurst=3

StartLimitInterval=60s

[Install]

WantedBy=multi-user.target

Result:

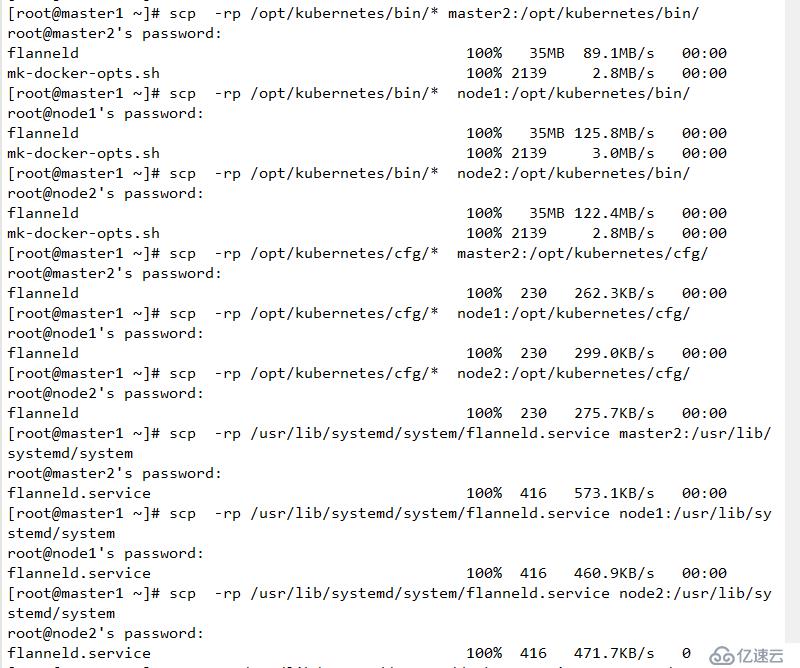
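flanneld.service runs mk-docker-opts.sh to turn flannel's lease into dockerd flags, which docker.service reads as $DOCKER_NETWORK_OPTIONS. The sketch below is a simplified approximation of that conversion, not the real script; docker_opts_from_env is a hypothetical name and the sample values (subnet 172.17.56.1/24, MTU 1450 for VXLAN) are illustrative.

```shell
# Simplified approximation (NOT the real mk-docker-opts.sh): read flannel's
# subnet.env and print the DOCKER_NETWORK_OPTIONS line that dockerd needs.
docker_opts_from_env() {
  . "$1"                      # defines FLANNEL_SUBNET, FLANNEL_MTU, FLANNEL_IPMASQ
  ipmasq=true
  # flanneld already masquerades (--ip-masq), so Docker should not do it too.
  if [ "$FLANNEL_IPMASQ" = "true" ]; then ipmasq=false; fi
  echo "DOCKER_NETWORK_OPTIONS=\" --bip=${FLANNEL_SUBNET} --ip-masq=${ipmasq} --mtu=${FLANNEL_MTU}\""
}

# Illustrative lease as flanneld might write it (example values).
sample="$(mktemp)"
cat > "$sample" <<'EOF'
FLANNEL_NETWORK=172.17.0.0/16
FLANNEL_SUBNET=172.17.56.1/24
FLANNEL_MTU=1450
FLANNEL_IPMASQ=true
EOF
docker_opts_from_env "$sample"
rm -f "$sample"
```

--bip places docker0 inside the node's flannel subnet, which is what the later note about docker0 and flannel.1 being in the same subnet checks.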

1 On the other nodes, create the etcd and kubernetes directories:

mkdir /opt/kubernetes/{cfg,bin} -p

mkdir /opt/etcd/ssl -p

2 Copy the configuration files to those hosts.

Copy the flanneld files:

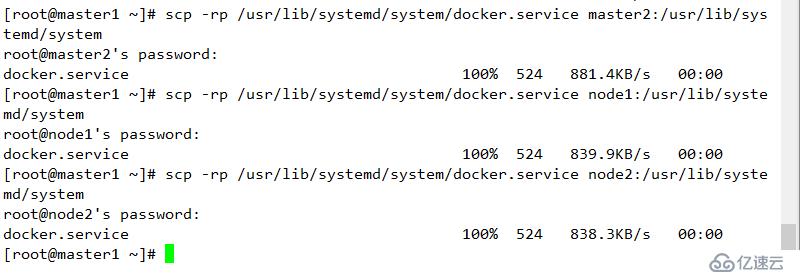

Copy the Docker unit file (docker.service):

systemctl daemon-reload

systemctl start flanneld

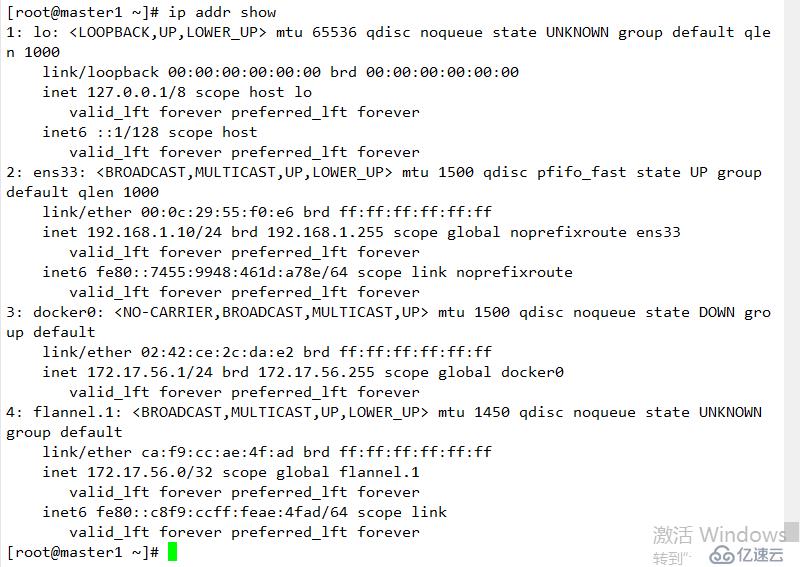

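systemctl enable flanneld

Then restart Docker so that docker0 picks up the flannel subnet (systemctl restart docker), and check the result: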

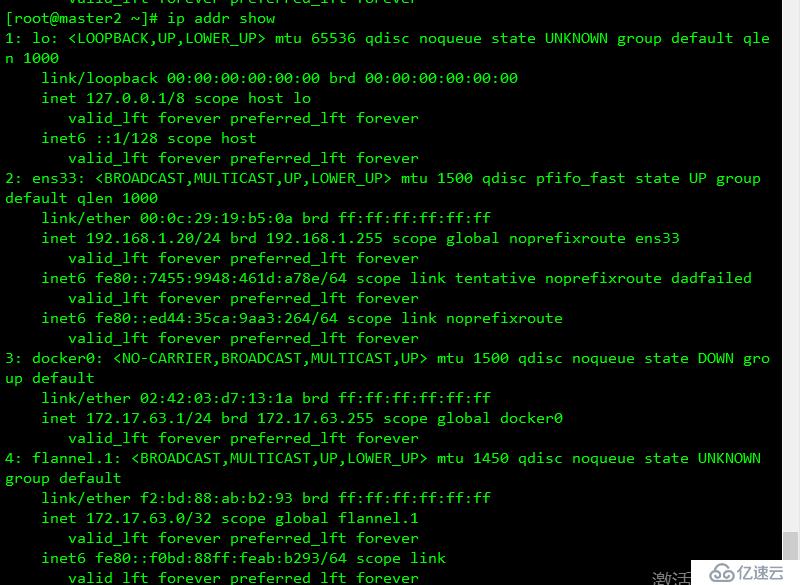

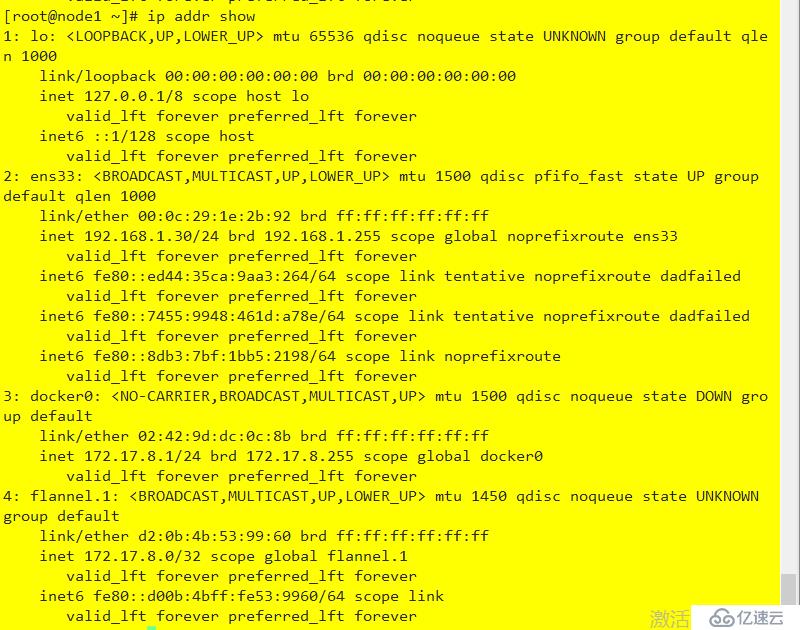

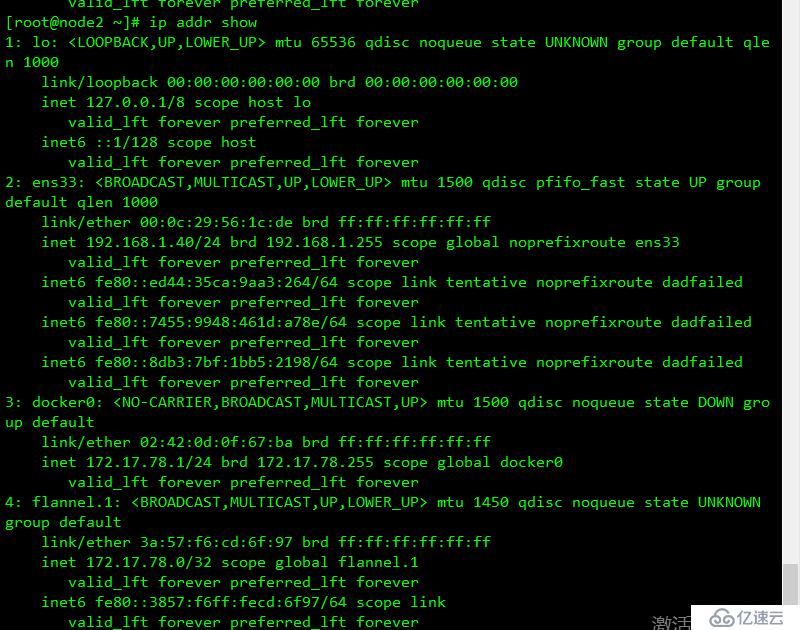

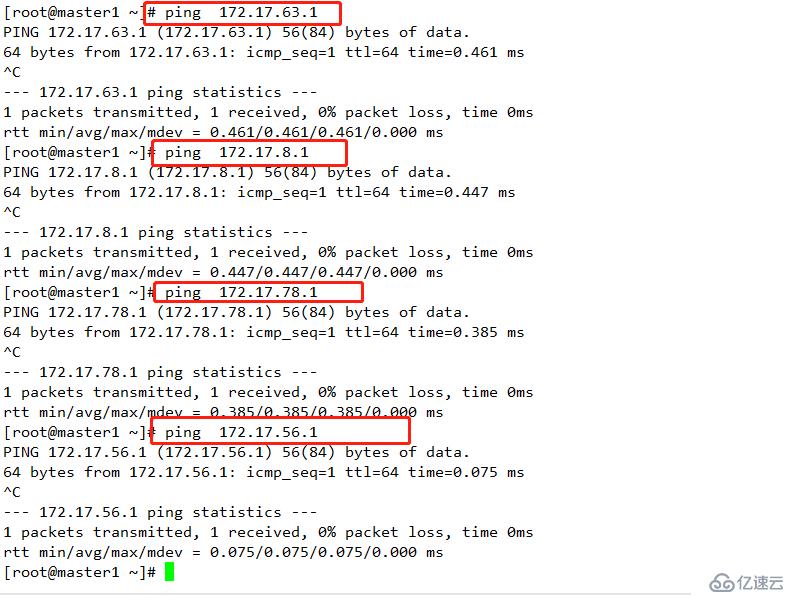

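Note: make sure docker0 and flannel.1 are in the same subnet, and that every node can reach the docker0 IP of every other node.

Result:

Check the flannel configuration stored in etcd: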

/opt/etcd/bin/etcdctl --ca-file=/opt/etcd/ssl/ca.pem --cert-file=/opt/etcd/ssl/server.pem --key-file=/opt/etcd/ssl/server-key.pem --endpoints="https://192.168.1.10:2379,https://192.168.1.20:2379,https://192.168.1.30:2379" get /coreos.com/network/config

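/opt/etcd/bin/etcdctl --ca-file=/opt/etcd/ssl/ca.pem --cert-file=/opt/etcd/ssl/server.pem --key-file=/opt/etcd/ssl/server-key.pem --endpoints="https://192.168.1.10:2379,https://192.168.1.20:2379,https://192.168.1.30:2379" get /coreos.com/network/subnets/172.17.56.0-24

(The subnet key, 172.17.56.0-24 here, differs on each host.)

kube-apiserver provides the single entry point for resource operations, along with authentication, authorization, access control, and API registration and discovery.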

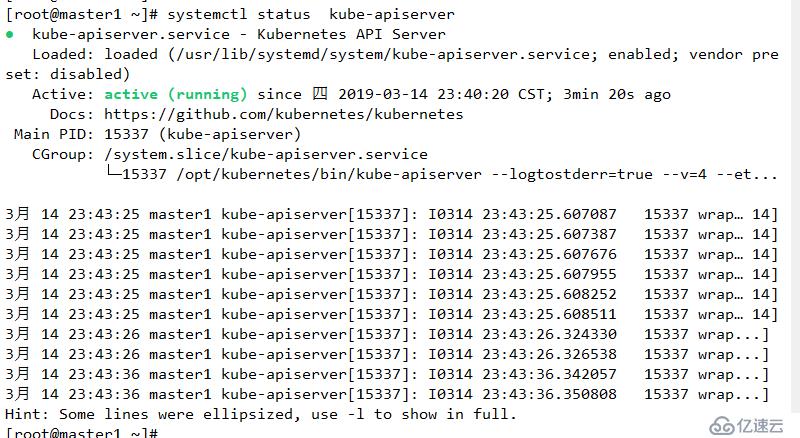

To view a service's logs, for example kube-apiserver's:

journalctl -exu kube-apiserver

Create the certificate directory and the certificate request files:

mkdir /opt/kubernetes/ssl

ca-config.json:

```json
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "kubernetes": {
        "expiry": "87600h",
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ]
      }
    }
  }
}
```

ca-csr.json:

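```json
{
  "CN": "kubernetes",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "Shaanxi",
      "ST": "xi'an",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
```

server-csr.json (hosts lists every address used to reach the apiserver: the first Service IP 10.0.0.1, localhost, both masters, the load balancer 192.168.1.100, and the in-cluster DNS names):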

```json
{
  "CN": "kubernetes",
  "hosts": [
    "10.0.0.1",
    "127.0.0.1",
    "192.168.1.10",
    "192.168.1.20",
    "192.168.1.100",
    "kubernetes",
    "kubernetes.default",
    "kubernetes.default.svc",
    "kubernetes.default.svc.cluster",
    "kubernetes.default.svc.cluster.local"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "Shaanxi",
      "ST": "xi'an",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
```

kube-proxy-csr.json:

```json
{
  "CN": "system:kube-proxy",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "Shaanxi",
      "ST": "xi'an",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
```

1 Generate the CA certificate:

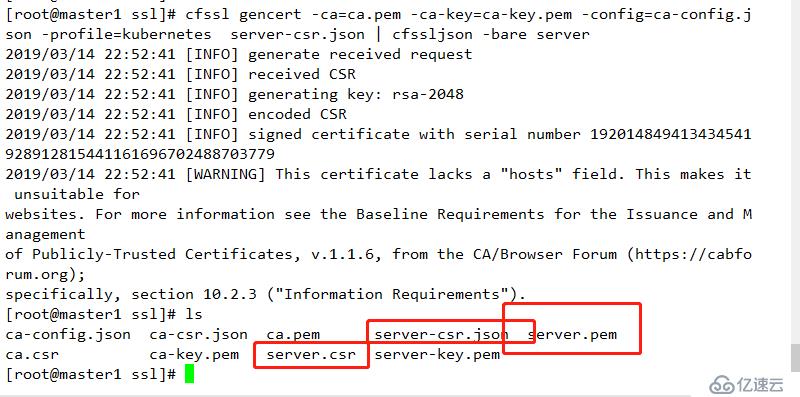

cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

Check the output:

2 Issue the apiserver server certificate:

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server

Check the result:

3

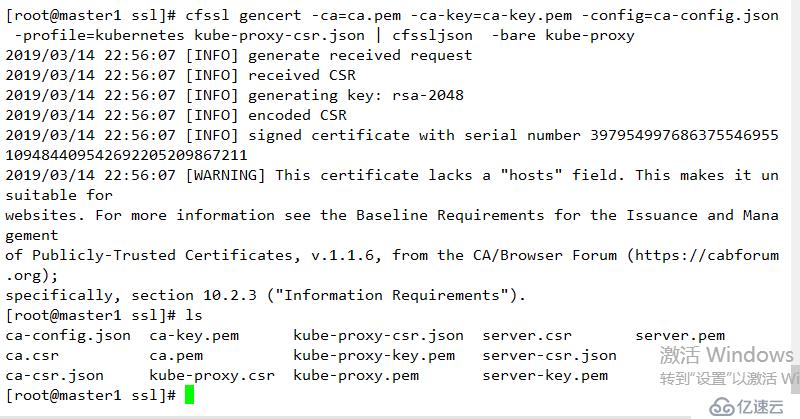

生成證書

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

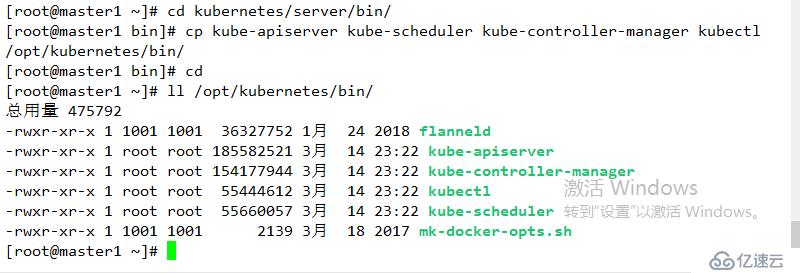

Download the Kubernetes v1.11.6 server binaries:

wget https://storage.googleapis.com/kubernetes-release/release/v1.11.6/kubernetes-server-linux-amd64.tar.gz

Extract the archive:

tar xf kubernetes-server-linux-amd64.tar.gz

Change into the binary directory:

cd kubernetes/server/bin/

Copy the binaries to /opt/kubernetes/bin/:

cp kube-apiserver kube-scheduler kube-controller-manager kubectl /opt/kubernetes/bin/

Result:

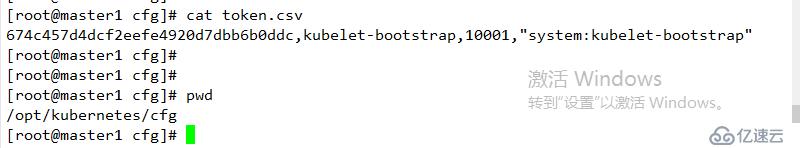

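Create a token file, /opt/kubernetes/cfg/token.csv; the token is used later for kubelet bootstrapping:

674c457d4dcf2eefe4920d7dbb6b0ddc,kubelet-bootstrap,10001,"system:kubelet-bootstrap"

The columns are:

Column 1: a random string you generate yourself

Column 2: user name

Column 3: UID

Column 4: user group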
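Column 1 only needs to be unpredictable. A minimal sketch that generates a 32-character hex token from 16 random bytes and prints the token.csv line; make_token and token_csv_line are hypothetical helper names, and the user, UID and group are the ones used above.

```shell
# Sketch: 16 random bytes rendered as 32 lowercase hex characters.
make_token() {
  head -c 16 /dev/urandom | od -An -tx1 | tr -d ' \n'
}

# Sketch: the token.csv line for the kubelet bootstrap user.
token_csv_line() {
  printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$1"
}

TOKEN="$(make_token)"
token_csv_line "$TOKEN"
```

Write the line with token_csv_line "$TOKEN" > /opt/kubernetes/cfg/token.csv, and reuse the same value as BOOTSTRAP_TOKEN in environment.sh later.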

Result:

Create the apiserver configuration file, /opt/kubernetes/cfg/kube-apiserver:

KUBE_APISERVER_OPTS="--logtostderr=true \

--v=4 \

--etcd-servers=https://192.168.1.10:2379,https://192.168.1.20:2379,https://192.168.1.30:2379 \

--bind-address=192.168.1.10 \

--secure-port=6443 \

--advertise-address=192.168.1.10 \

--allow-privileged=true \

--service-cluster-ip-range=10.0.0.0/24 \

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota,NodeRestriction \

--authorization-mode=RBAC,Node \

--enable-bootstrap-token-auth \

--token-auth-file=/opt/kubernetes/cfg/token.csv \

--service-node-port-range=30000-50000 \

--tls-cert-file=/opt/kubernetes/ssl/server.pem \

--tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \

--client-ca-file=/opt/kubernetes/ssl/ca.pem \

--service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \

--etcd-cafile=/opt/etcd/ssl/ca.pem \

--etcd-certfile=/opt/etcd/ssl/server.pem \

--etcd-keyfile=/opt/etcd/ssl/server-key.pem"

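Parameter reference:

--logtostderr log to standard error instead of files

--v log verbosity level

--etcd-servers etcd cluster endpoints

--bind-address address to listen on

--secure-port HTTPS secure port

--advertise-address address advertised to the rest of the cluster

--allow-privileged allow privileged containers

--service-cluster-ip-range virtual IP range for Services

--enable-admission-plugins admission control plugins

--authorization-mode authorization modes: enables RBAC authorization and Node authorization (nodes managing their own objects)

--token-auth-file static token file (the token.csv created above)

--service-node-port-range port range for NodePort Services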
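Several addresses in this guide must fall inside --service-cluster-ip-range (10.0.0.0/24): 10.0.0.1, the first Service IP, which is listed in server-csr.json; the CoreDNS clusterIP 10.0.0.10; and the ingress Service's 10.0.0.100. A POSIX shell sketch of an IPv4 CIDR membership check (the helper names are hypothetical):

```shell
# Sketch: convert dotted IPv4 to an integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1                     # split "a.b.c.d" into four fields
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Sketch: succeed if IP lies inside NET/BITS.
ip_in_cidr() {                  # usage: ip_in_cidr IP NET/BITS
  net=${2%/*}
  bits=${2#*/}
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$1") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
}

for ip in 10.0.0.1 10.0.0.10 10.0.0.100 172.17.56.1; do
  if ip_in_cidr "$ip" 10.0.0.0/24; then
    echo "$ip is inside 10.0.0.0/24"
  else
    echo "$ip is outside 10.0.0.0/24"
  fi
done
```

The last address is a flannel Pod IP, which correctly falls outside the Service range.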

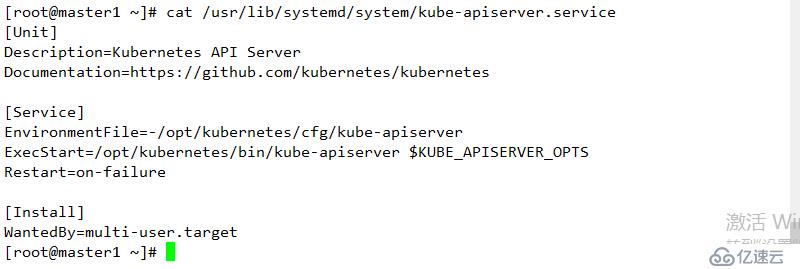

Create the systemd unit /usr/lib/systemd/system/kube-apiserver.service:

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/opt/kubernetes/cfg/kube-apiserver

ExecStart=/opt/kubernetes/bin/kube-apiserver $KUBE_APISERVER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

Result:

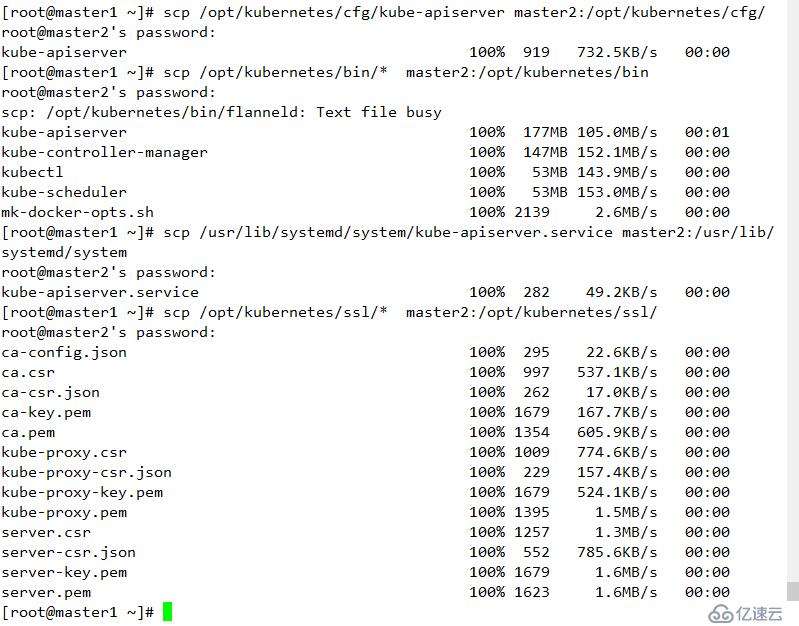

Copy the configuration, binaries, unit file and certificates to master2:

scp /opt/kubernetes/cfg/kube-apiserver master2:/opt/kubernetes/cfg/

scp /opt/kubernetes/bin/* master2:/opt/kubernetes/bin

scp /usr/lib/systemd/system/kube-apiserver.service master2:/usr/lib/systemd/system

scp /opt/kubernetes/ssl/* master2:/opt/kubernetes/ssl/

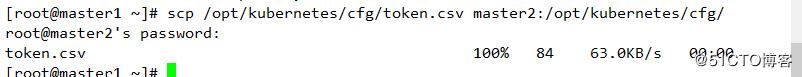

scp /opt/kubernetes/cfg/token.csv master2:/opt/kubernetes/cfg/

Result:

On master2, change --bind-address and --advertise-address in /opt/kubernetes/cfg/kube-apiserver to 192.168.1.20:

KUBE_APISERVER_OPTS="--logtostderr=true \

--v=4 \

--etcd-servers=https://192.168.1.10:2379,https://192.168.1.20:2379,https://192.168.1.30:2379 \

--bind-address=192.168.1.20 \

--secure-port=6443 \

--advertise-address=192.168.1.20 \

--allow-privileged=true \

--service-cluster-ip-range=10.0.0.0/24 \

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota,NodeRestriction \

--authorization-mode=RBAC,Node \

--enable-bootstrap-token-auth \

--token-auth-file=/opt/kubernetes/cfg/token.csv \

--service-node-port-range=30000-50000 \

--tls-cert-file=/opt/kubernetes/ssl/server.pem \

--tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \

--client-ca-file=/opt/kubernetes/ssl/ca.pem \

--service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \

--etcd-cafile=/opt/etcd/ssl/ca.pem \

--etcd-certfile=/opt/etcd/ssl/server.pem \

--etcd-keyfile=/opt/etcd/ssl/server-key.pem"

Start kube-apiserver and enable it at boot:

systemctl daemon-reload

systemctl start kube-apiserver

systemctl enable kube-apiserver

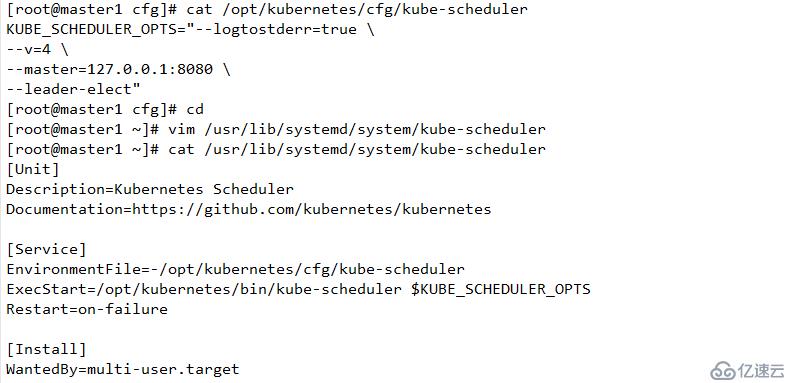

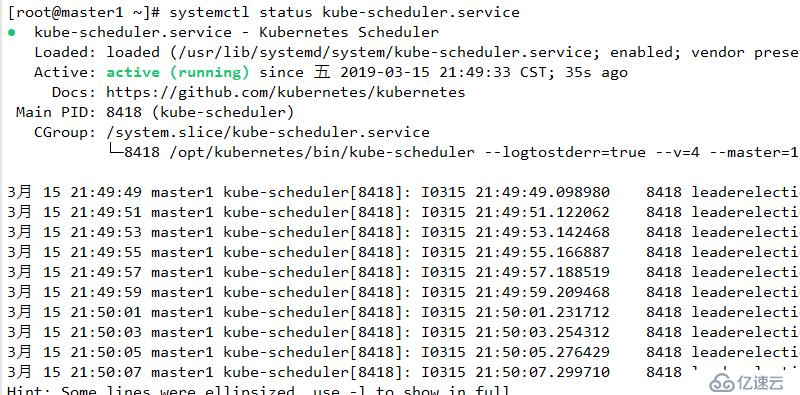

kube-scheduler schedules resources, placing Pods onto suitable nodes according to the configured scheduling policy.

/opt/kubernetes/cfg/kube-scheduler

KUBE_SCHEDULER_OPTS="--logtostderr=true \

--v=4 \

--master=127.0.0.1:8080 \

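--leader-elect"

Parameter notes:

--master connect to the local apiserver (the insecure port, 127.0.0.1:8080)

--leader-elect when several instances of this component run, elect a leader automatically

/usr/lib/systemd/system/kube-scheduler.service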

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/opt/kubernetes/cfg/kube-scheduler

ExecStart=/opt/kubernetes/bin/kube-scheduler $KUBE_SCHEDULER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

Result:

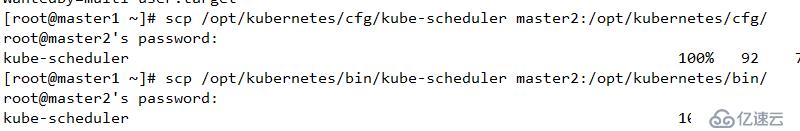

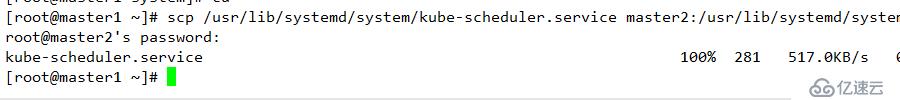

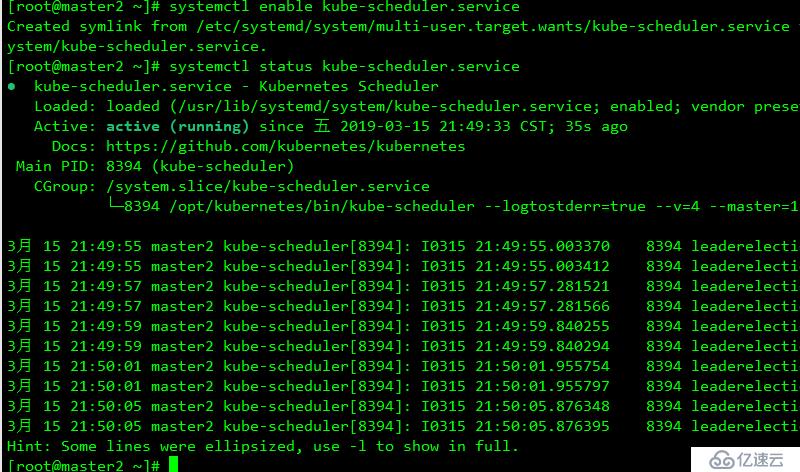

Copy the configuration, binary and unit file to master2, then start the scheduler there:

scp /opt/kubernetes/cfg/kube-scheduler master2:/opt/kubernetes/cfg/

scp /opt/kubernetes/bin/kube-scheduler master2:/opt/kubernetes/bin/

scp /usr/lib/systemd/system/kube-scheduler.service master2:/usr/lib/systemd/system

systemctl daemon-reload

systemctl start kube-scheduler.service

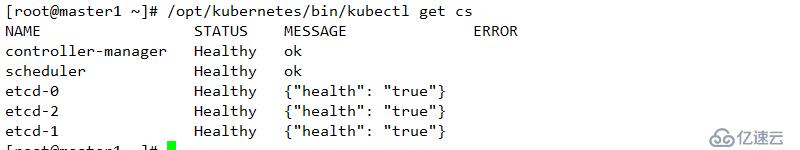

systemctl enable kube-scheduler.service

Check the result:

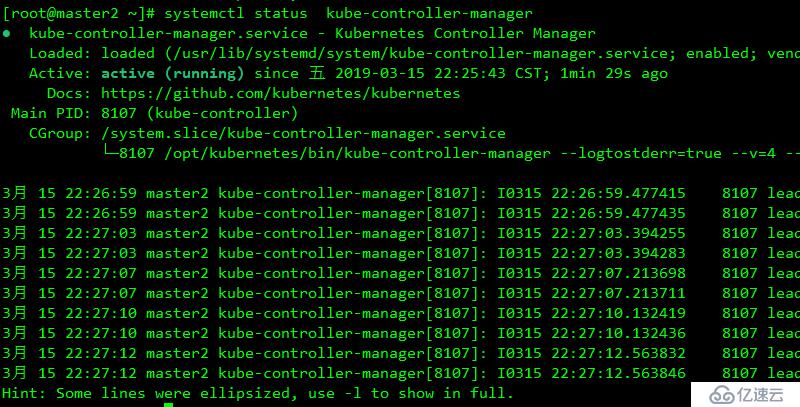

kube-controller-manager maintains the cluster state, handling failure detection, auto-scaling, rolling updates and so on.

/opt/kubernetes/cfg/kube-controller-manager

KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=true \

--v=4 \

--master=127.0.0.1:8080 \

--leader-elect=true \

--address=127.0.0.1 \

--service-cluster-ip-range=10.0.0.0/24 \

--cluster-name=kubernetes \

--cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem \

--cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem \

--root-ca-file=/opt/kubernetes/ssl/ca.pem \

--service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem"

/usr/lib/systemd/system/kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/opt/kubernetes/cfg/kube-controller-manager

ExecStart=/opt/kubernetes/bin/kube-controller-manager $KUBE_CONTROLLER_MANAGER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

Copy the binary, configuration and unit file to master2:

scp /opt/kubernetes/bin/kube-controller-manager master2:/opt/kubernetes/bin/

scp /opt/kubernetes/cfg/kube-controller-manager master2:/opt/kubernetes/cfg/

scp /usr/lib/systemd/system/kube-controller-manager.service master2:/usr/lib/systemd/system

systemctl daemon-reload

systemctl enable kube-controller-manager

systemctl restart kube-controller-manager

Check the result:

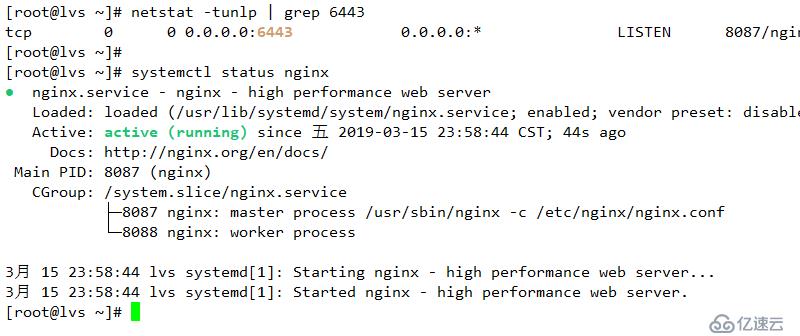

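An nginx instance on 192.168.1.100 load-balances the apiservers on master1 and master2 (TCP proxying of port 6443 through the stream module).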

/etc/yum.repos.d/nginx.repo

[nginx]

name=nginx repo

baseurl=http://nginx.org/packages/centos/7/x86_64/

gpgcheck=0

enabled=1

yum -y install nginx

/etc/nginx/nginx.conf

user nginx;

worker_processes 1;

error_log /var/log/nginx/error.log warn;

pid /var/run/nginx.pid;

events {

worker_connections 1024;

}

http {

include /etc/nginx/mime.types;

default_type application/octet-stream;

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

access_log /var/log/nginx/access.log main;

sendfile on;

#tcp_nopush on;

keepalive_timeout 65;

#gzip on;

include /etc/nginx/conf.d/*.conf;

}

stream {

upstream api-server {

server 192.168.1.10:6443;

server 192.168.1.20:6443;

}

server {

listen 6443;

proxy_pass api-server;

}

}

systemctl start nginx

systemctl enable nginx

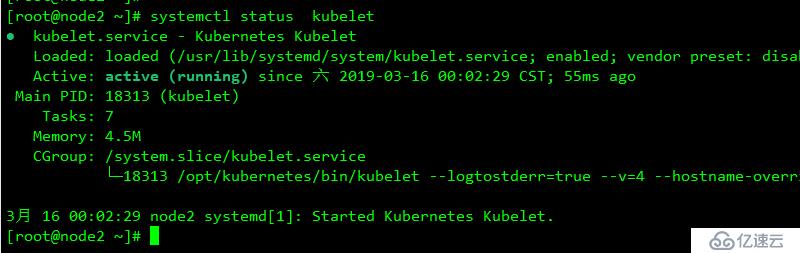

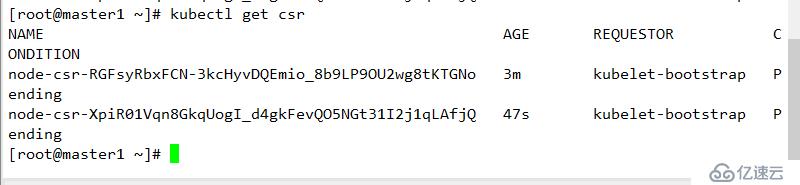

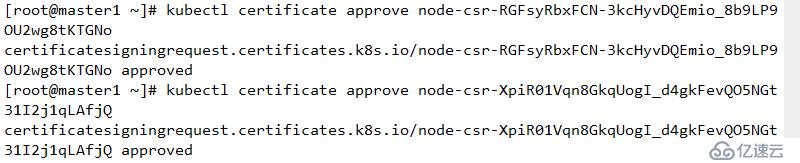

負責維護容器的生命周期,同時也負責掛載和網絡的管理

cd /opt/kubernetes/bin/

./kubectl create clusterrolebinding kubelet-bootstrap \

--clusterrole=system:node-bootstrapper \

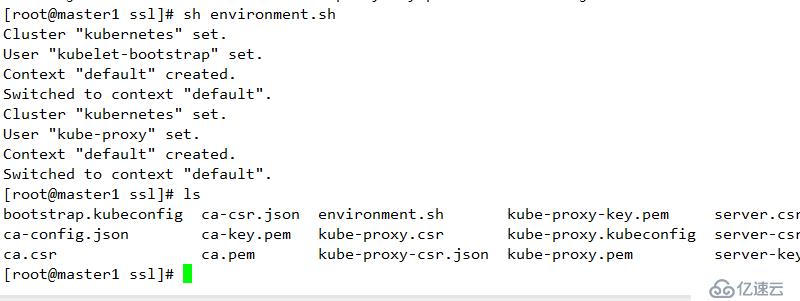

--user=kubelet-bootstrap在生成證書的目錄下執行

cd /opt/kubernetes/ssl/

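The script, environment.sh:

# Create the kubelet bootstrapping kubeconfig

# The token below is the random string generated earlier (token.csv)

BOOTSTRAP_TOKEN=674c457d4dcf2eefe4920d7dbb6b0ddc

# IP address and port of the nginx load balancer in front of the apiservers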

KUBE_APISERVER="https://192.168.1.100:6443"

# Set the cluster parameters

kubectl config set-cluster kubernetes \

--certificate-authority=./ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=bootstrap.kubeconfig

# Set the client authentication parameters

kubectl config set-credentials kubelet-bootstrap \

--token=${BOOTSTRAP_TOKEN} \

--kubeconfig=bootstrap.kubeconfig

# Set the context parameters

kubectl config set-context default \

--cluster=kubernetes \

--user=kubelet-bootstrap \

--kubeconfig=bootstrap.kubeconfig

# Use this context by default

kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

#----------------------

# Create the kube-proxy kubeconfig file

kubectl config set-cluster kubernetes \

--certificate-authority=./ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials kube-proxy \

--client-certificate=./kube-proxy.pem \

--client-key=./kube-proxy-key.pem \

--embed-certs=true \

--kubeconfig=kube-proxy.kubeconfig

kubectl config set-context default \

--cluster=kubernetes \

--user=kube-proxy \

--kubeconfig=kube-proxy.kubeconfig

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

Add the Kubernetes binaries to PATH in /etc/profile:

export PATH=/opt/kubernetes/bin:$PATH

source /etc/profile

sh environment.sh

scp -rp bootstrap.kubeconfig kube-proxy.kubeconfig node1:/opt/kubernetes/cfg/

scp -rp bootstrap.kubeconfig kube-proxy.kubeconfig node2:/opt/kubernetes/cfg/

Copy the kubelet and kube-proxy binaries to the nodes:

cd /root/kubernetes/server/bin

scp kubelet kube-proxy node1:/opt/kubernetes/bin/

scp kubelet kube-proxy node2:/opt/kubernetes/bin/

On node1, create /opt/kubernetes/cfg/kubelet:

KUBELET_OPTS="--logtostderr=true \

--v=4 \

--hostname-override=192.168.1.30 \

--kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \

--bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig \

--config=/opt/kubernetes/cfg/kubelet.config \

--cert-dir=/opt/kubernetes/ssl \

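--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0"

Parameter notes:

--hostname-override name the node is shown as in the cluster

--kubeconfig location of the kubeconfig file; it is generated automatically once the node's certificate is issued

--bootstrap-kubeconfig the bootstrap kubeconfig created by environment.sh

--config the KubeletConfiguration file below

--cert-dir where the issued certificates are stored

--pod-infra-container-image image for the Pod infrastructure (pause) container, which holds each Pod's network namespace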

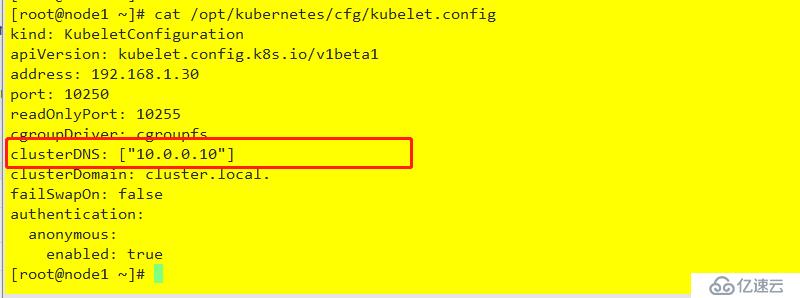

/opt/kubernetes/cfg/kubelet.config

```yaml
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: 192.168.1.30
port: 10250
readOnlyPort: 10255
cgroupDriver: cgroupfs
clusterDNS: ["10.0.0.10"]
clusterDomain: cluster.local.
failSwapOn: false
authentication:
  anonymous:
    enabled: true
```

/usr/lib/systemd/system/kubelet.service

[Unit]

Description=Kubernetes Kubelet

After=docker.service

Requires=docker.service

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kubelet

ExecStart=/opt/kubernetes/bin/kubelet $KUBELET_OPTS

Restart=on-failure

KillMode=process

[Install]

WantedBy=multi-user.target

Copy the configuration and unit file to node2:

scp /opt/kubernetes/cfg/kubelet node2:/opt/kubernetes/cfg/

scp /opt/kubernetes/cfg/kubelet.config node2:/opt/kubernetes/cfg/

scp /usr/lib/systemd/system/kubelet.service node2:/usr/lib/systemd/system/

On node2, change the node IP to 192.168.1.40 in /opt/kubernetes/cfg/kubelet:

KUBELET_OPTS="--logtostderr=true \

--v=4 \

--hostname-override=192.168.1.40 \

--kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \

--bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig \

--config=/opt/kubernetes/cfg/kubelet.config \

--cert-dir=/opt/kubernetes/ssl \

--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0"

And in /opt/kubernetes/cfg/kubelet.config:

kind: KubeletConfiguration

apiVersion: kubelet.config.k8s.io/v1beta1

address: 192.168.1.40

port: 10250

readOnlyPort: 10255

cgroupDriver: cgroupfs

clusterDNS: ["10.0.0.10"]

clusterDomain: cluster.local.

failSwapOn: false

authentication:

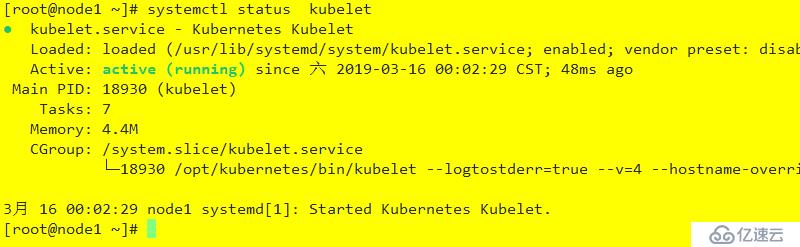

anonymous:

enabled: truesystemctl daemon-reload

systemctl enable kubelet

systemctl restart kubelet

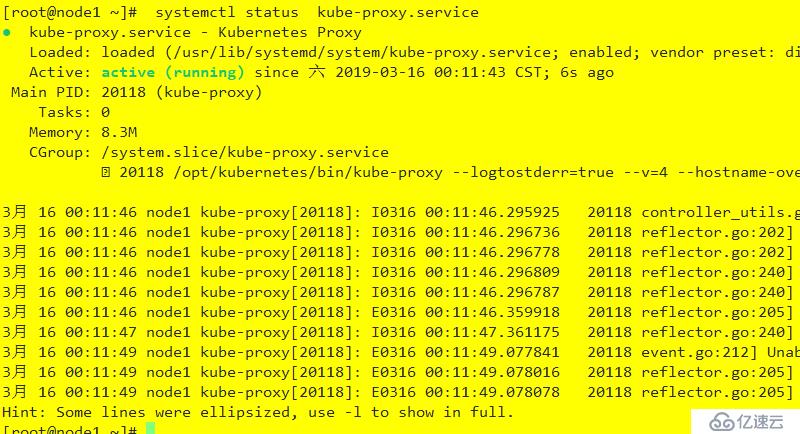

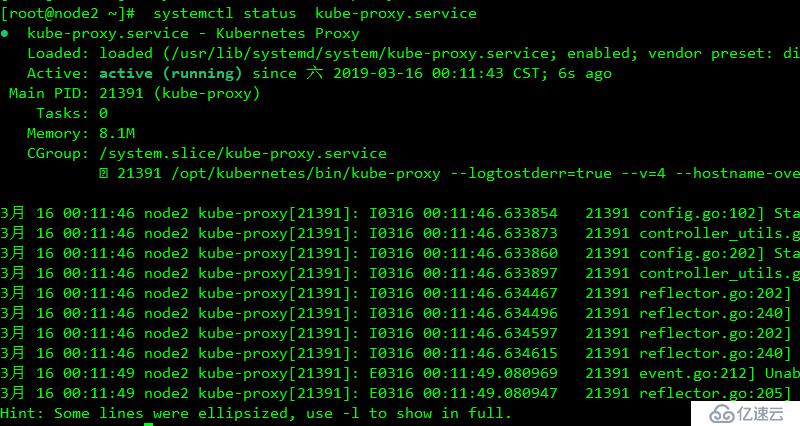
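Because the kubelet starts with --bootstrap-kubeconfig, each node first submits a certificate signing request (CSR) that has to be approved on a master before the node registers and kubelet.kubeconfig is written. A sketch, assuming the kubectl 1.11 `kubectl get csr` layout (NAME AGE REQUESTOR CONDITION, with CONDITION last); pending_csrs is a hypothetical helper.

```shell
# Sketch: print names of pending CSRs from `kubectl get csr` output on stdin.
# Skips the header row; CONDITION is assumed to be the last column.
pending_csrs() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Usage on a master:
#   kubectl get csr | pending_csrs | xargs -r kubectl certificate approve
```

xargs -r is a GNU option that skips the call when nothing is pending. After approval, kubectl get nodes should list 192.168.1.30 and 192.168.1.40 (the --hostname-override values).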

kube-proxy provides in-cluster service discovery and load balancing for Services by maintaining iptables or IPVS rules.

/opt/kubernetes/cfg/kube-proxy

KUBE_PROXY_OPTS="--logtostderr=true \

--v=4 \

--hostname-override=192.168.1.30 \

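--cluster-cidr=172.17.0.0/16 \

--kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig"

--cluster-cidr is the Pod network (the flannel range 172.17.0.0/16 written to etcd), not the Service range; kube-proxy uses it to decide which traffic to Service IPs comes from outside the cluster and must be masqueraded.

/usr/lib/systemd/system/kube-proxy.service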

[Unit]

Description=Kubernetes Proxy

After=network.target

[Service]

EnvironmentFile=-/opt/kubernetes/cfg/kube-proxy

ExecStart=/opt/kubernetes/bin/kube-proxy $KUBE_PROXY_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

Copy the configuration and unit file to node2:

scp /opt/kubernetes/cfg/kube-proxy node2:/opt/kubernetes/cfg/

scp /usr/lib/systemd/system/kube-proxy.service node2:/usr/lib/systemd/system/

On node2, edit /opt/kubernetes/cfg/kube-proxy:

KUBE_PROXY_OPTS="--logtostderr=true \

--v=4 \

--hostname-override=192.168.1.40 \

--cluster-cidr=172.17.0.0/16 \

--kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig"

On both nodes, reload systemd and start kube-proxy:

systemctl daemon-reload

systemctl enable kube-proxy.service

systemctl start kube-proxy.service

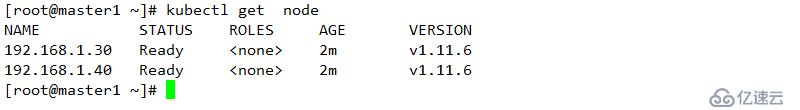

Test the cluster by running nginx with three replicas:

kubectl run nginx --image=nginx:1.14 --replicas=3

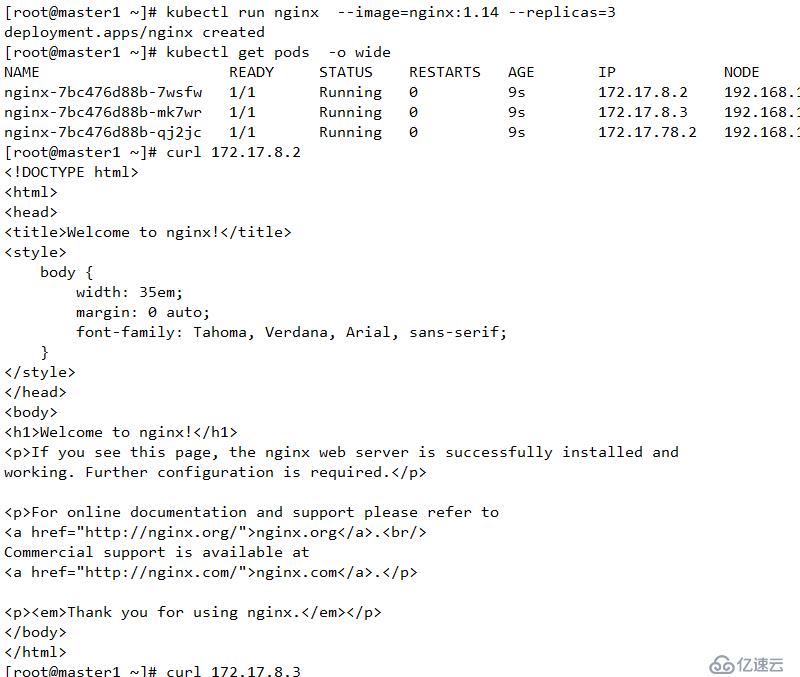

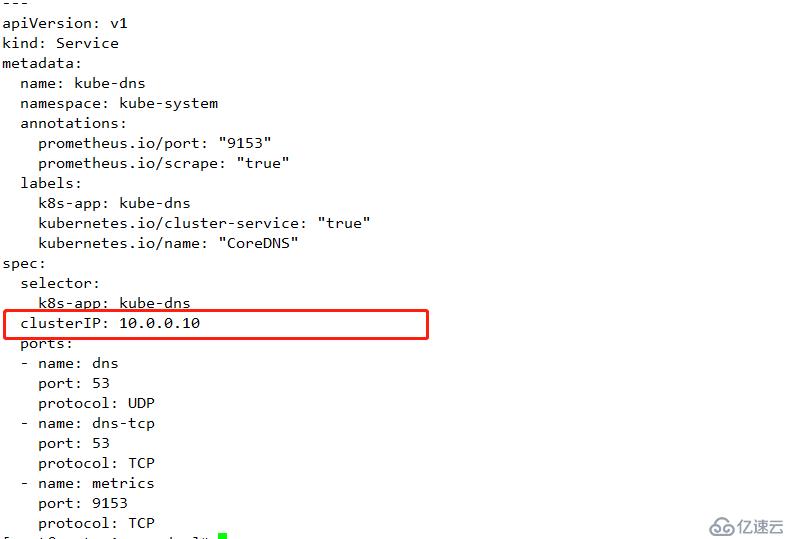

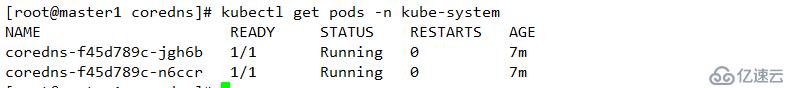

CoreDNS provides internal DNS resolution for the whole cluster.

mkdir coredns

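wget https://raw.githubusercontent.com/coredns/deployment/master/kubernetes/coredns.yaml.sed

Edit the file: in the Corefile's kubernetes block, set the address range to the Service IP range, and set the kube-dns Service's clusterIP to the same value as clusterDNS in /opt/kubernetes/cfg/kubelet.config. Then apply it and check the Pods:

kubectl apply -f coredns.yaml.sed

kubectl get pods -n kube-system

The edited coredns.yaml.sed: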

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: coredns
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRole
metadata:
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:coredns
rules:
- apiGroups:
  - ""
  resources:
  - endpoints
  - services
  - pods
  - namespaces
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - get
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:coredns
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:coredns
subjects:
- kind: ServiceAccount
  name: coredns
  namespace: kube-system
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns
  namespace: kube-system
data:
  Corefile: |
    .:53 {
        errors
        health
        kubernetes cluster.local 10.0.0.0/24 {   # Service IP address range
          pods insecure
          upstream
          fallthrough in-addr.arpa ip6.arpa
        }
        prometheus :9153
        proxy . /etc/resolv.conf
        cache 30
        loop
        reload
        loadbalance
    }
---
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/name: "CoreDNS"
spec:
  replicas: 2
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
  selector:
    matchLabels:
      k8s-app: kube-dns
  template:
    metadata:
      labels:
        k8s-app: kube-dns
    spec:
      serviceAccountName: coredns
      tolerations:
        - key: "CriticalAddonsOnly"
          operator: "Exists"
      nodeSelector:
        beta.kubernetes.io/os: linux
      containers:
      - name: coredns
        image: coredns/coredns:1.3.0
        imagePullPolicy: IfNotPresent
        resources:
          limits:
            memory: 170Mi
          requests:
            cpu: 100m
            memory: 70Mi
        args: [ "-conf", "/etc/coredns/Corefile" ]
        volumeMounts:
        - name: config-volume
          mountPath: /etc/coredns
          readOnly: true
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        - containerPort: 9153
          name: metrics
          protocol: TCP
        securityContext:
          allowPrivilegeEscalation: false
          capabilities:
            add:
            - NET_BIND_SERVICE
            drop:
            - all
          readOnlyRootFilesystem: true
        livenessProbe:
          httpGet:
            path: /health
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
      dnsPolicy: Default
      volumes:
        - name: config-volume
          configMap:
            name: coredns
            items:
            - key: Corefile
              path: Corefile
---
apiVersion: v1
kind: Service
metadata:
  name: kube-dns
  namespace: kube-system
  annotations:
    prometheus.io/port: "9153"
    prometheus.io/scrape: "true"
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    kubernetes.io/name: "CoreDNS"
spec:
  selector:
    k8s-app: kube-dns
  clusterIP: 10.0.0.10  # DNS address; must lie inside the Service network
  ports:
  - name: dns
    port: 53
    protocol: UDP
  - name: dns-tcp
    port: 53
    protocol: TCP
  - name: metrics
    port: 9153
    protocol: TCP
```
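The CoreDNS Service clusterIP and the kubelet's clusterDNS must be the same address, otherwise Pods are pointed at a resolver that does not exist. A sketch of a consistency check with sed; the helper names are hypothetical and the parsing assumes the one-line formats used in this guide (clusterDNS: ["10.0.0.10"] and clusterIP: 10.0.0.10).

```shell
# Sketch: extract clusterDNS from kubelet.config (line: clusterDNS: ["IP"]).
cluster_dns_from_kubelet() {
  sed -n 's/^clusterDNS: *\["\([0-9.]*\)"].*/\1/p' "$1"
}

# Sketch: extract clusterIP from a manifest (line: clusterIP: IP).
cluster_ip_from_manifest() {
  sed -n 's/^ *clusterIP: *\([0-9.]*\).*/\1/p' "$1"
}

check_dns_ip() {                # usage: check_dns_ip KUBELET_CONFIG MANIFEST
  kubelet_ip="$(cluster_dns_from_kubelet "$1")"
  coredns_ip="$(cluster_ip_from_manifest "$2")"
  if [ -n "$kubelet_ip" ] && [ "$kubelet_ip" = "$coredns_ip" ]; then
    echo "ok: $kubelet_ip"
  else
    echo "mismatch: kubelet=$kubelet_ip coredns=$coredns_ip"
    return 1
  fi
}

# Usage (with copies of both files on one host):
#   check_dns_ip /opt/kubernetes/cfg/kubelet.config coredns.yaml.sed
```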

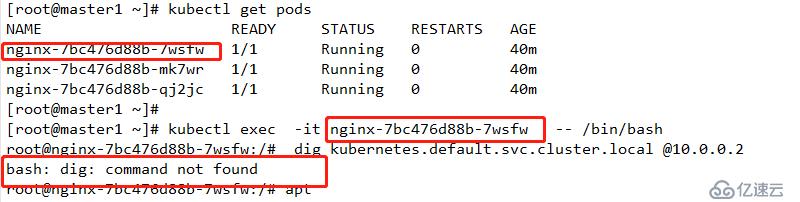

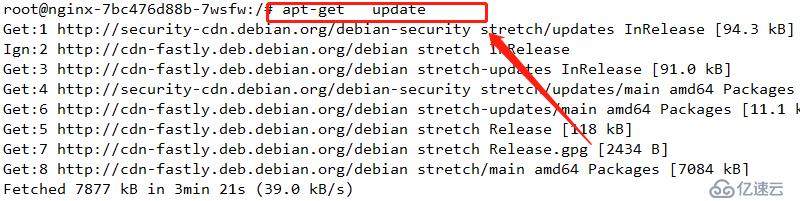

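To test DNS, open a shell in one of the nginx test Pods (the nginx image is Debian-based), install dig, and query the cluster DNS server:

apt-get update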

apt-get install dnsutils

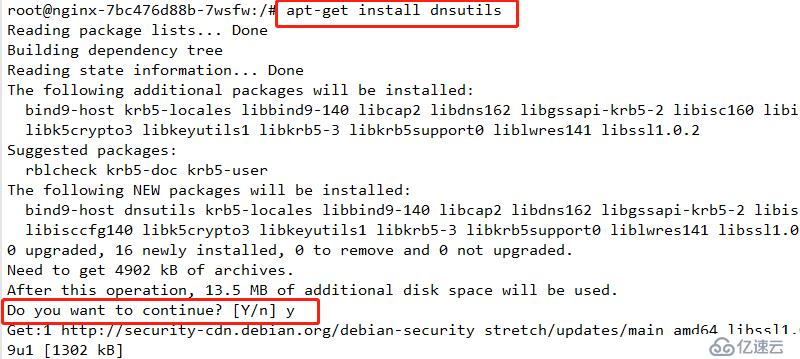

dig kubernetes.default.svc.cluster.local @10.0.0.10

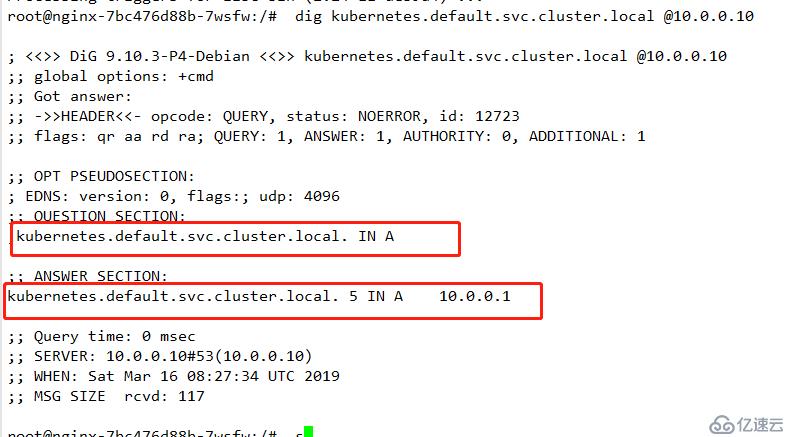

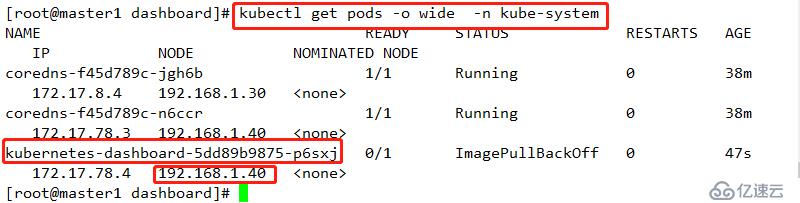

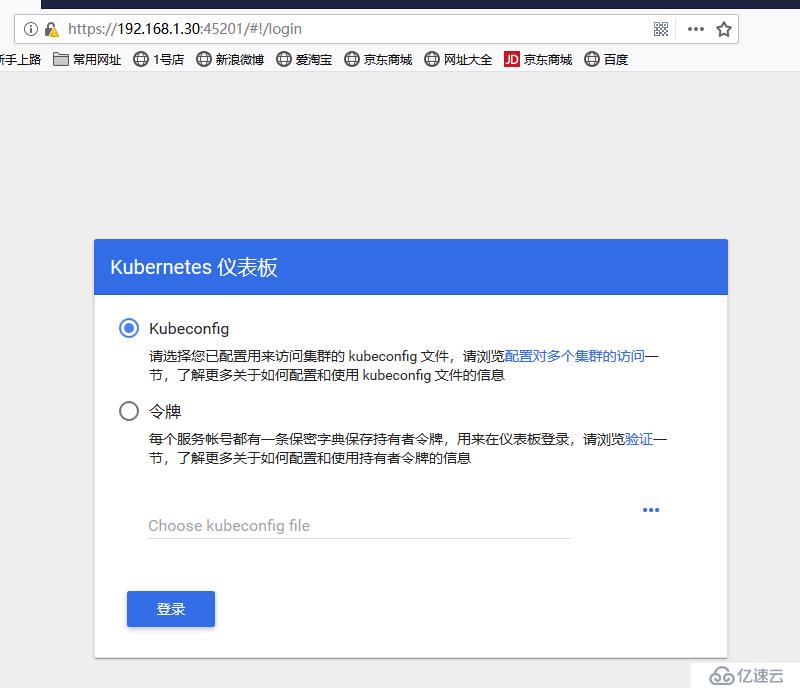

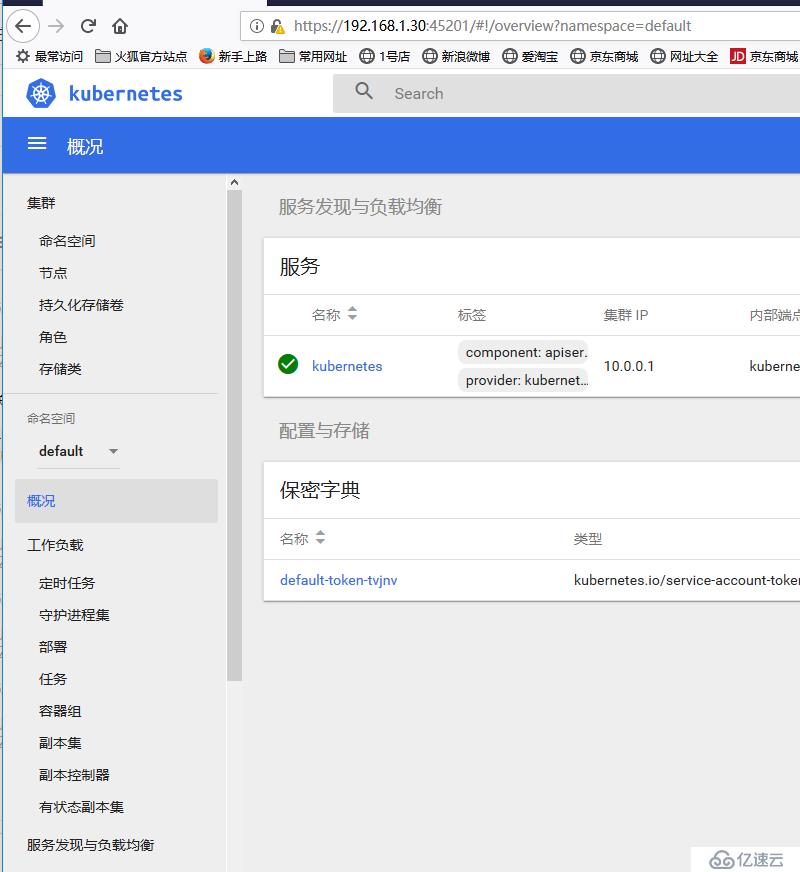

The dashboard provides a graphical interface for the cluster.

mkdir dashboard

cd dashboard/

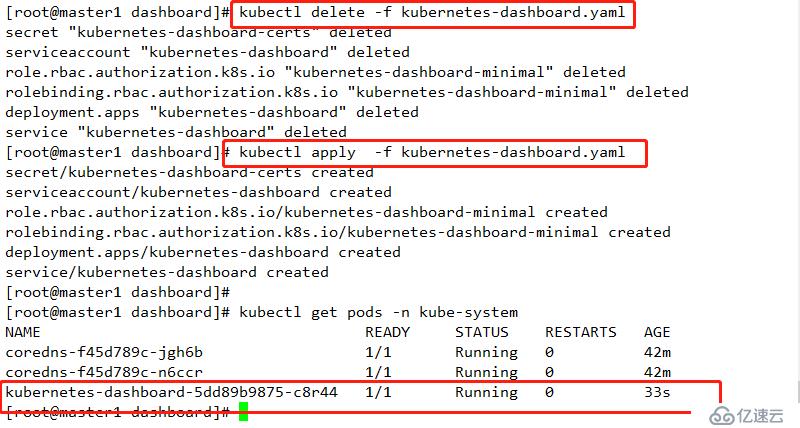

wget https://raw.githubusercontent.com/kubernetes/dashboard/v1.10.1/src/deploy/recommended/kubernetes-dashboard.yaml

kubectl apply -f kubernetes-dashboard.yaml

kubectl get pods -o wide -n kube-system

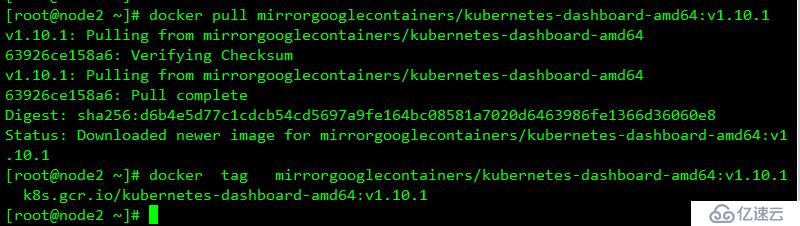

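If the Pod cannot pull its image from k8s.gcr.io, pull it from a mirror on the node the Pod is scheduled to, retag it, and redeploy:

docker pull mirrorgooglecontainers/kubernetes-dashboard-amd64:v1.10.1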

docker tag mirrorgooglecontainers/kubernetes-dashboard-amd64:v1.10.1 k8s.gcr.io/kubernetes-dashboard-amd64:v1.10.1

kubectl delete -f kubernetes-dashboard.yaml

kubectl apply -f kubernetes-dashboard.yaml

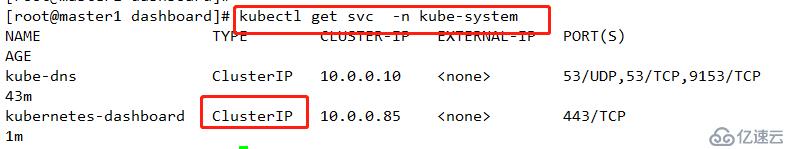

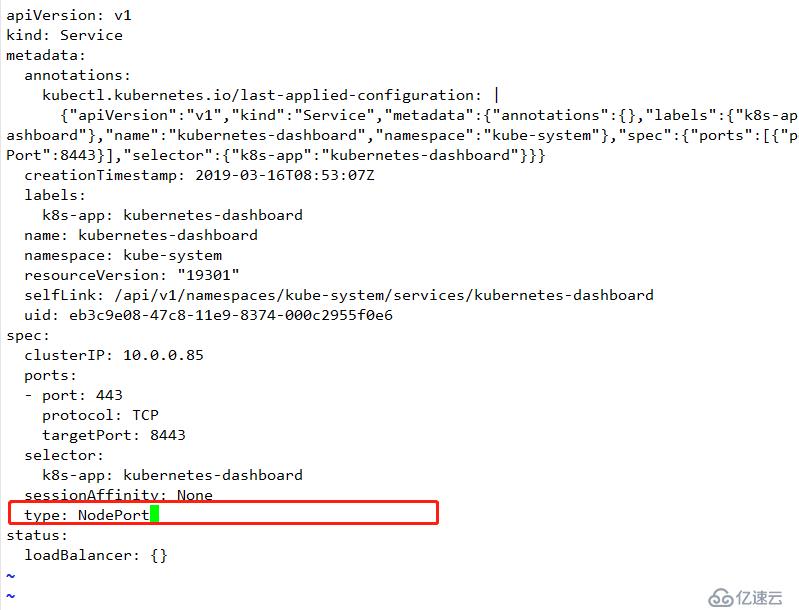

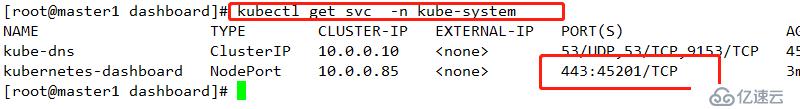

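Edit the Service and set its type to NodePort so the dashboard is reachable from outside the cluster:

kubectl edit svc -n kube-system kubernetes-dashboard

Check the result: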

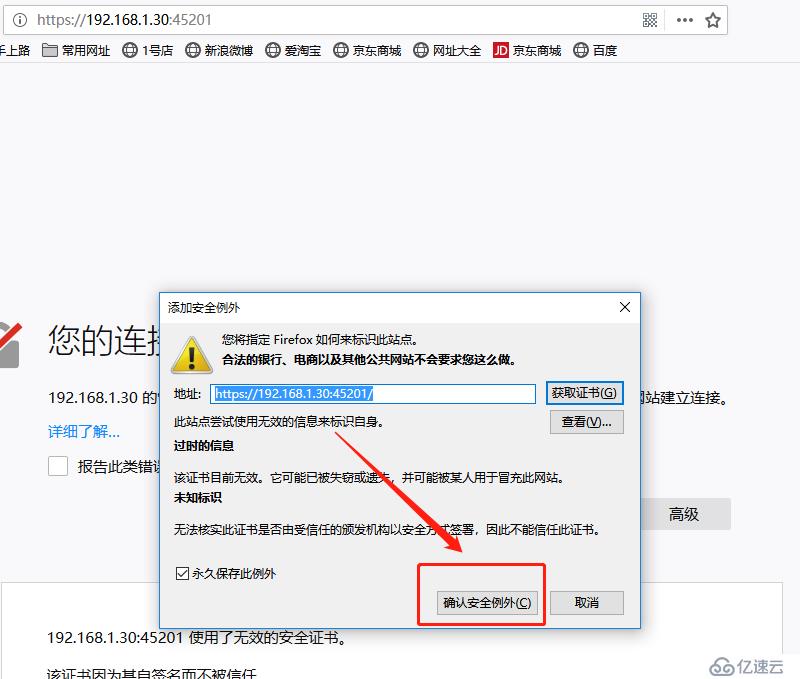

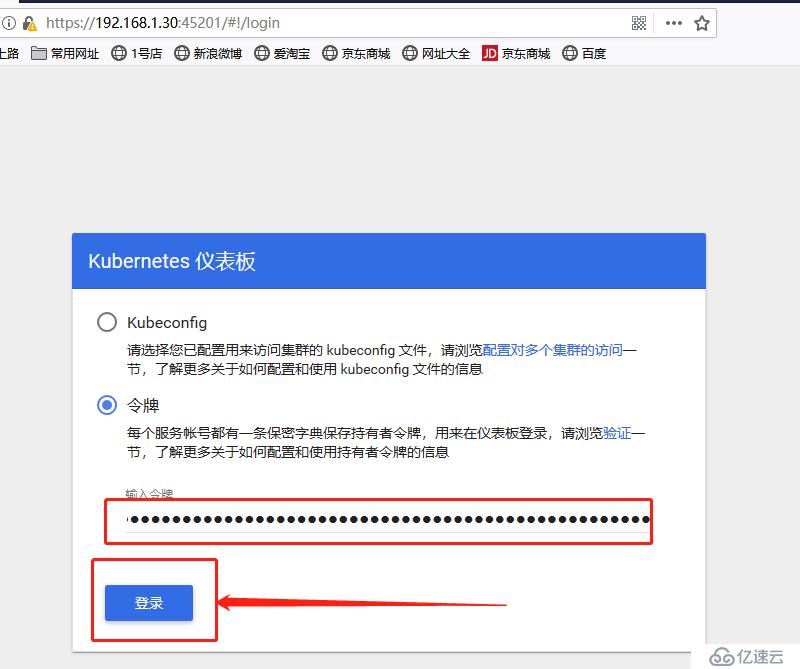

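Open https://192.168.1.30:45201/ in a browser. It must be https, and the port is the NodePort shown above; add a security exception for the self-signed certificate.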

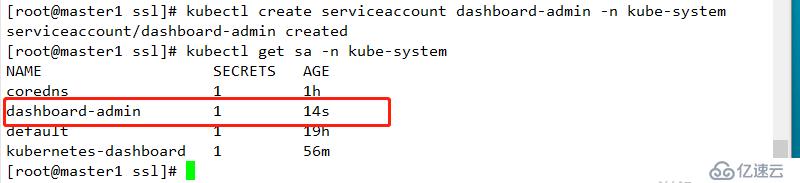

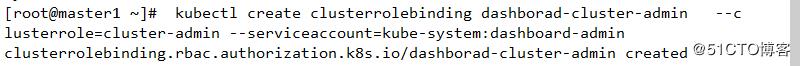

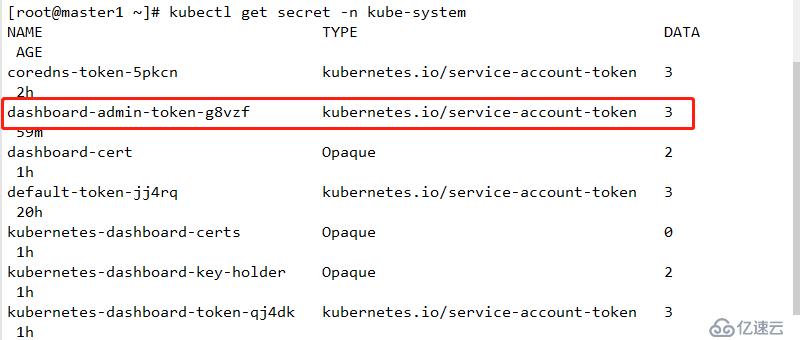

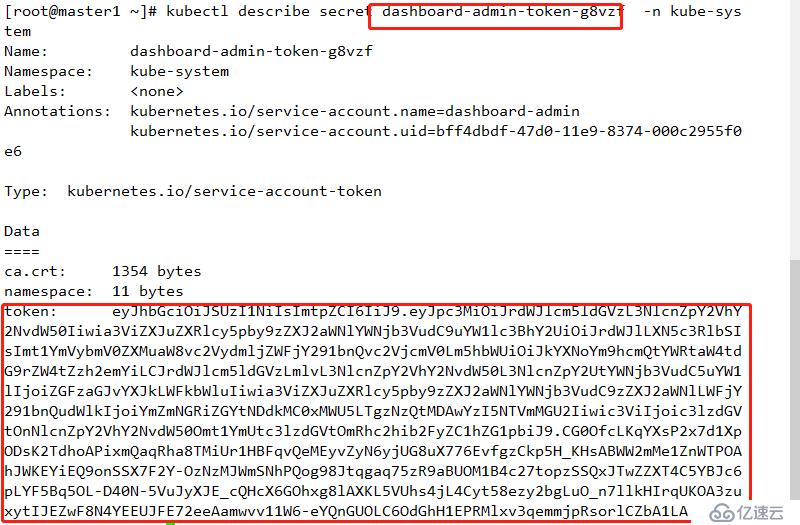

Create a ServiceAccount with cluster-admin rights whose token is used to log in to the dashboard:

kubectl create serviceaccount dashboard-admin -n kube-system

kubectl create clusterrolebinding dashboard-cluster-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin
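In Kubernetes 1.11 a ServiceAccount automatically gets a token Secret named after it (dashboard-admin-token-<suffix>), and that token is what the dashboard login page asks for. A sketch of a hypothetical helper that picks the dashboard-admin Secret out of kubectl get secret output:

```shell
# Sketch: print the dashboard-admin token Secret name from
# `kubectl -n kube-system get secret` output read on stdin.
dashboard_secret() {
  awk '$1 ~ /^dashboard-admin-token-/ { print $1 }'
}

# Usage on a master; the "token:" field of the output is the login token:
#   kubectl -n kube-system describe secret \
#     "$(kubectl -n kube-system get secret | dashboard_secret)"
```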

mkdir ingress

cd ingress

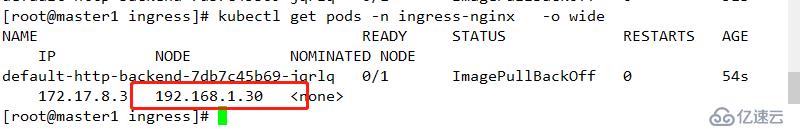

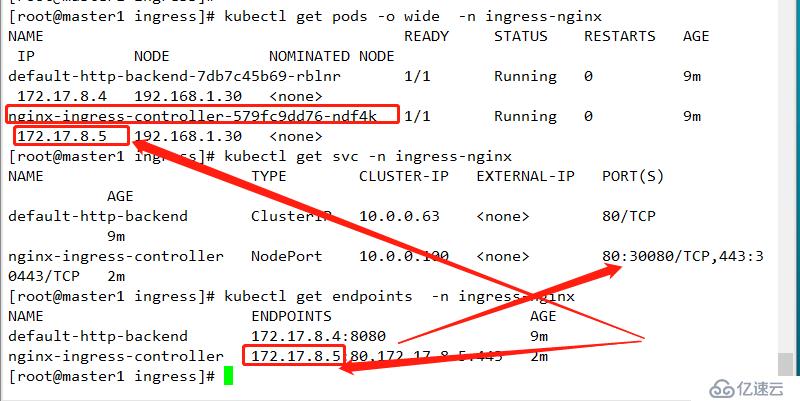

Deploy the ingress-nginx controller (v0.20.0):

wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/nginx-0.20.0/deploy/mandatory.yaml

kubectl apply -f mandatory.yaml

Check which node it runs on:

kubectl get pods -n ingress-nginx -o wide

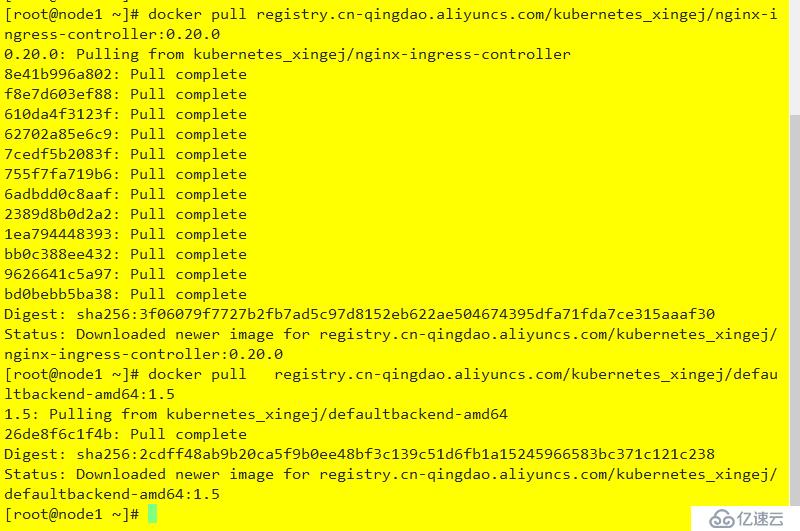

On that node, pull the images from a mirror and retag them with the names mandatory.yaml expects:

docker pull registry.cn-qingdao.aliyuncs.com/kubernetes_xingej/nginx-ingress-controller:0.20.0

docker pull registry.cn-qingdao.aliyuncs.com/kubernetes_xingej/defaultbackend-amd64:1.5

docker tag registry.cn-qingdao.aliyuncs.com/kubernetes_xingej/nginx-ingress-controller:0.20.0 quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.20.0

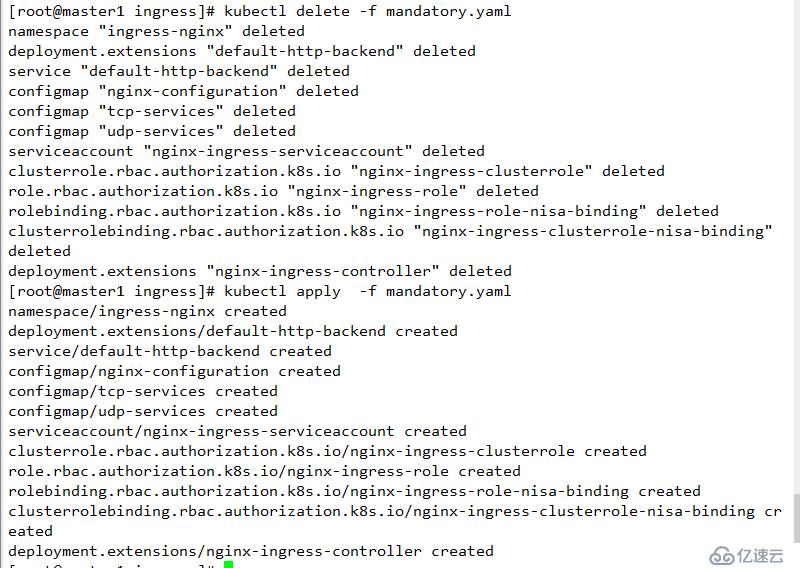

docker tag registry.cn-qingdao.aliyuncs.com/kubernetes_xingej/defaultbackend-amd64:1.5 k8s.gcr.io/defaultbackend-amd64:1.5

Delete and redeploy:

kubectl delete -f mandatory.yaml

kubectl apply -f mandatory.yaml

Check:

kubectl get pods -n ingress-nginx

If the Pods cannot be created at this point, the apiserver's admission control is probably rejecting them. Remove SecurityContextDeny and ServiceAccount from --enable-admission-plugins in /opt/kubernetes/cfg/kube-apiserver, restart the apiserver, and redeploy.

apiVersion: v1

kind: Service

metadata:

name: nginx-ingress-controller

namespace: ingress-nginx

spec:

type: NodePort

clusterIP: 10.0.0.100

ports:

- port: 80

name: http

nodePort: 30080

- port: 443

name: https

nodePort: 30443

selector:

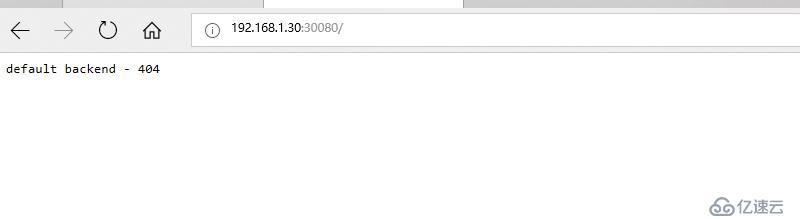

app.kubernetes.io/name: ingress-nginxkubectl apply -f service.yaml

Check:

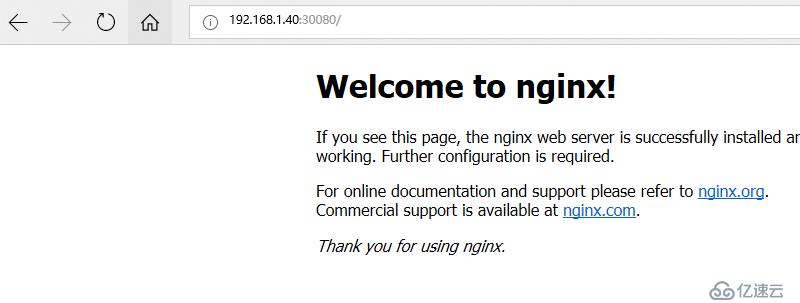

Verify:

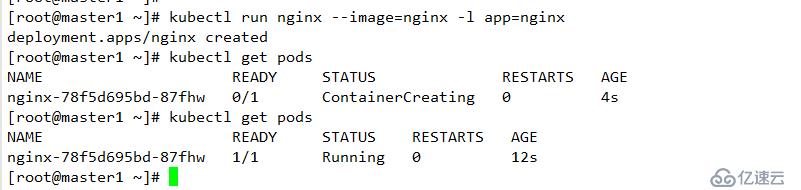

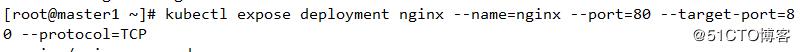
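The nodePort values above (30080, 30443) and the dashboard's 45201 must lie inside the apiserver's --service-node-port-range, 30000-50000 here; the apiserver rejects a Service whose nodePort is outside it. A trivial sketch of the check (in_node_port_range is a hypothetical name):

```shell
# Sketch: is a port inside the NodePort range? Defaults match
# --service-node-port-range=30000-50000 in this guide.
in_node_port_range() {          # usage: in_node_port_range PORT [MIN] [MAX]
  [ "$1" -ge "${2:-30000}" ] && [ "$1" -le "${3:-50000}" ]
}

for p in 30080 30443 45201; do
  if in_node_port_range "$p"; then echo "$p ok"; else echo "$p out of range"; fi
done
```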

Configure a default backend site, ingress/nginx.yaml:

```yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: default-backend-nginx
  namespace: default
spec:
  backend:
    serviceName: nginx
    servicePort: 80
```

Deploy it:

kubectl apply -f ingress/nginx.yaml

Check:

Deploy metrics-server:

mkdir metrics-server

cd metrics-server/

yum -y install git

git clone https://github.com/kubernetes-incubator/metrics-server.git

metrics-server-deployment.yaml:

```yaml
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: metrics-server
  namespace: kube-system
---
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: metrics-server
  namespace: kube-system
  labels:
    k8s-app: metrics-server
spec:
  selector:
    matchLabels:
      k8s-app: metrics-server
  template:
    metadata:
      name: metrics-server
      labels:
        k8s-app: metrics-server
    spec:
      serviceAccountName: metrics-server
      volumes:
      # mount in tmp so we can safely use from-scratch images and/or read-only containers
      - name: tmp-dir
        emptyDir: {}
      containers:
      - name: metrics-server
        image: registry.cn-beijing.aliyuncs.com/minminmsn/metrics-server:v0.3.1
        imagePullPolicy: Always
        volumeMounts:
        - name: tmp-dir
          mountPath: /tmp
```

#service.yaml

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: metrics-ingress
  namespace: kube-system
  annotations:
    nginx.ingress.kubernetes.io/ingress.class: nginx
    nginx.ingress.kubernetes.io/secure-backends: "true"
    nginx.ingress.kubernetes.io/ssl-passthrough: "true"
spec:
  tls:
  - hosts:
    - metrics.minminmsn.com
    secretName: ingress-secret
  rules:
  - host: metrics.minminmsn.com
    http:
      paths:
      - path: /
        backend:
          serviceName: metrics-server
          servicePort: 443

#/opt/kubernetes/cfg/kube-apiserver

KUBE_APISERVER_OPTS="--logtostderr=true \
--v=4 \
--etcd-servers=https://192.168.1.10:2379,https://192.168.1.20:2379,https://192.168.1.30:2379 \
--bind-address=192.168.1.10 \
--secure-port=6443 \
--advertise-address=192.168.1.10 \
--allow-privileged=true \
--service-cluster-ip-range=10.0.0.0/24 \
--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ResourceQuota,NodeRestriction \
--authorization-mode=RBAC,Node \
--enable-bootstrap-token-auth \
--token-auth-file=/opt/kubernetes/cfg/token.csv \
--service-node-port-range=30000-50000 \
--tls-cert-file=/opt/kubernetes/ssl/server.pem \
--tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \
--client-ca-file=/opt/kubernetes/ssl/ca.pem \
--service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \
--etcd-cafile=/opt/etcd/ssl/ca.pem \
--etcd-certfile=/opt/etcd/ssl/server.pem \
--etcd-keyfile=/opt/etcd/ssl/server-key.pem \
--requestheader-client-ca-file=/opt/kubernetes/ssl/ca.pem \
--requestheader-allowed-names=aggregator \
--requestheader-extra-headers-prefix=X-Remote-Extra- \
--requestheader-group-headers=X-Remote-Group \
--requestheader-username-headers=X-Remote-User \
--proxy-client-cert-file=/opt/kubernetes/ssl/kube-proxy.pem \
--proxy-client-key-file=/opt/kubernetes/ssl/kube-proxy-key.pem"

The last seven flags, from --requestheader-client-ca-file onward, are the additions needed for the aggregation layer that metrics-server relies on.

Restart the apiserver:

systemctl restart kube-apiserver.service

Then deploy metrics-server:

cd metrics-server/deploy/1.8+/

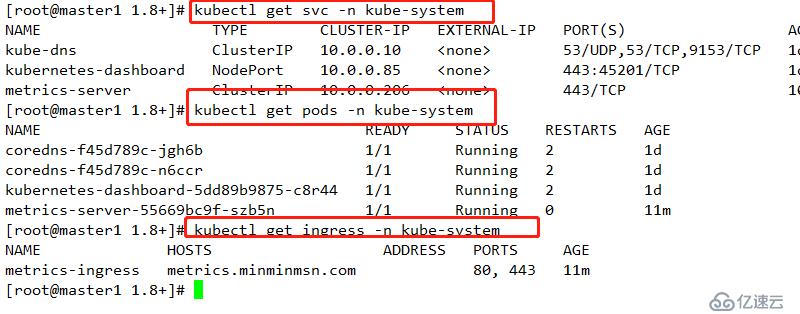

kubectl apply -f .

Check the configuration:

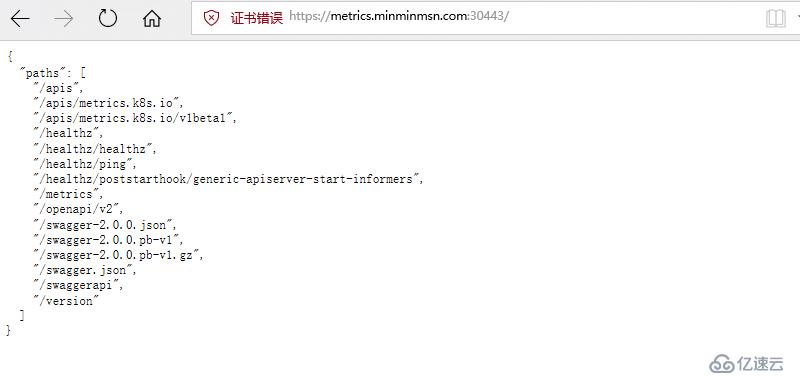
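If the metrics API does not come up, first confirm that the apiserver options actually contain all seven aggregation flags listed above. The Python sketch below extracts every `--flag[=value]` from a KUBE_APISERVER_OPTS-style file and reports the missing ones. The helper names are hypothetical. The Kubernetes aggregation-layer documentation also says `--enable-aggregator-routing=true` must be set when kube-proxy does not run on the apiserver hosts, as with the masters in this guide's role table. The sketch does not check that flag.

```python
import re

# Flags the aggregation layer needs (as listed in the kube-apiserver options above).
AGGREGATION_FLAGS = (
    "--requestheader-client-ca-file",
    "--requestheader-allowed-names",
    "--requestheader-extra-headers-prefix",
    "--requestheader-group-headers",
    "--requestheader-username-headers",
    "--proxy-client-cert-file",
    "--proxy-client-key-file",
)

# A flag name, optionally followed by =value (the value ends at whitespace, a quote or a backslash).
_FLAG = re.compile(r'(--[A-Za-z0-9-]+)(?:=([^\s"\\]*))?')


def parse_opts(text):
    """Map flag -> value ('' for bare flags) for every --flag in the text."""
    return dict(_FLAG.findall(text))


def missing_aggregation_flags(text):
    flags = parse_opts(text)
    return [name for name in AGGREGATION_FLAGS if name not in flags]


if __name__ == "__main__":
    import pathlib
    cfg = pathlib.Path("/opt/kubernetes/cfg/kube-apiserver")  # path used in this guide
    if cfg.exists():
        print(missing_aggregation_flags(cfg.read_text()) or "all aggregation flags present")
```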

The ingress controller's Service, set up earlier, maps NodePort 30443 to port 443, so this endpoint must be accessed over HTTPS.
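Once the metrics API is available, node figures can be read with `kubectl top node` or from the raw endpoint `kubectl get --raw /apis/metrics.k8s.io/v1beta1/nodes`. The Python sketch below turns that raw JSON into CPU cores and MiB. It handles only the quantity suffixes listed in the code. Kubernetes quantities also allow other forms, such as exponents, that this sketch does not parse. The function names and the sample document are hypothetical.

```python
import json

# Kubernetes quantity suffixes: binary (Ki, Mi, ...) and decimal/SI (n, u, m, k, M, G, T).
_BINARY = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}
_DECIMAL = {"n": 1e-9, "u": 1e-6, "m": 1e-3, "k": 1e3, "M": 1e6, "G": 1e9, "T": 1e12}


def parse_quantity(q):
    """Convert a quantity such as '250m', '1024Ki' or '2' to a float."""
    if q[-2:] in _BINARY:
        return float(q[:-2]) * _BINARY[q[-2:]]
    if q[-1] in _DECIMAL:
        return float(q[:-1]) * _DECIMAL[q[-1]]
    return float(q)


def node_usage(raw_json):
    """Map node name -> (CPU cores, memory MiB) from a NodeMetricsList document."""
    doc = json.loads(raw_json)
    return {
        item["metadata"]["name"]: (
            parse_quantity(item["usage"]["cpu"]),
            parse_quantity(item["usage"]["memory"]) / 2**20,
        )
        for item in doc["items"]
    }


if __name__ == "__main__":
    # Hypothetical sample shaped like the metrics.k8s.io/v1beta1 nodes response.
    sample = ('{"kind": "NodeMetricsList", "items": [{"metadata": {"name": "192.168.1.30"},'
              ' "usage": {"cpu": "250m", "memory": "1048576Ki"}}]}')
    print(node_usage(sample))
```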

git clone https://github.com/iKubernetes/k8s-prom.git

cd k8s-prom/

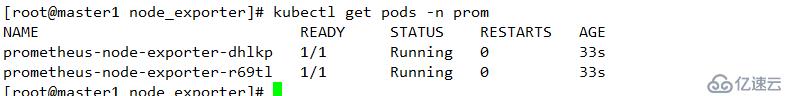

kubectl apply -f namespace.yaml

cd node_exporter/

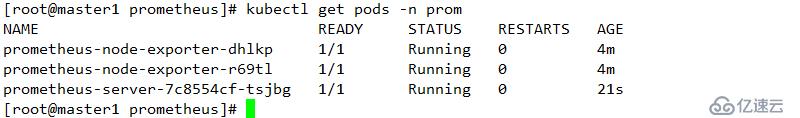

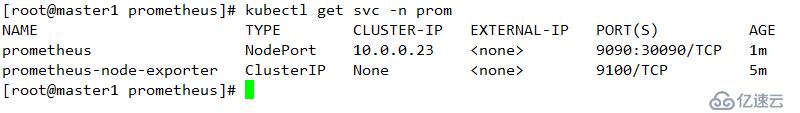

kubectl apply -f .

Check:

kubectl get pods -n prom

cd ../prometheus/

#prometheus-deploy.yaml

#Remove the resource limits from it; the result is as follows:

---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: prometheus-server
  namespace: prom
  labels:
    app: prometheus
spec:
  replicas: 1
  selector:
    matchLabels:
      app: prometheus
      component: server
    #matchExpressions:
    #- {key: app, operator: In, values: [prometheus]}
    #- {key: component, operator: In, values: [server]}
  template:
    metadata:
      labels:
        app: prometheus
        component: server
      annotations:
        prometheus.io/scrape: 'false'
    spec:
      serviceAccountName: prometheus
      containers:
      - name: prometheus
        image: prom/prometheus:v2.2.1
        imagePullPolicy: Always
        command:
          - prometheus
          - --config.file=/etc/prometheus/prometheus.yml
          - --storage.tsdb.path=/prometheus
          - --storage.tsdb.retention=720h
        ports:
        - containerPort: 9090
          protocol: TCP
        volumeMounts:
        - mountPath: /etc/prometheus/prometheus.yml
          name: prometheus-config
          subPath: prometheus.yml
        - mountPath: /prometheus/
          name: prometheus-storage-volume
      volumes:
        - name: prometheus-config
          configMap:
            name: prometheus-config
            items:
              - key: prometheus.yml
                path: prometheus.yml
                mode: 0644
        - name: prometheus-storage-volume
          emptyDir: {}
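Because this manifest was edited by hand, check that every `volumeMounts` entry still names a volume declared in the same Pod spec; otherwise the apiserver rejects the Deployment. The Python sketch below works on the Pod spec as a plain dict. Loading the YAML file (for example with the third-party PyYAML package) is left out. `dangling_mounts` and `PROMETHEUS_POD` are hypothetical names.

```python
def dangling_mounts(pod_spec):
    """Return (container, mount) pairs whose mount name has no matching volume."""
    declared = {volume["name"] for volume in pod_spec.get("volumes", [])}
    return [
        (container["name"], mount["name"])
        for container in pod_spec.get("containers", [])
        for mount in container.get("volumeMounts", [])
        if mount["name"] not in declared
    ]


# The Pod template spec of prometheus-server above, reduced to the relevant fields.
PROMETHEUS_POD = {
    "containers": [{
        "name": "prometheus",
        "volumeMounts": [
            {"mountPath": "/etc/prometheus/prometheus.yml", "name": "prometheus-config",
             "subPath": "prometheus.yml"},
            {"mountPath": "/prometheus/", "name": "prometheus-storage-volume"},
        ],
    }],
    "volumes": [
        {"name": "prometheus-config", "configMap": {"name": "prometheus-config"}},
        {"name": "prometheus-storage-volume", "emptyDir": {}},
    ],
}

if __name__ == "__main__":
    print(dangling_mounts(PROMETHEUS_POD) or "every mount has a volume")
```

`--storage.tsdb.retention=720h` keeps 30 days (720 / 24) of samples. Because the storage volume is an emptyDir, that data is lost whenever the Pod is removed from its node.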

# Deploy

kubectl apply -f .

Check:

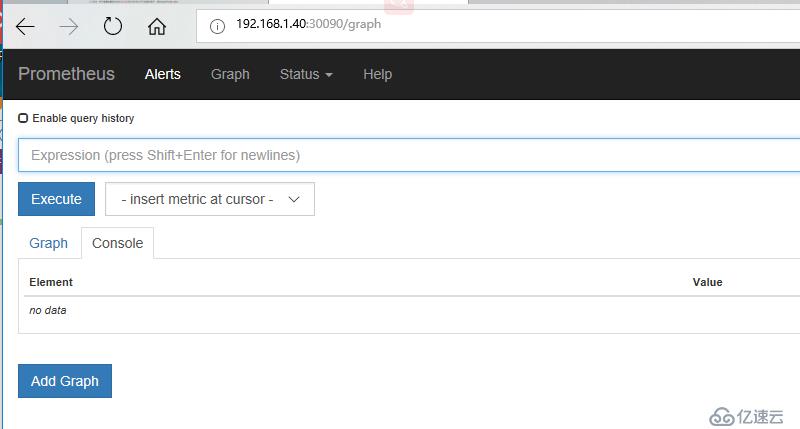

kubectl get pods -n prom

Verify:

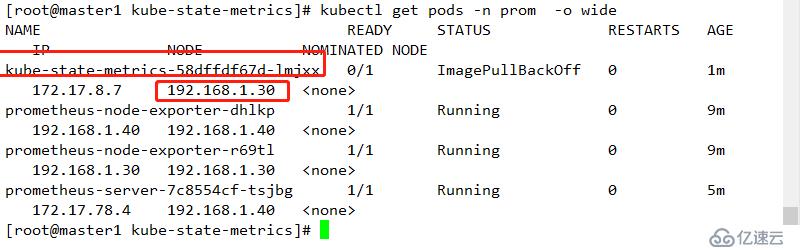

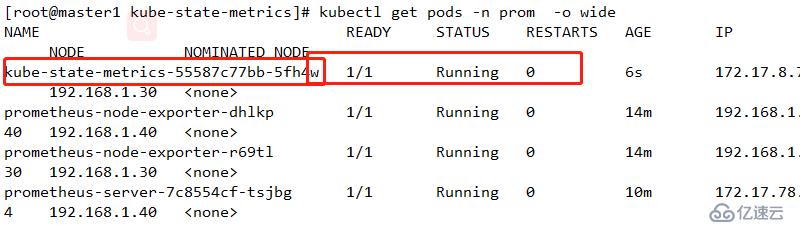
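Besides the web UI, the same check can be run against the Prometheus HTTP API. `GET /api/v1/query?query=up` returns one sample per scrape target, with value "1" when the last scrape succeeded. The Python sketch below builds the query URL and lists the targets that are down. The base address is a placeholder (a node IP plus the Prometheus NodePort), and the sample response and helper names are hypothetical. Fetching the URL itself, for example with `urllib.request.urlopen`, is left out.

```python
import json
from urllib.parse import urlencode


def query_url(base, promql):
    """Build an instant-query URL for the Prometheus HTTP API."""
    return f"{base.rstrip('/')}/api/v1/query?{urlencode({'query': promql})}"


def down_targets(response_text):
    """Return the label sets of `up` series whose value is not "1"."""
    body = json.loads(response_text)
    if body.get("status") != "success":
        raise RuntimeError(f"query failed: {body.get('error')}")
    return [r["metric"] for r in body["data"]["result"] if r["value"][1] != "1"]


if __name__ == "__main__":
    print(query_url("http://NODE_IP:NODE_PORT/", "up"))  # placeholder address
    sample = json.dumps({"status": "success", "data": {"resultType": "vector", "result": [
        {"metric": {"job": "kubernetes-nodes", "instance": "192.168.1.30"}, "value": [0, "1"]},
        {"metric": {"job": "kubernetes-nodes", "instance": "192.168.1.40"}, "value": [0, "0"]},
    ]}})
    print(down_targets(sample))
```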

cd ../kube-state-metrics/

kubectl apply -f .

Check which node it was scheduled on:

On that node, pull the image:

docker pull quay.io/coreos/kube-state-metrics:v1.3.1

docker tag quay.io/coreos/kube-state-metrics:v1.3.1 gcr.io/google_containers/kube-state-metrics-amd64:v1.3.1

#Redeploy

kubectl delete -f .

kubectl apply -f .

Check:

Prepare the serving certificate for k8s-prometheus-adapter:

cd /opt/kubernetes/ssl/

(umask 077;openssl genrsa -out serving.key 2048)

openssl req -new -key serving.key -out serving.csr -subj "/CN=serving"

openssl x509 -req -in serving.csr -CA ./kubelet.crt -CAkey ./kubelet.key -CAcreateserial -out serving.crt -days 3650

kubectl create secret generic cm-adapter-serving-certs --from-file=serving.crt=./serving.crt --from-file=serving.key=./serving.key -n prom

Check:

kubectl get secret -n prom

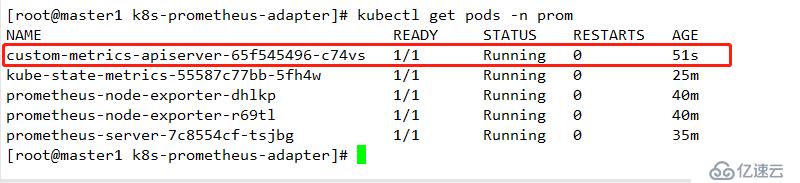
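`kubectl create secret generic` stores each `--from-file` entry under its key, base64-encoded, in the Secret's `data` field. When the Secret is mounted (as volume-serving-cert in the adapter Deployment below), each key becomes a file. That is why the adapter can read /var/run/serving-cert/serving.crt. The Python sketch below reproduces that encoding. `generic_secret` is a hypothetical helper, not part of kubectl, and the file contents in the example are placeholders.

```python
import base64


def generic_secret(name, namespace, files):
    """Build a Secret dict the way `kubectl create secret generic --from-file=KEY=PATH`
    does: each file's bytes are base64-encoded under its key in `data`."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "type": "Opaque",
        "metadata": {"name": name, "namespace": namespace},
        "data": {key: base64.b64encode(content).decode("ascii")
                 for key, content in files.items()},
    }


if __name__ == "__main__":
    # Placeholder bytes stand in for the real serving.crt / serving.key contents.
    secret = generic_secret("cm-adapter-serving-certs", "prom",
                            {"serving.crt": b"placeholder-cert",
                             "serving.key": b"placeholder-key"})
    print(sorted(secret["data"]))
```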

Deploy the resources:

cd k8s-prometheus-adapter/

mv custom-metrics-apiserver-deployment.yaml custom-metrics-apiserver-deployment.yaml.bak

wget https://raw.githubusercontent.com/DirectXMan12/k8s-prometheus-adapter/master/deploy/manifests/custom-metrics-apiserver-deployment.yaml

# Change the namespace

#custom-metrics-apiserver-deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: custom-metrics-apiserver
  name: custom-metrics-apiserver
  namespace: prom
spec:
  replicas: 1
  selector:
    matchLabels:
      app: custom-metrics-apiserver
  template:
    metadata:
      labels:
        app: custom-metrics-apiserver
      name: custom-metrics-apiserver
    spec:
      serviceAccountName: custom-metrics-apiserver
      containers:
      - name: custom-metrics-apiserver
        image: directxman12/k8s-prometheus-adapter-amd64
        args:
        - --secure-port=6443
        - --tls-cert-file=/var/run/serving-cert/serving.crt
        - --tls-private-key-file=/var/run/serving-cert/serving.key
        - --logtostderr=true
        - --prometheus-url=http://prometheus.prom.svc:9090/
        - --metrics-relist-interval=1m
        - --v=10
        - --config=/etc/adapter/config.yaml
        ports:
        - containerPort: 6443
        volumeMounts:
        - mountPath: /var/run/serving-cert
          name: volume-serving-cert
          readOnly: true
        - mountPath: /etc/adapter/
          name: config
          readOnly: true
        - mountPath: /tmp
          name: tmp-vol
      volumes:
      - name: volume-serving-cert
        secret:
          secretName: cm-adapter-serving-certs
      - name: config
        configMap:
          name: adapter-config
      - name: tmp-vol
        emptyDir: {}

wget https://raw.githubusercontent.com/DirectXMan12/k8s-prometheus-adapter/master/deploy/manifests/custom-metrics-config-map.yaml

#Change the namespace

#custom-metrics-config-map.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: adapter-config
  namespace: prom
data:
  config.yaml: |
    rules:
    - seriesQuery: '{__name__=~"^container_.*",container_name!="POD",namespace!="",pod_name!=""}'
      seriesFilters: []
      resources:
        overrides:
          namespace:
            resource: namespace
          pod_name:
            resource: pod
      name:
        matches: ^container_(.*)_seconds_total$
        as: ""
      metricsQuery: sum(rate(<<.Series>>{<<.LabelMatchers>>,container_name!="POD"}[1m])) by (<<.GroupBy>>)
    - seriesQuery: '{__name__=~"^container_.*",container_name!="POD",namespace!="",pod_name!=""}'
      seriesFilters:
      - isNot: ^container_.*_seconds_total$
      resources:
        overrides:
          namespace:
            resource: namespace
          pod_name:
            resource: pod
      name:
        matches: ^container_(.*)_total$
        as: ""
      metricsQuery: sum(rate(<<.Series>>{<<.LabelMatchers>>,container_name!="POD"}[1m])) by (<<.GroupBy>>)
    - seriesQuery: '{__name__=~"^container_.*",container_name!="POD",namespace!="",pod_name!=""}'
      seriesFilters:
      - isNot: ^container_.*_total$
      resources:
        overrides:
          namespace:
            resource: namespace
          pod_name:
            resource: pod
      name:
        matches: ^container_(.*)$
        as: ""
      metricsQuery: sum(<<.Series>>{<<.LabelMatchers>>,container_name!="POD"}) by (<<.GroupBy>>)
    - seriesQuery: '{namespace!="",__name__!~"^container_.*"}'
      seriesFilters:
      - isNot: .*_total$
      resources:
        template: <<.Resource>>
      name:
        matches: ""
        as: ""
      metricsQuery: sum(<<.Series>>{<<.LabelMatchers>>}) by (<<.GroupBy>>)
    - seriesQuery: '{namespace!="",__name__!~"^container_.*"}'
      seriesFilters:
      - isNot: .*_seconds_total
      resources:
        template: <<.Resource>>
      name:
        matches: ^(.*)_total$
        as: ""
      metricsQuery: sum(rate(<<.Series>>{<<.LabelMatchers>>}[1m])) by (<<.GroupBy>>)
    - seriesQuery: '{namespace!="",__name__!~"^container_.*"}'
      seriesFilters: []
      resources:
        template: <<.Resource>>
      name:
        matches: ^(.*)_seconds_total$
        as: ""
      metricsQuery: sum(rate(<<.Series>>{<<.LabelMatchers>>}[1m])) by (<<.GroupBy>>)
    resourceRules:
      cpu:
        containerQuery: sum(rate(container_cpu_usage_seconds_total{<<.LabelMatchers>>}[1m])) by (<<.GroupBy>>)
        nodeQuery: sum(rate(container_cpu_usage_seconds_total{<<.LabelMatchers>>, id='/'}[1m])) by (<<.GroupBy>>)
        resources:
          overrides:
            instance:
              resource: node
            namespace:
              resource: namespace
            pod_name:
              resource: pod
        containerLabel: container_name
      memory:
        containerQuery: sum(container_memory_working_set_bytes{<<.LabelMatchers>>}) by (<<.GroupBy>>)
        nodeQuery: sum(container_memory_working_set_bytes{<<.LabelMatchers>>,id='/'}) by (<<.GroupBy>>)
        resources:
          overrides:
            instance:
              resource: node
            namespace:
              resource: namespace
            pod_name:
              resource: pod
        containerLabel: container_name
      window: 1m

Deploy:

kubectl apply -f custom-metrics-config-map.yaml

kubectl apply -f .

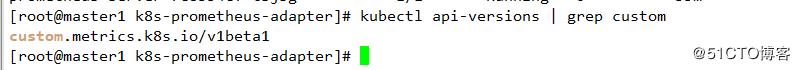

Check:

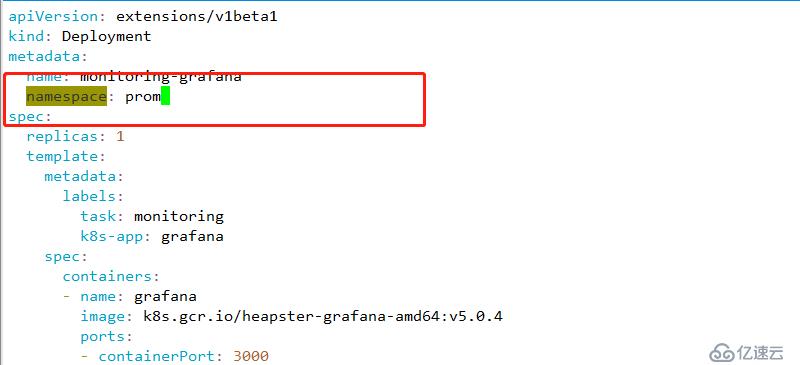

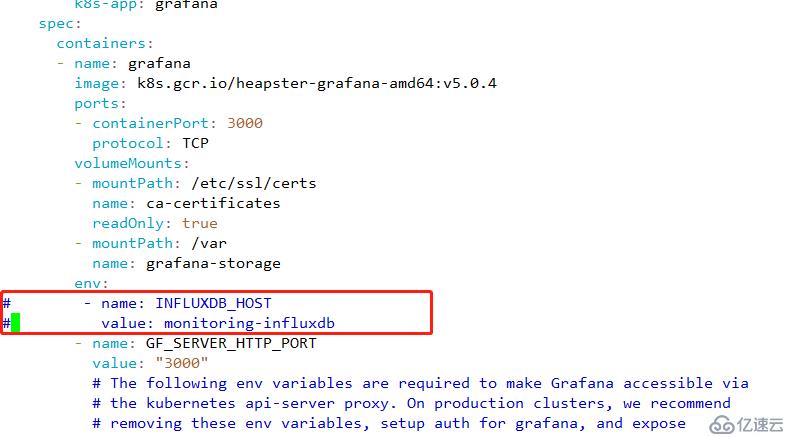
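The three `container_*` rules in adapter-config decide which custom-metric name each cAdvisor series is exposed under. Our reading of the adapter's configuration docs is that `seriesFilters: isNot` drops a series for that rule. With `as: ""`, the name is the first capture group of `name.matches` if there is one, otherwise the whole series name. The Python sketch below models only that renaming under those assumptions. It is not the adapter's code, and `CONTAINER_RULES` and `exposed_names` are hypothetical names.

```python
import re

# The three container_* rules from adapter-config, reduced to
# (seriesFilters isNot patterns, name.matches pattern). Hypothetical simplification.
CONTAINER_RULES = [
    ([], r"^container_(.*)_seconds_total$"),
    ([r"^container_.*_seconds_total$"], r"^container_(.*)_total$"),
    ([r"^container_.*_total$"], r"^container_(.*)$"),
]


def exposed_names(series_name, rules=CONTAINER_RULES):
    """Return the custom-metric names the rules would derive from one series name."""
    names = []
    for is_not, matches in rules:
        if any(re.search(p, series_name) for p in is_not):
            continue  # seriesFilters: isNot drops the series for this rule
        m = re.search(matches, series_name)
        if m:
            # as: "" -> first capture group if the pattern has one, else the whole name
            names.append(m.group(1) if m.re.groups == 1 else m.group(0))
    return names


if __name__ == "__main__":
    for s in ("container_cpu_usage_seconds_total",
              "container_network_receive_bytes_total",
              "container_memory_working_set_bytes"):
        print(s, "->", exposed_names(s))
```

The filters make the three rules mutually exclusive, so each series gets exactly one name. The first two rules wrap the series in `rate(...[1m])`, so for example `cpu_usage` is served as a per-second rate. The resulting names can be listed with `kubectl get --raw /apis/custom.metrics.k8s.io/v1beta1`.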

wget https://raw.githubusercontent.com/kubernetes-retired/heapster/master/deploy/kube-config/influxdb/grafana.yaml

Then edit it as follows:

1 Change the namespace

2 Change the storage it uses by default

3 Change the Service's namespace

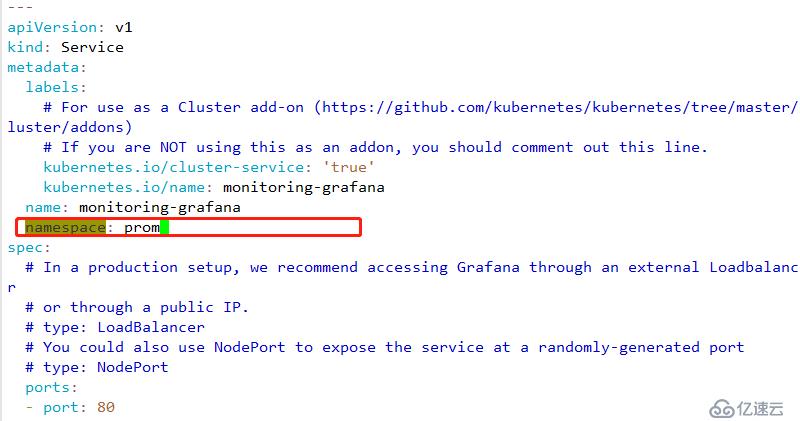

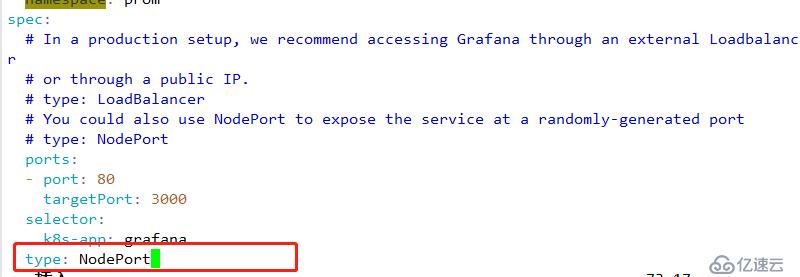

4 Change the Service type to NodePort for external access

5 The resulting configuration file:

#grafana.yaml

apiVersion: extensions/v1beta1

kind: Deployment

metadata:

name: monitoring-grafana

namespace: prom

spec:

replicas: 1

template:

metadata:

labels:

task: monitoring

k8s-app: grafana

spec:

containers:

- name: grafana

image: registry.cn-hangzhou.aliyuncs.com/google_containers/heapster-grafana-amd64:v5.0.4

ports:

- containerPort: 3000

protocol: TCP

volumeMounts:

- mountPath: /etc/ssl/certs

name: ca-certificates

readOnly: true

- mountPath: /var

name: grafana-storage

env:

# - name: INFLUXDB_HOST

# value: monitoring-influxdb

- name: GF_SERVER_HTTP_PORT

value: "3000"

# The following env variables are required to make Grafana accessible via

# the kubernetes api-server proxy. On production clusters, we recommend

# removing these env variables, setup auth for grafana, and expose the grafana

# service using a LoadBalancer or a public IP.

- name: GF_AUTH_BASIC_ENABLED

value: "false"

- name: GF_AUTH_ANONYMOUS_ENABLED

value: "true"

- name: GF_AUTH_ANONYMOUS_ORG_ROLE

value: Admin

- name: GF_SERVER_ROOT_URL

# If you're only using the API Server proxy, set this value instead:

# value: /api/v1/namespaces/kube-system/services/monitoring-grafana/proxy

value: /

volumes:

- name: ca-certificates

hostPath:

path: /etc/ssl/certs

- name: grafana-storage

emptyDir: {}

---

apiVersion: v1

kind: Service

metadata:

labels:

# For use as a Cluster add-on (https://github.com/kubernetes/kubernetes/tree/master/cluster/addons)

# If you are NOT using this as an addon, you should comment out this line.

kubernetes.io/cluster-service: 'true'

kubernetes.io/name: monitoring-grafana

name: monitoring-grafana

namespace: prom

spec:

# In a production setup, we recommend accessing Grafana through an external Loadbalancer

# or through a public IP.

# type: LoadBalancer

# You could also use NodePort to expose the service at a randomly-generated port

# type: NodePort

ports:

- port: 80

targetPort: 3000

selector:

k8s-app: grafana

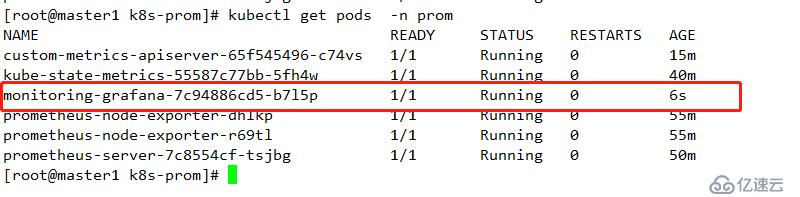

type: NodePortkubectl apply -f grafana.yaml

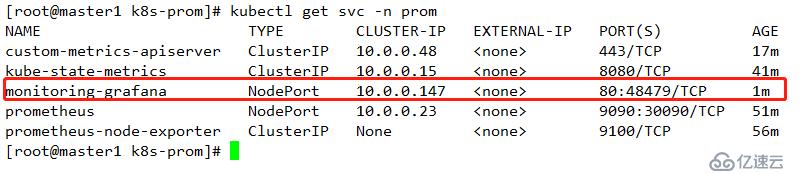

Check which node it runs on:

Check its mapped port:

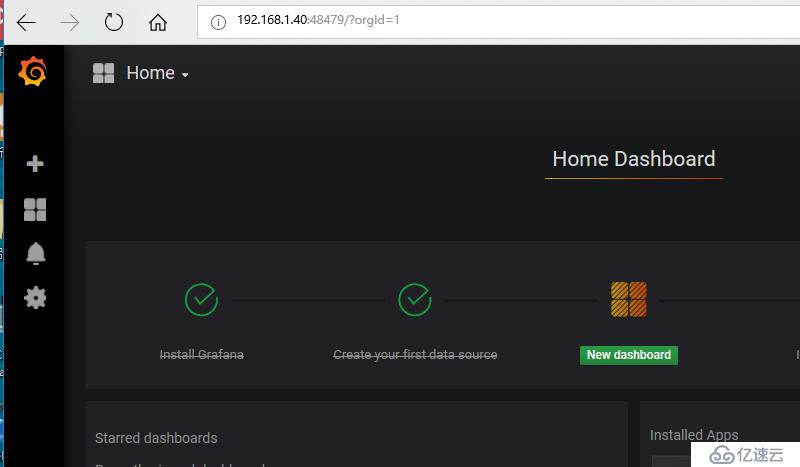

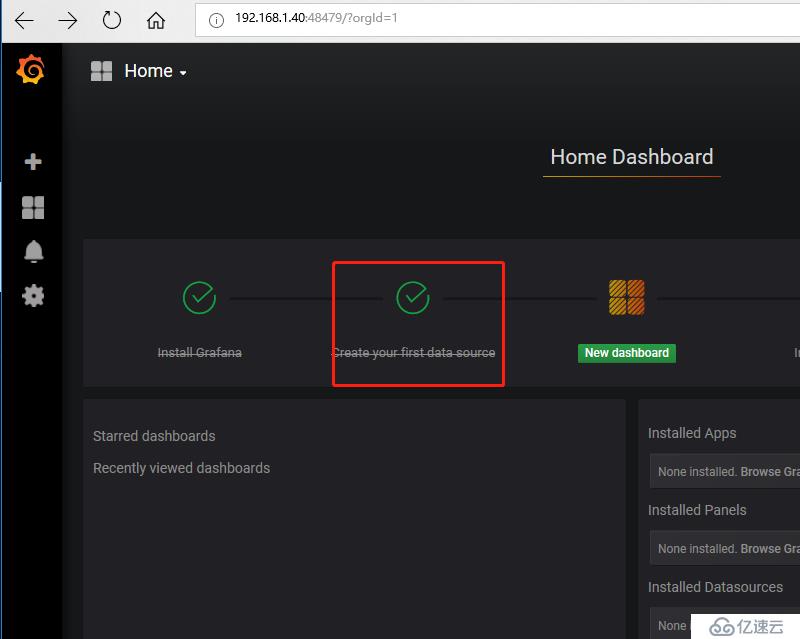

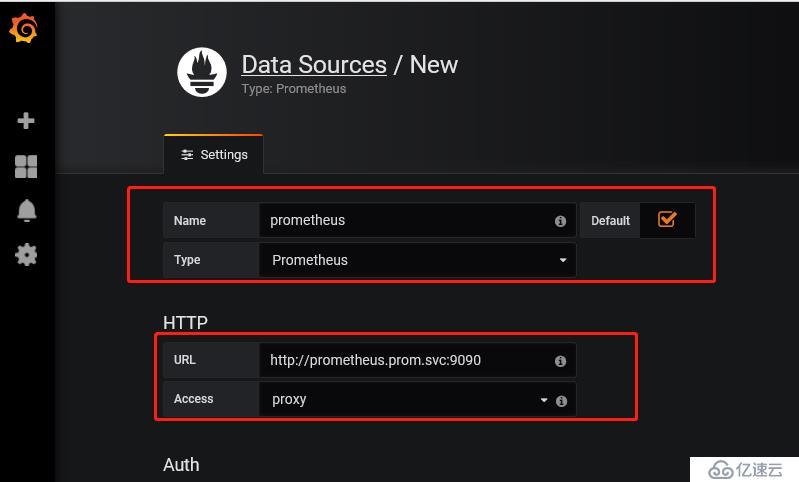

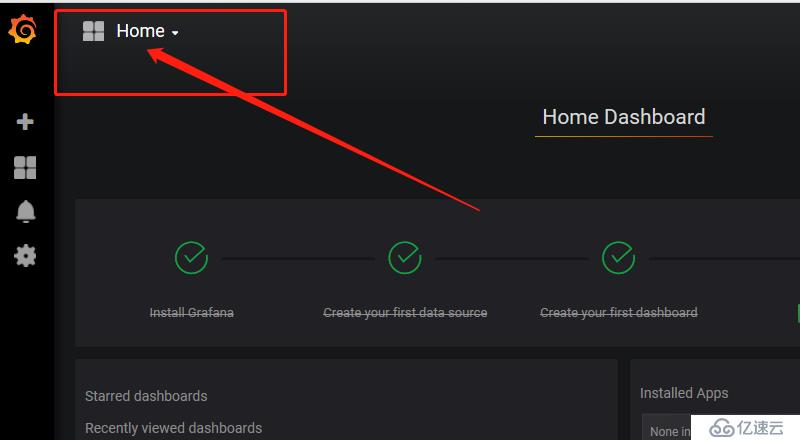
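With `type: NodePort` and no explicit nodePort, the apiserver picks a free port from `--service-node-port-range`. Grafana is then reachable on that port of every node running kube-proxy (node1 and node2 in this guide's role table). The Python sketch below derives the URLs from the Service's JSON, as printed by `kubectl get svc monitoring-grafana -n prom -o json`. The helper name and the nodePort value in the sample are hypothetical; the real port is whatever the apiserver assigned.

```python
import json


def node_port_urls(service_json, node_ips, scheme="http"):
    """Build URLs for every nodePort of a Service, on every given node IP."""
    service = json.loads(service_json)
    ports = [p["nodePort"] for p in service["spec"].get("ports", []) if "nodePort" in p]
    return [f"{scheme}://{ip}:{port}" for ip in node_ips for port in ports]


if __name__ == "__main__":
    # Hypothetical excerpt of the Service JSON; 31234 stands in for the assigned port.
    sample = ('{"spec": {"type": "NodePort", "ports": '
              '[{"port": 80, "targetPort": 3000, "nodePort": 31234}]}}')
    print(node_port_urls(sample, ["192.168.1.30", "192.168.1.40"]))
```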

Check:

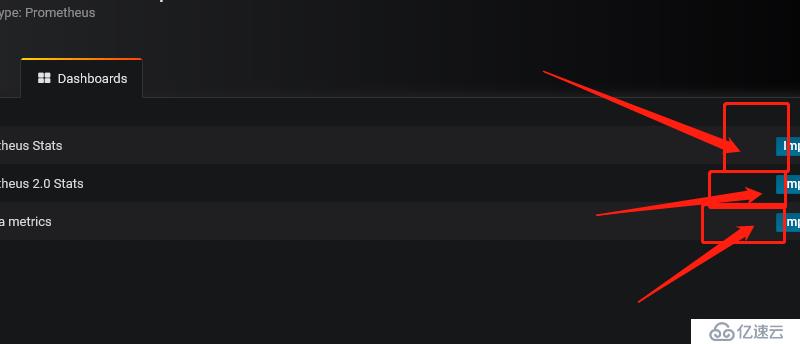

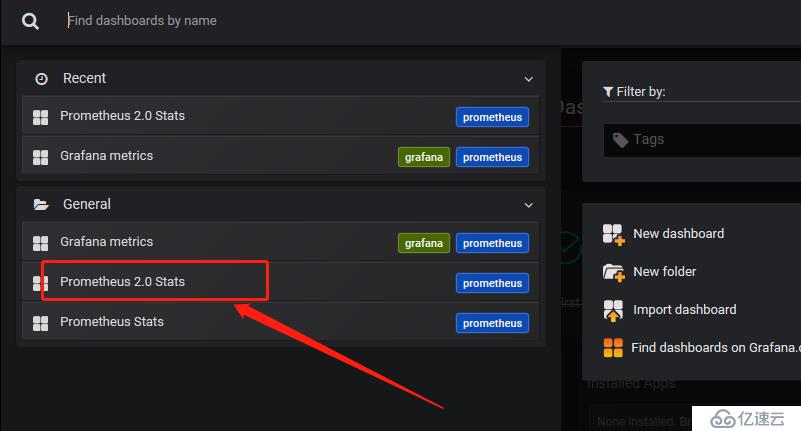

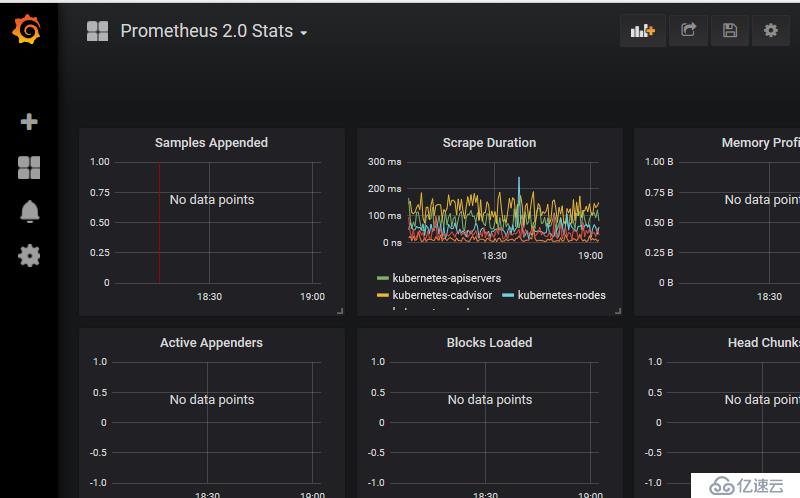

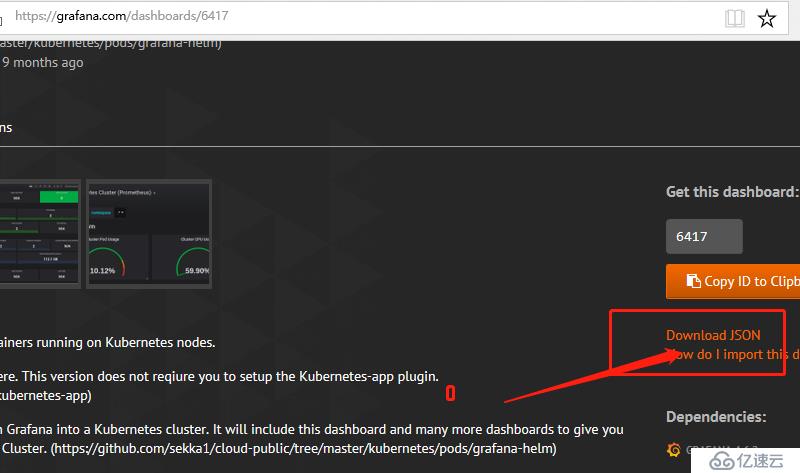

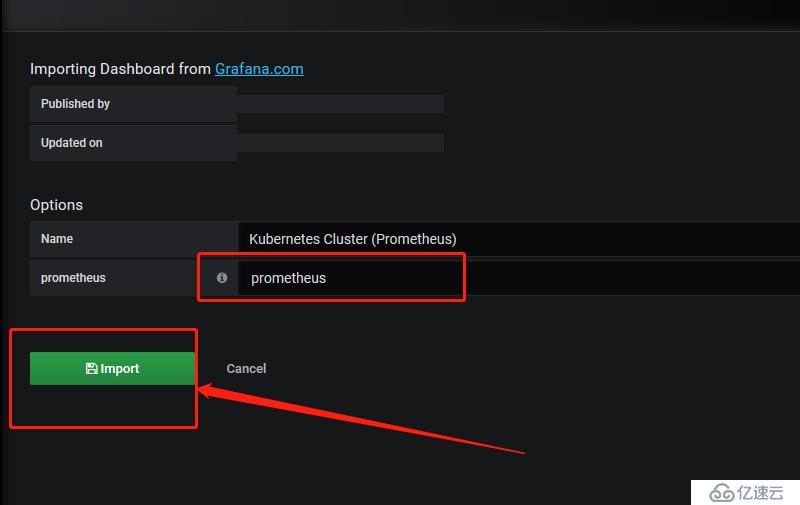

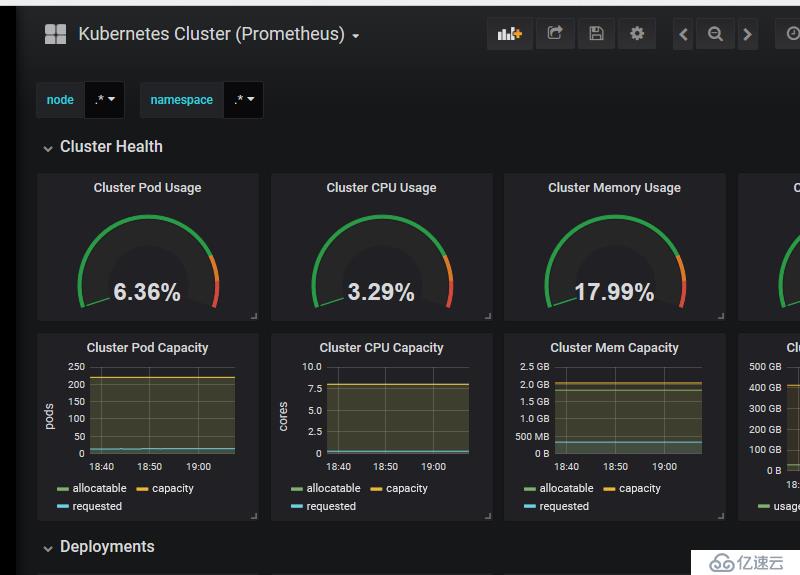

1 Find the dashboard and download it:

https://grafana.com/dashboards/6417

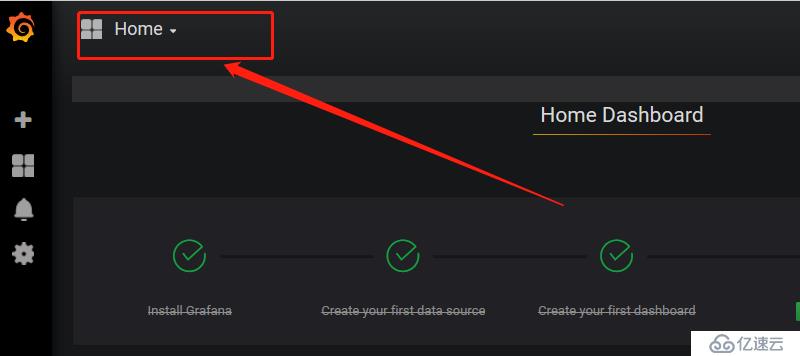

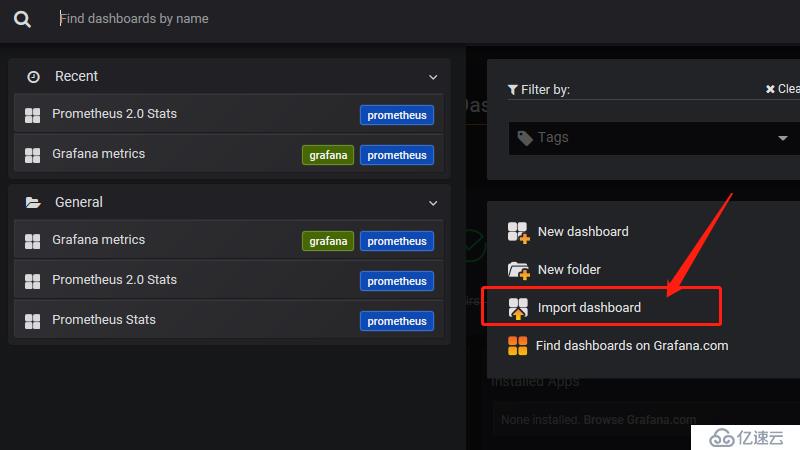

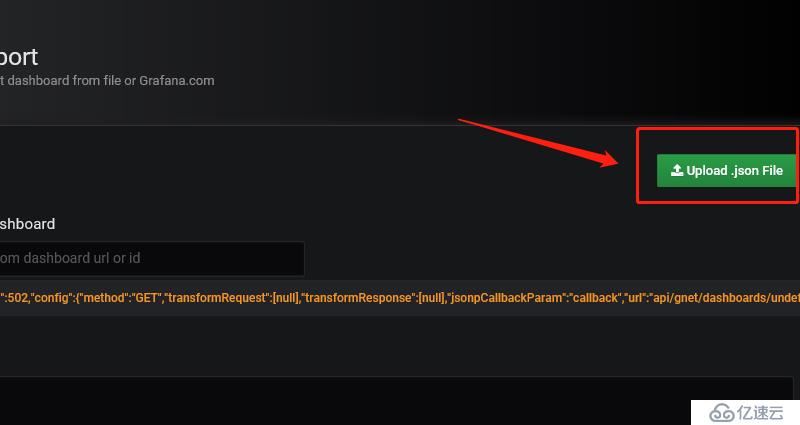

2 Import the dashboard
