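1. Install three virtual machines; physical machines work just as well.

2. The three IP addresses are 192.168.222.135, 192.168.222.139, and 192.168.222.140.

3. Set the hostname on the master node:

hostnamectl set-hostname master

4. Set the hostname on each of the two worker nodes (node1 on the first, node2 on the second):

hostnamectl set-hostname node1

5. Log out of the shell and log back in.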
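The hostname steps above can be sketched as a small lookup so that the same file works on all three machines. This is only an illustration: the function names are made up for this example, and the assignment of 192.168.222.139 to node1 and 192.168.222.140 to node2 is an assumption, since the article does not say which worker is which.

```shell
#!/bin/sh
# Map each server IP from this guide to the hostname it should receive.
# Assumption: .139 becomes node1 and .140 becomes node2.
hostname_for_ip() {
    case "$1" in
        192.168.222.135) echo master ;;
        192.168.222.139) echo node1 ;;
        192.168.222.140) echo node2 ;;
        *) echo "unknown IP: $1" >&2; return 1 ;;
    esac
}

# Print (rather than run) the command for a given IP so it can be reviewed first.
print_hostname_cmd() {
    name=$(hostname_for_ip "$1") || return 1
    echo "hostnamectl set-hostname $name"
}

# Usage:
#   print_hostname_cmd 192.168.222.139
```

Printing the command instead of executing it keeps the sketch free of side effects; pipe the output to sh once it looks right.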

1. Install docker, kubelet, kubeadm, and kubectl on all three servers. The script below does this and is free to reuse.

~]# vi setup.sh

Paste the following into the file:

#!/bin/sh

# install some tools

sudo yum install -y vim telnet bind-utils wget

sudo bash -c 'cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF'

# install and start docker

sudo yum install -y docker

sudo systemctl enable docker && sudo systemctl start docker

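# The docker version should be 1.12 or greater (check with "docker version" after the script has run).

# Put SELinux into permissive mode for the current boot.

sudo setenforce 0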

# install kubeadm, kubectl, and kubelet.

sudo yum install -y kubelet kubeadm kubectl

sudo bash -c 'cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward=1

EOF'

sudo sysctl --system

sudo systemctl stop firewalld

sudo systemctl disable firewalld

sudo swapoff -a

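sudo systemctl enable kubelet && sudo systemctl start kubelet

2. Copy setup.sh to the other two servers:

scp setup.sh root@192.168.222.139:/root/

scp setup.sh root@192.168.222.140:/root/

3. Run the script on each of the three servers. It only installs the required components, so it should be easy to follow.

sh setup.sh

Run the following commands on each of the three machines to confirm that the required components are installed: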

[root@node2 ~]# which kubeadm

/usr/bin/kubeadm

[root@node2 ~]# which kubelet

/usr/bin/kubelet

[root@node2 ~]# which kubectl

/usr/local/bin/kubectl

[root@node2 ~]# docker version

Client:

Version: 1.13.1

API version: 1.26

Package version: docker-1.13.1-94.gitb2f74b2.el7.centos.x86_64

Go version: go1.10.3

Git commit: b2f74b2/1.13.1

Built: Tue Mar 12 10:27:24 2019

OS/Arch: linux/amd64

Server:

Version: 1.13.1

API version: 1.26 (minimum version 1.12)

Package version: docker-1.13.1-94.gitb2f74b2.el7.centos.x86_64

Go version: go1.10.3

Git commit: b2f74b2/1.13.1

Built: Tue Mar 12 10:27:24 2019

OS/Arch: linux/amd64

Experimental: false
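The per-tool checks above could be wrapped in one helper that reports everything missing in a single pass. This is a minimal sketch; `check_tools` is a name invented for this example, not something shipped with kubeadm or docker.

```shell
#!/bin/sh
# Report, for every command name given, whether it is on PATH.
# Returns 0 only when all of them are found.
check_tools() {
    missing=0
    for tool in "$@"; do
        if command -v "$tool" >/dev/null 2>&1; then
            echo "ok:      $tool"
        else
            echo "missing: $tool"
            missing=1
        fi
    done
    return "$missing"
}

# Usage on each server:
#   check_tools docker kubeadm kubelet kubectl
```

It only tests that the binaries exist; the docker version still has to be read from `docker version` as shown above.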

Once everything checks out, move on to the next step.

1. Run kubeadm init on the master node:

sudo kubeadm init --pod-network-cidr 172.100.0.0/16 --apiserver-advertise-address 192.168.222.135

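# --pod-network-cidr: the address range used for pod IPs

# --apiserver-advertise-address: the IP address of the API server; the master node's IP is fine here

# --apiserver-cert-extra-sans: add this flag, followed by your public IP address, if the cluster must be reachable through a public IP

2. If pulling the images fails (it did for me), they can be fetched through docker.io/mirrorgooglecontainers instead, a workaround I found by searching online. Run the following commands; they can be copied as-is.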

kubeadm config images list |sed -e 's/^/docker pull /g' -e 's#k8s.gcr.io#docker.io/mirrorgooglecontainers#g' |sh -x

docker images |grep mirrorgooglecontainers |awk '{print "docker tag ",$1":"$2,$1":"$2}' |sed -e 's#docker.io/mirrorgooglecontainers#k8s.gcr.io#2' |sh -x

docker images |grep mirrorgooglecontainers |awk '{print "docker rmi ", $1":"$2}' |sh -x

docker pull coredns/coredns:1.2.2

docker tag coredns/coredns:1.2.2 k8s.gcr.io/coredns:1.3.1

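docker rmi coredns/coredns:1.2.2

Check the image list; it should look like the one below. Note that the commands above retag coredns 1.2.2 as 1.3.1; pulling coredns/coredns:1.3.1 instead keeps the tag and the image contents in agreement. If init still reports errors, retag the docker images as kubeadm's error messages indicate (search online for the specifics).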

[root@master ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

k8s.gcr.io/kube-proxy v1.14.1 20a2d7035165 2 days ago 82.1 MB

k8s.gcr.io/kube-apiserver v1.14.1 cfaa4ad74c37 2 days ago 210 MB

k8s.gcr.io/kube-controller-manager v1.14.1 efb3887b411d 2 days ago 158 MB

k8s.gcr.io/kube-scheduler v1.14.1 8931473d5bdb 2 days ago 81.6 MB

k8s.gcr.io/etcd 3.3.10 2c4adeb21b4f 4 months ago 258 MB

k8s.gcr.io/coredns 1.3.1 367cdc8433a4 7 months ago 39.2 MB

k8s.gcr.io/pause 3.1 da86e6ba6ca1 15 months ago 742 kB
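The pull, tag, and remove pipeline used above is dense, so here is the same idea spelled out one image at a time. It is a sketch only: `mirror_name` and `retag_commands` are hypothetical helper names, and the functions print docker commands rather than running them. coredns is not covered, because the article pulls it from coredns/coredns separately.

```shell
#!/bin/sh
MIRROR="docker.io/mirrorgooglecontainers"   # mirror used in the article

# Rewrite a k8s.gcr.io image reference so that it points at the mirror.
mirror_name() {
    echo "$1" | sed "s#^k8s\.gcr\.io#$MIRROR#"
}

# Emit the pull / tag / rmi commands for one image without running them.
retag_commands() {
    src=$(mirror_name "$1")
    echo "docker pull $src"
    echo "docker tag $src $1"
    echo "docker rmi $src"
}

# Usage (review the output, then pipe it to sh):
#   kubeadm config images list | while read -r image; do retag_commands "$image"; done
```

Generating the commands as text first makes it easy to see exactly which tags will be created before anything touches the local image store.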

3. The key output from kubeadm init is shown below:

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.222.135:6443 --token srbxk3.x6xo6nhv3a4ng08m \
--discovery-token-ca-cert-hash sha256:b302232cbf1c5f3d418cea5553b641181d4bb384aadbce9b1d97b37cde477773

4. Run the configuration commands that kubeadm printed:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

5. Install the network plugin:

kubectl apply -f "https://cloud.weave.works/k8s/net?k8s-version=$(kubectl version | base64 | tr -d '\n')"

6. Confirm the installation by checking that a weave-net pod appears in the pod list:

[root@master ~]# kubectl get pod --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-fb8b8dccf-glszh 1/1 Running 0 23m

kube-system coredns-fb8b8dccf-xcmtx 1/1 Running 0 23m

kube-system etcd-master 1/1 Running 0 22m

kube-system kube-apiserver-master 1/1 Running 0 22m

kube-system kube-controller-manager-master 1/1 Running 0 22m

kube-system kube-proxy-z84wh 1/1 Running 0 23m

kube-system kube-scheduler-master 1/1 Running 0 22m

kube-system weave-net-phwjz 2/2 Running 0 95s

1. On each of the two nodes, run the join command from the kubeadm output. Do not copy the one below; use the command printed by your own kubeadm init:

kubeadm join 192.168.222.135:6443 --token srbxk3.x6xo6nhv3a4ng08m \
--discovery-token-ca-cert-hash sha256:b302232cbf1c5f3d418cea5553b641181d4bb384aadbce9b1d97b37cde477773

2. After joining, the nodes stayed in the NotReady state, which was frustrating:

[root@master bin]# kubectl get node

NAME STATUS ROLES AGE VERSION

master Ready master 167m v1.14.1

node1 NotReady <none> 142m v1.14.1

node2 NotReady <none> 142m v1.14.1

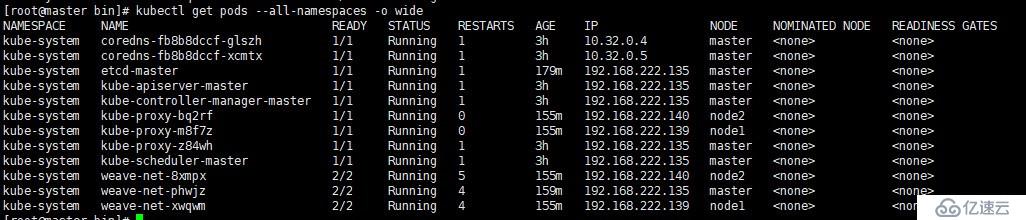

3. Check the pods: weave-net has not started on the new nodes.

[root@master bin]# kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-fb8b8dccf-glszh 1/1 Running 1 168m

kube-system coredns-fb8b8dccf-xcmtx 1/1 Running 1 168m

kube-system etcd-master 1/1 Running 1 167m

kube-system kube-apiserver-master 1/1 Running 1 167m

kube-system kube-controller-manager-master 1/1 Running 1 167m

kube-system kube-proxy-bq2rf 0/1 ContainerCreating 0 143m

kube-system kube-proxy-m8f7z 0/1 ContainerCreating 0 143m

kube-system kube-proxy-z84wh 1/1 Running 1 168m

kube-system kube-scheduler-master 1/1 Running 1 167m

kube-system weave-net-8xmpx 0/2 ContainerCreating 0 143m

kube-system weave-net-phwjz 2/2 Running 4 147m

kube-system weave-net-xwqwm 0/2 ContainerCreating 0 143m
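With more pods than this, scanning the STATUS column by eye gets tedious. A small filter along the following lines could list only the pods that are not running. It is a sketch that assumes the column layout shown in the listing above (NAME in column 2, STATUS in column 4); `pods_not_running` is a name chosen for this example.

```shell
#!/bin/sh
# Read "kubectl get pods --all-namespaces" output on stdin and print
# "<name> <status>" for every pod whose STATUS column is not "Running".
# The header row (line 1) is skipped.
pods_not_running() {
    awk 'NR > 1 && $4 != "Running" { print $2, $4 }'
}

# Usage:
#   kubectl get pods --all-namespaces | pods_not_running
```

Applied to the listing above, it would report the two kube-proxy pods and the two weave-net pods stuck in ContainerCreating.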

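4. kubectl describe pods shows that pulling the image failed, even though k8s.gcr.io/pause:3.1 already exists on the master. After some digging, the cause turned out to be simple: the worker nodes need that image as well.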

kubectl describe pods --namespace=kube-system weave-net-8xmpx

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedCreatePodSandBox 77m (x8 over 120m) kubelet, node2 Failed create pod sandbox: rpc error: code = Unknown desc = failed pulling image "k8s.gcr.io/pause:3.1": Get https://k8s.gcr.io/v1/_ping: dial tcp 64.233.189.82:443: connect: connection refused

Warning FailedCreatePodSandBox 32m (x9 over 91m) kubelet, node2 Failed create pod sandbox: rpc error: code = Unknown desc = failed pulling image "k8s.gcr.io/pause:3.1": Get https://k8s.gcr.io/v1/_ping: dial tcp 64.233.188.82:443: connect: connection refused

Warning FailedCreatePodSandBox 22m (x23 over 133m) kubelet, node2 Failed create pod sandbox: rpc error: code = Unknown desc = failed pulling image "k8s.gcr.io/pause:3.1": Get https://k8s.gcr.io/v1/_ping: dial tcp 108.177.97.82:443: connect: connection refused

Warning FailedCreatePodSandBox 17m (x56 over 125m) kubelet, node2 Failed create pod sandbox: rpc error: code = Unknown desc = failed pulling image "k8s.gcr.io/pause:3.1": Get https://k8s.gcr.io/v1/_ping: dial tcp 74.125.203.82:443: connect: connection refused

Warning FailedCreatePodSandBox 12m (x24 over 110m) kubelet, node2 Failed create pod sandbox: rpc error: code = Unknown desc = failed pulling image "k8s.gcr.io/pause:3.1": Get https://k8s.gcr.io/v1/_ping: dial tcp 74.125.204.82:443: connect: connection refused

Warning FailedCreatePodSandBox 2m31s (x20 over 96m) kubelet, node2 Failed create pod sandbox: rpc error: code = Unknown desc = failed pulling image "k8s.gcr.io/pause:3.1": Get https://k8s.gcr.io/v1/_ping: dial tcp 108.177.125.82:443: connect: connection refused
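The events above repeat the same failure many times. To see at a glance which images a node could not pull, the messages can be reduced to a list of distinct image names. This is a rough sketch that assumes the `failed pulling image "..."` wording shown in the events above; `failed_images` is a hypothetical helper name.

```shell
#!/bin/sh
# Read "kubectl describe pods" output on stdin and print each distinct image
# named in a 'failed pulling image "..."' message, one per line.
failed_images() {
    sed -n 's/.*failed pulling image "\([^"]*\)".*/\1/p' | sort -u
}

# Usage:
#   kubectl describe pods --namespace=kube-system weave-net-8xmpx | failed_images
```

For the events shown here it would print a single line, k8s.gcr.io/pause:3.1, which is the image that has to be made available on the node.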

5. Download the image on each node with the commands below. Once that was done, it turned out that k8s.gcr.io/kube-proxy:v1.14.1 was needed as well, so both are handled together (change the versions if your cluster uses different ones).

docker pull mirrorgooglecontainers/pause:3.1

docker tag docker.io/mirrorgooglecontainers/pause:3.1 k8s.gcr.io/pause:3.1

docker pull mirrorgooglecontainers/kube-proxy:v1.14.1

docker tag docker.io/mirrorgooglecontainers/kube-proxy:v1.14.1 k8s.gcr.io/kube-proxy:v1.14.1

6. All done.

1. Write a yml file:

vim nginx.yml

apiVersion: v1
kind: Pod
metadata:
  name: nginx
  labels:
    app: web
spec:
  containers:
    - name: nginx
      image: nginx
      ports:
        - containerPort: 80

2. Create the pod:

kubectl create -f nginx.yml

3. Look up the pod's IP and access it:

[root@master ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx 1/1 Running 0 4m11s 10.44.0.1 node1 <none> <none>

[root@master ~]# curl 10.44.0.1

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h2>Welcome to nginx!</h2>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a >nginx.org</a>.<br/>

Commercial support is available at

<a >nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>
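The look-up-then-curl step above could be scripted so that the IP does not have to be copied by hand. The sketch below parses the `-o wide` listing and finds the IP column from the header row; `pod_ip` is a name invented for this example.

```shell
#!/bin/sh
# Read "kubectl get pod -o wide" output on stdin and print the IP of the
# named pod. The IP column is located from the header row, so the function
# does not rely on a fixed column number.
pod_ip() {
    awk -v pod="$1" '
        NR == 1 { for (i = 1; i <= NF; i++) if ($i == "IP") col = i; next }
        $1 == pod && col { print $col }
    '
}

# Usage:
#   curl "$(kubectl get pod -o wide | pod_ip nginx)"
```

kubectl can also return the address directly with `kubectl get pod nginx -o jsonpath='{.status.podIP}'`, which avoids parsing the table at all.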

Thanks for reading.
