
| Hostname | CentOS version | IP | Docker version | flannel version | Keepalived version | Spec (CPU/RAM) | Notes |
|---|---|---|---|---|---|---|---|
| lvs-keepalived01 | 7.6.1810 | 172.27.34.28 | / | / | v1.3.5 | 4C4G | lvs-keepalived |
| lvs-keepalived02 | 7.6.1810 | 172.27.34.29 | / | / | v1.3.5 | 4C4G | lvs-keepalived |
| master01 | 7.6.1810 | 172.27.34.35 | 18.09.9 | v0.11.0 | / | 4C4G | control plane |
| master02 | 7.6.1810 | 172.27.34.36 | 18.09.9 | v0.11.0 | / | 4C4G | control plane |
| master03 | 7.6.1810 | 172.27.34.37 | 18.09.9 | v0.11.0 | / | 4C4G | control plane |
| work01 | 7.6.1810 | 172.27.34.161 | 18.09.9 | / | / | 4C4G | worker node |
| work02 | 7.6.1810 | 172.27.34.162 | 18.09.9 | / | / | 4C4G | worker node |
| work03 | 7.6.1810 | 172.27.34.163 | 18.09.9 | / | / | 4C4G | worker node |
| VIP | 7.6.1810 | 172.27.34.222 | / | / | v1.3.5 | 4C4G | floats between the two lvs-keepalived hosts |
| client | 7.6.1810 | 172.27.34.85 | / | / | / | 4C4G | client |

There are 9 servers in total: 2 form the lvs-keepalived cluster, 3 form the control plane, 3 are worker nodes and 1 is a client.

| Hostname | kubelet version | kubeadm version | kubectl version | Notes |
|---|---|---|---|---|
| master01 | v1.16.4 | v1.16.4 | v1.16.4 | kubectl optional |
| master02 | v1.16.4 | v1.16.4 | v1.16.4 | kubectl optional |
| master03 | v1.16.4 | v1.16.4 | v1.16.4 | kubectl optional |
| work01 | v1.16.4 | v1.16.4 | v1.16.4 | kubectl optional |
| work02 | v1.16.4 | v1.16.4 | v1.16.4 | kubectl optional |
| work03 | v1.16.4 | v1.16.4 | v1.16.4 | kubectl optional |
| client | / | / | v1.16.4 | client |

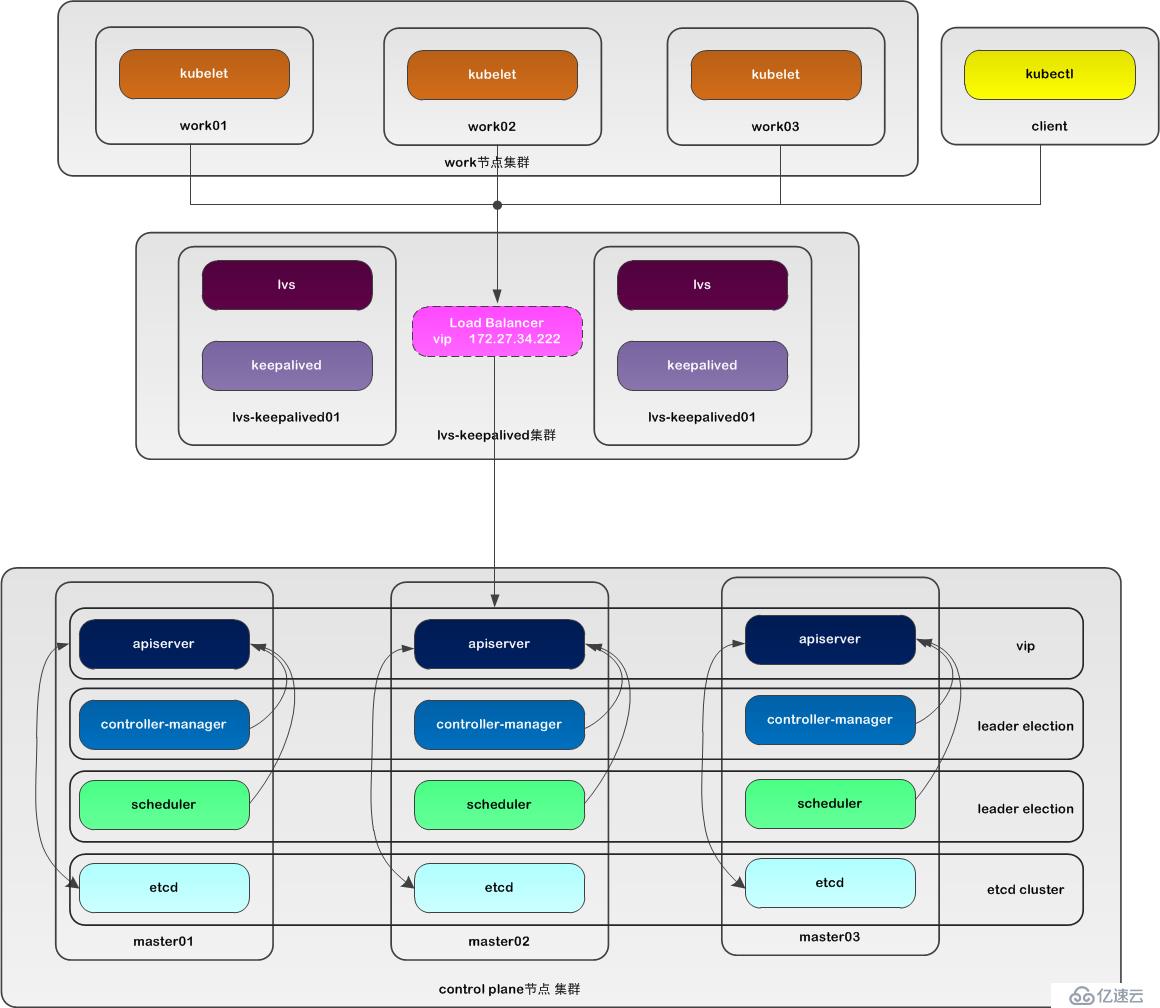

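This article builds a highly available k8s cluster with kubeadm. High availability for a k8s cluster really means high availability for each of its core components. Here the apiserver runs in cluster mode. The architecture is as follows: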

| Core component | HA mode | HA implementation |
|---|---|---|
| apiserver | cluster | lvs+keepalived |
| controller-manager | active/standby | leader election |
| scheduler | active/standby | leader election |
| etcd | cluster | kubeadm |

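- apiserver: made highly available by lvs-keepalived. The VIP distributes requests to the apiserver on every control plane node;

- controller-manager: k8s elects a leader internally (controlled by the --leader-elect option, default true), so at any moment only one controller-manager in the cluster is active;

- scheduler: k8s elects a leader internally (controlled by the --leader-elect option, default true), so at any moment only one scheduler in the cluster is active;

- etcd: made highly available by the cluster that kubeadm creates automatically. The number of members should be odd, and a 3-node cluster tolerates the loss of at most one machine.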
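The odd-number rule follows from etcd's majority quorum: a cluster of n members needs floor(n/2)+1 of them to agree, so it survives the loss of n minus that many members. A quick shell-arithmetic check (etcd_fault_tolerance is only an illustrative function, not part of any tool):

```shell
#!/usr/bin/env bash
# Quorum arithmetic for a Raft-based store such as etcd:
# a write needs a majority (quorum) of members, so the cluster
# survives the loss of at most (members - quorum) of them.
etcd_fault_tolerance() {
  local members="$1"
  local quorum=$(( members / 2 + 1 ))   # integer division = floor(n/2)
  echo "members=$members quorum=$quorum tolerates=$(( members - quorum ))"
}

for n in 1 2 3 4 5; do
  etcd_fault_tolerance "$n"
done
```

A 4-member cluster tolerates no more failures than a 3-member one, which is why odd sizes are used. The same arithmetic explains the later test in which stopping two of the three control plane nodes took the cluster down.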

All servers in this article run CentOS 7.6. For the installation, see: "A complete record of installing and optimizing CentOS 7.6"

The firewall and SELinux were disabled and the Aliyun mirror was configured when CentOS was installed.

Perform this part on all control plane and work nodes. The steps are recorded using master01 as the example.

[root@centos7 ~]# hostnamectl set-hostname master01

[root@centos7 ~]# more /etc/hostname

master01

Log out and back in to see the new hostname master01. Set each server's hostname to its own name.

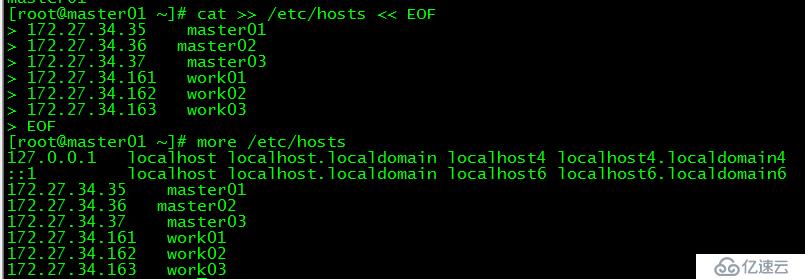

[root@master01 ~]# cat >> /etc/hosts << EOF

172.27.34.35 master01

172.27.34.36 master02

172.27.34.37 master03

172.27.34.161 work01

172.27.34.162 work02

172.27.34.163 work03

EOF

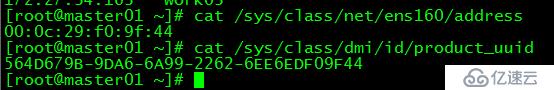

[root@master01 ~]# cat /sys/class/net/ens160/address

[root@master01 ~]# cat /sys/class/dmi/id/product_uuid

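Make sure the MAC address and product_uuid are unique on every node. Kubernetes uses these values to tell nodes apart.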

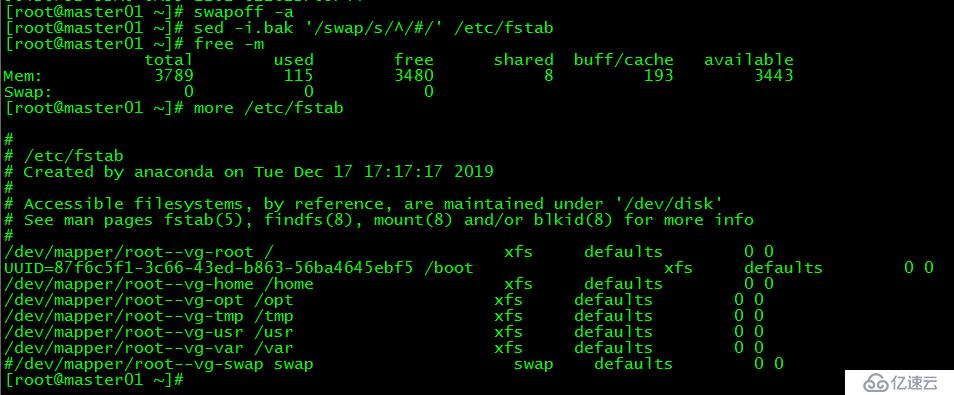
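Nodes cloned from the same VM template often share these values. To compare them across nodes, collect one value per node and look for repeats. The sketch below does this. find_duplicates is a hypothetical helper, and the MAC addresses are made-up samples.

```shell
#!/usr/bin/env bash
# Print every value that appears more than once on stdin (one value per line).
# Case is normalised first, so 00:50:56:AA:.. and 00:50:56:aa:.. count as equal.
find_duplicates() {
  tr '[:upper:]' '[:lower:]' | sort | uniq -d
}

# Hypothetical sample: MACs gathered from three nodes, two cloned from one template.
printf '%s\n' 00:50:56:aa:bb:01 00:50:56:aa:bb:02 00:50:56:AA:BB:01 | find_duplicates
```

For example, from master01 you could run `for h in master01 master02 master03; do ssh $h cat /sys/class/dmi/id/product_uuid; done | find_duplicates`. Empty output means every value is unique.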

[root@master01 ~]# swapoff -a

By default, kubelet refuses to run while swap is enabled. To keep swap off after a reboot, also comment out the swap entry in /etc/fstab:

[root@master01 ~]# sed -i.bak '/swap/s/^/#/' /etc/fstab

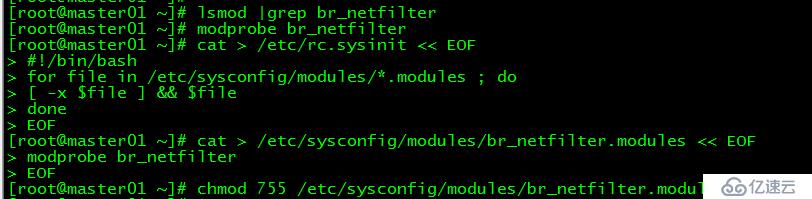
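The sed command above prefixes every line that merely contains the word "swap", including lines that are already commented out. The sketch below is slightly stricter: it comments out only active entries whose filesystem-type field is swap. comment_swap_entries is a hypothetical helper, and the demo works on a scratch file rather than the real /etc/fstab.

```shell
#!/usr/bin/env bash
# Comment out active swap entries in an fstab-format file.
# Only uncommented lines whose third field (filesystem type) is "swap"
# are changed; every other line is copied through unchanged.
comment_swap_entries() {
  local fstab="${1:-/etc/fstab}"
  cp -p "$fstab" "$fstab.bak"   # keep a backup, like sed -i.bak
  awk '!/^[[:space:]]*#/ && $3 == "swap" { print "#" $0; next } { print }' \
    "$fstab.bak" > "$fstab"
}

# Demo on a scratch file instead of /etc/fstab
demo=$(mktemp)
cat > "$demo" <<'EOF'
/dev/mapper/centos-root /    xfs  defaults 0 0
/dev/mapper/centos-swap swap swap defaults 0 0
#/dev/sdb2              swap swap defaults 0 0
EOF
comment_swap_entries "$demo"
cat "$demo"
```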

The k8s network in this article uses flannel, which requires the kernel parameter bridge-nf-call-iptables=1. Setting this parameter requires the br_netfilter module.

Check for the br_netfilter module:

[root@master01 ~]# lsmod |grep br_netfilter

If the module is missing, run the commands below to add it. Otherwise, skip them.

Load br_netfilter temporarily:

[root@master01 ~]# modprobe br_netfilter

This does not survive a reboot.

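Load br_netfilter permanently. Quote the heredoc delimiter so that `$file` is written to the file literally instead of being expanded, to an empty string, by the current shell:

[root@master01 ~]# cat > /etc/rc.sysinit << 'EOF'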

#!/bin/bash

for file in /etc/sysconfig/modules/*.modules ; do

[ -x $file ] && $file

done

EOF

[root@master01 ~]# cat > /etc/sysconfig/modules/br_netfilter.modules << EOF

modprobe br_netfilter

EOF

[root@master01 ~]# chmod 755 /etc/sysconfig/modules/br_netfilter.modules

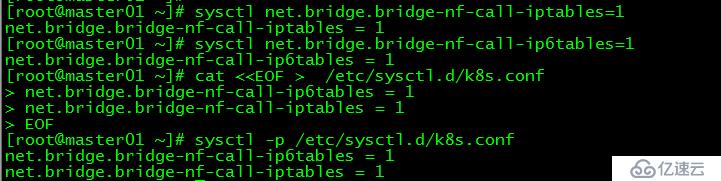
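CentOS 7 boots with systemd, and its systemd-modules-load.service loads the modules listed in /etc/modules-load.d/*.conf at every boot. This offers an alternative to the rc.sysinit approach above. The sketch below uses a hypothetical helper, persist_module, and a scratch directory stands in for /etc/modules-load.d in the demo.

```shell
#!/usr/bin/env bash
# Persist a kernel module the systemd way: systemd-modules-load.service reads
# /etc/modules-load.d/*.conf (one module name per line) at every boot.
persist_module() {
  local module="$1" dir="${2:-/etc/modules-load.d}"
  mkdir -p "$dir"
  printf '%s\n' "$module" > "$dir/$module.conf"
}

# Demo against a scratch directory; on a real node call: persist_module br_netfilter
scratch=$(mktemp -d)
persist_module br_netfilter "$scratch"
cat "$scratch/br_netfilter.conf"
```

On a real node, run `persist_module br_netfilter` followed by `modprobe br_netfilter`. The first covers future boots and the second the current one.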

[root@master01 ~]# sysctl net.bridge.bridge-nf-call-iptables=1

net.bridge.bridge-nf-call-iptables = 1

[root@master01 ~]# sysctl net.bridge.bridge-nf-call-ip6tables=1

net.bridge.bridge-nf-call-ip6tables = 1

[root@master01 ~]# cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

[root@master01 ~]# sysctl -p /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

[root@master01 ~]# cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

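- [] the repository id in square brackets; it must be unique and identifies the repository

- name the repository name, free-form

- baseurl the repository URL

- enabled whether the repository is enabled; the default, 1, means enabled

- gpgcheck whether to verify the signatures of packages from this repository; 1 means verify

- repo_gpgcheck whether to verify the repository metadata (the package list); 1 means verify

- gpgkey=URL location of the public key used to verify signatures; required when gpgcheck is 1 and unnecessary when gpgcheck is 0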

[root@master01 ~]# yum clean all

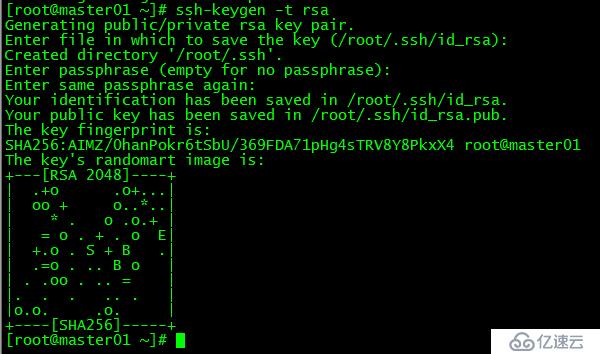

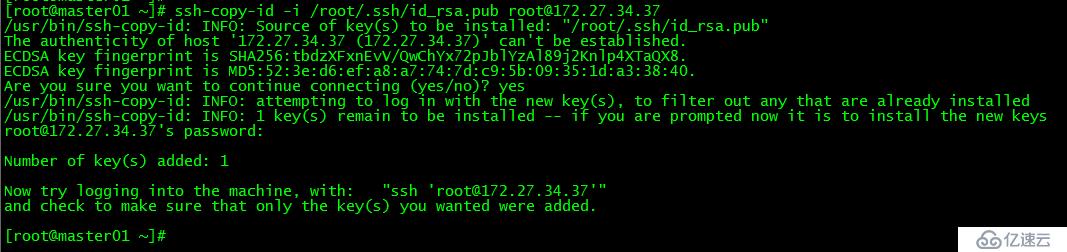

[root@master01 ~]# yum -y makecache

Configure passwordless SSH login from master01 to master02 and master03. Perform this step on master01 only.

[root@master01 ~]# ssh-keygen -t rsa

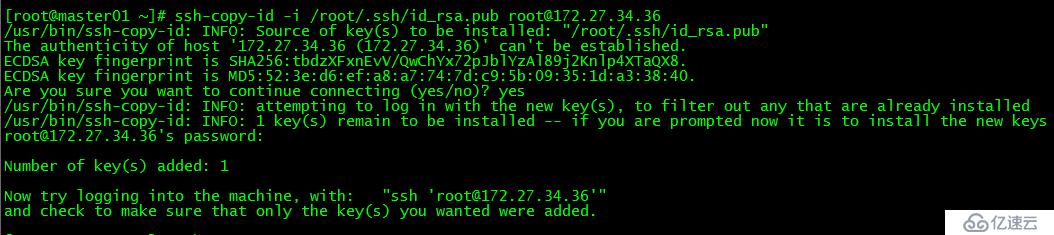

[root@master01 ~]# ssh-copy-id -i /root/.ssh/id_rsa.pub root@172.27.34.36

[root@master01 ~]# ssh-copy-id -i /root/.ssh/id_rsa.pub root@172.27.34.37

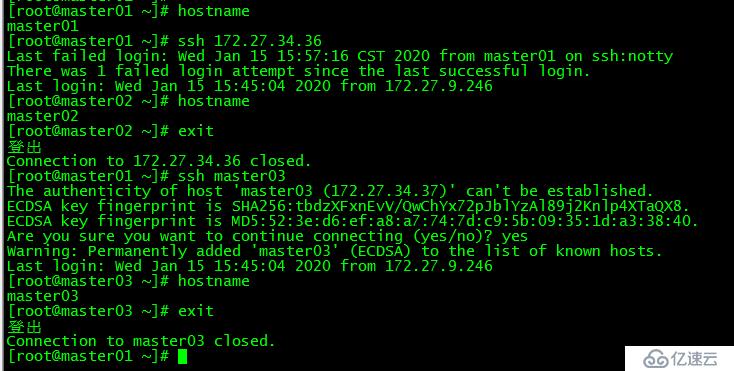

[root@master01 ~]# ssh 172.27.34.36

[root@master01 ~]# ssh master03

master01 can now log in to master02 and master03 directly, without a password.

Reboot all control plane and work nodes.

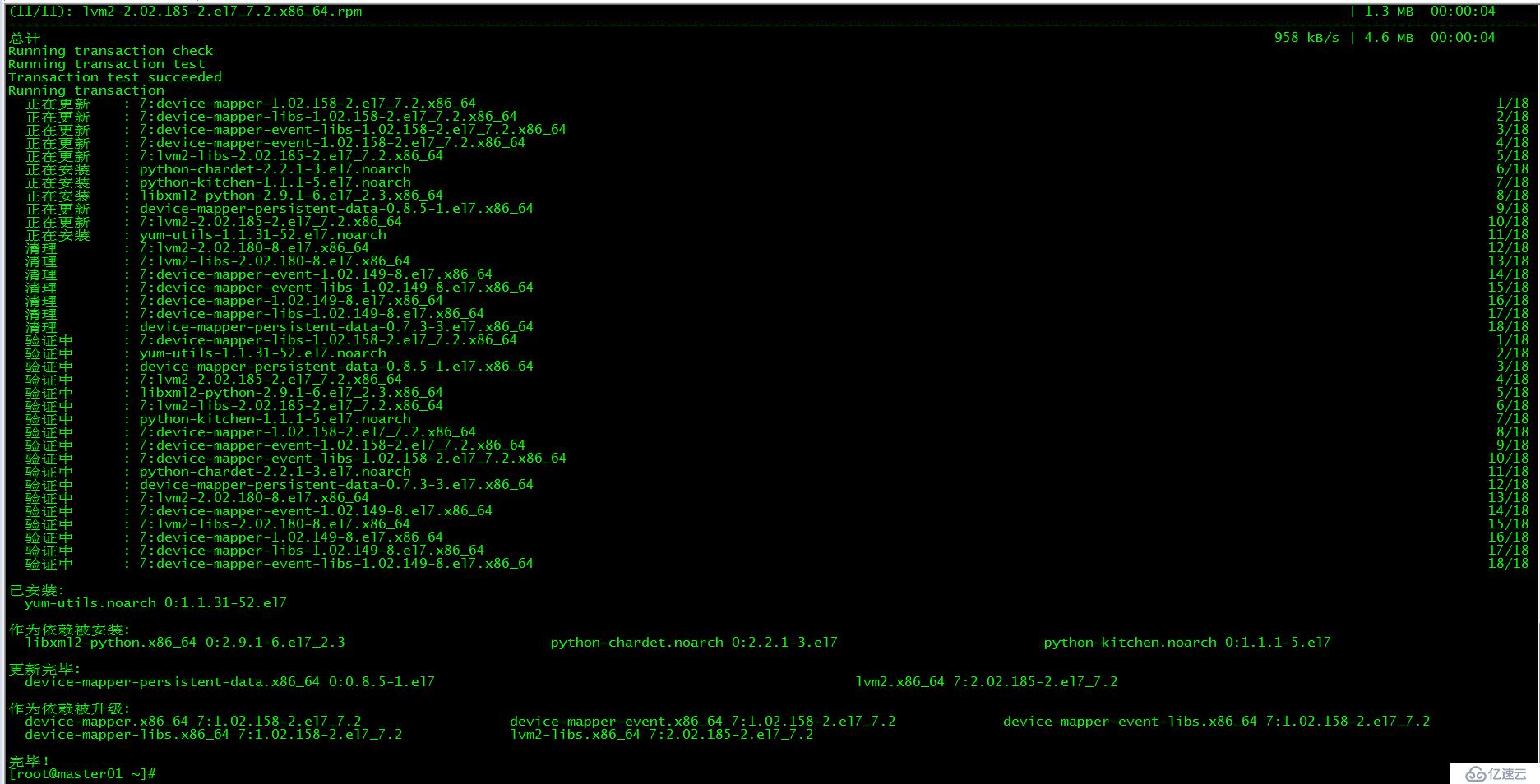

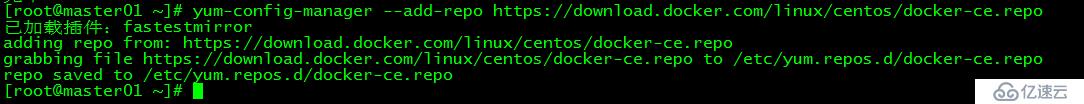

Perform this part on all control plane and work nodes.

[root@master01 ~]# yum install -y yum-utils device-mapper-persistent-data lvm2

[root@master01 ~]# yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

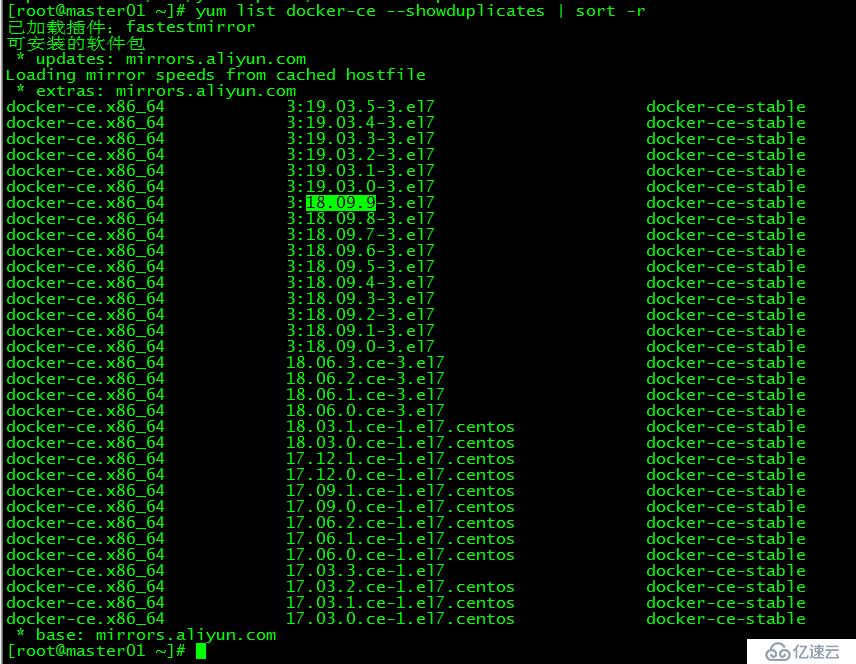

[root@master01 ~]# yum list docker-ce --showduplicates | sort -r

[root@master01 ~]# yum install docker-ce-18.09.9 docker-ce-cli-18.09.9 containerd.io -y

The installed Docker version is pinned to 18.09.9.

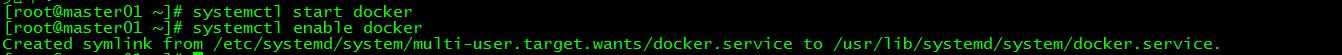

[root@master01 ~]# systemctl start docker

[root@master01 ~]# systemctl enable docker

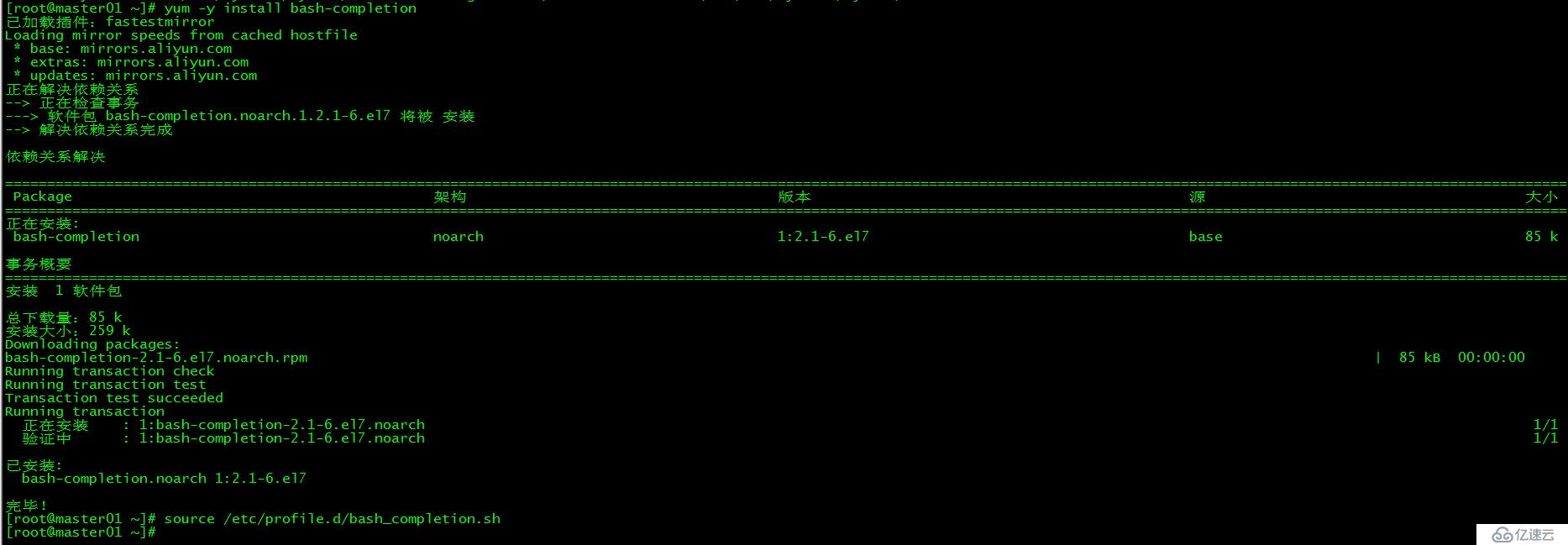

[root@master01 ~]# yum -y install bash-completion

[root@master01 ~]# source /etc/profile.d/bash_completion.sh

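Because the Docker Hub servers are outside China, pulling images can be slow, so configure a registry mirror (accelerator). The main options are Docker's official China registry mirror, the Aliyun accelerator and the DaoCloud accelerator. This article uses the Aliyun accelerator.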

Log in at https://cr.console.aliyun.com. If you don't have an Aliyun account, register one first.

Configure the daemon.json file

[root@master01 ~]# mkdir -p /etc/docker

[root@master01 ~]# tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://v16stybc.mirror.aliyuncs.com"]

}

EOF

Restart the service

[root@master01 ~]# systemctl daemon-reload

[root@master01 ~]# systemctl restart docker

The accelerator is now configured.

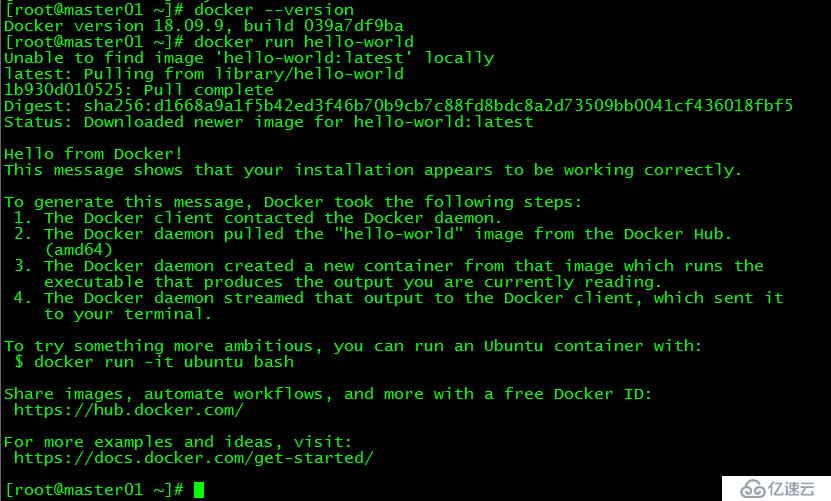

[root@master01 ~]# docker --version

[root@master01 ~]# docker run hello-world

Check the Docker version and run the hello-world container to verify that Docker is installed correctly.

Edit daemon.json and add "exec-opts": ["native.cgroupdriver=systemd"]

[root@master01 ~]# more /etc/docker/daemon.json

{

"registry-mirrors": ["https://v16stybc.mirror.aliyuncs.com"],

"exec-opts": ["native.cgroupdriver=systemd"]

}

[root@master01 ~]# systemctl daemon-reload

[root@master01 ~]# systemctl restart docker

The cgroup driver is changed to get rid of this warning:

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/
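The two daemon.json edits above (first the registry mirror, then the systemd cgroup driver) can be combined into a single step. Below is a minimal sketch, assuming bash on the node. write_daemon_json is a hypothetical helper, and the mirror URL is the author's personal Aliyun accelerator address, so substitute your own. After Docker restarts, `docker info --format '{{.CgroupDriver}}'` should report systemd.

```shell
#!/usr/bin/env bash
# Write daemon.json with both settings used in this article:
# the registry mirror and the systemd cgroup driver.
write_daemon_json() {
  local mirror="$1" target="${2:-/etc/docker/daemon.json}"
  mkdir -p "$(dirname "$target")"
  cat > "$target" <<EOF
{
  "registry-mirrors": ["$mirror"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
}

# Demo into a scratch file; on a node, follow with:
#   systemctl daemon-reload && systemctl restart docker
out=$(mktemp -d)/daemon.json
write_daemon_json "https://v16stybc.mirror.aliyuncs.com" "$out"
cat "$out"
```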

Perform this part on all control plane and work nodes.

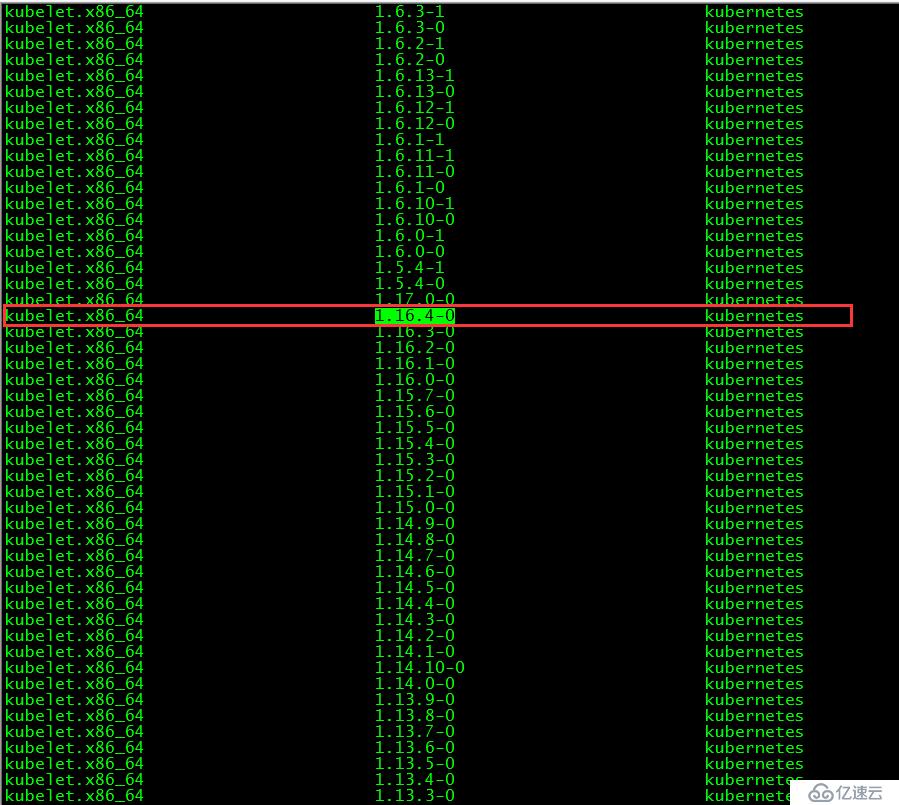

[root@master01 ~]# yum list kubelet --showduplicates | sort -r

This article installs kubelet 1.16.4. That release is validated with Docker 1.13.1, 17.03, 17.06, 17.09, 18.06 and 18.09.

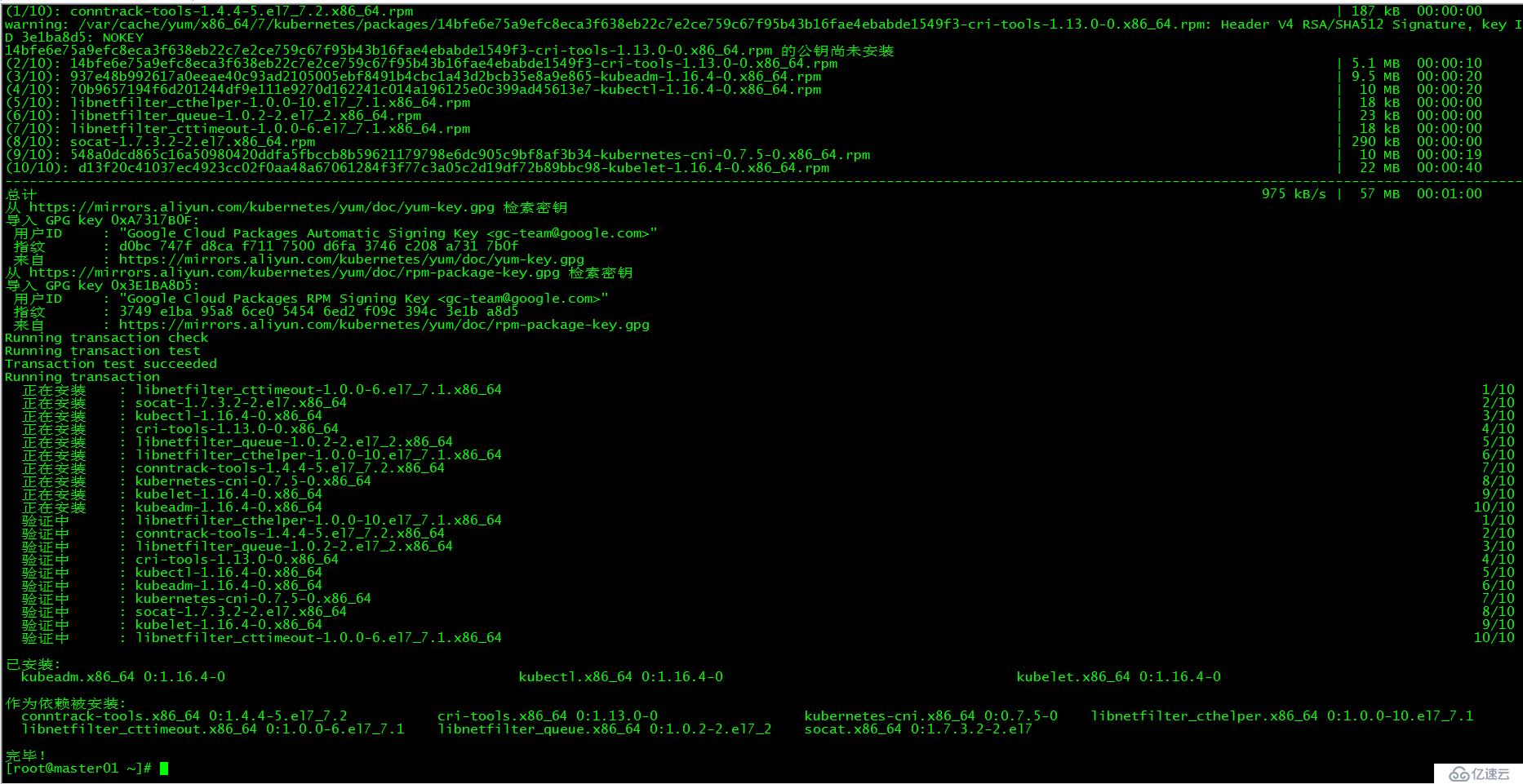

[root@master01 ~]# yum install -y kubelet-1.16.4 kubeadm-1.16.4 kubectl-1.16.4

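- kubelet runs on every node in the cluster and starts Pods, containers and other objects

- kubeadm the command-line tool that initializes and bootstraps the cluster

- kubectl the command line for talking to the cluster: use it to deploy and manage applications, inspect resources, and create, delete and update components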

Start kubelet and enable it at boot

[root@master01 ~]# systemctl enable kubelet && systemctl start kubelet

Enable kubectl command completion:

[root@master01 ~]# echo "source <(kubectl completion bash)" >> ~/.bash_profile

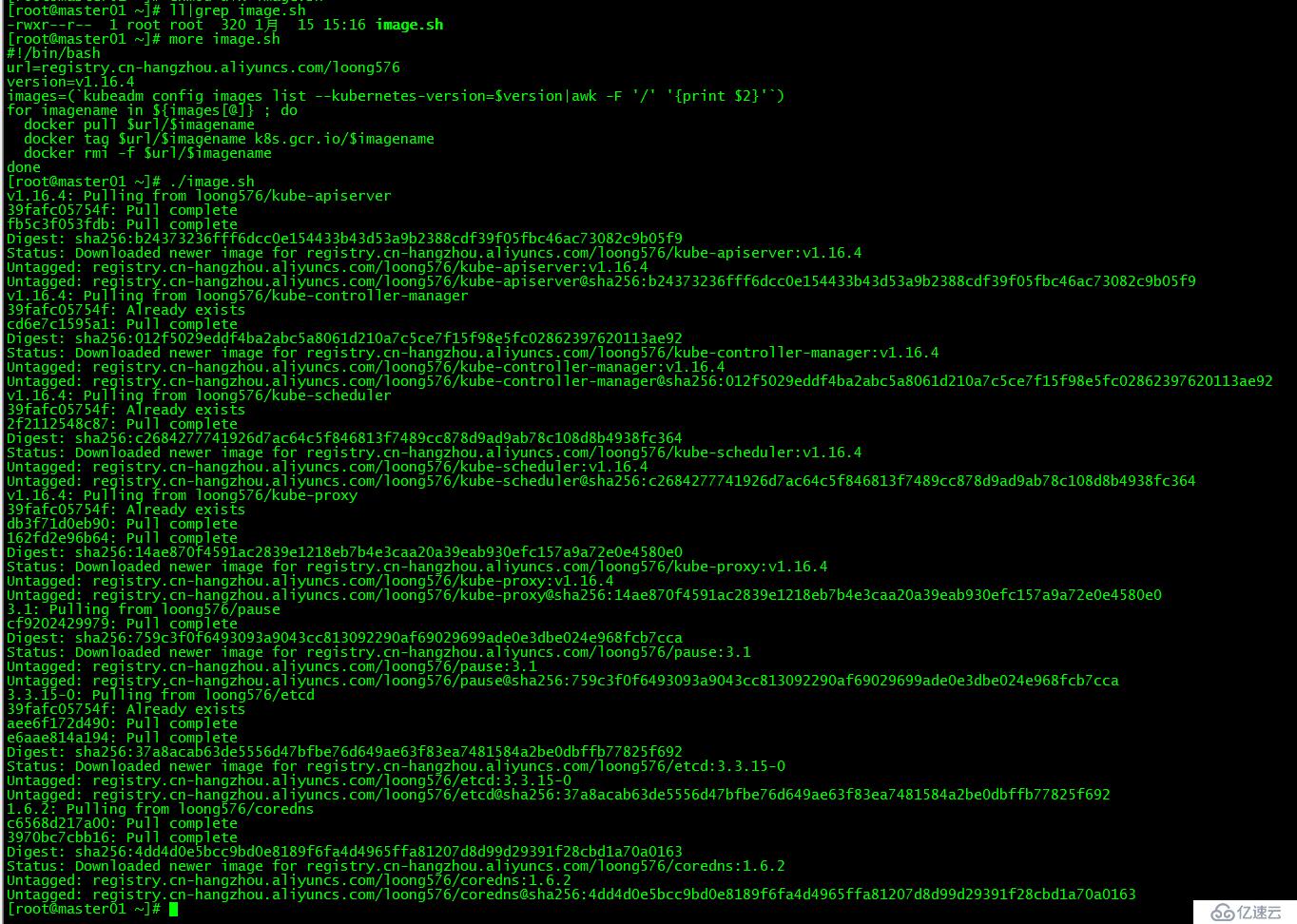

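[root@master01 ~]# source .bash_profile

Almost all Kubernetes components and Docker images are hosted on Google's own registry, which may not be reachable directly. The workaround here is to pull the images from an Aliyun registry and, once they are local, retag them with their default names. This article pulls the images by running the image.sh script.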

[root@master01 ~]# more image.sh

#!/bin/bash

url=registry.cn-hangzhou.aliyuncs.com/loong576

version=v1.16.4

images=(`kubeadm config images list --kubernetes-version=$version|awk -F '/' '{print $2}'`)

for imagename in ${images[@]} ; do

docker pull $url/$imagename

docker tag $url/$imagename k8s.gcr.io/$imagename

docker rmi -f $url/$imagename

done

url is the Aliyun registry address, and version is the Kubernetes version being installed.

Run image.sh to download the images for the specified version

[root@master01 ~]# ./image.sh

[root@master01 ~]# docker images
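image.sh pulls, retags and deletes each image in one pass. A dry-run variant that only prints the commands lets you review the name mapping before anything is downloaded. This is a sketch. mirror_image_cmds is a hypothetical helper, and `${image##*/}` plays the role of the script's `awk -F '/' '{print $2}'`.

```shell
#!/usr/bin/env bash
# Print (rather than run) the pull/tag/rmi commands that image.sh performs.
# Arguments: the mirror repository, then full image names as printed by
# `kubeadm config images list`.
mirror_image_cmds() {
  local url="$1"; shift
  local image name
  for image in "$@"; do
    name="${image##*/}"   # drop everything up to the last "/", e.g. "k8s.gcr.io/"
    echo "docker pull $url/$name"
    echo "docker tag $url/$name k8s.gcr.io/$name"
    echo "docker rmi -f $url/$name"
  done
}

mirror_image_cmds registry.cn-hangzhou.aliyuncs.com/loong576 \
  k8s.gcr.io/kube-apiserver:v1.16.4 k8s.gcr.io/pause:3.1
```

Once the output looks right, you can pipe it to bash on master01: `mirror_image_cmds registry.cn-hangzhou.aliyuncs.com/loong576 $(kubeadm config images list --kubernetes-version=v1.16.4) | bash`.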

Perform this part on master01 only.

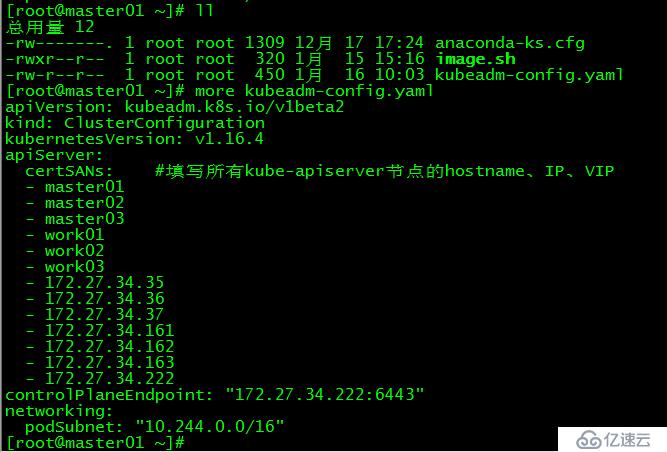

[root@master01 ~]# more kubeadm-config.yaml
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.16.4
apiServer:
  certSANs:    #list the hostname, IP and VIP of every kube-apiserver node
  - master01
  - master02
  - master03
  - work01
  - work02
  - work03
  - 172.27.34.35
  - 172.27.34.36
  - 172.27.34.37
  - 172.27.34.161
  - 172.27.34.162
  - 172.27.34.163
  - 172.27.34.222
controlPlaneEndpoint: "172.27.34.222:6443"
networking:
  podSubnet: "10.244.0.0/16"

kubeadm-config.yaml is the configuration file used for initialization.

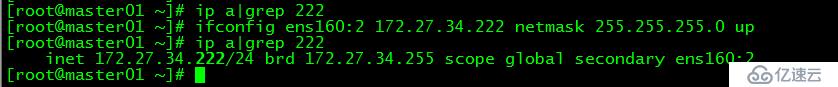
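The certSANs list is long and easy to get wrong by hand. The sketch below generates the same structure from a VIP plus a list of names and IPs. gen_kubeadm_config is a hypothetical helper, and the version and podSubnet are hard-coded to this article's values. 10.244.0.0/16 is also flannel's default pod network.

```shell
#!/usr/bin/env bash
# Generate a kubeadm ClusterConfiguration shaped like kubeadm-config.yaml.
# First argument: the VIP; remaining arguments: hostnames/IPs for certSANs.
# The VIP is appended to certSANs automatically and used as controlPlaneEndpoint.
gen_kubeadm_config() {
  local vip="$1"; shift
  cat <<EOF
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.16.4
apiServer:
  certSANs:
EOF
  local san
  for san in "$@" "$vip"; do
    echo "  - $san"
  done
  cat <<EOF
controlPlaneEndpoint: "$vip:6443"
networking:
  podSubnet: "10.244.0.0/16"
EOF
}

gen_kubeadm_config 172.27.34.222 master01 master02 master03 \
  172.27.34.35 172.27.34.36 172.27.34.37
```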

Bring up the virtual IP 172.27.34.222 on master01

[root@master01 ~]# ifconfig ens160:2 172.27.34.222 netmask 255.255.255.0 up

The VIP is brought up only so that master01 can be initialized. Remove it once initialization is complete.

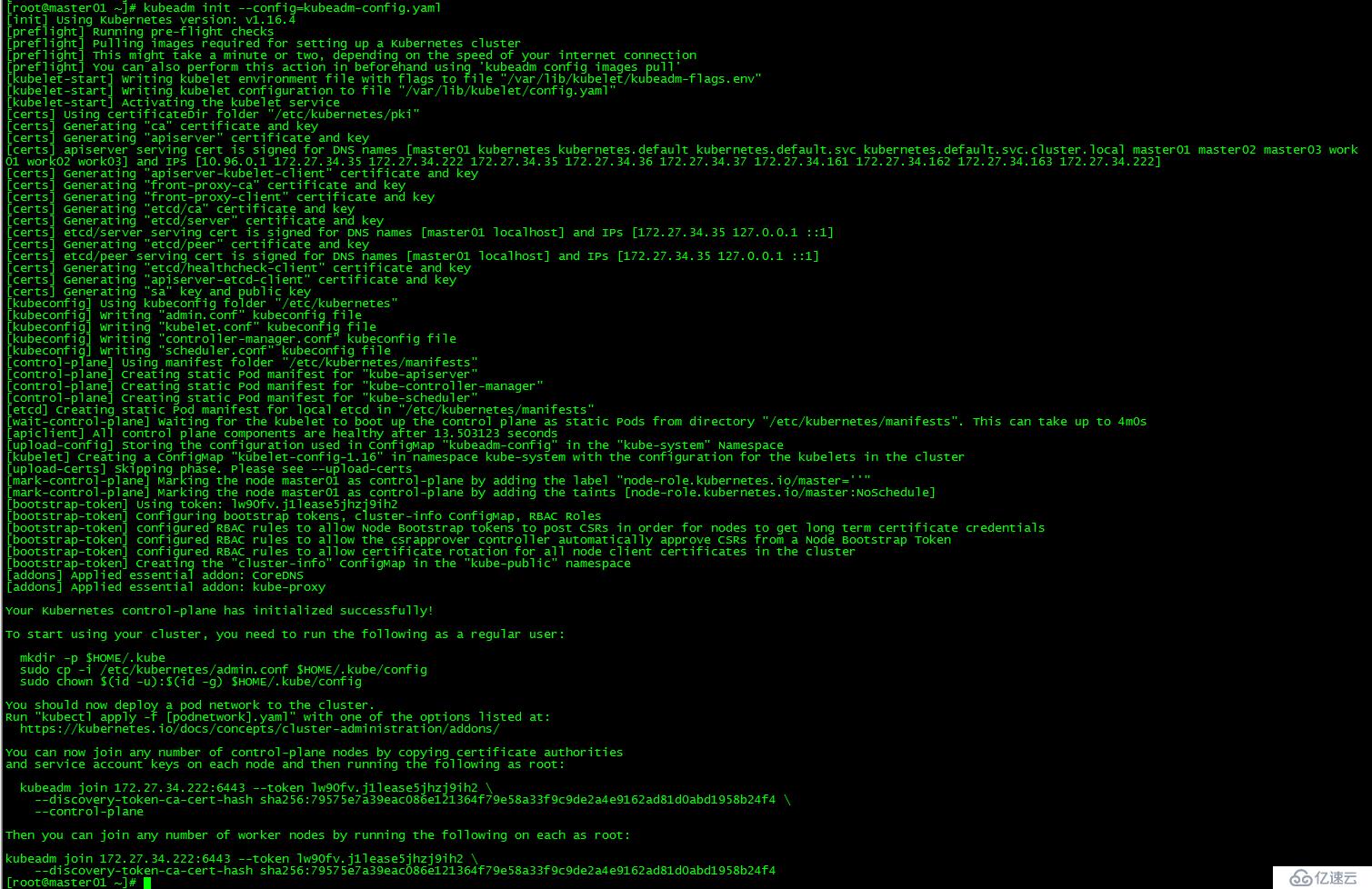

[root@master01 ~]# kubeadm init --config=kubeadm-config.yaml

Record the kubeadm join output. These commands are needed later to add the work nodes and the other control plane nodes to the cluster.

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

kubeadm join 172.27.34.222:6443 --token lw90fv.j1lease5jhzj9ih3 \

--discovery-token-ca-cert-hash sha256:79575e7a39eac086e121364f79e58a33f9c9de2a4e9162ad81d0abd1958b24f4 \

--control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 172.27.34.222:6443 --token lw90fv.j1lease5jhzj9ih3 \

--discovery-token-ca-cert-hash sha256:79575e7a39eac086e121364f79e58a33f9c9de2a4e9162ad81d0abd1958b24f4

Failed initialization:

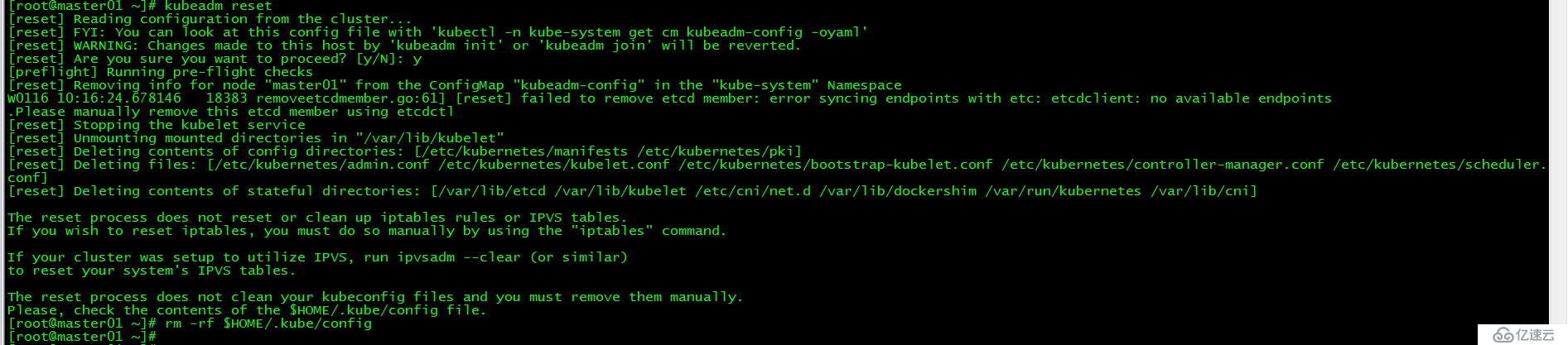

If initialization fails, run kubeadm reset and then initialize again

[root@master01 ~]# kubeadm reset

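[root@master01 ~]# rm -rf $HOME/.kube/config

After a successful initialization, load the environment variable so that kubectl can reach the cluster:

[root@master01 ~]# echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile

[root@master01 ~]# source .bash_profile

All operations in this article are run as root. For a non-root user, run the following instead: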

mkdir -p $HOME/.kube

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

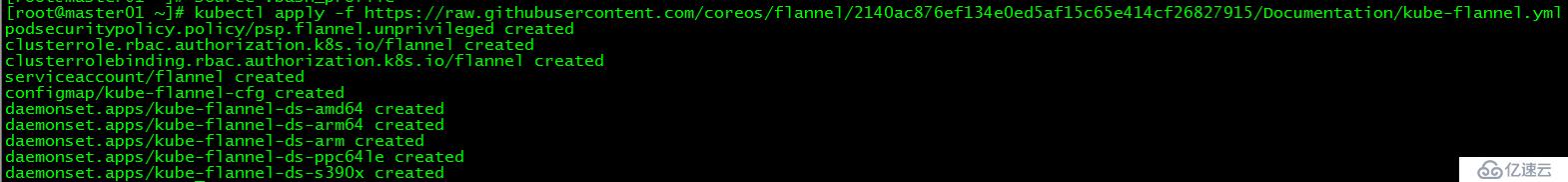

chown $(id -u):$(id -g) $HOME/.kube/config

Create the flannel network on master01

[root@master01 ~]# kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/2140ac876ef134e0ed5af15c65e414cf26827915/Documentation/kube-flannel.yml

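The installation may fail because of network problems. In that case, download the kube-flannel.yml file from the link at the end of the article and apply it.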

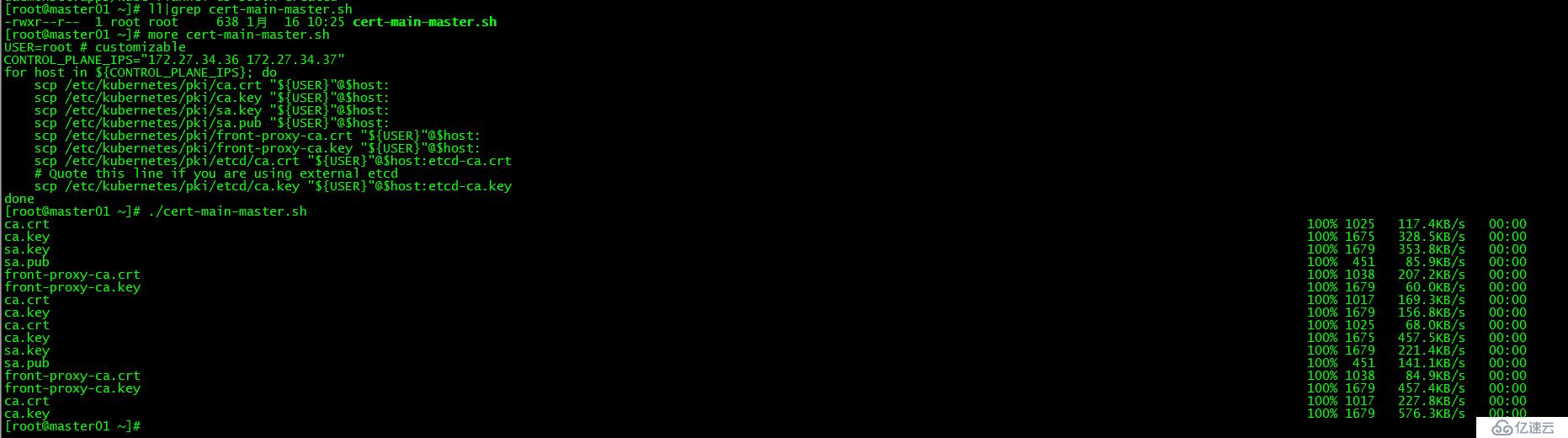

On master01, run the cert-main-master.sh script to distribute the certificates to master02 and master03

[root@master01 ~]# ll|grep cert-main-master.sh

-rwxr--r-- 1 root root 638 Jan 16 10:25 cert-main-master.sh

[root@master01 ~]# more cert-main-master.sh

USER=root # customizable

CONTROL_PLANE_IPS="172.27.34.36 172.27.34.37"

for host in ${CONTROL_PLANE_IPS}; do

scp /etc/kubernetes/pki/ca.crt "${USER}"@$host:

scp /etc/kubernetes/pki/ca.key "${USER}"@$host:

scp /etc/kubernetes/pki/sa.key "${USER}"@$host:

scp /etc/kubernetes/pki/sa.pub "${USER}"@$host:

scp /etc/kubernetes/pki/front-proxy-ca.crt "${USER}"@$host:

scp /etc/kubernetes/pki/front-proxy-ca.key "${USER}"@$host:

scp /etc/kubernetes/pki/etcd/ca.crt "${USER}"@$host:etcd-ca.crt

# Quote this line if you are using external etcd

scp /etc/kubernetes/pki/etcd/ca.key "${USER}"@$host:etcd-ca.key

done

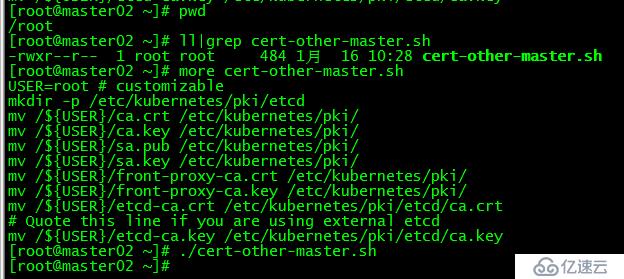

On master02, run the cert-other-master.sh script to move the certificates into the required directories

[root@master02 ~]# more cert-other-master.sh

USER=root # customizable

mkdir -p /etc/kubernetes/pki/etcd

mv /${USER}/ca.crt /etc/kubernetes/pki/

mv /${USER}/ca.key /etc/kubernetes/pki/

mv /${USER}/sa.pub /etc/kubernetes/pki/

mv /${USER}/sa.key /etc/kubernetes/pki/

mv /${USER}/front-proxy-ca.crt /etc/kubernetes/pki/

mv /${USER}/front-proxy-ca.key /etc/kubernetes/pki/

mv /${USER}/etcd-ca.crt /etc/kubernetes/pki/etcd/ca.crt

# Quote this line if you are using external etcd

mv /${USER}/etcd-ca.key /etc/kubernetes/pki/etcd/ca.key

[root@master02 ~]# ./cert-other-master.sh

Run cert-other-master.sh on master03 as well

[root@master03 ~]# pwd

/root

[root@master03 ~]# ll|grep cert-other-master.sh

-rwxr--r-- 1 root root 484 Jan 16 10:30 cert-other-master.sh

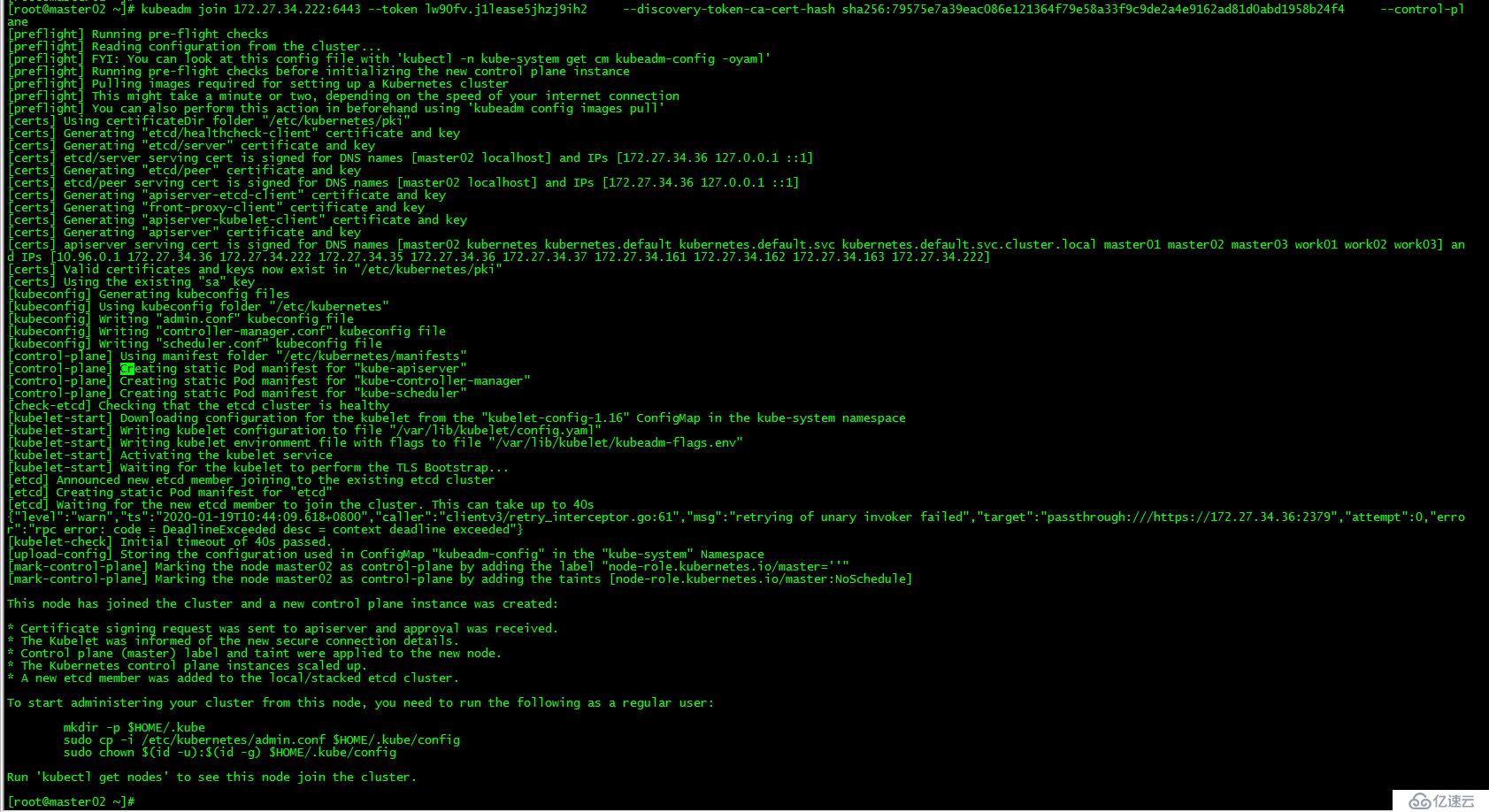

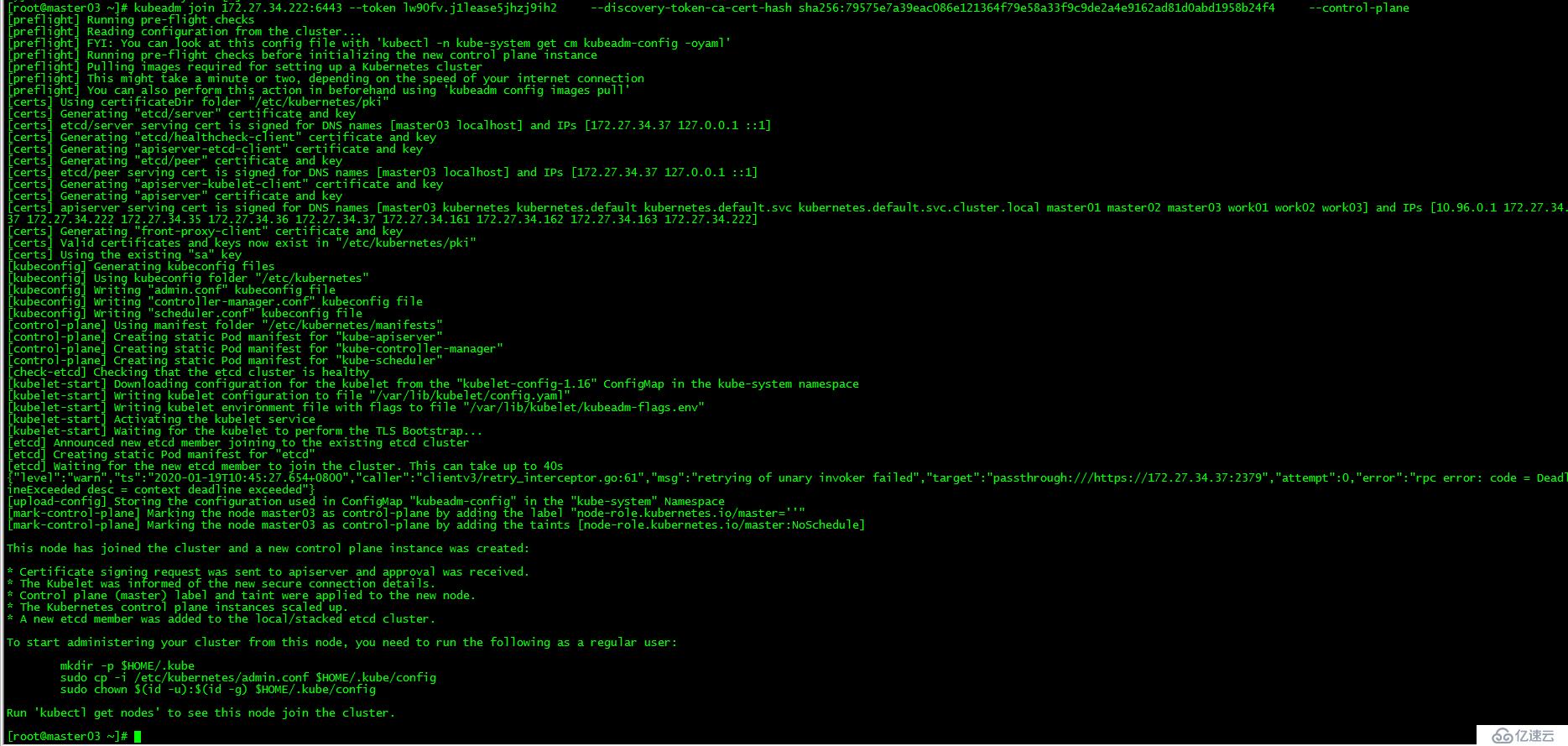

[root@master03 ~]# ./cert-other-master.sh

Run the control plane join command generated when master01 was initialized:

[root@master03 ~]# kubeadm join 172.27.34.222:6443 --token lw90fv.j1lease5jhzj9ih3 --discovery-token-ca-cert-hash sha256:79575e7a39eac086e121364f79e58a33f9c9de2a4e9162ad81d0abd1958b24f4 --control-plane

[root@master03 ~]# kubeadm join 172.27.34.222:6443 --token 0p7rzn.fdanprq4y8na36jh --discovery-token-ca-cert-hash sha256:fc7a828208d554329645044633159e9dc46b0597daf66769988fee8f3fc0636b --control-plane

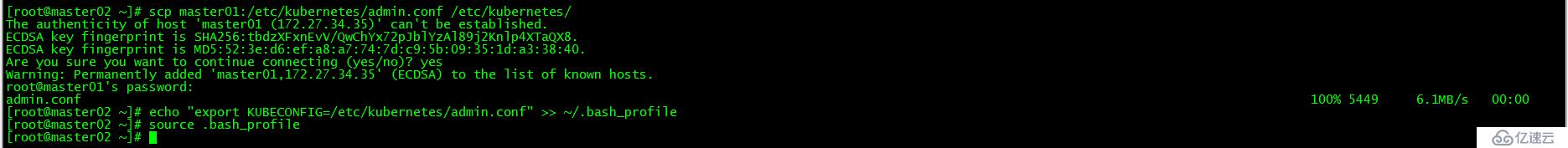

Load the environment variables on master02 and master03

[root@master02 ~]# scp master01:/etc/kubernetes/admin.conf /etc/kubernetes/

[root@master02 ~]# echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile

[root@master02 ~]# source .bash_profile

[root@master03 ~]# scp master01:/etc/kubernetes/admin.conf /etc/kubernetes/

[root@master03 ~]# echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile

[root@master03 ~]# source .bash_profile

This step makes kubectl usable on master02 and master03 as well.

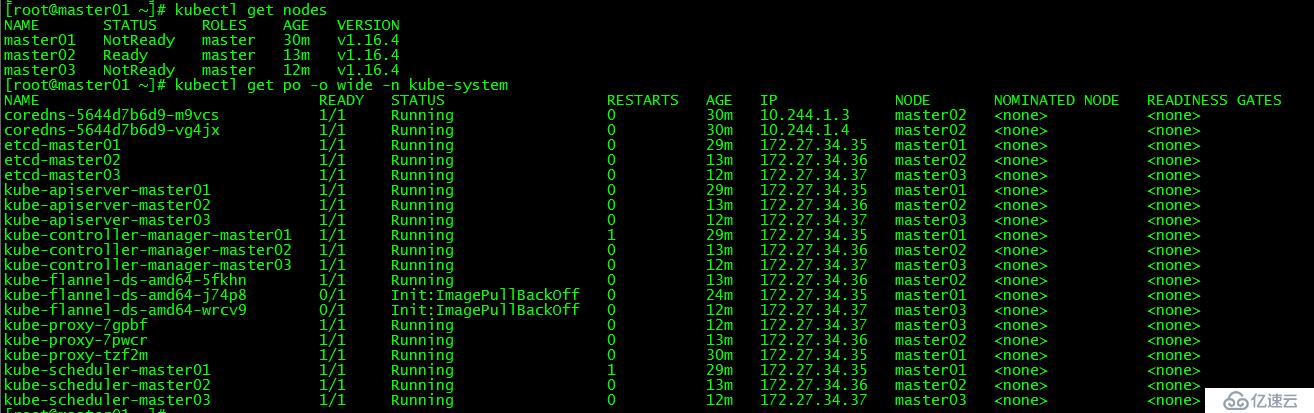

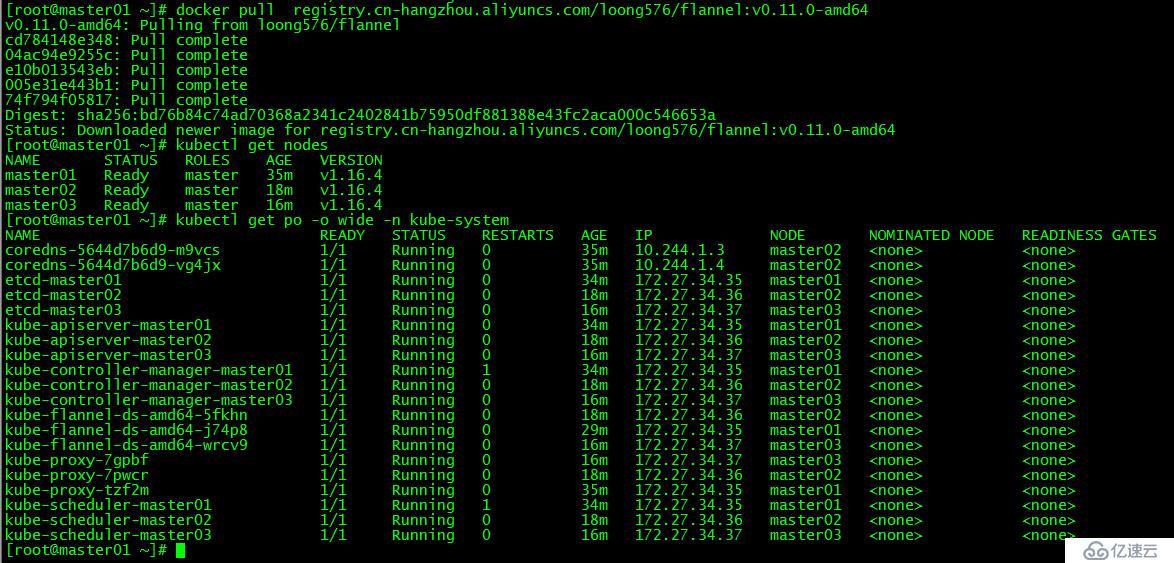

[root@master01 ~]# kubectl get nodes

[root@master01 ~]# kubectl get po -o wide -n kube-system

The flannel image failed to download on master01 and master03. After it was pulled manually on each of them, the pods ran normally.

[root@master01 ~]# docker pull registry.cn-hangzhou.aliyuncs.com/loong576/flannel:v0.11.0-amd64

[root@master03 ~]# docker pull registry.cn-hangzhou.aliyuncs.com/loong576/flannel:v0.11.0-amd64

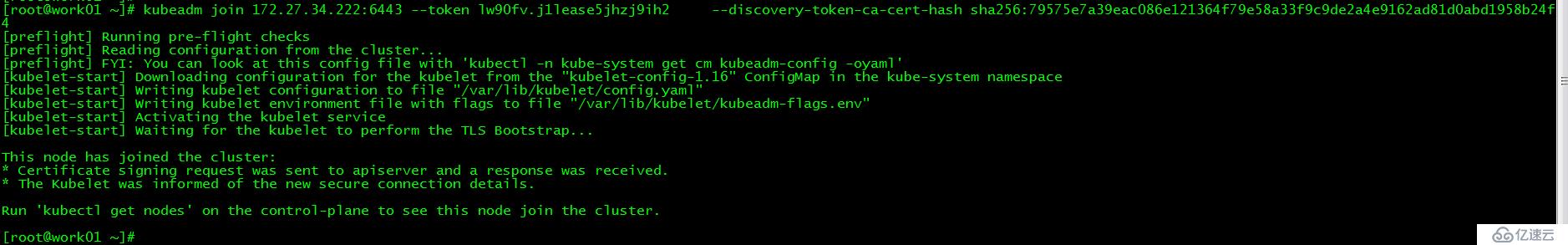

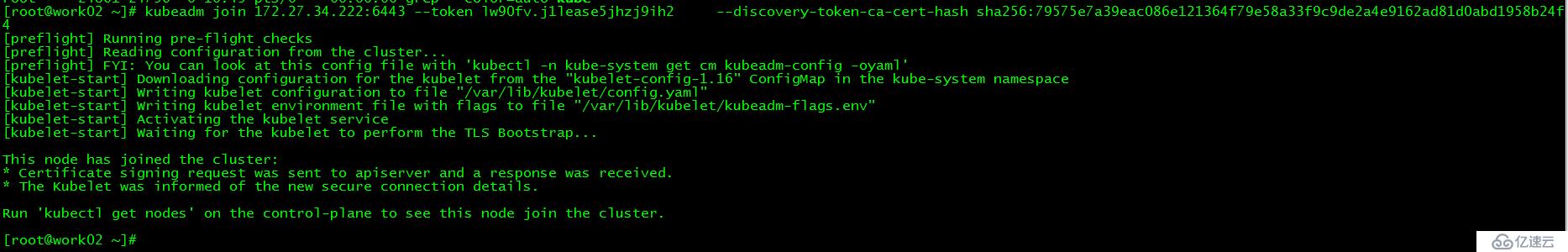

Run the worker join command generated when master01 was initialized:

[root@work01 ~]# kubeadm join 172.27.34.222:6443 --token lw90fv.j1lease5jhzj9ih3 --discovery-token-ca-cert-hash sha256:79575e7a39eac086e121364f79e58a33f9c9de2a4e9162ad81d0abd1958b24f4

[root@work02 ~]# kubeadm join 172.27.34.222:6443 --token lw90fv.j1lease5jhzj9ih3 --discovery-token-ca-cert-hash sha256:79575e7a39eac086e121364f79e58a33f9c9de2a4e9162ad81d0abd1958b24f4

[root@work03 ~]# kubeadm join 172.27.34.222:6443 --token lw90fv.j1lease5jhzj9ih3 --discovery-token-ca-cert-hash sha256:79575e7a39eac086e121364f79e58a33f9c9de2a4e9162ad81d0abd1958b24f4

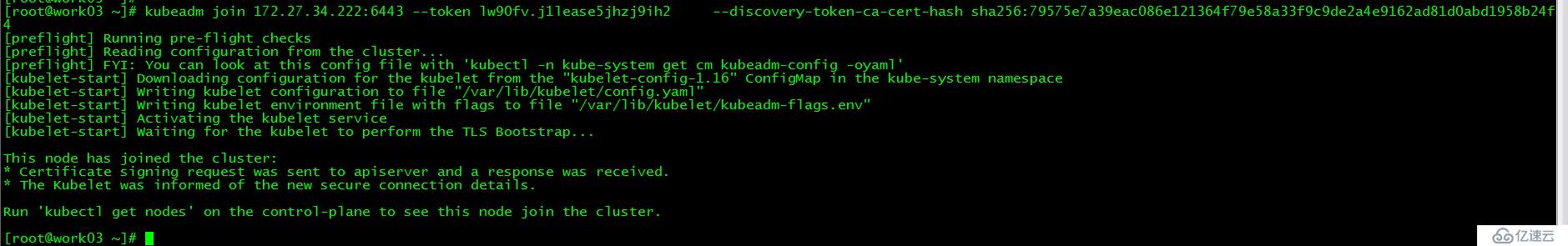

[root@master01 ~]# kubectl get nodes

[root@master01 ~]# kubectl get po -o wide -n kube-system

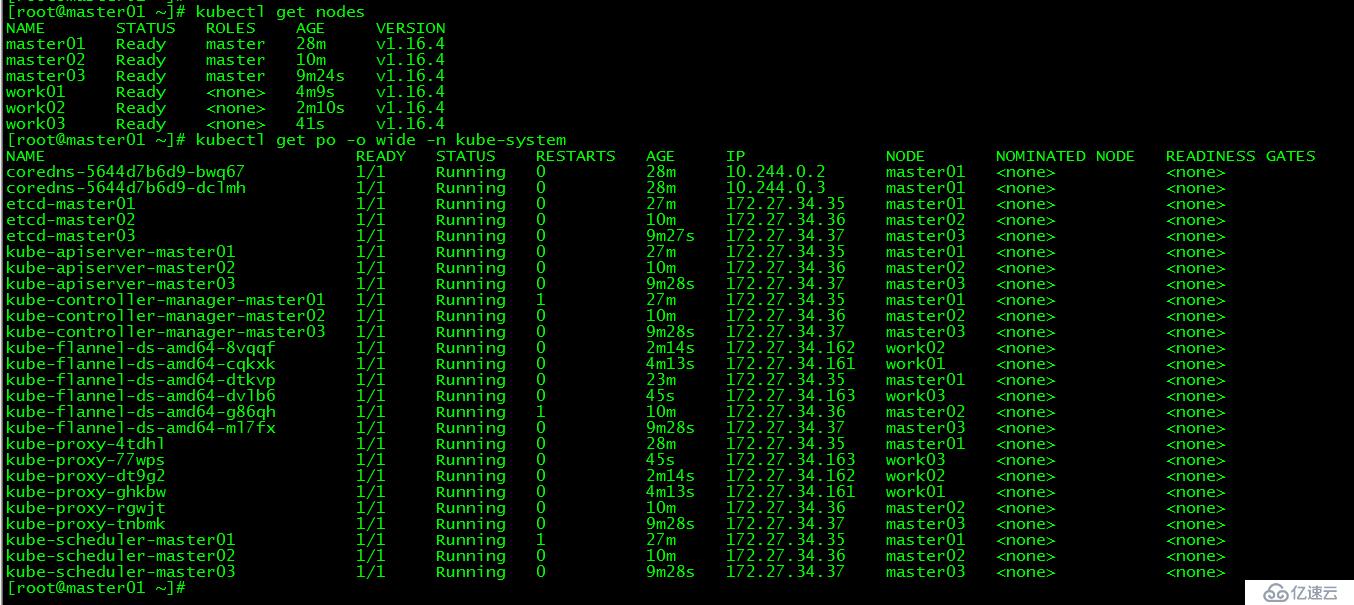

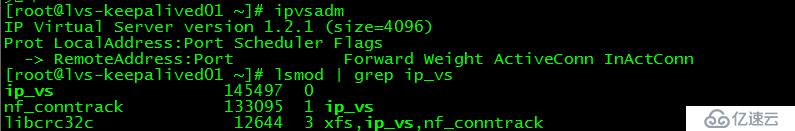

Perform this step on both lvs-keepalived01 and lvs-keepalived02.

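LVS itself needs no installation because it is part of the kernel. What gets installed are management tools, of which there are two: ipvsadm and keepalived. ipvsadm manages LVS from the command line, while keepalived manages it through a configuration file.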

[root@lvs-keepalived01 ~]# yum -y install ipvsadm

Run ipvsadm once to load the ip_vs module into the kernel

[root@lvs-keepalived01 ~]# ipvsadm

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

[root@lvs-keepalived01 ~]# lsmod | grep ip_vs

ip_vs 145497 0

nf_conntrack 133095 1 ip_vs

libcrc32c 12644 3 xfs,ip_vs,nf_conntrack

For more hands-on LVS material, see: "Building and testing LVS+Keepalived+Nginx load balancing"

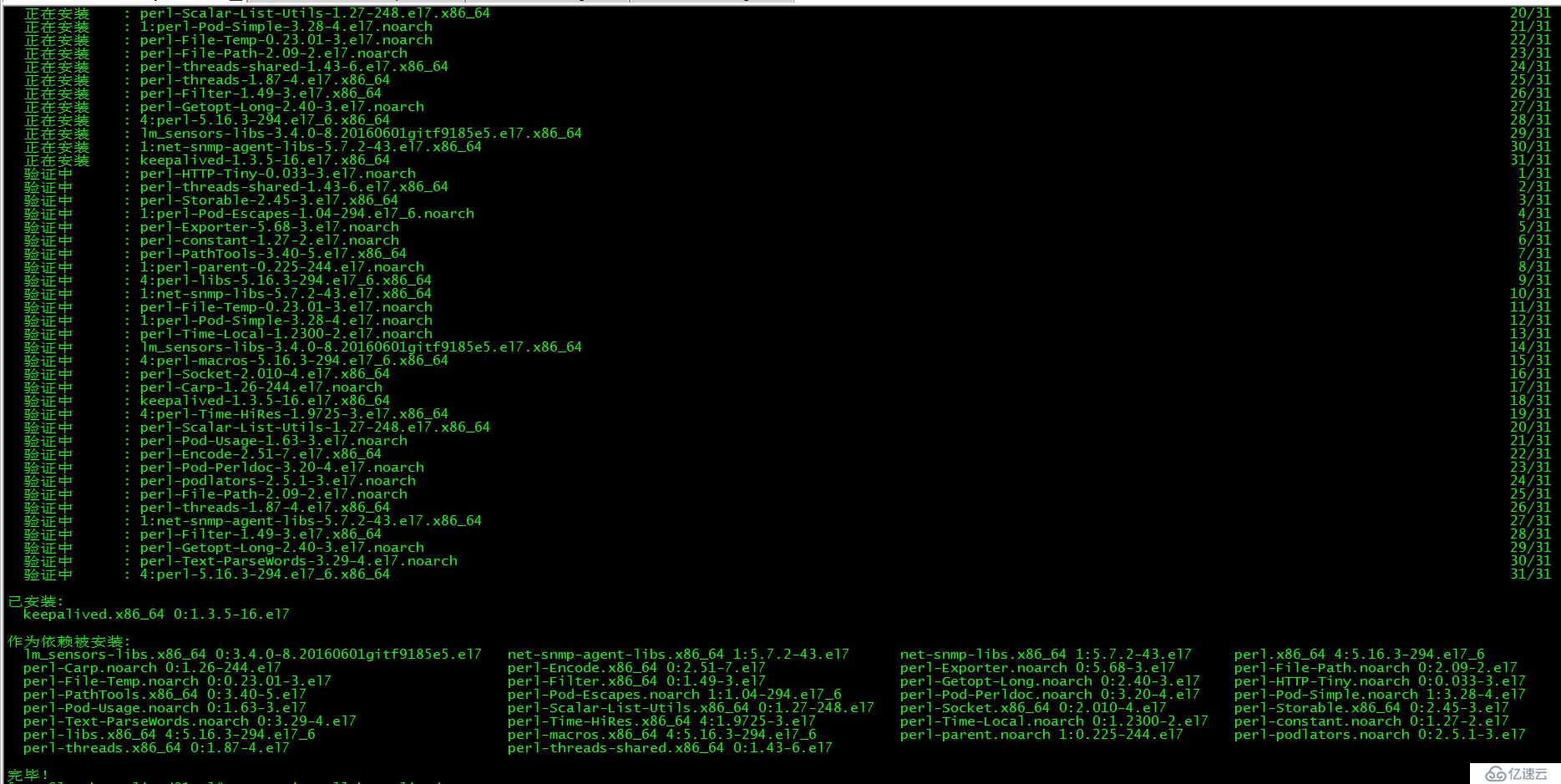

Perform this step on both lvs-keepalived01 and lvs-keepalived02.

[root@lvs-keepalived01 ~]# yum -y install keepalived

lvs-keepalived01 is configured as follows:

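[root@lvs-keepalived01 ~]# more /etc/keepalived/keepalived.conf
! Configuration File for keepalived
global_defs {
    router_id lvs-keepalived01    #machine identifier, usually the hostname but not necessarily; used in failure notification e-mails
}

vrrp_instance VI_1 {              #VRRP instance definition
    state MASTER                  #LVS role, MASTER or BACKUP; must be upper case
    interface ens160              #interface that provides the service
    virtual_router_id 100         #virtual router ID (a number); identical on all members of one VRRP instance, unique per instance
    priority 100                  #priority; higher numbers win. Within one vrrp_instance the MASTER must have a higher priority than the BACKUP
    advert_int 1                  #interval in seconds between MASTER/BACKUP synchronization checks
    authentication {              #authentication type and password
        auth_type PASS            #mainly PASS or AH
        auth_pass 1111            #password; MASTER and BACKUP of the same vrrp_instance must use the same one
    }
    virtual_ipaddress {           #virtual IP addresses; several allowed, one per line
        172.27.34.222
    }
}

virtual_server 172.27.34.222 6443 {   #virtual server: VIP and service port
    delay_loop 6                  #health-check interval
    lb_algo wrr                   #scheduling algorithm (weighted round robin)
    lb_kind DR                    #forwarding method (direct routing)
    #persistence_timeout 50       #session persistence timeout; very useful for dynamic web pages
    protocol TCP                  #forwarding protocol, TCP or UDP
    real_server 172.27.34.35 6443 {   #real server 1: its real IP address and port
        weight 10                 #weight; higher numbers get more traffic
        TCP_CHECK {               #real server health check; times in seconds
            connect_timeout 10    #connection timeout: 10 seconds
            retry 3               #number of retries
            delay_before_retry 3  #delay between retries
            connect_port 6443     #port to check: 6443, same as above
        }
    }
    real_server 172.27.34.36 6443 {   #real server 2: its real IP address and port
        weight 10                 #weight; higher numbers get more traffic
        TCP_CHECK {               #real server health check; times in seconds
            connect_timeout 10    #connection timeout: 10 seconds
            retry 3               #number of retries
            delay_before_retry 3  #delay between retries
            connect_port 6443     #port to check: 6443, same as above
        }
    }
    real_server 172.27.34.37 6443 {   #real server 3: its real IP address and port
        weight 10                 #weight; higher numbers get more traffic
        TCP_CHECK {               #real server health check; times in seconds
            connect_timeout 10    #connection timeout: 10 seconds
            retry 3               #number of retries
            delay_before_retry 3  #delay between retries
            connect_port 6443     #port to check: 6443, same as above
        }
    }
}

lvs-keepalived02 is configured as follows: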

[root@lvs-keepalived02 ~]# more /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id lvs-keepalived02 #router_id 機器標識,通常為hostname,但不一定非得是hostname。故障發生時,郵件通知會用到。

}

vrrp_instance VI_1 { #vrrp實例定義部分

state BACKUP #設置lvs的狀態,MASTER和BACKUP兩種,必須大寫

interface ens160 #設置對外服務的接口

virtual_router_id 100 #設置虛擬路由標示,這個標示是一個數字,同一個vrrp實例使用唯一標示

priority 90 #定義優先級,數字越大優先級越高,在一個vrrp——instance下,master的優先級必須大于backup

advert_int 1 #設定master與backup負載均衡器之間同步檢查的時間間隔,單位是秒

authentication { #設置驗證類型和密碼

auth_type PASS #主要有PASS和AH兩種

auth_pass 1111 #驗證密碼,同一個vrrp_instance下MASTER和BACKUP密碼必須相同

}

virtual_ipaddress { #設置虛擬ip地址,可以設置多個,每行一個

172.27.34.222

}

}

virtual_server 172.27.34.222 6443 { #設置虛擬服務器,需要指定虛擬ip和服務端口

delay_loop 6 #健康檢查時間間隔

lb_algo wrr #負載均衡調度算法

lb_kind DR #負載均衡轉發規則

#persistence_timeout 50 #設置會話保持時間,對動態網頁非常有用

protocol TCP #指定轉發協議類型,有TCP和UDP兩種

real_server 172.27.34.35 6443 { #配置服務器節點1,需要指定real server的真實IP地址和端口

weight 10 #設置權重,數字越大權重越高

TCP_CHECK { #realserver的狀態監測設置部分單位秒

connect_timeout 10 #連接超時為10秒

retry 3 #重連次數

delay_before_retry 3 #重試間隔

connect_port 6443 #連接端口為6443,要和上面的保持一致

}

}

real_server 172.27.34.36 6443 { #配置服務器節點1,需要指定real server的真實IP地址和端口

weight 10 #設置權重,數字越大權重越高

TCP_CHECK { #realserver的狀態監測設置部分單位秒

connect_timeout 10 #連接超時為10秒

retry 3 #重連次數

delay_before_retry 3 #重試間隔

connect_port 6443 #連接端口為6443,要和上面的保持一致

}

}

real_server 172.27.34.37 6443 { #配置服務器節點1,需要指定real server的真實IP地址和端口

weight 10 #設置權重,數字越大權重越高

TCP_CHECK { #realserver的狀態監測設置部分單位秒

connect_timeout 10 #連接超時為10秒

retry 3 #重連次數

delay_before_retry 3 #重試間隔

connect_port 6443 #連接端口為6443,要和上面的保持一致

}

}

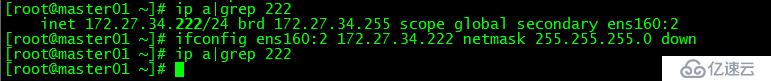

}[root@master01 ~]# ifconfig ens160:2 172.27.34.222 netmask 255.255.255.0 down

master01上去掉初始化使用的ip 172.27.34.222

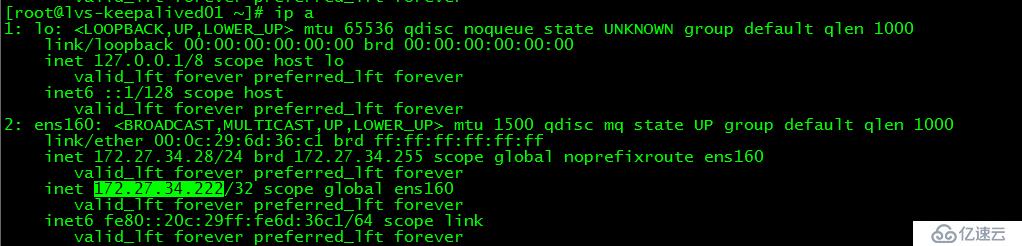

Start keepalived on both lvs-keepalived01 and lvs-keepalived02 and enable it at boot

[root@lvs-keepalived01 ~]# service keepalived start

Redirecting to /bin/systemctl start keepalived.service

[root@lvs-keepalived01 ~]# systemctl enable keepalived

Created symlink from /etc/systemd/system/multi-user.target.wants/keepalived.service to /usr/lib/systemd/system/keepalived.service.

[root@lvs-keepalived01 ~]# ip a

The VIP is now on lvs-keepalived01

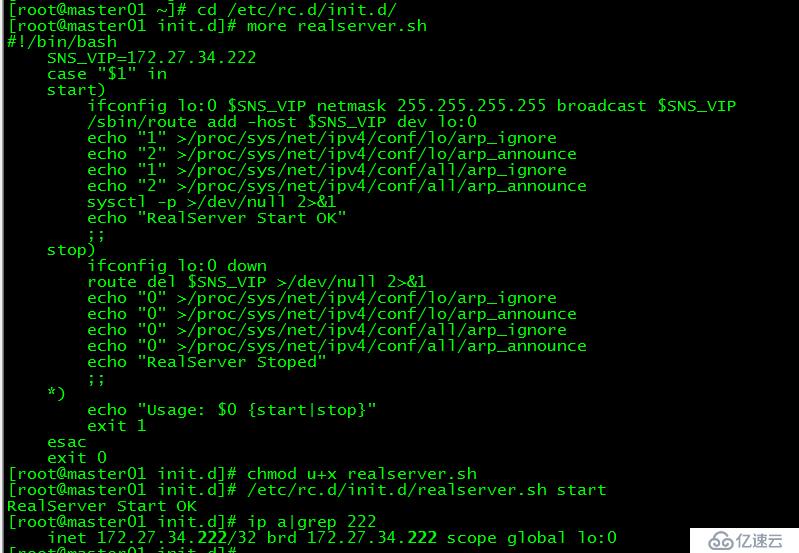
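Both keepalived.conf files repeat an identical real_server block for each control plane node; only the IP differs between blocks. A small generator can emit these blocks from a list of IPs, and its output can then be pasted into the virtual_server section. This is a sketch: gen_real_servers is a hypothetical helper, and the weight and TCP_CHECK values are copied from the configuration above.

```shell
#!/usr/bin/env bash
# Emit one keepalived real_server block per control-plane IP, using the same
# weight and TCP_CHECK settings as the keepalived.conf files in this article.
gen_real_servers() {
  local port="$1"; shift
  local ip
  for ip in "$@"; do
    cat <<EOF
    real_server $ip $port {
        weight 10
        TCP_CHECK {
            connect_timeout 10
            retry 3
            delay_before_retry 3
            connect_port $port
        }
    }
EOF
  done
}

gen_real_servers 6443 172.27.34.35 172.27.34.36 172.27.34.37
```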

Perform this step on all control plane nodes.

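On each control plane server, bind the VIP to the loopback interface, add a host route for it and turn off ARP replies for it. All three control plane nodes are configured the same way: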

[root@master01 ~]# cd /etc/rc.d/init.d/

[root@master01 init.d]# more realserver.sh
#!/bin/bash
SNS_VIP=172.27.34.222
case "$1" in
start)
    ifconfig lo:0 $SNS_VIP netmask 255.255.255.255 broadcast $SNS_VIP
    /sbin/route add -host $SNS_VIP dev lo:0
    echo "1" >/proc/sys/net/ipv4/conf/lo/arp_ignore
    echo "2" >/proc/sys/net/ipv4/conf/lo/arp_announce
    echo "1" >/proc/sys/net/ipv4/conf/all/arp_ignore
    echo "2" >/proc/sys/net/ipv4/conf/all/arp_announce
    sysctl -p >/dev/null 2>&1
    echo "RealServer Start OK"
    ;;
stop)
    ifconfig lo:0 down
    route del $SNS_VIP >/dev/null 2>&1
    echo "0" >/proc/sys/net/ipv4/conf/lo/arp_ignore
    echo "0" >/proc/sys/net/ipv4/conf/lo/arp_announce
    echo "0" >/proc/sys/net/ipv4/conf/all/arp_ignore
    echo "0" >/proc/sys/net/ipv4/conf/all/arp_announce
    echo "RealServer Stopped"
    ;;
*)
    echo "Usage: $0 {start|stop}"
    exit 1
esac
exit 0

This script binds the VIP on each control plane node and suppresses ARP replies for it. In LVS DR mode, the director forwards packets with the VIP still as their destination, so each real server must hold the VIP locally (on lo) to accept them. However, only the director may answer ARP broadcasts for the VIP. Because every control plane node also holds the VIP, they would all answer if left unconfigured, and ARP resolution for the VIP would break down.
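realserver.sh writes the ARP settings straight into /proc, so they are lost on reboot, which is why the article re-runs the script from rc.local at boot. The `sysctl -p` inside the script only reloads /etc/sysctl.conf. As an alternative for the ARP part, the same values can be kept in a sysctl.d drop-in that systemd-sysctl applies at boot. The lo:0 VIP binding still needs the script. This is a sketch: write_arp_sysctl and the file name 90-lvs-dr.conf are illustrative choices.

```shell
#!/usr/bin/env bash
# Persist the ARP settings from realserver.sh as a sysctl.d drop-in.
write_arp_sysctl() {
  local target="${1:-/etc/sysctl.d/90-lvs-dr.conf}"
  cat > "$target" <<'EOF'
net.ipv4.conf.lo.arp_ignore = 1
net.ipv4.conf.lo.arp_announce = 2
net.ipv4.conf.all.arp_ignore = 1
net.ipv4.conf.all.arp_announce = 2
EOF
}

# Demo into a scratch file; on a node, apply with: sysctl -p /etc/sysctl.d/90-lvs-dr.conf
f=$(mktemp)
write_arp_sysctl "$f"
cat "$f"
```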

Run realserver.sh on all control plane nodes:

[root@master01 init.d]# chmod u+x realserver.sh

[root@master01 init.d]# /etc/rc.d/init.d/realserver.sh start

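RealServer Start OK

This makes realserver.sh executable and runs it. To run it at every boot, append it to /etc/rc.d/rc.local, which must itself be executable on CentOS 7: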

[root@master01 init.d]# sed -i '$a /etc/rc.d/init.d/realserver.sh start' /etc/rc.d/rc.local

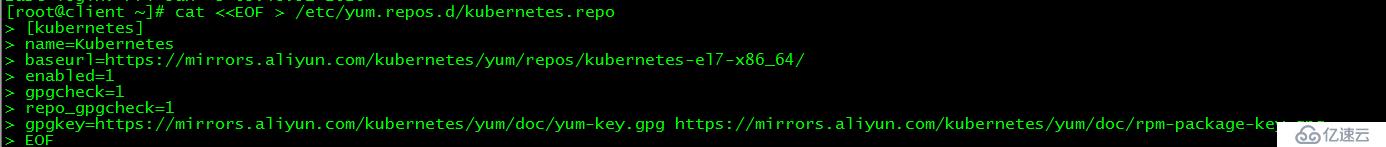

[root@master01 init.d]# chmod u+x /etc/rc.d/rc.local

On the client node, configure the Kubernetes repository:

[root@client ~]# cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

[root@client ~]# yum clean all

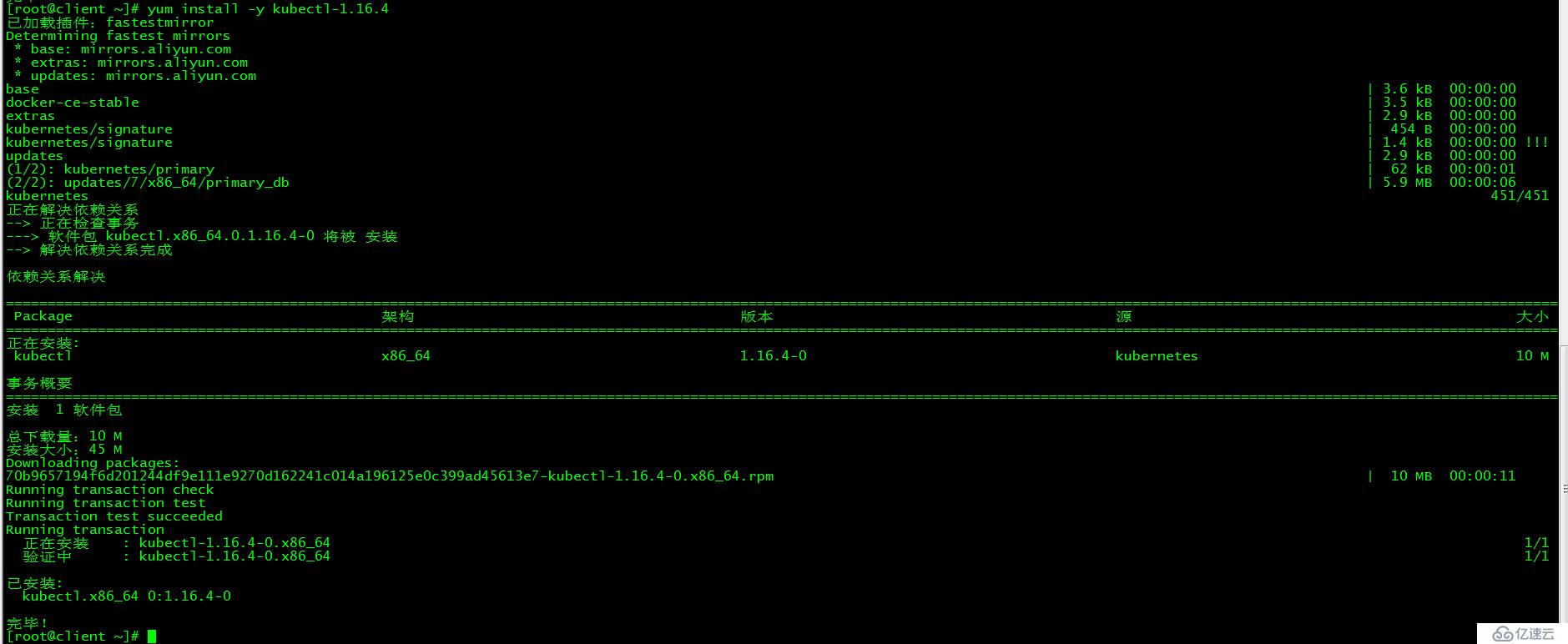

[root@client ~]# yum -y makecache

[root@client ~]# yum install -y kubectl-1.16.4

Install the same version that the cluster runs

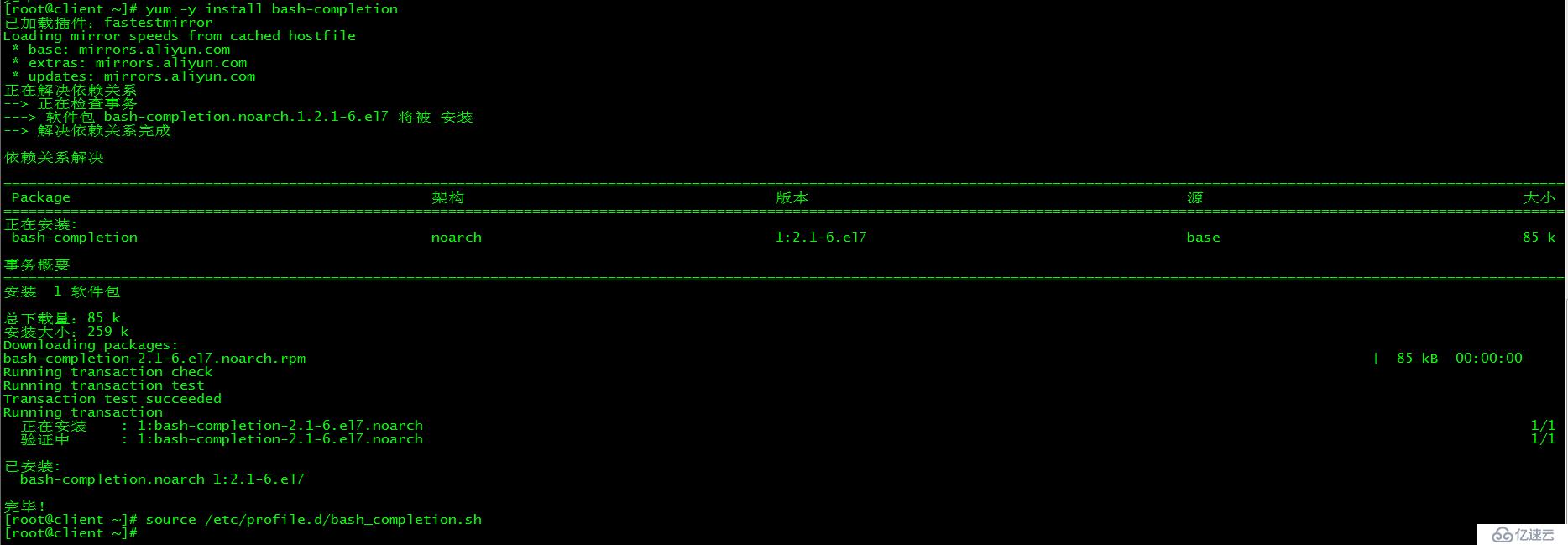

[root@client ~]# yum -y install bash-completion

[root@client ~]# source /etc/profile.d/bash_completion.sh

[root@client ~]# mkdir -p /etc/kubernetes

[root@client ~]# scp 172.27.34.35:/etc/kubernetes/admin.conf /etc/kubernetes/

[root@client ~]# echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile

[root@client ~]# source .bash_profile

[root@client ~]# echo "source <(kubectl completion bash)" >> ~/.bash_profile

[root@client ~]# source .bash_profile

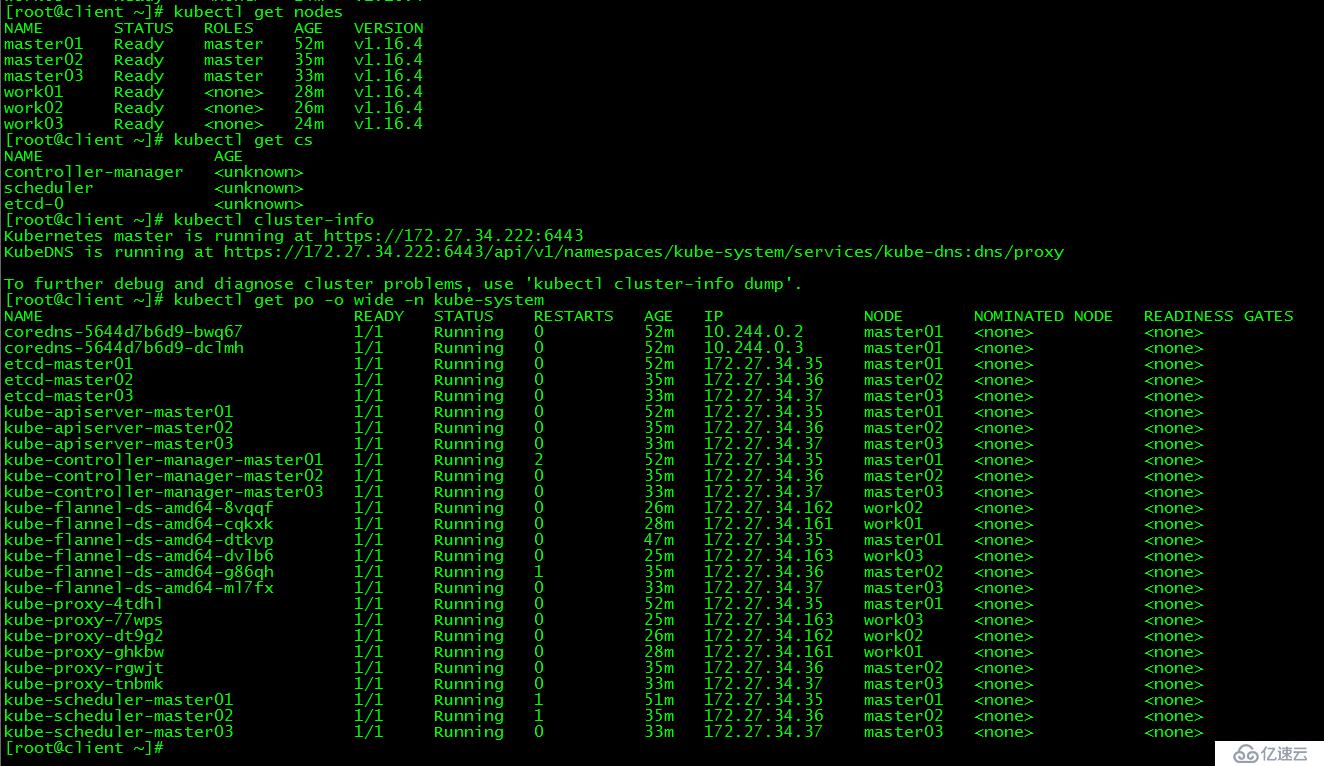

[root@client ~]# kubectl get nodes

[root@client ~]# kubectl get cs

[root@client ~]# kubectl cluster-info

[root@client ~]# kubectl get po -o wide -n kube-system

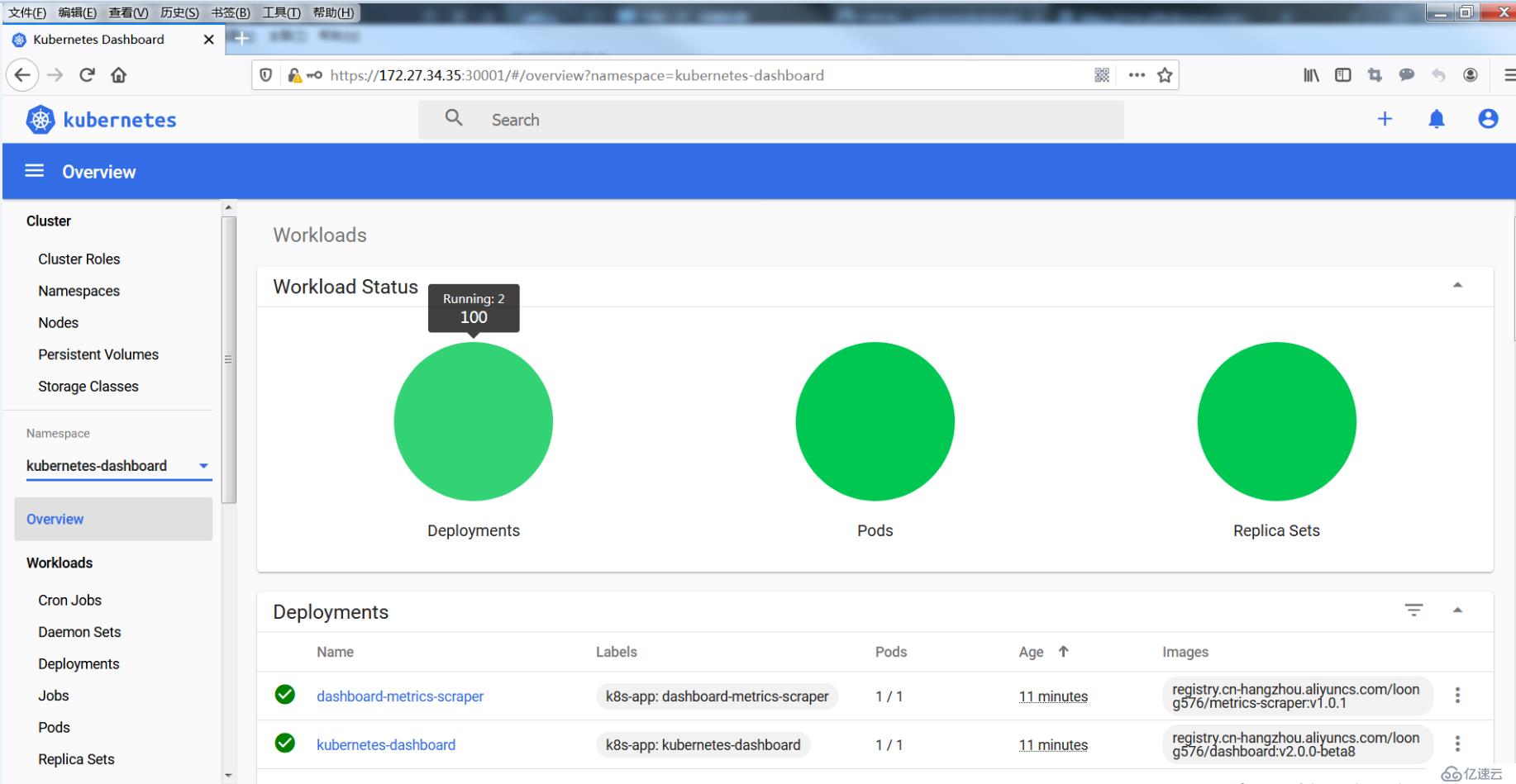

Everything in this section is done on the client node.

[root@client ~]# wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-beta8/aio/deploy/recommended.yaml

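If the connection times out, try a few more times. recommended.yaml has also been uploaded and can be downloaded from the link at the end of the article.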

[root@client ~]# sed -i 's/kubernetesui/registry.cn-hangzhou.aliyuncs.com\/loong576/g' recommended.yaml

The default image registry is unreachable, so switch to the Aliyun mirror

[root@client ~]# sed -i '/targetPort: 8443/a\ \ \ \ \ \ nodePort: 30001\n\ \ type: NodePort' recommended.yaml

Configure a NodePort so that the Dashboard is reachable from outside at https://NodeIp:NodePort. Here the port is 30001.

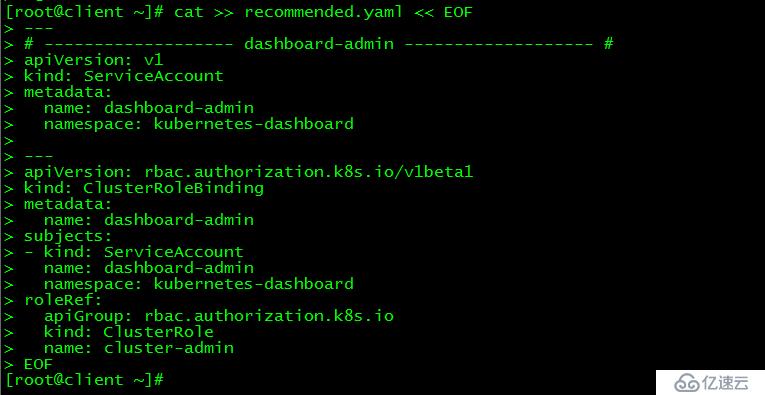

[root@client ~]# cat >> recommended.yaml << EOF
---
# ------------------- dashboard-admin ------------------- #
apiVersion: v1
kind: ServiceAccount
metadata:
  name: dashboard-admin
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: dashboard-admin
subjects:
- kind: ServiceAccount
  name: dashboard-admin
  namespace: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
EOF

Create a cluster-admin (superuser) account for logging in to the Dashboard

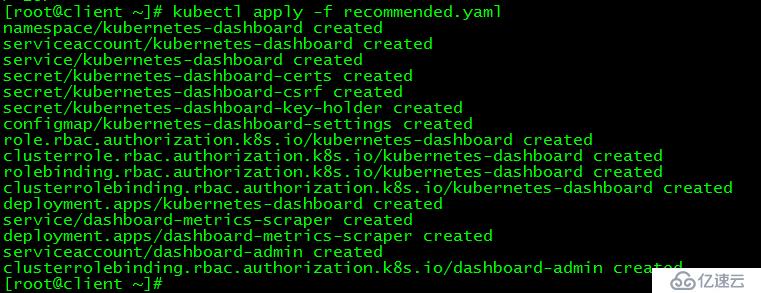

[root@client ~]# kubectl apply -f recommended.yaml

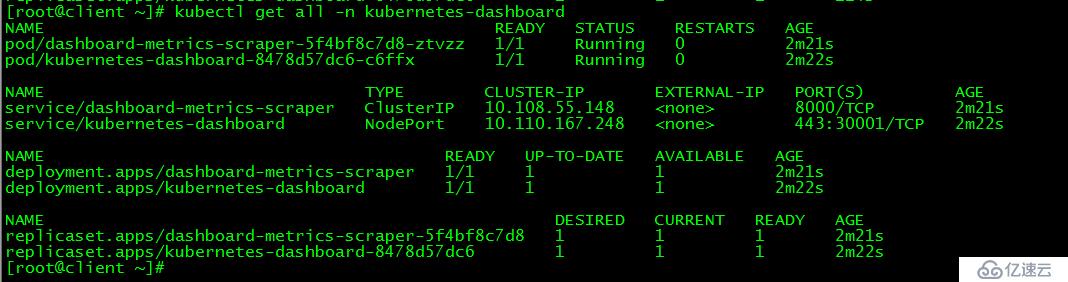

[root@client ~]# kubectl get all -n kubernetes-dashboard

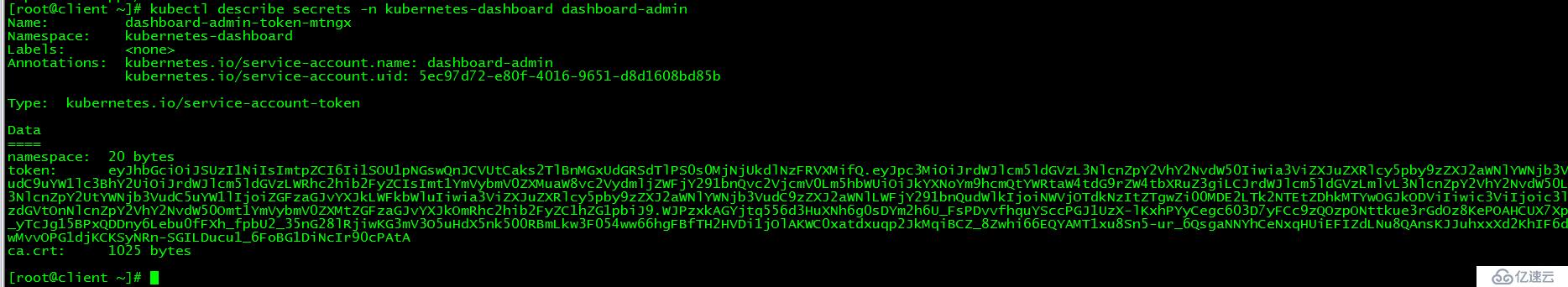

[root@client ~]# kubectl describe secrets -n kubernetes-dashboard dashboard-admin

The token is:

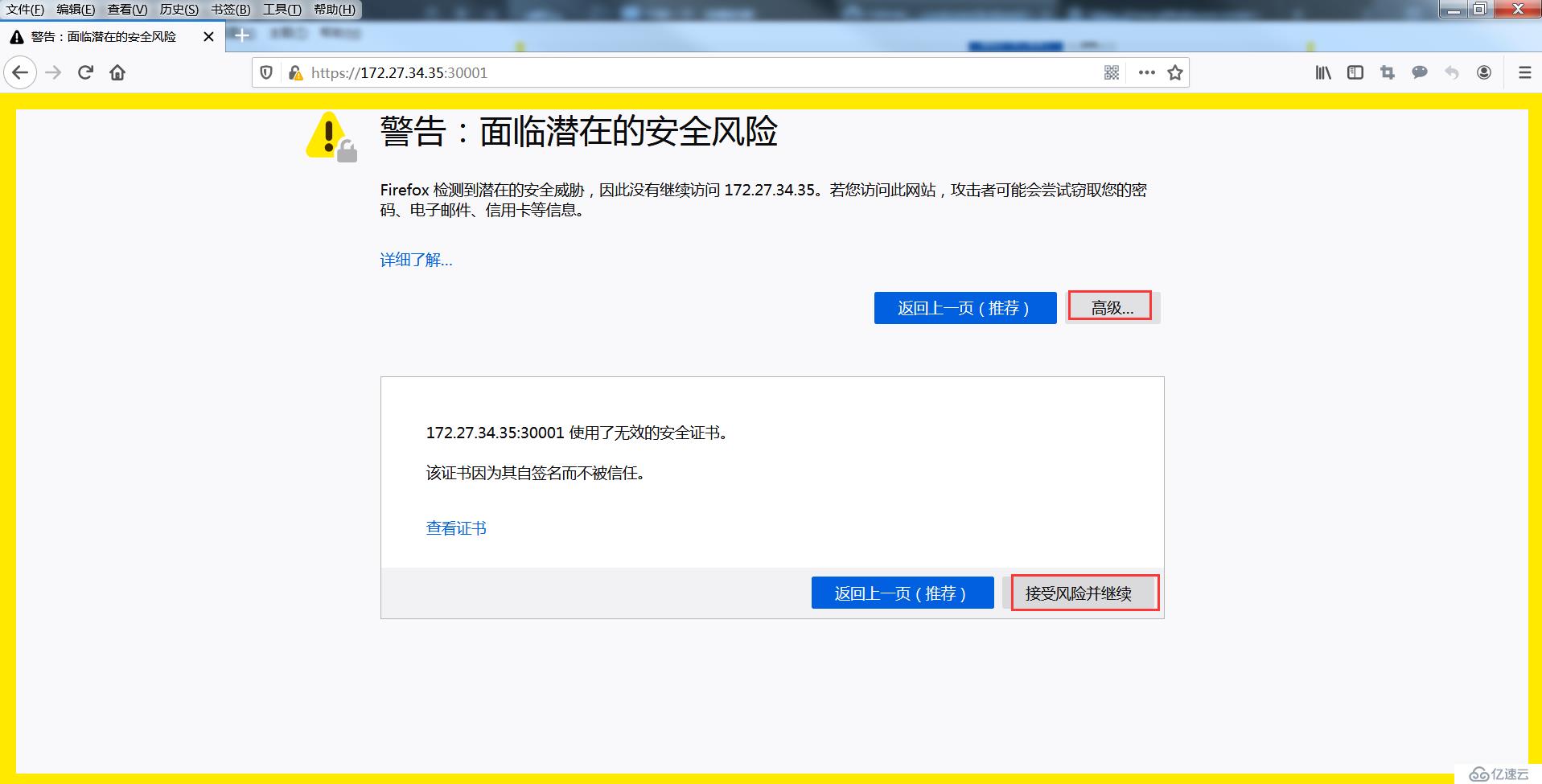

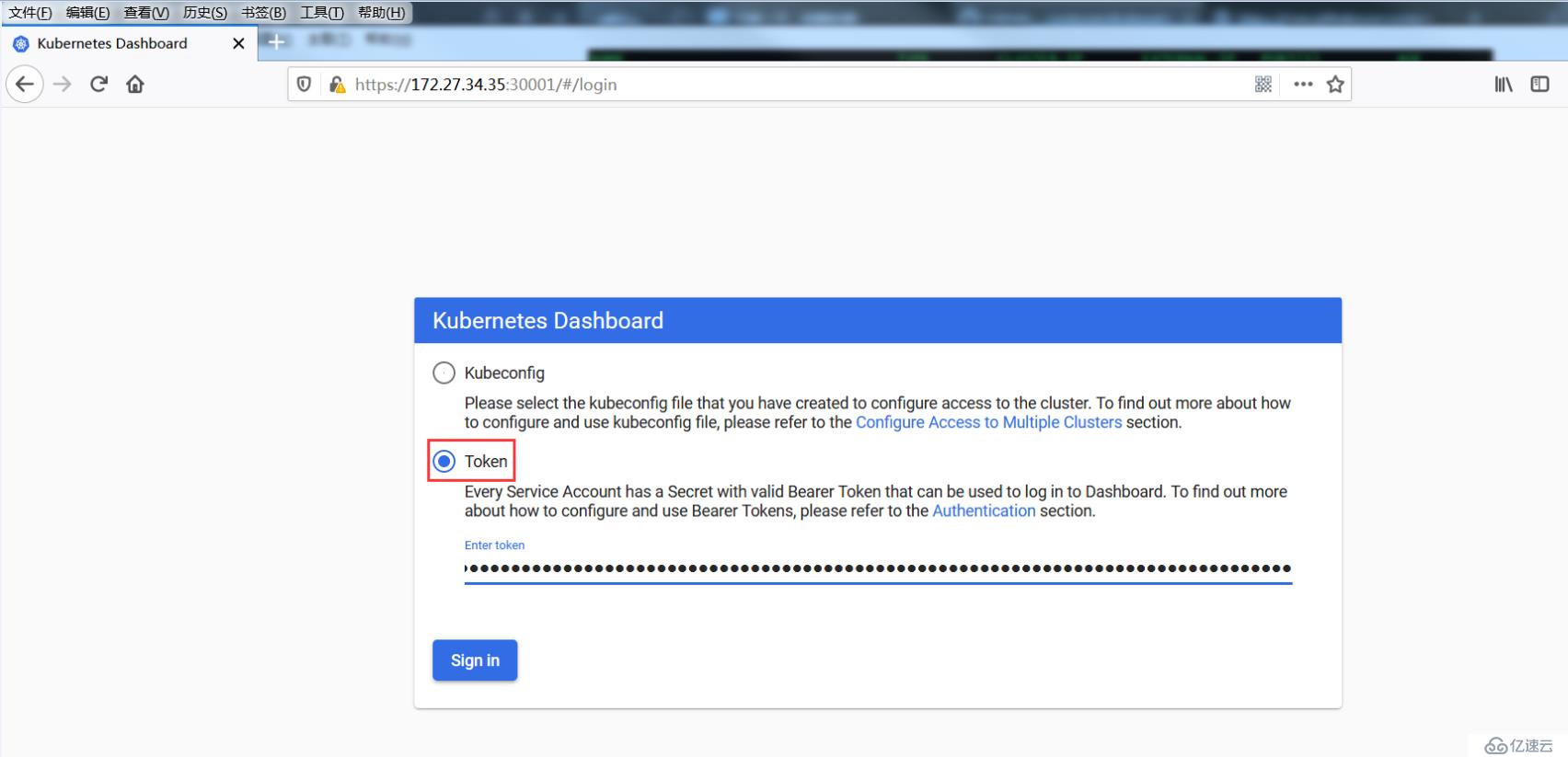

eyJhbGciOiJSUzI1NiIsImtpZCI6Ii1SOU1pNGswQnJCVUtCaks2TlBnMGxUdGRSdTlPS0s0MjNjUkdlNzFRVXMifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4tbXRuZ3giLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiNWVjOTdkNzItZTgwZi00MDE2LTk2NTEtZDhkMTYwOGJkODViIiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmVybmV0ZXMtZGFzaGJvYXJkOmRhc2hib2FyZC1hZG1pbiJ9.WJPzxkAGYjtq556d3HuXNh7g0sDYm2h7U_FsPDvvfhquYSccPGJ1UzX-lKxhPYyCegc603D7yFCc9zQOzpONttkue3rGdOz8KePOAHCUX7Xp_yTcJg15BPxQDDny6Lebu0fFXh_fpbU2_35nG28lRjiwKG3mV3O5uHdX5nk500RBmLkw3F054ww66hgFBfTH2HVDi1jOlAKWC0xatdxuqp2JkMqiBCZ_8Zwhi66EQYAMT1xu8Sn5-ur_6QsgaNNYhCeNxqHUiEFIZdLNu8QAnsKJJuhxxXd2KhIF6dwMvvOPG1djKCKSyNRn-SGILDucu1_6FoBG1DiNcIr90cPAtA

In Firefox, open https://<control plane IP>:30001, that is, https://172.27.34.35:30001, https://172.27.34.36:30001 or https://172.27.34.37:30001

Accept the risk

Log in with the token

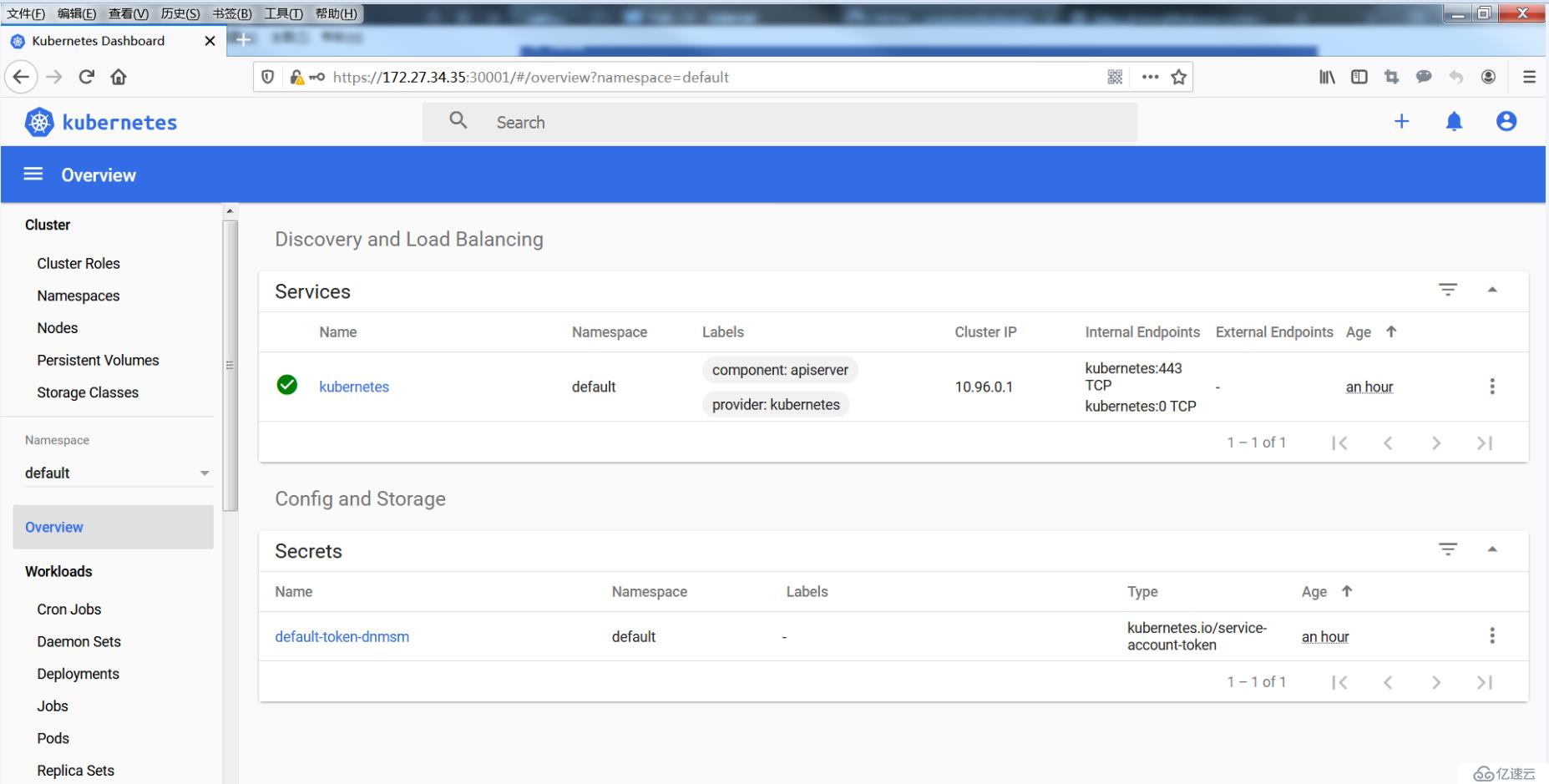

The home page after logging in

Switch to the kubernetes-dashboard namespace to view its resources.

The Dashboard covers cluster management, workloads, service discovery and load balancing, storage, configuration (ConfigMaps), log viewing and more.

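To enrich the Dashboard's statistics and charts, you can install the heapster component. For heapster in practice, see: "k8s practice (11): monitoring a kubernetes cluster with heapster+influxdb+grafana". Note that Dashboard v2 (the version deployed here) gathers metrics through its dashboard-metrics-scraper, which reads from metrics-server, so heapster may not feed it.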

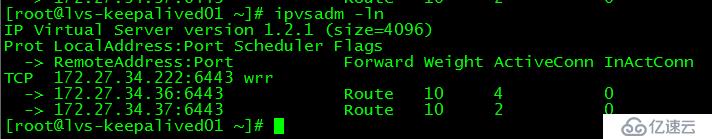

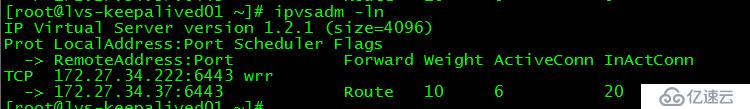
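For scripting, the login token shown by `kubectl describe secrets` can also be read directly. This is a sketch, assuming the Kubernetes 1.16 behavior in which the ServiceAccount gets an auto-generated token Secret listed under .secrets. dashboard_token is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Print a ServiceAccount's bearer token without scrolling through
# `kubectl describe secrets`.
dashboard_token() {
  local ns="${1:-kubernetes-dashboard}" sa="${2:-dashboard-admin}" secret
  # Name of the token Secret attached to the ServiceAccount
  secret="$(kubectl -n "$ns" get sa "$sa" -o jsonpath='{.secrets[0].name}')"
  # Secret data is base64-encoded; decode it to get the raw token
  kubectl -n "$ns" get secret "$secret" -o jsonpath='{.data.token}' | base64 -d
  echo
}

# Usage on the client node:
#   dashboard_token            # defaults: kubernetes-dashboard / dashboard-admin
```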

Use ipvsadm to see which nodes serve the apiserver, and the leader-election records to see which nodes run the scheduler and controller-manager:

On lvs-keepalived01, run ipvsadm to see which servers apiserver traffic is forwarded to

[root@lvs-keepalived01 ~]# ipvsadm -ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 172.27.34.222:6443 wrr

-> 172.27.34.35:6443 Route 10 2 0

-> 172.27.34.36:6443 Route 10 2 0

-> 172.27.34.37:6443 Route 10 2 0

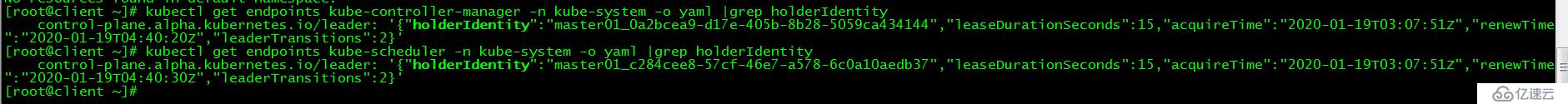

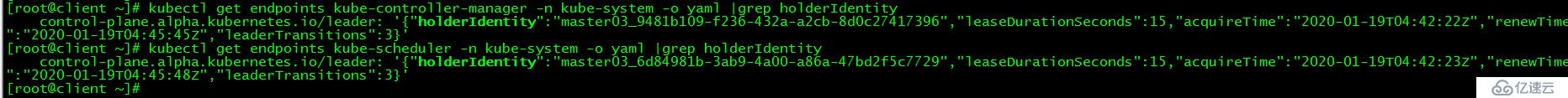
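To watch the backend pool during the failover tests that follow, you can extract the real-server lines from ipvsadm's output. The sketch below prints each real server with its ActiveConn count. ipvs_backends is a hypothetical helper, and the sample input is the output shown above with its columns re-aligned.

```shell
#!/usr/bin/env bash
# List real servers and their ActiveConn count from `ipvsadm -ln` output.
# Real-server lines start with "->" followed by IP:port; the header line
# "-> RemoteAddress:Port ..." is skipped because it doesn't start with a digit.
ipvs_backends() {
  awk '$1 == "->" && $2 ~ /^[0-9]/ { print $2, $5 }'
}

ipvs_backends <<'EOF'
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  172.27.34.222:6443 wrr
  -> 172.27.34.35:6443            Route   10     2          0
  -> 172.27.34.36:6443            Route   10     2          0
  -> 172.27.34.37:6443            Route   10     2          0
EOF
```

On the active director, run `ipvsadm -ln | ipvs_backends`. Once master01 is shut down, 172.27.34.35:6443 should drop out of the list.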

On the client node, check which nodes hold the controller-manager and scheduler leadership

[root@client ~]# kubectl get endpoints kube-controller-manager -n kube-system -o yaml |grep holderIdentity

control-plane.alpha.kubernetes.io/leader: '{"holderIdentity":"master01_0a2bcea9-d17e-405b-8b28-5059ca434144","leaseDurationSeconds":15,"acquireTime":"2020-01-19T03:07:51Z","renewTime":"2020-01-19T04:40:20Z","leaderTransitions":2}'

[root@client ~]# kubectl get endpoints kube-scheduler -n kube-system -o yaml |grep holderIdentity

control-plane.alpha.kubernetes.io/leader: '{"holderIdentity":"master01_c284cee8-57cf-46e7-a578-6c0a10aedb37","leaseDurationSeconds":15,"acquireTime":"2020-01-19T03:07:51Z","renewTime":"2020-01-19T04:40:30Z","leaderTransitions":2}'
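The holderIdentity value is the leader's hostname followed by "_" and a random ID. The sketch below extracts just the node name, so the leaders of both components can be compared at a glance. leader_node is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Extract the leader's node name from the control-plane.alpha.kubernetes.io/leader
# annotation: take the holderIdentity value and drop the "_<id>" suffix.
leader_node() {
  sed -n 's/.*"holderIdentity":"\([^"_]*\)_[^"]*".*/\1/p'
}

# Sample line from the controller-manager output above
echo "control-plane.alpha.kubernetes.io/leader: '{\"holderIdentity\":\"master01_0a2bcea9-d17e-405b-8b28-5059ca434144\",\"leaseDurationSeconds\":15}'" | leader_node
```

For example: `kubectl get endpoints kube-scheduler -n kube-system -o yaml | leader_node`.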

| Component | Node(s) |
|---|---|
| apiserver | master01, master02, master03 |
| controller-manager | master01 |
| scheduler | master01 |

Shut down master01 to simulate a failure

[root@master01 ~]# init 0

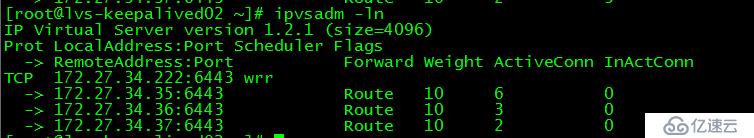

On lvs-keepalived01, check the connections to the apiserver nodes

[root@lvs-keepalived01 ~]# ipvsadm -ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 172.27.34.222:6443 wrr

-> 172.27.34.36:6443 Route 10 4 0

-> 172.27.34.37:6443 Route 10 2 0

master01's apiserver has been removed from the pool, so requests to 172.27.34.222:6443 are no longer scheduled to master01

On the client node, rerun the controller-manager and scheduler queries

[root@client ~]# kubectl get endpoints kube-controller-manager -n kube-system -o yaml |grep holderIdentity

control-plane.alpha.kubernetes.io/leader: '{"holderIdentity":"master03_9481b109-f236-432a-a2cb-8d0c27417396","leaseDurationSeconds":15,"acquireTime":"2020-01-19T04:42:22Z","renewTime":"2020-01-19T04:45:45Z","leaderTransitions":3}'

[root@client ~]# kubectl get endpoints kube-scheduler -n kube-system -o yaml |grep holderIdentity

control-plane.alpha.kubernetes.io/leader: '{"holderIdentity":"master03_6d84981b-3ab9-4a00-a86a-47bd2f5c7729","leaseDurationSeconds":15,"acquireTime":"2020-01-19T04:42:23Z","renewTime":"2020-01-19T04:45:48Z","leaderTransitions":3}'

[root@client ~]#

Both controller-manager and scheduler have failed over to master03

| Component | Node(s) |
|---|---|
| apiserver | master02, master03 |
| controller-manager | master03 |
| scheduler | master03 |

All functional tests are run on the client node.

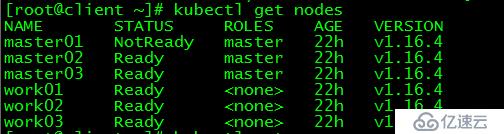

[root@client ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master01 NotReady master 22h v1.16.4

master02 Ready master 22h v1.16.4

master03 Ready master 22h v1.16.4

work01 Ready <none> 22h v1.16.4

work02 Ready <none> 22h v1.16.4

work03 Ready <none> 22h v1.16.4

master01 is NotReady

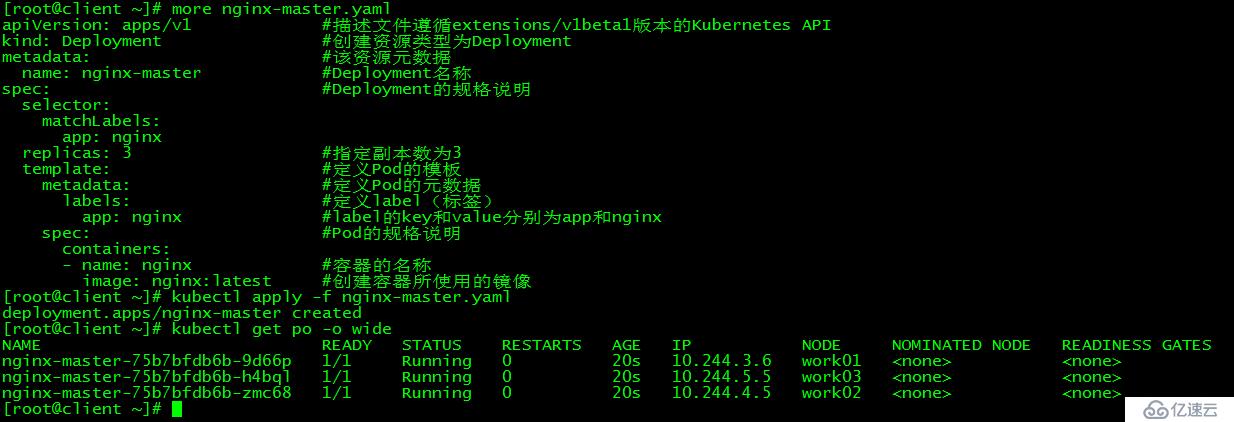

[root@client ~]# more nginx-master.yaml
apiVersion: apps/v1             #the manifest uses the apps/v1 Kubernetes API
kind: Deployment                #resource type: Deployment
metadata:                       #metadata of the resource
  name: nginx-master            #Deployment name
spec:                           #Deployment spec
  selector:
    matchLabels:
      app: nginx
  replicas: 3                   #3 replicas
  template:                     #Pod template
    metadata:                   #Pod metadata
      labels:                   #labels
        app: nginx              #label key app, value nginx
    spec:                       #Pod spec
      containers:
      - name: nginx             #container name
        image: nginx:latest     #image used to create the container

[root@client ~]# kubectl apply -f nginx-master.yaml

deployment.apps/nginx-master created

[root@client ~]# kubectl get po -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-master-75b7bfdb6b-9d66p 1/1 Running 0 20s 10.244.3.6 work01 <none> <none>

nginx-master-75b7bfdb6b-h5bql 1/1 Running 0 20s 10.244.5.5 work03 <none> <none>

nginx-master-75b7bfdb6b-zmc68 1/1 Running 0 20s 10.244.4.5 work02 <none> <none>

Create nginx pods to test whether the cluster can still serve requests.

In a k8s cluster with 3 control plane nodes, losing one control plane node does not affect any cluster functions.

With master01 still shut down, also shut down master02 and test whether the cluster can still serve requests.

[root@master02 ~]# init 0

[root@lvs-keepalived01 ~]# ipvsadm -ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 172.27.34.222:6443 wrr

-> 172.27.34.37:6443 Route 10 6 20

All cluster traffic now goes to master03

[root@client ~]# kubectl get nodes

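The connection to the server 172.27.34.222:6443 was refused - did you specify the right host or port?

In a k8s cluster with 3 control plane nodes, when two control plane nodes are down at the same time, the etcd cluster loses quorum and the whole k8s cluster can no longer serve requests.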

[root@client ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master01 Ready master 161m v1.16.4

master02 Ready master 144m v1.16.4

master03 Ready master 142m v1.16.4

work01 Ready <none> 137m v1.16.4

work02 Ready <none> 135m v1.16.4

work03 Ready <none> 134m v1.16.4

All nodes in the cluster are running normally

[root@lvs-keepalived01 ~]# ip a|grep 222

inet 172.27.34.222/32 scope global ens160

The VIP is on lvs-keepalived01

lvs-keepalived01:

[root@lvs-keepalived01 ~]# ipvsadm -ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 172.27.34.222:6443 wrr

-> 172.27.34.35:6443 Route 10 6 0

-> 172.27.34.36:6443 Route 10 0 0

-> 172.27.34.37:6443 Route 10 38 0

lvs-keepalived02:

[root@lvs-keepalived02 ~]# ipvsadm -ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 172.27.34.222:6443 wrr

-> 172.27.34.35:6443 Route 10 0 0

-> 172.27.34.36:6443 Route 10 0 0

-> 172.27.34.37:6443 Route 10 0 0

Shut down lvs-keepalived01 to simulate a failure

[root@lvs-keepalived01 ~]# init 0

[root@client ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master01 Ready master 166m v1.16.4

master02 Ready master 148m v1.16.4

master03 Ready master 146m v1.16.4

work01 Ready <none> 141m v1.16.4

work02 Ready <none> 139m v1.16.4

work03 Ready <none> 138m v1.16.4

All nodes in the cluster are running normally

[root@lvs-keepalived02 ~]# ip a|grep 222

inet 172.27.34.222/32 scope global ens160

The VIP has floated to lvs-keepalived02

lvs-keepalived02:

[root@lvs-keepalived02 ~]# ipvsadm -ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 172.27.34.222:6443 wrr

-> 172.27.34.35:6443 Route 10 1 0

-> 172.27.34.36:6443 Route 10 4 0

-> 172.27.34.37:6443 Route 10 1 0

[root@client ~]# kubectl delete -f nginx-master.yaml

deployment.apps "nginx-master" deleted

[root@client ~]# kubectl get po -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-master-75b7bfdb6b-9d66p 0/1 Terminating 0 20m 10.244.3.6 work01 <none> <none>

nginx-master-75b7bfdb6b-h5bql 0/1 Terminating 0 20m 10.244.5.5 work03 <none> <none>

nginx-master-75b7bfdb6b-zmc68 0/1 Terminating 0 20m 10.244.4.5 work02 <none> <none>

[root@client ~]# kubectl get po -o wide

No resources found in default namespace.

The nginx pods created earlier were deleted successfully.

When one machine in the lvs-keepalived cluster goes down, the k8s cluster is unaffected and keeps serving requests normally.


All scripts and configuration files in this article have been uploaded to GitHub: lvs-keepalived-install-k8s-HA-cluster


For deploying a single-master k8s cluster, see: "k8s practice (1): deploying a k8s (v1.14.2) cluster on CentOS 7.6"

For deploying an active/standby HA k8s cluster, see: "k8s practice (15): deploying a highly available k8s v1.16.4 cluster on CentOS 7.6 (active/standby mode)"

