
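This post shows how to build a Java web crawler with Spring Boot and WebMagic and store what it collects in MySQL, first through plain JDBC and then through Spring Boot. Hopefully you will get something out of it, so let's dive in!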

1. First, an introduction to WebMagic:

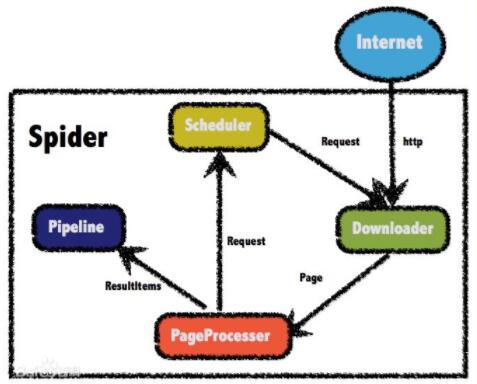

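WebMagic has a fully modular design that covers the whole crawler lifecycle (link extraction, page download, content extraction and persistence). It supports multi-threaded and distributed crawling, and also offers automatic retries and a custom User-Agent and cookies.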

Design concept:
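WebMagic organizes a crawl around four components, Downloader, PageProcessor, Scheduler and Pipeline, which a Spider wires together. To make that division of labour concrete, here is a toy, single-threaded model in plain Java. It is a sketch under simplifying assumptions, not WebMagic's implementation: `ToyCrawler` and its nested types are hypothetical stand-ins, the "scheduler" is just an in-memory queue plus a seen-set, and the "site" is a hard-coded map in which one page fails once so the retry path is exercised.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Deque;
import java.util.HashMap;
import java.util.HashSet;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Toy model of a modular crawler. Names mirror WebMagic's components,
// but these types are illustrative only and are NOT WebMagic's API.
public class ToyCrawler {

    /** Fetches raw content for a URL; throwing simulates a failed download. */
    interface Downloader { String download(String url) throws Exception; }

    /** One downloaded page plus what the processor extracted from it. */
    static final class Page {
        final String url;
        final String content;
        final Map<String, String> fields = new LinkedHashMap<>(); // extracted data
        final List<String> targetRequests = new ArrayList<>();   // newly found links
        Page(String url, String content) { this.url = url; this.content = content; }
    }

    /** Extraction logic: the part a user normally writes. */
    interface PageProcessor { void process(Page page); }

    /** Persistence, e.g. writing to a database. */
    interface Pipeline { void save(Page page); }

    private final Downloader downloader;
    private final PageProcessor processor;
    private final Pipeline pipeline;
    private final int retryTimes;

    ToyCrawler(Downloader downloader, PageProcessor processor, Pipeline pipeline, int retryTimes) {
        this.downloader = downloader;
        this.processor = processor;
        this.pipeline = pipeline;
        this.retryTimes = retryTimes;
    }

    /** Crawls from startUrl until the queue is empty; returns the number of pages processed. */
    int run(String startUrl) {
        Deque<String> queue = new ArrayDeque<>();       // scheduler: URLs waiting to be fetched
        Set<String> seen = new HashSet<>();              // scheduler: de-duplication
        Map<String, Integer> failures = new HashMap<>(); // failed attempts per URL
        queue.add(startUrl);
        seen.add(startUrl);
        int processed = 0;
        while (!queue.isEmpty()) {
            String url = queue.poll();
            String content;
            try {
                content = downloader.download(url);
            } catch (Exception e) {
                // re-queue until the URL has failed more than retryTimes times
                if (failures.merge(url, 1, Integer::sum) <= retryTimes) queue.add(url);
                continue;
            }
            Page page = new Page(url, content);
            processor.process(page);
            for (String link : page.targetRequests) {
                if (seen.add(link)) queue.add(link);     // enqueue only unseen URLs
            }
            if (!page.fields.isEmpty()) pipeline.save(page);
            processed++;
        }
        return processed;
    }

    /** Demo on a fake three-page site where "a2" times out on its first download. */
    static List<String> demo(int retryTimes) {
        Map<String, String> site = Map.of("list", "a1 a2", "a1", "First post", "a2", "Second post");
        Set<String> failedOnce = new HashSet<>();
        Downloader flaky = url -> {
            if (url.equals("a2") && failedOnce.add(url)) throw new Exception("timeout");
            String html = site.get(url);
            if (html == null) throw new Exception("404: " + url);
            return html;
        };
        PageProcessor processor = page -> {
            if (page.url.equals("list")) {
                page.targetRequests.addAll(Arrays.asList(page.content.split(" "))); // list page: follow links
            } else {
                page.fields.put("title", page.content);                             // article page: extract
            }
        };
        List<String> saved = new ArrayList<>();
        Pipeline pipeline = page -> saved.add(page.fields.get("title"));
        new ToyCrawler(flaky, processor, pipeline, retryTimes).run("list");
        return saved;
    }

    public static void main(String[] args) {
        System.out.println(demo(3)); // prints [First post, Second post]
        System.out.println(demo(0)); // prints [First post]
    }
}
```

Only the processor lambda knows anything about the "site"; the downloader, queue and pipeline can be swapped without touching it, which is the point of the modular design. In real WebMagic code you usually write just the `PageProcessor`, as the examples in this article do, and configure retries with `Site.setRetryTimes(...)`.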

Maven dependencies:

```xml
<dependency>
    <groupId>us.codecraft</groupId>
    <artifactId>webmagic-core</artifactId>
    <version>0.7.3</version>
</dependency>
<!-- webmagic-extension is declared only once; the exclusion drops the slf4j-log4j12
     binding so it does not clash with Spring Boot's default Logback logging -->
<dependency>
    <groupId>us.codecraft</groupId>
    <artifactId>webmagic-extension</artifactId>
    <version>0.7.3</version>
    <exclusions>
        <exclusion>
            <groupId>org.slf4j</groupId>
            <artifactId>slf4j-log4j12</artifactId>
        </exclusion>
    </exclusions>
</dependency>
```

JDBC approach:

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.PreparedStatement;
import java.sql.SQLException;

public class CsdnBlogDao {

    // user and password are masked; substitute your own
    private static final String URL = "jdbc:mysql://localhost:3306/test?"
            + "user=***&password=***3&useUnicode=true&characterEncoding=UTF8";

    public CsdnBlogDao() {
        try {
            // Connector/J 5.x driver class (Connector/J 8.x uses com.mysql.cj.jdbc.Driver);
            // with JDBC 4+ drivers this explicit load is optional
            Class.forName("com.mysql.jdbc.Driver");
        } catch (ClassNotFoundException e) {
            e.printStackTrace();
        }
    }

    /** Inserts one article; returns the number of affected rows, or -1 on error. */
    public int add(CsdnBlog csdnBlog) {
        String sql = "INSERT INTO `test`.`csdnblog` (`keyes`, `titles`, `content`, `dates`, `tags`, "
                + "`category`, `views`, `comments`, `copyright`) VALUES (?, ?, ?, ?, ?, ?, ?, ?, ?)";
        // try-with-resources closes the statement and connection even when the insert fails,
        // so calling add() once per crawled page does not leak connections
        try (Connection conn = DriverManager.getConnection(URL);
             PreparedStatement ps = conn.prepareStatement(sql)) {
            ps.setInt(1, csdnBlog.getKey());
            ps.setString(2, csdnBlog.getTitle());
            ps.setString(3, csdnBlog.getContent());
            ps.setString(4, csdnBlog.getDates());
            ps.setString(5, csdnBlog.getTags());
            ps.setString(6, csdnBlog.getCategory());
            ps.setInt(7, csdnBlog.getView());
            ps.setInt(8, csdnBlog.getComments());
            ps.setInt(9, csdnBlog.getCopyright());
            return ps.executeUpdate();
        } catch (SQLException e) {
            e.printStackTrace();
        }
        return -1;
    }
}
```

Entity class:

```java
public class CsdnBlog {

    private int key;          // article ID
    private String title;     // title
    private String dates;     // publish date
    private String tags;      // tags
    private String category;  // categories
    private int view;         // view count
    private int comments;     // comment count
    private int copyright;    // 1 = original post, 0 = not original
    private String content;   // article text

    public String getContent() { return content; }
    public void setContent(String content) { this.content = content; }

    public int getKey() { return key; }
    public void setKey(int key) { this.key = key; }

    public String getTitle() { return title; }
    public void setTitle(String title) { this.title = title; }

    public String getDates() { return dates; }
    public void setDates(String dates) { this.dates = dates; }

    public String getTags() { return tags; }
    public void setTags(String tags) { this.tags = tags; }

    public String getCategory() { return category; }
    public void setCategory(String category) { this.category = category; }

    public int getView() { return view; }
    public void setView(int view) { this.view = view; }

    public int getComments() { return comments; }
    public void setComments(int comments) { this.comments = comments; }

    public int getCopyright() { return copyright; }
    public void setCopyright(int copyright) { this.copyright = copyright; }

    @Override
    public String toString() {
        return "CsdnBlog [key=" + key + ", title=" + title + ", content=" + content + ", dates=" + dates
                + ", tags=" + tags + ", category=" + category + ", view=" + view
                + ", comments=" + comments + ", copyright=" + copyright + "]";
    }
}
```

Launcher class:

```java
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

import us.codecraft.webmagic.Page;
import us.codecraft.webmagic.Site;
import us.codecraft.webmagic.Spider;
import us.codecraft.webmagic.processor.PageProcessor;

public class CsdnBlogPageProcessor implements PageProcessor {

    private static String username = "CHENYUFENG1991"; // CSDN user whose blog is crawled

    // Number of articles crawled; AtomicInteger because the Spider calls process() from several threads
    private static final AtomicInteger size = new AtomicInteger();

    // Site settings such as encoding, delay between requests and retry count
    private Site site = Site.me().setRetryTimes(3).setSleepTime(1000);

    @Override
    public Site getSite() {
        return site;
    }

    // process() is the core hook for custom crawling logic; extraction goes here.
    // The URL patterns and XPaths follow CSDN's blog layout at the time of writing and may need updating.
    @Override
    public void process(Page page) {
        if (!page.getUrl().regex("http://blog\\.csdn\\.net/" + username + "/article/details/\\d+").match()) {
            // List page: queue every article page, taking links only from the article list;
            // replace() turns the matched relative paths into absolute URLs
            page.addTargetRequests(page.getHtml().xpath("//div[@id='article_list']").links()
                    .regex("/" + username + "/article/details/\\d+")
                    .replace("/" + username + "/", "http://blog.csdn.net/" + username + "/")
                    .all());
            // ...and the other list pages, taking links only from the pager
            page.addTargetRequests(page.getHtml().xpath("//div[@id='papelist']").links()
                    .regex("/" + username + "/article/list/\\d+")
                    .replace("/" + username + "/", "http://blog.csdn.net/" + username + "/")
                    .all());
        } else {
            // Article page
            size.incrementAndGet();
            CsdnBlog csdnBlog = new CsdnBlog(); // collects the fields to be stored in the database
            // ID: the numeric part of the article URL
            csdnBlog.setKey(Integer.parseInt(
                    page.getUrl().regex("http://blog\\.csdn\\.net/" + username + "/article/details/(\\d+)").get()));
            // Title
            csdnBlog.setTitle(
                    page.getHtml().xpath("//div[@class='article_title']//span[@class='link_title']/a/text()").get());
            // Body text
            csdnBlog.setContent(page.getHtml().xpath("//div[@class='article_content']/allText()").get());
            // Publish date
            csdnBlog.setDates(
                    page.getHtml().xpath("//div[@class='article_r']/span[@class='link_postdate']/text()").get());
            // Tags (possibly several, comma-separated)
            csdnBlog.setTags(listToString(page.getHtml()
                    .xpath("//div[@class='article_l']/span[@class='link_categories']/a/allText()").all()));
            // Categories (possibly several, comma-separated)
            csdnBlog.setCategory(listToString(page.getHtml()
                    .xpath("//div[@class='category_r']/label/span/text()").all()));
            // View count; CSDN renders it in simplified Chinese, e.g. "123人阅读" ("123 reads")
            csdnBlog.setView(Integer.parseInt(page.getHtml()
                    .xpath("//div[@class='article_r']/span[@class='link_view']")
                    .regex("(\\d+)人阅读").get()));
            // Comment count, from text such as "(5)"
            csdnBlog.setComments(Integer.parseInt(page.getHtml()
                    .xpath("//div[@class='article_r']/span[@class='link_comments']")
                    .regex("\\((\\d+)\\)").get()));
            // Original post? 1 if the page contains the "bog_copyright" marker, otherwise 0
            csdnBlog.setCopyright(page.getHtml().regex("bog_copyright").match() ? 1 : 0);
            // Save to the database, then echo to the console
            new CsdnBlogDao().add(csdnBlog);
            System.out.println(csdnBlog);
        }
    }

    // Joins a list into one comma-separated string
    public static String listToString(List<String> stringList) {
        return stringList == null ? null : String.join(",", stringList);
    }

    public static void main(String[] args) {
        System.out.println("[Crawler started]...");
        long startTime = System.currentTimeMillis();
        // Start from the user's blog home page with 5 threads; run() blocks until the crawl finishes
        Spider.create(new CsdnBlogPageProcessor()).addUrl("http://blog.csdn.net/" + username).thread(5).run();
        long endTime = System.currentTimeMillis();
        System.out.println("[Crawler finished] Crawled " + size.get() + " articles in about "
                + ((endTime - startTime) / 1000) + " s; results saved to the database.");
    }
}
```

Spring Boot + MySQL approach:

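```java
import java.util.List;

import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;
import org.springframework.context.ConfigurableApplicationContext;

import us.codecraft.webmagic.Page;
import us.codecraft.webmagic.Site;
import us.codecraft.webmagic.Spider;
import us.codecraft.webmagic.processor.PageProcessor;
import us.codecraft.webmagic.selector.Selectable;

// @SpringBootApplication lets SpringApplication.run() component-scan and create
// the service beans that main() looks up
@SpringBootApplication
public class GamePageProcessor implements PageProcessor {

    private static final Logger logger = LoggerFactory.getLogger(GamePageProcessor.class);

    // "網址" ("URL") is a placeholder; the real target address is not given
    private static final String TARGET_URL = "網址";

    // WebMagic instantiates the processor outside Spring, so beans are fetched from the context in main()
    private static DianJingService d;
    private static BannerService bs;
    private static SportService ss;
    private static YuLeNewsService ys;
    private static UpdateService ud;

    // Site settings such as encoding, delay between requests and retry count
    private Site site = Site.me().setRetryTimes(3).setSleepTime(1000);

    @Override
    public Site getSite() {
        return site;
    }

    public static void main(String[] args) {
        ConfigurableApplicationContext context = SpringApplication.run(GamePageProcessor.class, args);
        d = context.getBean(DianJingService.class);
        // Spider.create(new GamePageProcessor()).addUrl(TARGET_URL).thread(5).run();
    }

    // process() is the core hook for custom crawling logic; extraction goes here
    @Override
    public void process(Page page) {
        Selectable url = page.getUrl();
        if (url.toString().equals(TARGET_URL)) {
            // titles
            List<String> ls = page.getHtml().xpath("//div[@class='v']/div[@class='v-meta va']/div[@class='v-meta-title']/a/text()").all();
            // href of each video link
            List<String> ls1 = page.getHtml().xpath("//div[@class='v']/div[@class='v-link']/a/@href").all();
            // metadata text used as the date
            List<String> ls2 = page.getHtml().xpath("//div[@class='v']/div[@class='v-meta va']/div[@class='v-meta-entry']/div[@class='v-meta-data']/span[@class='r']/text()").all();
            // thumbnail image URLs
            List<String> ls3 = page.getHtml().xpath("//div[@class='v']/div[@class='v-thumb']/img/@src").all();
            // Take at most 5 items, never reading past the end of the shortest list
            int n = Math.min(5, Math.min(Math.min(ls.size(), ls1.size()), Math.min(ls2.size(), ls3.size())));
            for (int i = 0; i < n; i++) {
                DianJingVideo dv = new DianJingVideo(); // fresh object for each row
                dv.setTitles(ls.get(i));
                dv.setCategory("");
                dv.setDates(ls2.get(i));
                dv.setHrefs(ls1.get(i));
                dv.setPhoto(ls3.get(i));
                dv.setSources("");
                d.addVideo(dv);
            }
        }
    }
}
```

Controller: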

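```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RestController;

// @RestController = @Controller + @ResponseBody on every handler
@RestController
@RequestMapping("/dianjing")
public class DianJingController {

    @Autowired
    private DianJingService s;

    /*
     * Mobile games
     */
    @RequestMapping("/dianjing")
    public Map<String, Object> dianjing() {
        List<DianJing> list = s.find2();
        // Spring Boot serializes the map to JSON (Jackson by default)
        Map<String, Object> jo = new LinkedHashMap<>();
        if (list != null) {
            jo.put("code", 0);
            jo.put("success", true);
            jo.put("count", list.size());
            jo.put("list", list);
        }
        return jo;
    }
}
```

The entity classes are not shown here.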
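If you want the snippets to compile, a minimal `DianJing` entity could look like the sketch below. It is an assumption, not the author's class: the field names are inferred from the `#{...}` placeholders in the mapper's `@Insert` statement and from the setters `GamePageProcessor` calls, and every field is assumed to be a `String`.

```java
// Hypothetical sketch of the DianJing entity: field names follow the mapper's
// #{...} placeholders; the String types are an assumption.
public class DianJing {

    private String titles;   // title
    private String dates;    // date
    private String category; // category
    private String hrefs;    // link URL
    private String photo;    // thumbnail image URL
    private String sources;  // source

    // MyBatis resolves #{titles} and the other placeholders through these getters
    public String getTitles() { return titles; }
    public void setTitles(String titles) { this.titles = titles; }

    public String getDates() { return dates; }
    public void setDates(String dates) { this.dates = dates; }

    public String getCategory() { return category; }
    public void setCategory(String category) { this.category = category; }

    public String getHrefs() { return hrefs; }
    public void setHrefs(String hrefs) { this.hrefs = hrefs; }

    public String getPhoto() { return photo; }
    public void setPhoto(String photo) { this.photo = photo; }

    public String getSources() { return sources; }
    public void setSources(String sources) { this.sources = sources; }

    @Override
    public String toString() {
        return "DianJing [titles=" + titles + ", dates=" + dates + ", category=" + category
                + ", hrefs=" + hrefs + ", photo=" + photo + ", sources=" + sources + "]";
    }

    public static void main(String[] args) {
        DianJing dj = new DianJing();
        dj.setTitles("Sample title");
        dj.setHrefs("https://example.com/v/1");
        System.out.println(dj);
    }
}
```

A plain JavaBean like this is enough for MyBatis to bind the insert parameters and for Spring to serialize the controller's `list` to JSON.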

DAO layer (MyBatis mapper):

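```java
// Method declared in a MyBatis mapper interface (the interface itself is not shown)
@Insert("insert into dianjing (titles,dates,category,hrefs,photo,sources) values(#{titles},#{dates},#{category},#{hrefs},#{photo},#{sources})")
int adddj(DianJing dj);
```

Spring Boot is designed to simplify the initial setup and development of new Spring applications. In effect it is a framework for working with other frameworks: its main job is to cut down on configuration files by relying on conventions and auto-configuration.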

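Having read this far, you should have a working picture of how to build a Java crawler with Spring Boot and WebMagic that saves its results to MySQL, either through JDBC or through Spring Boot. To learn more, follow the 億速云 (Yisu Cloud) industry news channel. Thanks for reading!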
