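I. Basic Concepts

1. Overview

Kubernetes (often abbreviated "k8s") is Google's open-source container cluster management system. Its design goal is to provide a platform for automated deployment, scaling, and operation of application containers across clusters of hosts.

Kubernetes usually works together with the Docker container tool, tying together multiple hosts that run Docker containers into one cluster.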

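2. Features

a. Automated container deployment
b. Automatic scaling of containers up and down
c. Load balancing between containers
d. Fast updates and fast rollbacks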

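3. Components

3.1 Master node components

Four components mainly run on the master node: api-server, scheduler, controller-manager, and etcd.

APIServer: the APIServer exposes the RESTful Kubernetes API and is the single entry point for all management commands. Every create, read, update, or delete operation on a resource is handled by the APIServer before it is persisted to etcd.

scheduler: the scheduler has one clearly defined job, which is to place pods on suitable Nodes. Seen as a black box, its input is a pod plus a list of Nodes, and its output is a binding between the pod and one Node, meaning the pod is deployed onto that Node. Kubernetes ships with scheduling algorithms but also keeps the interface open, so users can define their own algorithms to fit their needs.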
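The black-box view of scheduling can be illustrated with a toy sketch. This is not the kube-scheduler algorithm: the free-CPU figures are invented sample data, and `pick_node` is a hypothetical helper that simply binds a pod to the node with the most free CPU.

```shell
#!/bin/sh
# Toy illustration only: input = a pod name plus a list of candidate nodes,
# output = one "pod -> node" binding. The resource figures are invented.

# Each line: <node-name> <free-cpu-millicores>
NODES="k8s-node1 500
k8s-node2 1500
k8s-node3 900"

# pick_node POD: print a binding to the node with the most free CPU.
pick_node() {
    pod="$1"
    best=$(printf '%s\n' "$NODES" | sort -k2,2nr | head -n 1 | cut -d' ' -f1)
    printf '%s -> %s\n' "$pod" "$best"
}

pick_node nginx-pod
```

A real scheduler filters and scores nodes on many more criteria; the sketch only shows the input/output shape described above.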

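controller-manager: if the APIServer does the "front office" work, the controller manager handles the "back office". Each resource generally has a corresponding controller, and the controller manager is responsible for running these controllers. For example, when we create a pod through the APIServer, the APIServer's job is done once the pod has been created. Keeping the pod's state in line with what we expect from then on is the controller manager's responsibility.

etcd: etcd is a highly available key-value store. Kubernetes uses it to store the state of every resource, and this stored state is what the RESTful API serves.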

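3.2 Node components

Each Node mainly consists of three modules: kubelet, kube-proxy, and the runtime.

runtime: the container runtime environment. Kubernetes currently supports two container runtimes, Docker and rkt.

kube-proxy: this module implements service discovery and reverse proxying in Kubernetes. For reverse proxying, kube-proxy forwards TCP and UDP connections and by default uses a Round Robin algorithm to distribute client traffic across the group of backend pods that belong to a service. For service discovery, kube-proxy watches service and endpoint objects through the API server (whose data lives in etcd) and maintains a mapping from each service to its endpoints, so that changes to backend pod IPs do not affect clients. kube-proxy also supports session affinity.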
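To make the Round Robin forwarding concrete, here is a toy sketch. It is not kube-proxy code: the endpoint addresses are invented sample values, and `next_endpoint` is a hypothetical helper.

```shell
#!/bin/sh
# Toy round-robin selection over a service's backend endpoints.
# Illustration only; the endpoint list is made-up sample data.

ENDPOINTS="172.18.95.2:80 172.18.22.3:80 172.18.54.4:80"
COUNTER=0

# next_endpoint: set CHOSEN to the next endpoint in rotation.
next_endpoint() {
    set -- $ENDPOINTS                # split the list into $1..$n
    shift $(( COUNTER % $# ))        # skip the endpoints already used this cycle
    CHOSEN="$1"
    COUNTER=$(( COUNTER + 1 ))
}

for request in 1 2 3 4; do
    next_endpoint
    echo "request $request -> $CHOSEN"
done
```

The fourth request wraps around to the first endpoint, which is the behaviour the paragraph above describes.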

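kubelet: the kubelet is the master's agent on each Node and the most important module on a Node. It maintains and manages all containers on that Node, except that containers not created through Kubernetes are not managed by it. In essence, it is responsible for making the running state of pods match the desired state.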

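3.3 Pod

A Pod is the smallest unit of scheduling in k8s. Each Pod runs one or more closely related application containers together with a Pause container, and the application containers share the Pause container's IP and Volumes. The Pause container rarely dies, so it serves as the Pod's root container and its state represents the state of the whole container group. Once a Pod is created it is stored in etcd, then scheduled by the master and bound to a Node, where that Node's kubelet instantiates it.

Each Pod is assigned its own Pod IP, and Pod IP + ContainerPort together form an Endpoint.

3.4 Service

A Service exposes an application. Pods have a life cycle and their own IP addresses, so as Pods are created and destroyed, applications must be able to keep track of these changes. This is where a Service comes in: a Service is a logical grouping of Pods, defined in YAML or JSON and selected by some policy. More importantly, the Pods' individual IPs are exposed to the network through the Service.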

II. Installation and Deployment

There are several ways to deploy Kubernetes; this article uses the binary method.

1. Environment

| Hostname | IP | Installed software | OS version |
| --- | --- | --- | --- |
| k8s-master | 192.168.248.65 | kube-apiserver,kube-controller-manager,kube-scheduler | Red Hat Enterprise Linux Server release 7.3 |
| k8s-node1 | 192.168.248.66 | etcd,kubelet,kube-proxy,flannel,docker | Red Hat Enterprise Linux Server release 7.3 |
| k8s-node2 | 192.168.248.67 | etcd,kubelet,kube-proxy,flannel,docker | Red Hat Enterprise Linux Server release 7.3 |
| k8s-node3 | 192.168.248.68 | etcd,kubelet,kube-proxy,flannel,docker | Red Hat Enterprise Linux Server release 7.3 |

Software versions and download links

Versions

kubernetes version v1.15.0

etcd version v3.3.10

flannel version v0.11.0

Download links

kubernetes release notes: https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG-1.15.md#v1150

server binaries: https://dl.k8s.io/v1.15.0/kubernetes-server-linux-amd64.tar.gz

node binaries: https://dl.k8s.io/v1.15.0/kubernetes-node-linux-amd64.tar.gz

etcd releases: https://github.com/etcd-io/etcd/releases

flannel releases: https://github.com/coreos/flannel/releases

2. Server initialization

Synchronize the system time

# ntpdate time1.aliyun.com
# echo "*/5 * * * * /usr/sbin/ntpdate -s time1.aliyun.com" > /var/spool/cron/root

Set the hostname (run the matching command on each host)

# hostnamectl --static set-hostname k8s-master
# hostnamectl --static set-hostname k8s-node1
# hostnamectl --static set-hostname k8s-node2
# hostnamectl --static set-hostname k8s-node3

Add hosts entries

[root@k8s-master ~]# cat /etc/hosts
192.168.248.65 k8s-master
192.168.248.66 k8s-node1
192.168.248.67 k8s-node2
192.168.248.68 k8s-node3
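One way to add these entries without duplicating them on repeated runs is sketched below. `HOSTS_FILE` and `add_host` are names chosen for this example; the sketch writes to a temporary file by default so it can be tried safely, and pointing it at /etc/hosts is left to the reader.

```shell
#!/bin/sh
# Idempotently append the cluster's host entries to a hosts file.
# HOSTS_FILE defaults to a scratch file (an assumption, for safe testing).
HOSTS_FILE="${HOSTS_FILE:-$(mktemp)}"

# add_host IP NAME: append "IP NAME" unless that exact line already exists.
add_host() {
    grep -qxF "$1 $2" "$HOSTS_FILE" || printf '%s %s\n' "$1" "$2" >> "$HOSTS_FILE"
}

for run in first second; do          # running twice must not duplicate lines
    add_host 192.168.248.65 k8s-master
    add_host 192.168.248.66 k8s-node1
    add_host 192.168.248.67 k8s-node2
    add_host 192.168.248.68 k8s-node3
done

cat "$HOSTS_FILE"
```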

Stop and disable firewalld and SELinux

# systemctl stop firewalld
# systemctl disable firewalld
# setenforce 0
# vim /etc/sysconfig/selinux
  SELINUX=disabled

Turn off swap

# swapoff -a && sysctl -w vm.swappiness=0
# sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab
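The effect of the sed expression can be checked on a copy before touching the real file. The sketch below runs it against an invented sample fstab in a temporary file; only the sed expression itself comes from the step above.

```shell
#!/bin/sh
# Try the swap-disabling sed expression on a sample fstab (invented content).
SAMPLE_FSTAB=$(mktemp)
cat > "$SAMPLE_FSTAB" <<'EOF'
/dev/mapper/rhel-root /     xfs  defaults 0 0
/dev/mapper/rhel-swap swap  swap defaults 0 0
EOF

# Comment out every line that contains " swap " (surrounded by spaces).
sed -i '/ swap / s/^\(.*\)$/#\1/g' "$SAMPLE_FSTAB"

cat "$SAMPLE_FSTAB"
```

Only the swap line gains a leading `#`; the root filesystem entry is left unchanged.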

Set kernel parameters

# cat /etc/sysctl.d/kubernetes.conf
net.ipv4.ip_forward=1
net.ipv4.tcp_tw_recycle=0
vm.swappiness=0
vm.overcommit_memory=1
vm.panic_on_oom=0
fs.inotify.max_user_watches=89100
fs.file-max=52706963
fs.nr_open=52706963
net.ipv6.conf.all.disable_ipv6=1

3. Installing the Kubernetes cluster

Install docker-ce on all node hosts

# wget -P /etc/yum.repos.d/ https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
# yum makecache
# yum install docker-ce-18.06.2.ce-3.el7 -y
# systemctl start docker && systemctl enable docker

Create the installation directories

# mkdir /data/{install,ssl_config} -pv
# mkdir /data/ssl_config/{etcd,kubernetes} -pv
# mkdir /cloud/k8s/etcd/{bin,cfg,ssl} -pv
# mkdir /cloud/k8s/kubernetes/{bin,cfg,ssl} -pv

Add environment variables

vim /etc/profile
######Kubernetes########
export PATH=$PATH:/cloud/k8s/etcd/bin/:/cloud/k8s/kubernetes/bin/

4. Creating the SSL certificates

Download the certificate generation tools

[root@k8s-master ~]# wget -P /usr/local/bin/ https://pkg.cfssl.org/R1.2/cfssl_linux-amd64
[root@k8s-master ~]# wget -P /usr/local/bin/ https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64
[root@k8s-master ~]# wget -P /usr/local/bin/ https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64
[root@k8s-master ~]# mv /usr/local/bin/cfssl_linux-amd64 /usr/local/bin/cfssl
[root@k8s-master ~]# mv /usr/local/bin/cfssljson_linux-amd64 /usr/local/bin/cfssljson
[root@k8s-master ~]# mv /usr/local/bin/cfssl-certinfo_linux-amd64 /usr/local/bin/cfssl-certinfo
[root@k8s-master ~]# chmod +x /usr/local/bin/*

Create the etcd certificates

# etcd CA signing configuration

[root@k8s-master etcd]# pwd
/data/ssl_config/etcd

[root@k8s-master etcd]# cat ca-config.json
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "www": {
        "expiry": "87600h",
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ]
      }
    }
  }
}

# etcd CA certificate signing request

[root@k8s-master etcd]# cat ca-csr.json
{
    "CN": "etcd CA",
    "key": {
        "algo": "rsa",
        "size": 2048
    },
    "names": [
        {
            "C": "CN",
            "L": "Beijing",
            "ST": "Beijing"
        }
    ]
}

# etcd server certificate signing request

[root@k8s-master etcd]# cat server-csr.json
{
    "CN": "etcd",
    "hosts": [
        "k8s-node3",
        "k8s-node2",
        "k8s-node1",
        "192.168.248.66",
        "192.168.248.67",
        "192.168.248.68"
    ],
    "key": {
        "algo": "rsa",
        "size": 2048
    },
    "names": [
        {
            "C": "CN",
            "L": "Beijing",
            "ST": "Beijing"
        }
    ]
}

# Generate the etcd CA certificate and private key, then the server certificate

# cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=www server-csr.json | cfssljson -bare server

Create the Kubernetes certificates

# Kubernetes CA signing configuration

[root@k8s-master kubernetes]# pwd
/data/ssl_config/kubernetes

[root@k8s-master kubernetes]# cat ca-config.json
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "kubernetes": {
        "expiry": "87600h",
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ]
      }
    }
  }
}

# Kubernetes CA certificate signing request

[root@k8s-master kubernetes]# cat ca-csr.json
{
    "CN": "kubernetes",
    "key": {
        "algo": "rsa",
        "size": 2048
    },
    "names": [
        {
            "C": "CN",
            "L": "Beijing",
            "ST": "Beijing",
            "O": "k8s",
            "OU": "System"
        }
    ]
}

# API server certificate signing request

[root@k8s-master kubernetes]# cat server-csr.json
{
    "CN": "kubernetes",
    "hosts": [
      "10.0.0.1",
      "127.0.0.1",
      "192.168.248.65",
      "k8s-master",
      "kubernetes",
      "kubernetes.default",
      "kubernetes.default.svc",
      "kubernetes.default.svc.cluster",
      "kubernetes.default.svc.cluster.local"
    ],
    "key": {
        "algo": "rsa",
        "size": 2048
    },
    "names": [
        {
            "C": "CN",
            "L": "Beijing",
            "ST": "Beijing",
            "O": "k8s",
            "OU": "System"
        }
    ]
}

# kube-proxy certificate signing request

[root@k8s-master kubernetes]# cat kube-proxy-csr.json
{
  "CN": "system:kube-proxy",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "Beijing",
      "ST": "Beijing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}

# Generate the CA certificate

# cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

# Generate the api-server certificate

# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server

# Generate the kube-proxy certificate

# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

5. Deploying the etcd cluster (on all node hosts)

Unpack and install the etcd package

# tar -xvf etcd-v3.3.10-linux-amd64.tar.gz

# cp etcd-v3.3.10-linux-amd64/{etcd,etcdctl} /cloud/k8s/etcd/bin/

Write the etcd configuration file (ETCD_NAME must be unique on each node and match that node's entry in ETCD_INITIAL_CLUSTER)

[root@k8s-node1 ~]# cat /cloud/k8s/etcd/cfg/etcd
#[Member]
ETCD_NAME="etcd01"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://192.168.248.66:2380"
ETCD_LISTEN_CLIENT_URLS="https://192.168.248.66:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.248.66:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://192.168.248.66:2379"
ETCD_INITIAL_CLUSTER="etcd01=https://192.168.248.66:2380,etcd02=https://192.168.248.67:2380,etcd03=https://192.168.248.68:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"

[root@k8s-node2 ~]# cat /cloud/k8s/etcd/cfg/etcd
#[Member]
ETCD_NAME="etcd02"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://192.168.248.67:2380"
ETCD_LISTEN_CLIENT_URLS="https://192.168.248.67:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.248.67:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://192.168.248.67:2379"
ETCD_INITIAL_CLUSTER="etcd01=https://192.168.248.66:2380,etcd02=https://192.168.248.67:2380,etcd03=https://192.168.248.68:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"

[root@k8s-node3 ~]# cat /cloud/k8s/etcd/cfg/etcd
#[Member]
ETCD_NAME="etcd03"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://192.168.248.68:2380"
ETCD_LISTEN_CLIENT_URLS="https://192.168.248.68:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.248.68:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://192.168.248.68:2379"
ETCD_INITIAL_CLUSTER="etcd01=https://192.168.248.66:2380,etcd02=https://192.168.248.67:2380,etcd03=https://192.168.248.68:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
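Because the per-node files differ only in the member name and IP address, they can be generated from one template. The following is a sketch under assumptions: `OUT_DIR` and `write_etcd_cfg` are hypothetical names, and the files are written to a scratch directory rather than /cloud/k8s/etcd/cfg/. Each member gets a distinct `ETCD_NAME` that matches its entry in `ETCD_INITIAL_CLUSTER`.

```shell
#!/bin/sh
# Generate one etcd environment file per member from a shared template.
# OUT_DIR is a scratch directory by default (an assumption of this sketch).
OUT_DIR="${OUT_DIR:-$(mktemp -d)}"
CLUSTER="etcd01=https://192.168.248.66:2380,etcd02=https://192.168.248.67:2380,etcd03=https://192.168.248.68:2380"

# write_etcd_cfg NAME IP: write $OUT_DIR/etcd.NAME for that member.
write_etcd_cfg() {
    name="$1"
    ip="$2"
    cat > "$OUT_DIR/etcd.$name" <<EOF
#[Member]
ETCD_NAME="$name"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://$ip:2380"
ETCD_LISTEN_CLIENT_URLS="https://$ip:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://$ip:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://$ip:2379"
ETCD_INITIAL_CLUSTER="$CLUSTER"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}

write_etcd_cfg etcd01 192.168.248.66
write_etcd_cfg etcd02 192.168.248.67
write_etcd_cfg etcd03 192.168.248.68

# Show the member name recorded in each generated file.
grep '^ETCD_NAME=' "$OUT_DIR"/etcd.*
```

Each generated file would then be copied to the matching node as /cloud/k8s/etcd/cfg/etcd.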

Create the etcd systemd unit

[root@k8s-node1 ~]# cat /usr/lib/systemd/system/etcd.service
[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
EnvironmentFile=/cloud/k8s/etcd/cfg/etcd
ExecStart=/cloud/k8s/etcd/bin/etcd \
--name=${ETCD_NAME} \
--data-dir=${ETCD_DATA_DIR} \
--listen-peer-urls=${ETCD_LISTEN_PEER_URLS} \
--listen-client-urls=${ETCD_LISTEN_CLIENT_URLS},http://127.0.0.1:2379 \
--advertise-client-urls=${ETCD_ADVERTISE_CLIENT_URLS} \
--initial-advertise-peer-urls=${ETCD_INITIAL_ADVERTISE_PEER_URLS} \
--initial-cluster=${ETCD_INITIAL_CLUSTER} \
--initial-cluster-token=${ETCD_INITIAL_CLUSTER_TOKEN} \
--initial-cluster-state=new \
--cert-file=/cloud/k8s/etcd/ssl/server.pem \
--key-file=/cloud/k8s/etcd/ssl/server-key.pem \
--peer-cert-file=/cloud/k8s/etcd/ssl/server.pem \
--peer-key-file=/cloud/k8s/etcd/ssl/server-key.pem \
--trusted-ca-file=/cloud/k8s/etcd/ssl/ca.pem \
--peer-trusted-ca-file=/cloud/k8s/etcd/ssl/ca.pem
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target

Copy the generated etcd certificates to all node hosts

[root@k8s-master etcd]# pwd
/data/ssl_config/etcd
[root@k8s-master etcd]# scp *.pem k8s-node1:/cloud/k8s/etcd/ssl/
[root@k8s-master etcd]# scp *.pem k8s-node2:/cloud/k8s/etcd/ssl/
[root@k8s-master etcd]# scp *.pem k8s-node3:/cloud/k8s/etcd/ssl/

Start the etcd cluster service

systemctl daemon-reload
systemctl enable etcd
systemctl start etcd

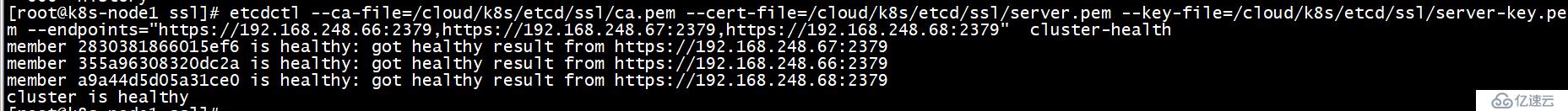

Check the cluster status (run on any one node)

[root@k8s-node1 ssl]# etcdctl --ca-file=/cloud/k8s/etcd/ssl/ca.pem --cert-file=/cloud/k8s/etcd/ssl/server.pem --key-file=/cloud/k8s/etcd/ssl/server-key.pem --endpoints="https://192.168.248.66:2379,https://192.168.248.67:2379,https://192.168.248.68:2379"  cluster-health
member 2830381866015ef6 is healthy: got healthy result from https://192.168.248.67:2379
member 355a96308320dc2a is healthy: got healthy result from https://192.168.248.66:2379
member a9a44d5d05a31ce0 is healthy: got healthy result from https://192.168.248.68:2379
cluster is healthy

6. Deploying the flannel network (all node hosts)

Write the pod network range into the etcd cluster (run on any one node)

etcdctl --ca-file=/cloud/k8s/etcd/ssl/ca.pem \
--cert-file=/cloud/k8s/etcd/ssl/server.pem \
--key-file=/cloud/k8s/etcd/ssl/server-key.pem  \
--endpoints="https://192.168.248.66:2379,https://192.168.248.67:2379,https://192.168.248.68:2379"  \
set /coreos.com/network/config '{ "Network": "172.18.0.0/16", "Backend": {"Type": "vxlan"}}'

Check the network range stored in the etcd cluster

# etcdctl --ca-file=/cloud/k8s/etcd/ssl/ca.pem \
--cert-file=/cloud/k8s/etcd/ssl/server.pem \
--key-file=/cloud/k8s/etcd/ssl/server-key.pem  \
--endpoints="https://192.168.248.66:2379,https://192.168.248.67:2379,https://192.168.248.68:2379"  \
get /coreos.com/network/config

[root@k8s-node1 ssl]# etcdctl --ca-file=/cloud/k8s/etcd/ssl/ca.pem \
> --cert-file=/cloud/k8s/etcd/ssl/server.pem \
> --key-file=/cloud/k8s/etcd/ssl/server-key.pem  \
> --endpoints="https://192.168.248.66:2379,https://192.168.248.67:2379,https://192.168.248.68:2379" \
> ls /coreos.com/network/subnets
/coreos.com/network/subnets/172.18.95.0-24
/coreos.com/network/subnets/172.18.22.0-24
/coreos.com/network/subnets/172.18.54.0-24

Unpack and install the flannel network plugin

# tar xf  flannel-v0.11.0-linux-amd64.tar.gz
# mv flanneld mk-docker-opts.sh /cloud/k8s/kubernetes/bin/

Configure flannel

[root@k8s-node1 cfg]# cat /cloud/k8s/kubernetes/cfg/flanneld
FLANNEL_OPTIONS="--etcd-endpoints=https://192.168.248.66:2379,https://192.168.248.67:2379,https://192.168.248.68:2379 -etcd-cafile=/cloud/k8s/etcd/ssl/ca.pem -etcd-certfile=/cloud/k8s/etcd/ssl/server.pem -etcd-keyfile=/cloud/k8s/etcd/ssl/server-key.pem"

Create the flanneld systemd unit

[root@k8s-node1 cfg]# cat /usr/lib/systemd/system/flanneld.service
[Unit]
Description=Flanneld overlay address etcd agent
After=network-online.target network.target
Before=docker.service

[Service]
Type=notify
EnvironmentFile=/cloud/k8s/kubernetes/cfg/flanneld
ExecStart=/cloud/k8s/kubernetes/bin/flanneld --ip-masq $FLANNEL_OPTIONS
ExecStartPost=/cloud/k8s/kubernetes/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/subnet.env
Restart=on-failure

[Install]
WantedBy=multi-user.target

Configure Docker to start with the flannel-assigned subnet

[root@k8s-node1 cfg]# cat /usr/lib/systemd/system/docker.service
[Unit]
Description=Docker Application Container Engine
Documentation=https://docs.docker.com
After=network-online.target firewalld.service
Wants=network-online.target

[Service]
Type=notify
EnvironmentFile=/run/flannel/subnet.env
# the default is not to use systemd for cgroups because the delegate issues still
# exists and systemd currently does not support the cgroup feature set required
# for containers run by docker
ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS
ExecReload=/bin/kill -s HUP $MAINPID
# Having non-zero Limit*s causes performance problems due to accounting overhead
# in the kernel. We recommend using cgroups to do container-local accounting.
LimitNOFILE=infinity
LimitNPROC=infinity
LimitCORE=infinity
# Uncomment TasksMax if your systemd version supports it.
# Only systemd 226 and above support this version.
#TasksMax=infinity
TimeoutStartSec=0
# set delegate yes so that systemd does not reset the cgroups of docker containers
Delegate=yes
# kill only the docker process, not all processes in the cgroup
KillMode=process
# restart the docker process if it exits prematurely
Restart=on-failure
StartLimitBurst=3
StartLimitInterval=60s

[Install]
WantedBy=multi-user.target
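The link between the two units is the environment file: flanneld records its subnet lease, mk-docker-opts.sh turns it into `DOCKER_NETWORK_OPTIONS`, and dockerd picks that variable up. The sketch below only illustrates the idea and is not the real mk-docker-opts.sh; the sample lease values are invented and `docker_opts_from` is a hypothetical helper.

```shell
#!/bin/sh
# Illustration: derive dockerd network flags from a flannel lease file.
# The lease content below is sample data, not taken from a real node.
SAMPLE_ENV=$(mktemp)
cat > "$SAMPLE_ENV" <<'EOF'
FLANNEL_NETWORK=172.18.0.0/16
FLANNEL_SUBNET=172.18.95.1/24
FLANNEL_MTU=1450
FLANNEL_IPMASQ=true
EOF

# docker_opts_from FILE: print bridge IP and MTU flags for dockerd.
docker_opts_from() {
    # Source the lease file in a subshell so its variables do not leak.
    (
        . "$1"
        printf '%s\n' "--bip=$FLANNEL_SUBNET --mtu=$FLANNEL_MTU"
    )
}

docker_opts_from "$SAMPLE_ENV"
```

With options of this kind, docker0 on each node takes an address from that node's flannel subnet, which is what the connectivity check between nodes relies on.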

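Start the services

systemctl daemon-reload
systemctl start flanneld
systemctl enable flanneld
systemctl restart docker

Verify the flannel network configuration

From each node, ping the docker0 interface IP address of the other nodes. If the pings succeed, the flanneld network plugin has been deployed successfully.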

7. Deploying the master components

Unpack the master package

# tar xf kubernetes-server-linux-amd64.tar.gz

# cp kubernetes/server/bin/{kube-scheduler,kube-apiserver,kube-controller-manager,kubectl}  /cloud/k8s/kubernetes/bin/

Install the Kubernetes certificates

# cp /data/ssl_config/kubernetes/*.pem /cloud/k8s/kubernetes/ssl/

Deploy the kube-apiserver component

Create the TLS bootstrapping token

[root@k8s-master cfg]# head -c 16 /dev/urandom | od -An -t x | tr -d ' '   # generate a random string
[root@k8s-master cfg]# pwd
/cloud/k8s/kubernetes/cfg
[root@k8s-master cfg]# cat token.csv
a081e7ba91d597006cbdacfa8ee114ac,kubelet-bootstrap,10001,"system:kubelet-bootstrap"
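The two steps (generate a token, then record it in token.csv) can be combined as sketched here. `TOKEN_FILE` is an assumption of this example and defaults to a scratch file; the column layout (token, user, uid, group) follows the file shown above.

```shell
#!/bin/sh
# Generate a bootstrap token and write it in token.csv format.
# TOKEN_FILE defaults to a scratch file (an assumption, for safe testing).
TOKEN_FILE="${TOKEN_FILE:-$(mktemp)}"

# 16 random bytes rendered as 32 hexadecimal characters.
BOOTSTRAP_TOKEN=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' \n')

# Columns: token, user name, user id, group.
printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' \
    "$BOOTSTRAP_TOKEN" > "$TOKEN_FILE"

cat "$TOKEN_FILE"
```

The same token value has to be used later as BOOTSTRAP_TOKEN when the kubeconfig files are generated.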

apiserver configuration file

[root@k8s-master cfg]# cat kube-apiserver
KUBE_APISERVER_OPTS="--logtostderr=true \
--v=4 \
--etcd-servers=https://192.168.248.66:2379,https://192.168.248.67:2379,https://192.168.248.68:2379 \
--bind-address=192.168.248.65 \
--secure-port=6443 \
--advertise-address=192.168.248.65 \
--allow-privileged=true \
--service-cluster-ip-range=10.0.0.0/24 \
--admission-control=NamespaceLifecycle,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota,NodeRestriction \
--authorization-mode=RBAC,Node \
--enable-bootstrap-token-auth \
--token-auth-file=/cloud/k8s/kubernetes/cfg/token.csv \
--service-node-port-range=30000-50000 \
--tls-cert-file=/cloud/k8s/kubernetes/ssl/server.pem  \
--tls-private-key-file=/cloud/k8s/kubernetes/ssl/server-key.pem \
--client-ca-file=/cloud/k8s/kubernetes/ssl/ca.pem \
--service-account-key-file=/cloud/k8s/kubernetes/ssl/ca-key.pem \
--etcd-cafile=/cloud/k8s/etcd/ssl/ca.pem \
--etcd-certfile=/cloud/k8s/etcd/ssl/server.pem \
--etcd-keyfile=/cloud/k8s/etcd/ssl/server-key.pem"

kube-apiserver systemd unit

[root@k8s-master cfg]# cat /usr/lib/systemd/system/kube-apiserver.service
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/cloud/k8s/kubernetes/cfg/kube-apiserver
ExecStart=/cloud/k8s/kubernetes/bin/kube-apiserver $KUBE_APISERVER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target

Start the kube-apiserver service

[root@k8s-master cfg]# systemctl daemon-reload
[root@k8s-master cfg]# systemctl enable kube-apiserver
[root@k8s-master cfg]# systemctl start kube-apiserver
[root@k8s-master cfg]# ps -ef |grep kube-apiserver
root       1050      1  4 09:02          00:25:21 /cloud/k8s/kubernetes/bin/kube-apiserver --logtostderr=true --v=4 --etcd-servers=https://192.168.248.66:2379,https://192.168.248.67:2379,https://192.168.248.68:2379 --bind-address=192.168.248.65 --secure-port=6443 --advertise-address=192.168.248.65 --allow-privileged=true --service-cluster-ip-range=10.0.0.0/24 --admission-control=NamespaceLifecycle,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota,NodeRestriction --authorization-mode=RBAC,Node --enable-bootstrap-token-auth --token-auth-file=/cloud/k8s/kubernetes/cfg/token.csv --service-node-port-range=30000-50000 --tls-cert-file=/cloud/k8s/kubernetes/ssl/server.pem --tls-private-key-file=/cloud/k8s/kubernetes/ssl/server-key.pem --client-ca-file=/cloud/k8s/kubernetes/ssl/ca.pem --service-account-key-file=/cloud/k8s/kubernetes/ssl/ca-key.pem --etcd-cafile=/cloud/k8s/etcd/ssl/ca.pem --etcd-certfile=/cloud/k8s/etcd/ssl/server.pem --etcd-keyfile=/cloud/k8s/etcd/ssl/server-key.pem
root       1888   1083  0 18:15 pts/0    00:00:00 grep --color=auto kube-apiserve

Deploy the kube-scheduler component

Create the kube-scheduler configuration file

[root@k8s-master cfg]# cat /cloud/k8s/kubernetes/cfg/kube-scheduler
KUBE_SCHEDULER_OPTS="--logtostderr=true --v=4 --master=127.0.0.1:8080 --leader-elect"

Create the kube-scheduler systemd unit

[root@k8s-master cfg]# cat /usr/lib/systemd/system/kube-scheduler.service
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/cloud/k8s/kubernetes/cfg/kube-scheduler
ExecStart=/cloud/k8s/kubernetes/bin/kube-scheduler $KUBE_SCHEDULER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target

Start the kube-scheduler service

[root@k8s-master cfg]# systemctl daemon-reload
[root@k8s-master cfg]# systemctl enable kube-scheduler.service
[root@k8s-master cfg]# systemctl start kube-scheduler.service
[root@k8s-master cfg]# ps -ef |grep kube-scheduler
root       1716      1  0 16:12          00:00:19 /cloud/k8s/kubernetes/bin/kube-scheduler --logtostderr=true --v=4 --master=127.0.0.1:8080 --leader-elect
root       1897   1083  0 18:21 pts/0    00:00:00 grep --color=auto kube-scheduler

Deploy the kube-controller-manager component

Create the kube-controller-manager configuration file

[root@k8s-master cfg]# cat /cloud/k8s/kubernetes/cfg/kube-controller-manager
KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=true \
--v=4 \
--master=127.0.0.1:8080 \
--leader-elect=true \
--address=127.0.0.1 \
--service-cluster-ip-range=10.0.0.0/24 \
--cluster-name=kubernetes \
--cluster-signing-cert-file=/cloud/k8s/kubernetes/ssl/ca.pem \
--cluster-signing-key-file=/cloud/k8s/kubernetes/ssl/ca-key.pem  \
--root-ca-file=/cloud/k8s/kubernetes/ssl/ca.pem \
--service-account-private-key-file=/cloud/k8s/kubernetes/ssl/ca-key.pem"

Create the kube-controller-manager systemd unit

[root@k8s-master cfg]# cat /usr/lib/systemd/system/kube-controller-manager.service
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=/cloud/k8s/kubernetes/cfg/kube-controller-manager
ExecStart=/cloud/k8s/kubernetes/bin/kube-controller-manager $KUBE_CONTROLLER_MANAGER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target

Start the kube-controller-manager service

[root@k8s-master cfg]# systemctl daemon-reload
[root@k8s-master cfg]# systemctl enable kube-controller-manager
[root@k8s-master cfg]# systemctl start kube-controller-manager
[root@k8s-master cfg]# ps -ef |grep kube-controller-manager
root       1709      1  2 16:12          00:03:11 /cloud/k8s/kubernetes/bin/kube-controller-manager --logtostderr=true --v=4 --master=127.0.0.1:8080 --leader-elect=true --address=127.0.0.1 --service-cluster-ip-range=10.0.0.0/24 --cluster-name=kubernetes --cluster-signing-cert-file=/cloud/k8s/kubernetes/ssl/ca.pem --cluster-signing-key-file=/cloud/k8s/kubernetes/ssl/ca-key.pem --root-ca-file=/cloud/k8s/kubernetes/ssl/ca.pem --service-account-private-key-file=/cloud/k8s/kubernetes/ssl/ca-key.pem
root       1907   1083  0 18:29 pts/0    00:00:00 grep --color=auto kube-controller-manager

Check the cluster status

[root@k8s-master cfg]# kubectl get cs
NAME                 STATUS    MESSAGE             ERROR
controller-manager   Healthy   ok
scheduler            Healthy   ok
etcd-0               Healthy   {"health":"true"}
etcd-2               Healthy   {"health":"true"}
etcd-1               Healthy   {"health":"true"}

8. Deploying the node components (on all node hosts)

Unpack the node package

[root@k8s-node1 install]# tar xf kubernetes-node-linux-amd64.tar.gz

[root@k8s-node1 install]# cp kubernetes/node/bin/{kubelet,kube-proxy} /cloud/k8s/kubernetes/bin/

Create the kubelet bootstrap.kubeconfig file

[root@k8s-master kubernetes]# cat environment.sh
# Create the kubelet bootstrapping kubeconfig
BOOTSTRAP_TOKEN=a081e7ba91d597006cbdacfa8ee114ac
KUBE_APISERVER="https://192.168.248.65:6443"

# Set the cluster parameters
kubectl config set-cluster kubernetes \
  --certificate-authority=./ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=bootstrap.kubeconfig

# Set the client credentials
kubectl config set-credentials kubelet-bootstrap \
  --token=${BOOTSTRAP_TOKEN} \
  --kubeconfig=bootstrap.kubeconfig

# Set the context parameters
kubectl config set-context default \
  --cluster=kubernetes \
  --user=kubelet-bootstrap \
  --kubeconfig=bootstrap.kubeconfig

# Select the default context
kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

# Run environment.sh to generate bootstrap.kubeconfig

Create the kubelet.kubeconfig file

[root@k8s-master kubernetes]# cat envkubelet.kubeconfig.sh
# Create the kubelet kubeconfig
BOOTSTRAP_TOKEN=a081e7ba91d597006cbdacfa8ee114ac
KUBE_APISERVER="https://192.168.248.65:6443"

# Set the cluster parameters
kubectl config set-cluster kubernetes \
  --certificate-authority=./ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=kubelet.kubeconfig

# Set the client credentials
kubectl config set-credentials kubelet \
  --token=${BOOTSTRAP_TOKEN} \
  --kubeconfig=kubelet.kubeconfig

# Set the context parameters
kubectl config set-context default \
  --cluster=kubernetes \
  --user=kubelet \
  --kubeconfig=kubelet.kubeconfig

# Select the default context
kubectl config use-context default --kubeconfig=kubelet.kubeconfig

# Run envkubelet.kubeconfig.sh to generate kubelet.kubeconfig

Create the kube-proxy.kubeconfig file

[root@k8s-master kubernetes]# cat env_proxy.sh
# Create the kube-proxy kubeconfig file
BOOTSTRAP_TOKEN=a081e7ba91d597006cbdacfa8ee114ac
KUBE_APISERVER="https://192.168.248.65:6443"

kubectl config set-cluster kubernetes \
  --certificate-authority=./ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials kube-proxy \
  --client-certificate=./kube-proxy.pem \
  --client-key=./kube-proxy-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-proxy.kubeconfig

kubectl config set-context default \
  --cluster=kubernetes \
  --user=kube-proxy \
  --kubeconfig=kube-proxy.kubeconfig

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

# Run env_proxy.sh to generate kube-proxy.kubeconfig

Copy the generated kubeconfig files to all node hosts

[root@k8s-master kubernetes]# scp bootstrap.kubeconfig kubelet.kubeconfig kube-proxy.kubeconfig k8s-node1:/cloud/k8s/kubernetes/cfg/
[root@k8s-master kubernetes]# scp bootstrap.kubeconfig kubelet.kubeconfig kube-proxy.kubeconfig k8s-node2:/cloud/k8s/kubernetes/cfg/
[root@k8s-master kubernetes]# scp bootstrap.kubeconfig kubelet.kubeconfig kube-proxy.kubeconfig k8s-node3:/cloud/k8s/kubernetes/cfg/

Create the kubelet parameter template file on every node

[root@k8s-node1 cfg]# cat kubelet.config
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: 192.168.248.66
port: 10250
readOnlyPort: 10255
cgroupDriver: cgroupfs
clusterDNS: ["10.0.0.2"]
clusterDomain: cluster.local.
failSwapOn: false
authentication:
  anonymous:
    enabled: true

[root@k8s-node2 cfg]# cat kubelet.config
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: 192.168.248.67
port: 10250
readOnlyPort: 10255
cgroupDriver: cgroupfs
clusterDNS: ["10.0.0.2"]
clusterDomain: cluster.local.
failSwapOn: false
authentication:
  anonymous:
    enabled: true

[root@k8s-node3 cfg]# cat kubelet.config
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: 192.168.248.68
port: 10250
readOnlyPort: 10255
cgroupDriver: cgroupfs
clusterDNS: ["10.0.0.2"]
clusterDomain: cluster.local.
failSwapOn: false
authentication:
  anonymous:
    enabled: true

Create the kubelet configuration file

[root@k8s-node1 cfg]# cat /cloud/k8s/kubernetes/cfg/kubelet
KUBELET_OPTS="--logtostderr=true \
--v=4 \
--hostname-override=k8s-node1 \
--kubeconfig=/cloud/k8s/kubernetes/cfg/kubelet.kubeconfig \
--bootstrap-kubeconfig=/cloud/k8s/kubernetes/cfg/bootstrap.kubeconfig \
--config=/cloud/k8s/kubernetes/cfg/kubelet.config \
--cert-dir=/cloud/k8s/kubernetes/ssl \
--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0"

[root@k8s-node2 cfg]# cat /cloud/k8s/kubernetes/cfg/kubelet
KUBELET_OPTS="--logtostderr=true \
--v=4 \
--hostname-override=k8s-node2 \
--kubeconfig=/cloud/k8s/kubernetes/cfg/kubelet.kubeconfig \
--bootstrap-kubeconfig=/cloud/k8s/kubernetes/cfg/bootstrap.kubeconfig \
--config=/cloud/k8s/kubernetes/cfg/kubelet.config \
--cert-dir=/cloud/k8s/kubernetes/ssl \
--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0"

[root@k8s-node3 cfg]# cat /cloud/k8s/kubernetes/cfg/kubelet
KUBELET_OPTS="--logtostderr=true \
--v=4 \
--hostname-override=k8s-node3 \
--kubeconfig=/cloud/k8s/kubernetes/cfg/kubelet.kubeconfig \
--bootstrap-kubeconfig=/cloud/k8s/kubernetes/cfg/bootstrap.kubeconfig \
--config=/cloud/k8s/kubernetes/cfg/kubelet.config \
--cert-dir=/cloud/k8s/kubernetes/ssl \
--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0"

Create the kubelet systemd unit

[root@k8s-node1 cfg]# cat /usr/lib/systemd/system/kubelet.service
[Unit]
Description=Kubernetes Kubelet
After=docker.service
Requires=docker.service

[Service]
EnvironmentFile=/cloud/k8s/kubernetes/cfg/kubelet
ExecStart=/cloud/k8s/kubernetes/bin/kubelet $KUBELET_OPTS
Restart=on-failure
KillMode=process

[Install]
WantedBy=multi-user.target

Bind the kubelet-bootstrap user to the system cluster role (without this binding the kubelet will fail to start)

kubectl create clusterrolebinding kubelet-bootstrap \
  --clusterrole=system:node-bootstrapper \
  --user=kubelet-bootstrap

Start the kubelet service (all node hosts)

[root@k8s-node1 cfg]# systemctl daemon-reload
[root@k8s-node1 cfg]# systemctl enable kubelet
[root@k8s-node1 cfg]# systemctl start kubelet
[root@k8s-node1 cfg]# ps -ef |grep kubelet
root       3306      1  2 09:02          00:14:47 /cloud/k8s/kubernetes/bin/kubelet --logtostderr=true --v=4 --hostname-override=k8s-node1 --kubeconfig=/cloud/k8s/kubernetes/cfg/kubelet.kubeconfig --bootstrap-kubeconfig=/cloud/k8s/kubernetes/cfg/bootstrap.kubeconfig --config=/cloud/k8s/kubernetes/cfg/kubelet.config --cert-dir=/cloud/k8s/kubernetes/ssl --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0
root      87181  12020  0 19:22 pts/0    00:00:00 grep --color=auto kubelet

Approve the kubelet CSR requests on the master node

kubectl get csr
kubectl certificate approve $NAME

The request is complete once the CSR status changes to Approved,Issued.
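When several nodes register at once, picking out the pending requests by hand gets tedious. The sketch below filters the names of pending requests from `kubectl get csr` style output. The sample listing is invented for illustration and `pending_csrs` is a hypothetical helper; it assumes the condition is the last column of each line.

```shell
#!/bin/sh
# pending_csrs: read header-less `kubectl get csr` style lines on stdin and
# print the NAME column of every request whose last column is "Pending".
pending_csrs() {
    awk '$NF == "Pending" { print $1 }'
}

# Invented sample listing (columns: NAME AGE REQUESTOR CONDITION).
SAMPLE='node-csr-aaa 2m kubelet-bootstrap Pending
node-csr-bbb 5m kubelet-bootstrap Approved,Issued
node-csr-ccc 1m kubelet-bootstrap Pending'

printf '%s\n' "$SAMPLE" | pending_csrs
```

Against a live cluster the same filter could be used as `kubectl get csr --no-headers | pending_csrs | xargs -n 1 kubectl certificate approve`, after reviewing the list it prints.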

Check the cluster status and the nodes

[root@k8s-master kubernetes]# kubectl  get cs,node
NAME                                 STATUS    MESSAGE             ERROR
componentstatus/controller-manager   Healthy   ok
componentstatus/scheduler            Healthy   ok
componentstatus/etcd-2               Healthy   {"health":"true"}
componentstatus/etcd-0               Healthy   {"health":"true"}
componentstatus/etcd-1               Healthy   {"health":"true"}

NAME             STATUS   ROLES    AGE    VERSION
node/k8s-node1   Ready    <none>   4d2h   v1.15.0
node/k8s-node2   Ready    <none>   4d2h   v1.15.0
node/k8s-node3   Ready    <none>   4d2h   v1.15.0

Deploy the kube-proxy component on the nodes

[root@k8s-node1 cfg]# cat /cloud/k8s/kubernetes/cfg/kube-proxy
KUBE_PROXY_OPTS="--logtostderr=true \
--v=4 \
--hostname-override=k8s-node1 \
--cluster-cidr=10.0.0.0/24 \
--kubeconfig=/cloud/k8s/kubernetes/cfg/kube-proxy.kubeconfig"

Create the kube-proxy systemd unit

[root@k8s-node1 cfg]# cat /usr/lib/systemd/system/kube-proxy.service
[Unit]
Description=Kubernetes Proxy
After=network.target

[Service]
EnvironmentFile=/cloud/k8s/kubernetes/cfg/kube-proxy
ExecStart=/cloud/k8s/kubernetes/bin/kube-proxy $KUBE_PROXY_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target

Start the kube-proxy service

[root@k8s-node1 cfg]# systemctl daemon-reload
[root@k8s-node1 cfg]# systemctl enable kube-proxy
[root@k8s-node1 cfg]# systemctl start kube-proxy
[root@k8s-node1 cfg]# ps -ef |grep kube-proxy
root        966      1  0 09:02          00:01:20 /cloud/k8s/kubernetes/bin/kube-proxy --logtostderr=true --v=4 --hostname-override=k8s-node1 --cluster-cidr=10.0.0.0/24 --kubeconfig=/cloud/k8s/kubernetes/cfg/kube-proxy.kubeconfig
root      87093  12020  0 19:22 pts/0    00:00:00 grep --color=auto kube-proxy

Deploy the CoreDNS component

[root@k8s-master?~]#?cat?coredns.yaml?

#?Warning:?This?is?a?file?generated?from?the?base?underscore?template?file:?coredns.yaml.base

apiVersion:?v1

kind:?ServiceAccount

metadata:

??name:?coredns

??namespace:?kube-system

??labels:

??????kubernetes.io/cluster-service:?"true"

??????addonmanager.kubernetes.io/mode:?Reconcile

---

apiVersion:?rbac.authorization.k8s.io/v1

kind:?ClusterRole

metadata:

??labels:

????kubernetes.io/bootstrapping:?rbac-defaults

????addonmanager.kubernetes.io/mode:?Reconcile

??name:?system:coredns

rules:

-?apiGroups:

??-?""

??resources:

??-?endpoints

??-?services

??-?pods

??-?namespaces

??verbs:

??-?list

??-?watch

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
    addonmanager.kubernetes.io/mode: EnsureExists
  name: system:coredns
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:coredns
subjects:
- kind: ServiceAccount
  name: coredns
  namespace: kube-system
---

apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns
  namespace: kube-system
  labels:
      addonmanager.kubernetes.io/mode: EnsureExists
data:
  Corefile: |
    .:53 {
        errors
        health
        kubernetes cluster.local in-addr.arpa ip6.arpa {
            pods insecure
            upstream
            fallthrough in-addr.arpa ip6.arpa
        }
        prometheus :9153
        proxy . /etc/resolv.conf
        cache 30
        loop
        reload
        loadbalance
    }
---

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "CoreDNS"
spec:
  # replicas: not specified here:
  # 1. In order to make Addon Manager do not reconcile this replicas parameter.
  # 2. Default is 1.
  # 3. Will be tuned in real time if DNS horizontal auto-scaling is turned on.
  replicas: 3
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
  selector:
    matchLabels:
      k8s-app: kube-dns
  template:
    metadata:
      labels:
        k8s-app: kube-dns
      annotations:
        seccomp.security.alpha.kubernetes.io/pod: 'docker/default'
    spec:
      serviceAccountName: coredns
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
        - key: "CriticalAddonsOnly"
          operator: "Exists"
      containers:
      - name: coredns
        image: coredns/coredns:1.3.1
        imagePullPolicy: IfNotPresent
        resources:
          limits:
            memory: 170Mi
          requests:
            cpu: 100m
            memory: 70Mi
        args: [ "-conf", "/etc/coredns/Corefile" ]
        volumeMounts:
        - name: config-volume
          mountPath: /etc/coredns
          readOnly: true
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        - containerPort: 9153
          name: metrics
          protocol: TCP
        livenessProbe:
          httpGet:
            path: /health
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        securityContext:
          allowPrivilegeEscalation: false
          capabilities:
            add:
            - NET_BIND_SERVICE
            drop:
            - all
          readOnlyRootFilesystem: true
      dnsPolicy: Default
      volumes:
        - name: config-volume
          configMap:
            name: coredns
            items:
            - key: Corefile
              path: Corefile
---

apiVersion: v1
kind: Service
metadata:
  name: kube-dns
  namespace: kube-system
  annotations:
    prometheus.io/port: "9153"
    prometheus.io/scrape: "true"
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "CoreDNS"
spec:
  selector:
    k8s-app: kube-dns
  clusterIP: 10.0.0.2
  ports:
  - name: dns
    port: 53
    protocol: UDP
  - name: dns-tcp
    port: 53
    protocol: TCP

[root@k8s-master ~]# kubectl apply -f coredns.yaml
serviceaccount/coredns unchanged
clusterrole.rbac.authorization.k8s.io/system:coredns unchanged
clusterrolebinding.rbac.authorization.k8s.io/system:coredns unchanged
configmap/coredns unchanged
deployment.extensions/coredns unchanged
service/kube-dns unchanged

[root@k8s-master ~]# kubectl get deployment -n kube-system
NAME      READY   UP-TO-DATE   AVAILABLE   AGE
coredns   3/3     3            3           33h

[root@k8s-master ~]# kubectl get deployment -n kube-system -o wide
NAME      READY   UP-TO-DATE   AVAILABLE   AGE   CONTAINERS   IMAGES                  SELECTOR
coredns   3/3     3            3           33h   coredns      coredns/coredns:1.3.1   k8s-app=kube-dns

[root@k8s-master ~]# kubectl get pod -n kube-system -o wide
NAME                      READY   STATUS    RESTARTS   AGE   IP            NODE        NOMINATED NODE   READINESS GATES
coredns-b49c586cf-nwzv6   1/1     Running   1          33h   172.18.54.3   k8s-node3   <none>           <none>
coredns-b49c586cf-qv5b9   1/1     Running   1          33h   172.18.22.3   k8s-node1   <none>           <none>
coredns-b49c586cf-rcqhc   1/1     Running   1          33h   172.18.95.2   k8s-node2   <none>           <none>

[root@k8s-master ~]# kubectl get svc -n kube-system -o wide
NAME       TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)         AGE   SELECTOR
kube-dns   ClusterIP   10.0.0.2     <none>        53/UDP,53/TCP   33h   k8s-app=kube-dns
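The READY column above (3/3) is what shows that every CoreDNS replica came up. A minimal sketch of turning that visual check into a scriptable one is given below; deployment_ready is a hypothetical helper defined here, and it only parses the default `kubectl get deployment` table layout shown in this article.

```shell
# Hypothetical helper: read `kubectl get deployment` output on stdin and
# succeed only if the deployment named $1 is listed with all replicas
# ready (READY column like 3/3, with at least one replica).
deployment_ready() {
    awk -v name="$1" '
        $1 == name {
            split($2, r, "/")          # READY column: ready/desired
            found = 1
            ok = (r[1] == r[2] && r[2] > 0)
        }
        END { exit !(found && ok) }
    '
}

# Example with the table from the article.
printf '%s\n' \
    'NAME      READY   UP-TO-DATE   AVAILABLE   AGE' \
    'coredns   3/3     3            3           33h' \
    | deployment_ready coredns && echo "coredns is fully ready"

# A further check, assuming a busybox image can be pulled on the nodes,
# is an in-cluster lookup from a throwaway pod:
#   kubectl run dns-test -it --rm --restart=Never --image=busybox:1.28 -- nslookup kubernetes.default
```

Against a live cluster the input would be `kubectl get deployment -n kube-system` piped into deployment_ready coredns. The lookup in the final comment should be answered by 10.0.0.2, the clusterIP of the kube-dns Service, provided the kubelets are configured to hand that address to pods as their DNS server.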

This completes the bare-bones deployment of Kubernetes v1.15.0.
