您好,登錄后才能下訂單哦!

您好,登錄后才能下訂單哦!

SVM算法(Support Vector Machine,支持向量機)的核心思想有2點:1、如果數據線性可分,那么基于最大間隔的方式來確定超平面,以確保全局最優,使得分類器盡可能健壯;2、如果數據線性不可分,通過核函數將低維樣本轉化為高維樣本使其線性可分。注意和AdaBoost類似,SVM只能解決二分類問題。

SVM的算法在數學上實在是太復雜了,沒研究明白。建議還是直接使用現成的第三方組件吧,比如libsvm的C#版本,推薦這個:http://www.matthewajohnson.org/software/svm.html。

雖然沒研究明白,不過這幾天照著Python版本的代碼試著用C#改寫了一下,算是研究SVM過程中唯一的收獲吧。此版本基于SMO(序列最小優化)算法求解,核函數使用的是比較常用的徑向基函數(RBF)。別問我為什么沒有注釋,我只是從Python移植過來的,我也沒看懂,等我看懂了再來補注釋吧。

using System;

using System.Collections.Generic;

using System.Linq;

namespace MachineLearning

{

/// <summary>

/// 支持向量機(SMO算法,RBF核)

/// </summary>

public class SVM

{

private Random m_Rand;

private double[][] m_Kernel;

private double[] m_Alpha;

private double m_C = 1.0;

private double m_B = 0.0;

private double m_Toler = 0.0;

private double[][] m_Cache;

private double[][] m_Data;

private double m_Reach;

private int[] m_Label;

private int m_Count;

private int m_Dimension;

public SVM()

{

m_Rand = new Random();

}

/// <summary>

/// 訓練

/// </summary>

/// <param name="trainingSet"></param>

/// <param name="C"></param>

/// <param name="toler"></param>

/// <param name="reach"></param>

/// <param name="iterateCount"></param>

public void Train(List<DataVector<double, int>> trainingSet, double C, double toler, double reach, int iterateCount = 10)

{

//初始化

m_Count = trainingSet.Count;

m_Dimension = trainingSet[0].Dimension;

m_Toler = toler;

m_C = C;

m_Reach = reach;

this.Init(trainingSet);

this.InitKernel();

int iter = 0;

int alphaChanged = 0;

bool entireSet = true;

while(iter < iterateCount && (alphaChanged > 0 || entireSet))

{

alphaChanged = 0;

if(entireSet)

{

for(int i = 0;i < m_Count;++i)

alphaChanged += InnerL(i);

iter++;

}

else

{

for(int i = 0;i < m_Count;++i)

{

if(m_Alpha[i] > 0 && m_Alpha[i] < m_C)

alphaChanged += InnerL(i);

}

iter += 1;

}

if(entireSet)

entireSet = false;

else if(alphaChanged == 0)

entireSet = true;

}

}

/// <summary>

/// 分類

/// </summary>

/// <param name="vector"></param>

/// <returns></returns>

public int Classify(DataVector<double, int> vector)

{

double predict = 0.0;

int svCnt = m_Alpha.Count(a => a > 0);

var supportVectors = new double[svCnt][];

var supportLabels = new int[svCnt];

var supportAlphas = new double[svCnt];

int index = 0;

for(int i = 0;i < m_Count;++i)

{

if(m_Alpha[i] > 0)

{

supportVectors[index] = m_Data[i];

supportLabels[index] = m_Label[i];

supportAlphas[index] = m_Alpha[i];

index++;

}

}

var kernelEval = KernelTrans(supportVectors, vector.Data);

for(int i = 0;i < svCnt;++i)

predict += kernelEval[i] * supportAlphas[i] * supportLabels[i];

predict += m_B;

return Math.Sign(predict);

}

/// <summary>

/// 將原始數據轉化成方便使用的形式

/// </summary>

/// <param name="trainingSet"></param>

private void Init(List<DataVector<double, int>> trainingSet)

{

m_Data = new double[m_Count][];

m_Label = new int[m_Count];

m_Alpha = new double[m_Count];

m_Cache = new double[m_Count][];

for(int i = 0;i < m_Count;++i)

{

m_Label[i] = trainingSet[i].Label;

m_Alpha[i] = 0.0;

m_Cache[i] = new double[2];

m_Cache[i][0] = 0.0;

m_Cache[i][1] = 0.0;

m_Data[i] = new double[m_Dimension];

for(int j = 0;j < m_Dimension;++j)

m_Data[i][j] = trainingSet[i].Data[j];

}

}

/// <summary>

/// 初始化RBF核

/// </summary>

private void InitKernel()

{

m_Kernel = new double[m_Count][];

for(int i = 0;i < m_Count;++i)

{

m_Kernel[i] = new double[m_Count];

var kernels = KernelTrans(m_Data, m_Data[i]);

for(int k = 0;k < kernels.Length;++k)

m_Kernel[i][k] = kernels[k];

}

}

private double[] KernelTrans(double[][] X, double[] A)

{

var kernel = new double[X.Length];

for(int i = 0;i < X.Length;++i)

{

double delta = 0.0;

for(int k = 0;k < X[0].Length;++k)

delta += Math.Pow(X[i][k] - A[k], 2);

kernel[i] = Math.Exp(delta * -1.0 / Math.Pow(m_Reach, 2));

}

return kernel;

}

private double E(int k)

{

double x = 0.0;

for(int i = 0;i < m_Count;++i)

x += m_Alpha[i] * m_Label[i] * m_Kernel[i][k];

x += m_B;

return x - m_Label[k];

}

private void UpdateE(int k)

{

double Ek = E(k);

m_Cache[k][0] = 1.0;

m_Cache[k][1] = Ek;

}

private int InnerL(int i)

{

double Ei = E(i);

if((m_Label[i] * Ei < -m_Toler && m_Alpha[i] < m_C) || (m_Label[i] * Ei > m_Toler && m_Alpha[i] > 0))

{

double Ej = 0.0;

int j = SelectJ(i, Ei, out Ej);

double oldAi = m_Alpha[i];

double oldAj = m_Alpha[j];

double H, L;

if(m_Label[i] != m_Label[j])

{

L = Math.Max(0, m_Alpha[j] - m_Alpha[i]);

H = Math.Min(m_C, m_C + m_Alpha[j] - m_Alpha[i]);

}

else

{

L = Math.Max(0, m_Alpha[j] + m_Alpha[i] - m_C);

H = Math.Min(m_C, m_Alpha[j] + m_Alpha[i]);

}

if(L == H)

return 0;

double eta = 2.0 * m_Kernel[i][j] - m_Kernel[i][i] - m_Kernel[j][j];

if(eta >= 0)

return 0;

m_Alpha[j] -= m_Label[j] * (Ei - Ej) / eta;

m_Alpha[j] = ClipAlpha(m_Alpha[j], H, L);

UpdateE(j);

if(Math.Abs(m_Alpha[j] - oldAj) < 0.00001)

return 0;

m_Alpha[i] += m_Label[j] * m_Label[i] * (oldAj - m_Alpha[j]);

UpdateE(i);

double b1 = m_B - Ei - m_Label[i] * (m_Alpha[i] - oldAi) * m_Kernel[i][i] - m_Label[j] * (m_Alpha[j] - oldAj) * m_Kernel[i][j];

double b2 = m_B - Ej - m_Label[i] * (m_Alpha[i] - oldAi) * m_Kernel[i][j] - m_Label[j] * (m_Alpha[j] - oldAj) * m_Kernel[j][j];

if(m_Alpha[i] > 0 && m_Alpha[i] < m_C)

m_B = b1;

else if(m_Alpha[j] > 0 && m_Alpha[j] < m_C)

m_B = b2;

else

m_B = (b1 + b2) / 2.0;

return 1;

}

return 0;

}

private int SelectJ(int i, double Ei, out double Ej)

{

Ej = 0.0;

int j = 0;

int maxK = -1;

double maxDeltaE = 0.0;

m_Cache[i][0] = 1;

m_Cache[i][1] = Ei;

for(int k = 0;k < m_Count;++k)

{

if(k == i || m_Cache[k][0] == 0)

continue;

double Ek = E(k);

double deltaE = Math.Abs(Ei - Ek);

if(deltaE > maxDeltaE)

{

maxK = k;

maxDeltaE = deltaE;

Ej = Ek;

}

}

if(maxK >= 0)

{

j = maxK;

}

else

{

j = RandomSelect(i);

}

return j;

}

private int RandomSelect(int i)

{

int j = 0;

do

{

j = m_Rand.Next(0, m_Count);

}

while(j == i);

return j;

}

private double ClipAlpha(double alpha, double H, double L)

{

return alpha > H ? H : (alpha < L ? L : alpha);

}

}

}最后上測試,還是使用上次的breast-cancer-wisconsin.txt做測試,之前用kNN和AdaBoost測試的錯誤率分別是2.02%和1.01%,這回用SVM對比一下。上測試代碼:

public void TestSvm()

{

var trainingSet = new List<DataVector<double, int>>();

var testSet = new List<DataVector<double, int>>();

//讀取數據

var file = new StreamReader("breast-cancer-wisconsin.txt", Encoding.Default);

for(int i = 0;i < 699;++i)

{

string line = file.ReadLine();

var parts = line.Split(',');

var p = new DataVector<double, int>(9);

for(int j = 0;j < p.Dimension;++j)

{

if(parts[j + 1] == "?")

parts[j + 1] = "0";

p.Data[j] = Convert.ToDouble(parts[j + 1]);

}

p.Label = Convert.ToInt32(parts[10]) == 2 ? 1 : -1;

//和上次一樣,600個做訓練,99個做測試

if(i < 600)

trainingSet.Add(p);

else

testSet.Add(p);

}

file.Close();

//檢驗

var svm = new SVM();

svm.Train(trainingSet, 1, 0.01, 3.0, 10);

int error = 0;

foreach(var p in testSet)

{

var label = boost.Classify(p);

if(label != p.Label)

error++;

}

Console.WriteLine("Error = {0}/{1}, {2}%", error, testSet.Count, (error * 100.0 / testSet.Count));

}最終結果是99個測試樣本猜錯1個,錯誤率1.01%,和AdaBoost相當。

Train時使用不同的參數,錯誤率會變化,很可惜的是參數的選擇往往沒有固定的方法,需要在一定范圍內嘗試以得到最小錯誤率。

另外對于為什么核函數可以處理線性不可分數據,網上有2張圖很能說明問題,轉載一下:

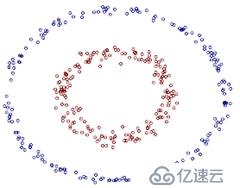

以下數據明顯是線性不可分的,在二維空間下找不到一條直線能將數據分開:

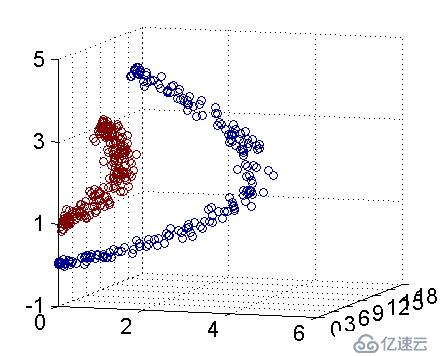

但在在二維空間下的線性不可分,到了三維空間,是可以找到一個平面來分隔數據的:

免責聲明:本站發布的內容(圖片、視頻和文字)以原創、轉載和分享為主,文章觀點不代表本網站立場,如果涉及侵權請聯系站長郵箱:is@yisu.com進行舉報,并提供相關證據,一經查實,將立刻刪除涉嫌侵權內容。